Healthcare enterprises globally are working with NVIDIA to drive AI-accelerated solutions that are detecting diseases earlier from medical images, delivering critical insights to care teams and revolutionizing drug discovery workflows.

NVIDIA Clara, a suite of software and services that powers AI healthcare solutions, is enabling this transformation industry-wide. The Clara ecosystem includes BioNeMo for drug discovery, Holoscan for medical devices, Parabricks for genomics and MONAI for medical imaging.

Using NVIDIA Clara, healthcare researchers and companies have recently achieved milestones including generating blueprints for two novel proteins with BioNeMo, conducting a first-of-its-kind surgery with Holoscan, and deploying MONAI-powered solutions in radiology departments.

BioNeMo Enables Generative AI for Drug Discovery

Traditional drug discovery is a time- and resource-intensive process. Many drugs take more than a decade to reach the market, and the average success rate for a drug candidate is just 10%. Generative AI, which makes use of large language models, can help raise the chances of success while reducing time and cost.

Just as the large language models behind services like ChatGPT can generate text, generative AI models trained on biomolecular data can generate blueprints for new molecules and proteins, a critical step in drug discovery.

NVIDIA BioNeMo is a cloud service for generative AI in biology, offering a variety of AI models for small molecules and proteins. With BioNeMo, pharmaceutical research and industry professionals can use generative AI to accelerate the identification and optimization of new drug candidates.

Startup Evozyne used NVIDIA BioNeMo for AI protein identification to engineer new proteins with enhanced functionality. A joint paper describes two engineered proteins: one that could potentially be used to treat disease and another designed for carbon consumption.

Deloitte is using the ESM and OpenFold AI models in BioNeMo for its AI drug discovery platform, which performs 3D protein structure prediction, model rank classification and druggable region prediction.

NVIDIA Inception member Innophore uses BioNeMo with its product Cavitomix, a tool that allows users to analyze protein cavities from any input structure. OpenFold, a PyTorch-based AI model, is accelerated up to 6x in BioNeMo, enabling much faster 3D protein structure prediction from linear amino acid sequences.

Holoscan Powers Real-Time AI in Medical Devices

Millions of medical devices are used every day across hospitals to enable robot-assisted surgery, radiation therapy, CT scans and more. NVIDIA Holoscan — a scalable, software-defined AI computing platform for processing real-time data at the edge — accelerates these devices to deliver the low-latency inference required for AI in a clinical setting.

In a landmark step, doctors at Belgium-based surgical training center ORSI Academy brought NVIDIA Holoscan into the operating room to support real-world, robot-assisted surgery for the first time.

At Onze-Lieve-Vrouw Hospital, urologists trained at ORSI successfully removed a patient’s kidney using Intuitive’s da Vinci robotic-assisted surgical system, with the help of an augmented reality overlay of the patient’s anatomy from a CT scan, rendered in real time and AI-augmented with Holoscan. The video feed overlay allowed the surgeon to clearly view vascular and tissue structures that might otherwise have been obstructed by the surgical instruments used during the procedure.

Parabricks Accelerates Genomics for Precision Medicine

Accelerating genomic sequencing, the process of determining the genetic makeup of a specific organism or cell type, is critical to unlocking the full potential of precision medicine.

NVIDIA Parabricks is a suite of AI-accelerated genomic analysis applications that enhances the speed and accuracy of the entire sequencing process, from gathering genetic data to analyzing and reporting it. A whole genome can be analyzed in 16 minutes, compared with about 24 hours on a CPU, which means a single server can analyze around 32,000 genomes in a year.
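The yearly throughput figure follows from simple arithmetic, which the sketch below reproduces. It assumes a server that runs one genome at a time, back to back, with no idle time or failures over a 365-day year; the function names are illustrative and are not part of Parabricks.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def genomes_per_year(minutes_per_genome: float) -> int:
    """Whole genomes finished in a year of uninterrupted, sequential runs (assumption)."""
    return int(MINUTES_PER_YEAR // minutes_per_genome)


def speedup(baseline_minutes: float, accelerated_minutes: float) -> float:
    """Ratio of baseline runtime to accelerated runtime."""
    return baseline_minutes / accelerated_minutes


gpu_minutes = 16        # per-genome time quoted in the article
cpu_minutes = 24 * 60   # "about 24 hours" on CPU, expressed in minutes

print(genomes_per_year(gpu_minutes))      # 32850
print(genomes_per_year(cpu_minutes))      # 365
print(speedup(cpu_minutes, gpu_minutes))  # 90.0
```

Under these idealized assumptions the result is 32,850 genomes per year, consistent with the "around 32,000" figure, against roughly 365 per year at 24 hours per genome. Real-world throughput would depend on scheduling, I/O and downtime.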

Accessible from either the genomics instrument itself or through cloud services, Parabricks allows for flexible, scalable and efficient genomics analysis that can lead to more accurate diagnoses and tailored treatments.

Form Bio recently integrated NVIDIA Parabricks into its computational life sciences platform, resulting in a 52% reduction in overall costs and a speedup of more than 80x, enabling life sciences professionals to accelerate whole genome sequence analysis.

PacBio began shipping its Revio system, a long-read sequencer designed to deliver accurate, complete genomes at high throughput. With on-board NVIDIA GPUs, Revio has 20x more computing power than prior PacBio systems. The compute handles the increased scale and runs advanced AI models for basecalling and methylation analysis. For spatial biology workflows, NanoString is using NVIDIA technology in its CosMx instrument to power 5-20x faster cell segmentation.

MONAI Helps to Build and Deploy Medical AI

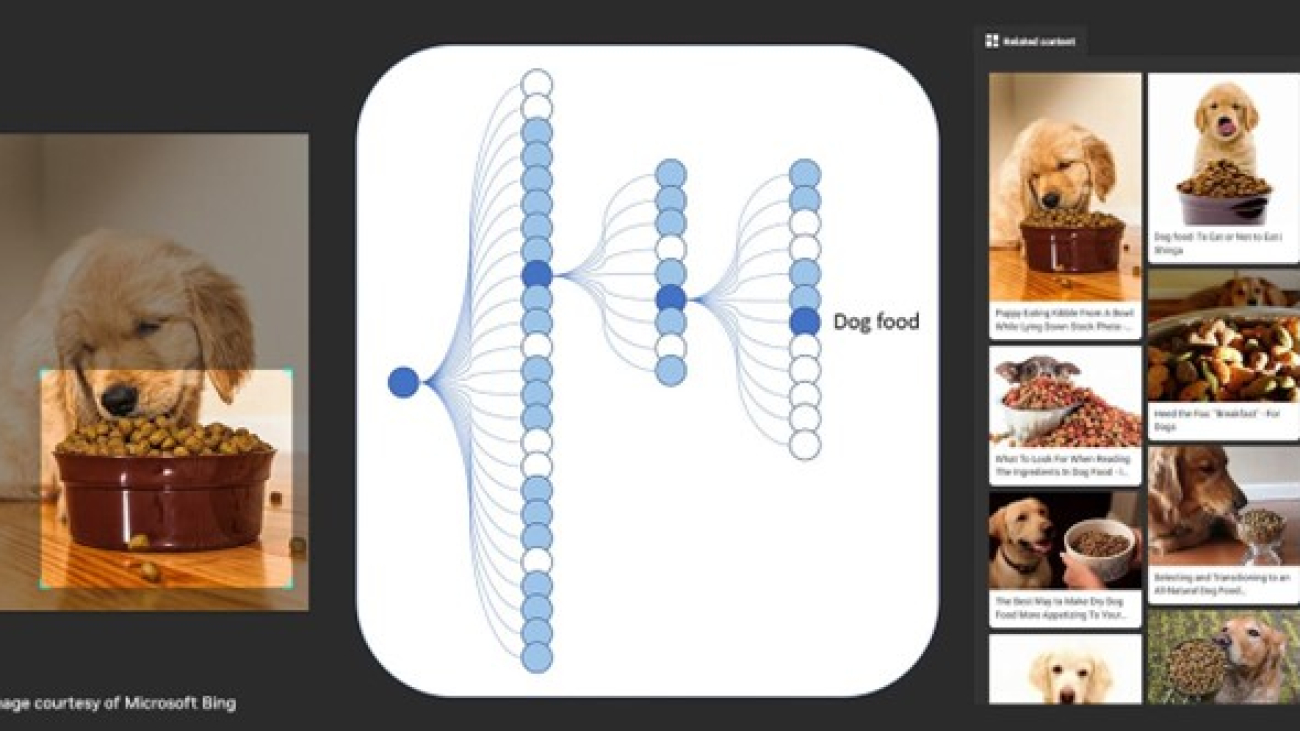

Accurate, detailed processing of medical images is crucial for precise diagnosis. MONAI, a medical imaging AI framework accelerated by NVIDIA, simplifies the creation of healthcare AI applications that can label and analyze medical images.

MONAI recently surpassed 1 million downloads, solidifying its position as an industry-standard tool for healthcare AI developers. MONAI Application Packages (MAPs) streamline the deployment of AI models created with the framework as applications that integrate with healthcare workflows and medical software ecosystems.

Biomedical research data platform Flywheel is incorporating MONAI in its offerings. In collaboration with the University of Wisconsin Radiology Department, Flywheel has used MONAI to develop a model-based image classifier that predicts and labels the body regions present in medical images. The AI application cuts data preparation time from as much as eight months to just one day.

MLOps platform Weights & Biases is bringing MONAI to Cincinnati Children’s Hospital, providing AI researchers there with a full suite of tools to train and tune computer vision algorithms for AI-assisted object detection to aid diagnosis.

AI Available Anytime, Anywhere

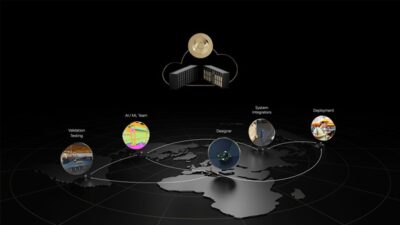

Given the breadth of AI’s applications and impact in healthcare, strategic implementation of the technology is essential. NVIDIA Clara reaches developers wherever they work and in whatever form they need it, through global systems integrators, original design manufacturers, cloud platforms and more.

- Bringing AI to a global network: Global systems integrator Deloitte is helping solution providers around the world bring NVIDIA Clara to the healthcare ecosystem. With access to Clara, Deloitte’s professionals are leveraging MONAI for medical imaging, NVIDIA FLARE for federated learning and BioNeMo for drug discovery to develop innovative solutions for customers across the industry.

- AI solutions expertise: Service delivery partner Quantiphi consults with clients on AI solutions using its expertise in NVIDIA healthcare software, including Clara Discovery, MONAI, BioMegatron and BioNeMo.

- Managing data in the cloud: MONAI has been integrated with all major cloud hyperscalers, allowing for optimized processing and data sharing in a single environment. NVIDIA Parabricks is available in every public cloud and on genomics-specific cloud platforms, including the Terra cloud platform, which is co-developed by The Broad Institute of MIT and Harvard, Microsoft and Verily and has more than 25,000 users.

- Software-defined devices: System builder Advantech is adopting NVIDIA IGX, an industrial-grade edge AI platform, for low-latency, real-time healthcare applications in its all-in-one, medical-grade computers.

Discover the latest in AI and healthcare at GTC, running online through Thursday, March 23. Registration is free.
