This week marks the beginning of the premier annual Computer Vision and Pattern Recognition conference (CVPR 2023), held in person in Vancouver, BC (with additional virtual content). As a leader in computer vision research and a Platinum Sponsor, Google Research will have a strong presence across CVPR 2023 with 90 papers being presented at the main conference and active involvement in over 40 conference workshops and tutorials.

If you are attending CVPR this year, please stop by our booth to chat with our researchers who are actively exploring the latest techniques for application to various areas of machine perception. Our researchers will also be available to talk about and demo several recent efforts, including on-device ML applications with MediaPipe, strategies for differential privacy, neural radiance field technologies and much more.

You can also learn more about our research being presented at CVPR 2023 in the list below (Google affiliations in bold).

Board and organizing committee

Senior area chairs include: Cordelia Schmid, Ming-Hsuan Yang

Area chairs include: Andre Araujo, Anurag Arnab, Rodrigo Benenson, Ayan Chakrabarti, Huiwen Chang, Alireza Fathi, Vittorio Ferrari, Golnaz Ghiasi, Boqing Gong, Yedid Hoshen, Varun Jampani, Lu Jiang, Da-Cheng Juan, Dahun Kim, Stephen Lombardi, Peyman Milanfar, Ben Mildenhall, Arsha Nagrani, Jordi Pont-Tuset, Paul Hongsuck Seo, Fei Sha, Saurabh Singh, Noah Snavely, Kihyuk Sohn, Chen Sun, Pratul P. Srinivasan, Deqing Sun, Andrea Tagliasacchi, Federico Tombari, Jasper Uijlings

Publicity Chair: Boqing Gong

Demonstration Chair: Jonathan T. Barron

Program Advisory Board includes: Cordelia Schmid, Richard Szeliski

Panels

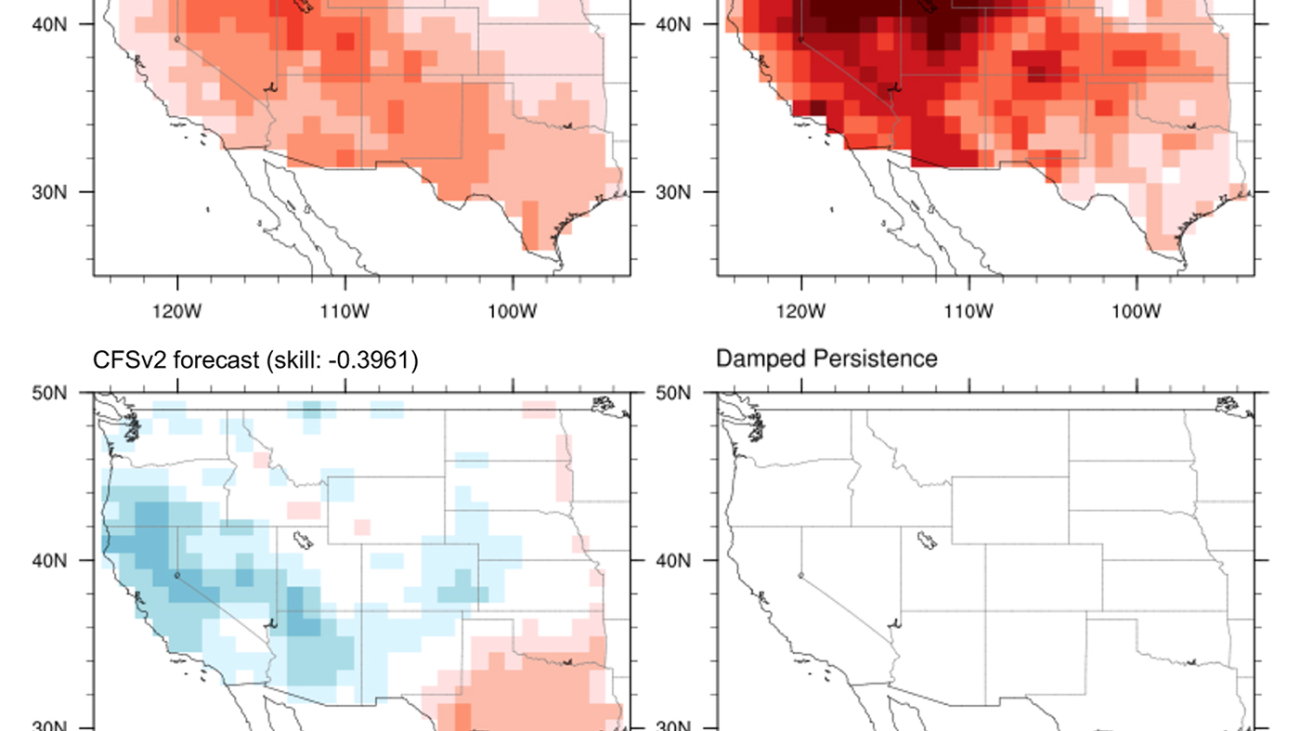

Scientific Discovery and the Environment

Best Paper Award candidates

MobileNeRF: Exploiting the Polygon Rasterization Pipeline for Efficient Neural Field Rendering on Mobile Architectures

Zhiqin Chen, Thomas Funkhouser, Peter Hedman, Andrea Tagliasacchi

DynIBaR: Neural Dynamic Image-Based Rendering

Zhengqi Li, Qianqian Wang, Forrester Cole, Richard Tucker, Noah Snavely

DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation

Nataniel Ruiz*, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, Kfir Aberman

On Distillation of Guided Diffusion Models

Chenlin Meng, Robin Rombach, Ruiqi Gao, Diederik Kingma, Stefano Ermon, Jonathan Ho, Tim Salimans

Highlight papers

Connecting Vision and Language with Video Localized Narratives

Paul Voigtlaender, Soravit Changpinyo, Jordi Pont-Tuset, Radu Soricut, Vittorio Ferrari

MaskSketch: Unpaired Structure-Guided Masked Image Generation

Dina Bashkirova*, Jose Lezama, Kihyuk Sohn, Kate Saenko, Irfan Essa

SPARF: Neural Radiance Fields from Sparse and Noisy Poses

Prune Truong*, Marie-Julie Rakotosaona, Fabian Manhardt, Federico Tombari

MAGVIT: Masked Generative Video Transformer

Lijun Yu*, Yong Cheng, Kihyuk Sohn, Jose Lezama, Han Zhang, Huiwen Chang, Alexander Hauptmann, Ming-Hsuan Yang, Yuan Hao, Irfan Essa, Lu Jiang

Region-Aware Pretraining for Open-Vocabulary Object Detection with Vision Transformers

Dahun Kim, Anelia Angelova, Weicheng Kuo

I2MVFormer: Large Language Model Generated Multi-View Document Supervision for Zero-Shot Image Classification

Muhammad Ferjad Naeem, Gul Zain Khan, Yongqin Xian, Muhammad Zeshan Afzal, Didier Stricker, Luc Van Gool, Federico Tombari

Improving Robust Generalization by Direct PAC-Bayesian Bound Minimization

Zifan Wang*, Nan Ding, Tomer Levinboim, Xi Chen, Radu Soricut

Imagen Editor and EditBench: Advancing and Evaluating Text-Guided Image Inpainting (see blog post)

Su Wang, Chitwan Saharia, Ceslee Montgomery, Jordi Pont-Tuset, Shai Noy, Stefano Pellegrini, Yasumasa Onoe, Sarah Laszlo, David J. Fleet, Radu Soricut, Jason Baldridge, Mohammad Norouzi, Peter Anderson, William Chan

RUST: Latent Neural Scene Representations from Unposed Imagery

Mehdi S. M. Sajjadi, Aravindh Mahendran, Thomas Kipf, Etienne Pot, Daniel Duckworth, Mario Lučić, Klaus Greff

REVEAL: Retrieval-Augmented Visual-Language Pre-Training with Multi-Source Multimodal Knowledge Memory (see blog post)

Ziniu Hu*, Ahmet Iscen, Chen Sun, Zirui Wang, Kai-Wei Chang, Yizhou Sun, Cordelia Schmid, David Ross, Alireza Fathi

RobustNeRF: Ignoring Distractors with Robust Losses

Sara Sabour, Suhani Vora, Daniel Duckworth, Ivan Krasin, David J. Fleet, Andrea Tagliasacchi

Papers

AligNeRF: High-Fidelity Neural Radiance Fields via Alignment-Aware Training

Yifan Jiang*, Peter Hedman, Ben Mildenhall, Dejia Xu, Jonathan T. Barron, Zhangyang Wang, Tianfan Xue*

BlendFields: Few-Shot Example-Driven Facial Modeling

Kacper Kania, Stephan Garbin, Andrea Tagliasacchi, Virginia Estellers, Kwang Moo Yi, Tomasz Trzcinski, Julien Valentin, Marek Kowalski

Enhancing Deformable Local Features by Jointly Learning to Detect and Describe Keypoints

Guilherme Potje, Felipe Cadar, Andre Araujo, Renato Martins, Erickson Nascimento

How Can Objects Help Action Recognition?

Xingyi Zhou, Anurag Arnab, Chen Sun, Cordelia Schmid

Hybrid Neural Rendering for Large-Scale Scenes with Motion Blur

Peng Dai, Yinda Zhang, Xin Yu, Xiaoyang Lyu, Xiaojuan Qi

IFSeg: Image-Free Semantic Segmentation via Vision-Language Model

Sukmin Yun, Seong Park, Paul Hongsuck Seo, Jinwoo Shin

Learning from Unique Perspectives: User-Aware Saliency Modeling (see blog post)

Shi Chen*, Nachiappan Valliappan, Shaolei Shen, Xinyu Ye, Kai Kohlhoff, Junfeng He

MAGE: MAsked Generative Encoder to Unify Representation Learning and Image Synthesis

Tianhong Li*, Huiwen Chang, Shlok Kumar Mishra, Han Zhang, Dina Katabi, Dilip Krishnan

NeRF-Supervised Deep Stereo

Fabio Tosi, Alessio Tonioni, Daniele De Gregorio, Matteo Poggi

Omnimatte3D: Associating Objects and their Effects in Unconstrained Monocular Video

Mohammed Suhail, Erika Lu, Zhengqi Li, Noah Snavely, Leon Sigal, Forrester Cole

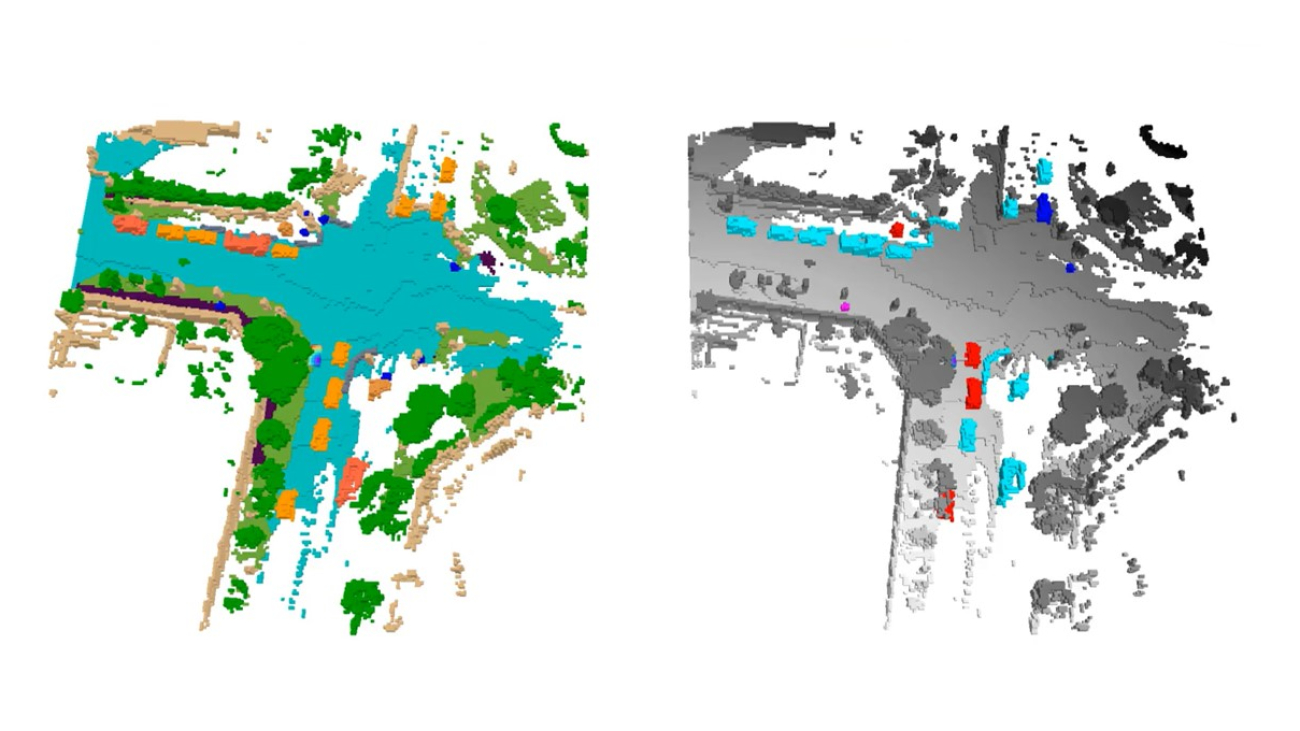

OpenScene: 3D Scene Understanding with Open Vocabularies

Songyou Peng, Kyle Genova, Chiyu Jiang, Andrea Tagliasacchi, Marc Pollefeys, Thomas Funkhouser

PersonNeRF: Personalized Reconstruction from Photo Collections

Chung-Yi Weng, Pratul Srinivasan, Brian Curless, Ira Kemelmacher-Shlizerman

Prefix Conditioning Unifies Language and Label Supervision

Kuniaki Saito*, Kihyuk Sohn, Xiang Zhang, Chun-Liang Li, Chen-Yu Lee, Kate Saenko, Tomas Pfister

Rethinking Video ViTs: Sparse Video Tubes for Joint Image and Video Learning (see blog post)

AJ Piergiovanni, Weicheng Kuo, Anelia Angelova

Burstormer: Burst Image Restoration and Enhancement Transformer

Akshay Dudhane, Syed Waqas Zamir, Salman Khan, Fahad Shahbaz Khan, Ming-Hsuan Yang

Decentralized Learning with Multi-Headed Distillation

Andrey Zhmoginov, Mark Sandler, Nolan Miller, Gus Kristiansen, Max Vladymyrov

GINA-3D: Learning to Generate Implicit Neural Assets in the Wild

Bokui Shen, Xinchen Yan, Charles R. Qi, Mahyar Najibi, Boyang Deng, Leonidas Guibas, Yin Zhou, Dragomir Anguelov

Grad-PU: Arbitrary-Scale Point Cloud Upsampling via Gradient Descent with Learned Distance Functions

Yun He, Danhang Tang, Yinda Zhang, Xiangyang Xue, Yanwei Fu

Hi-LASSIE: High-Fidelity Articulated Shape and Skeleton Discovery from Sparse Image Ensemble

Chun-Han Yao*, Wei-Chih Hung, Yuanzhen Li, Michael Rubinstein, Ming-Hsuan Yang, Varun Jampani

Hyperbolic Contrastive Learning for Visual Representations beyond Objects

Songwei Ge, Shlok Mishra, Simon Kornblith, Chun-Liang Li, David Jacobs

Imagic: Text-Based Real Image Editing with Diffusion Models

Bahjat Kawar*, Shiran Zada, Oran Lang, Omer Tov, Huiwen Chang, Tali Dekel, Inbar Mosseri, Michal Irani

Incremental 3D Semantic Scene Graph Prediction from RGB Sequences

Shun-Cheng Wu, Keisuke Tateno, Nassir Navab, Federico Tombari

IPCC-TP: Utilizing Incremental Pearson Correlation Coefficient for Joint Multi-Agent Trajectory Prediction

Dekai Zhu, Guangyao Zhai, Yan Di, Fabian Manhardt, Hendrik Berkemeyer, Tuan Tran, Nassir Navab, Federico Tombari, Benjamin Busam

Learning to Generate Image Embeddings with User-Level Differential Privacy

Zheng Xu, Maxwell Collins, Yuxiao Wang, Liviu Panait, Sewoong Oh, Sean Augenstein, Ting Liu, Florian Schroff, H. Brendan McMahan

NoisyTwins: Class-Consistent and Diverse Image Generation Through StyleGANs

Harsh Rangwani, Lavish Bansal, Kartik Sharma, Tejan Karmali, Varun Jampani, Venkatesh Babu Radhakrishnan

NULL-Text Inversion for Editing Real Images Using Guided Diffusion Models

Ron Mokady*, Amir Hertz*, Kfir Aberman, Yael Pritch, Daniel Cohen-Or*

SCOOP: Self-Supervised Correspondence and Optimization-Based Scene Flow

Itai Lang*, Dror Aiger, Forrester Cole, Shai Avidan, Michael Rubinstein

Shape, Pose, and Appearance from a Single Image via Bootstrapped Radiance Field Inversion

Dario Pavllo*, David Joseph Tan, Marie-Julie Rakotosaona, Federico Tombari

TexPose: Neural Texture Learning for Self-Supervised 6D Object Pose Estimation

Hanzhi Chen, Fabian Manhardt, Nassir Navab, Benjamin Busam

TryOnDiffusion: A Tale of Two UNets

Luyang Zhu*, Dawei Yang, Tyler Zhu, Fitsum Reda, William Chan, Chitwan Saharia, Mohammad Norouzi, Ira Kemelmacher-Shlizerman

A New Path: Scaling Vision-and-Language Navigation with Synthetic Instructions and Imitation Learning

Aishwarya Kamath*, Peter Anderson, Su Wang, Jing Yu Koh*, Alexander Ku, Austin Waters, Yinfei Yang*, Jason Baldridge, Zarana Parekh

CLIPPO: Image-and-Language Understanding from Pixels Only

Michael Tschannen, Basil Mustafa, Neil Houlsby

Controllable Light Diffusion for Portraits

David Futschik, Kelvin Ritland, James Vecore, Sean Fanello, Sergio Orts-Escolano, Brian Curless, Daniel Sýkora, Rohit Pandey

CUF: Continuous Upsampling Filters

Cristina Vasconcelos, Cengiz Oztireli, Mark Matthews, Milad Hashemi, Kevin Swersky, Andrea Tagliasacchi

Improving Zero-Shot Generalization and Robustness of Multi-modal Models

Yunhao Ge*, Jie Ren, Andrew Gallagher, Yuxiao Wang, Ming-Hsuan Yang, Hartwig Adam, Laurent Itti, Balaji Lakshminarayanan, Jiaping Zhao

LOCATE: Localize and Transfer Object Parts for Weakly Supervised Affordance Grounding

Gen Li, Varun Jampani, Deqing Sun, Laura Sevilla-Lara

Nerflets: Local Radiance Fields for Efficient Structure-Aware 3D Scene Representation from 2D Supervision

Xiaoshuai Zhang, Abhijit Kundu, Thomas Funkhouser, Leonidas Guibas, Hao Su, Kyle Genova

Self-Supervised AutoFlow

Hsin-Ping Huang, Charles Herrmann, Junhwa Hur, Erika Lu, Kyle Sargent, Austin Stone, Ming-Hsuan Yang, Deqing Sun

Train-Once-for-All Personalization

Hong-You Chen*, Yandong Li, Yin Cui, Mingda Zhang, Wei-Lun Chao, Li Zhang

Vid2Seq: Large-Scale Pretraining of a Visual Language Model for Dense Video Captioning (see blog post)

Antoine Yang*, Arsha Nagrani, Paul Hongsuck Seo, Antoine Miech, Jordi Pont-Tuset, Ivan Laptev, Josef Sivic, Cordelia Schmid

VILA: Learning Image Aesthetics from User Comments with Vision-Language Pretraining

Junjie Ke, Keren Ye, Jiahui Yu, Yonghui Wu, Peyman Milanfar, Feng Yang

You Need Multiple Exiting: Dynamic Early Exiting for Accelerating Unified Vision Language Model

Shengkun Tang, Yaqing Wang, Zhenglun Kong, Tianchi Zhang, Yao Li, Caiwen Ding, Yanzhi Wang, Yi Liang, Dongkuan Xu

Accidental Light Probes

Hong-Xing Yu, Samir Agarwala, Charles Herrmann, Richard Szeliski, Noah Snavely, Jiajun Wu, Deqing Sun

FedDM: Iterative Distribution Matching for Communication-Efficient Federated Learning

Yuanhao Xiong, Ruochen Wang, Minhao Cheng, Felix Yu, Cho-Jui Hsieh

FlexiViT: One Model for All Patch Sizes

Lucas Beyer, Pavel Izmailov, Alexander Kolesnikov, Mathilde Caron, Simon Kornblith, Xiaohua Zhai, Matthias Minderer, Michael Tschannen, Ibrahim Alabdulmohsin, Filip Pavetic

Iterative Vision-and-Language Navigation

Jacob Krantz, Shurjo Banerjee, Wang Zhu, Jason Corso, Peter Anderson, Stefan Lee, Jesse Thomason

MoDi: Unconditional Motion Synthesis from Diverse Data

Sigal Raab, Inbal Leibovitch, Peizhuo Li, Kfir Aberman, Olga Sorkine-Hornung, Daniel Cohen-Or

Multimodal Prompting with Missing Modalities for Visual Recognition

Yi-Lun Lee, Yi-Hsuan Tsai, Wei-Chen Chiu, Chen-Yu Lee

Scene-Aware Egocentric 3D Human Pose Estimation

Jian Wang, Diogo Luvizon, Weipeng Xu, Lingjie Liu, Kripasindhu Sarkar, Christian Theobalt

ShapeClipper: Scalable 3D Shape Learning from Single-View Images via Geometric and CLIP-Based Consistency

Zixuan Huang, Varun Jampani, Ngoc Anh Thai, Yuanzhen Li, Stefan Stojanov, James M. Rehg

Improving Image Recognition by Retrieving from Web-Scale Image-Text Data

Ahmet Iscen, Alireza Fathi, Cordelia Schmid

JacobiNeRF: NeRF Shaping with Mutual Information Gradients

Xiaomeng Xu, Yanchao Yang, Kaichun Mo, Boxiao Pan, Li Yi, Leonidas Guibas

Learning Personalized High Quality Volumetric Head Avatars from Monocular RGB Videos

Ziqian Bai*, Feitong Tan, Zeng Huang, Kripasindhu Sarkar, Danhang Tang, Di Qiu, Abhimitra Meka, Ruofei Du, Mingsong Dou, Sergio Orts-Escolano, Rohit Pandey, Ping Tan, Thabo Beeler, Sean Fanello, Yinda Zhang

NeRF in the Palm of Your Hand: Corrective Augmentation for Robotics via Novel-View Synthesis

Allan Zhou, Moo Jin Kim, Lirui Wang, Pete Florence, Chelsea Finn

Pic2Word: Mapping Pictures to Words for Zero-Shot Composed Image Retrieval

Kuniaki Saito*, Kihyuk Sohn, Xiang Zhang, Chun-Liang Li, Chen-Yu Lee, Kate Saenko, Tomas Pfister

SCADE: NeRFs from Space Carving with Ambiguity-Aware Depth Estimates

Mikaela Uy, Ricardo Martin Brualla, Leonidas Guibas, Ke Li

Structured 3D Features for Reconstructing Controllable Avatars

Enric Corona, Mihai Zanfir, Thiemo Alldieck, Eduard Gabriel Bazavan, Andrei Zanfir, Cristian Sminchisescu

Token Turing Machines

Michael S. Ryoo, Keerthana Gopalakrishnan, Kumara Kahatapitiya, Ted Xiao, Kanishka Rao, Austin Stone, Yao Lu, Julian Ibarz, Anurag Arnab

TruFor: Leveraging All-Round Clues for Trustworthy Image Forgery Detection and Localization

Fabrizio Guillaro, Davide Cozzolino, Avneesh Sud, Nicholas Dufour, Luisa Verdoliva

Video Probabilistic Diffusion Models in Projected Latent Space

Sihyun Yu, Kihyuk Sohn, Subin Kim, Jinwoo Shin

Visual Prompt Tuning for Generative Transfer Learning

Kihyuk Sohn, Yuan Hao, Jose Lezama, Luisa Polania, Huiwen Chang, Han Zhang, Irfan Essa, Lu Jiang

Zero-Shot Referring Image Segmentation with Global-Local Context Features

Seonghoon Yu, Paul Hongsuck Seo, Jeany Son

AVFormer: Injecting Vision into Frozen Speech Models for Zero-Shot AV-ASR (see blog post)

Paul Hongsuck Seo, Arsha Nagrani, Cordelia Schmid

DC2: Dual-Camera Defocus Control by Learning to Refocus

Hadi Alzayer, Abdullah Abuolaim, Leung Chun Chan, Yang Yang, Ying Chen Lou, Jia-Bin Huang, Abhishek Kar

Edges to Shapes to Concepts: Adversarial Augmentation for Robust Vision

Aditay Tripathi*, Rishubh Singh, Anirban Chakraborty, Pradeep Shenoy

MetaCLUE: Towards Comprehensive Visual Metaphors Research

Arjun R. Akula, Brendan Driscoll, Pradyumna Narayana, Soravit Changpinyo, Zhiwei Jia, Suyash Damle, Garima Pruthi, Sugato Basu, Leonidas Guibas, William T. Freeman, Yuanzhen Li, Varun Jampani

Multi-Realism Image Compression with a Conditional Generator

Eirikur Agustsson, David Minnen, George Toderici, Fabian Mentzer

NeRDi: Single-View NeRF Synthesis with Language-Guided Diffusion as General Image Priors

Congyue Deng, Chiyu Jiang, Charles R. Qi, Xinchen Yan, Yin Zhou, Leonidas Guibas, Dragomir Anguelov

On Calibrating Semantic Segmentation Models: Analyses and an Algorithm

Dongdong Wang, Boqing Gong, Liqiang Wang

Persistent Nature: A Generative Model of Unbounded 3D Worlds

Lucy Chai, Richard Tucker, Zhengqi Li, Phillip Isola, Noah Snavely

Rethinking Domain Generalization for Face Anti-spoofing: Separability and Alignment

Yiyou Sun*, Yaojie Liu, Xiaoming Liu, Yixuan Li, Wen-Sheng Chu

SINE: Semantic-Driven Image-Based NeRF Editing with Prior-Guided Editing Field

Chong Bao, Yinda Zhang, Bangbang Yang, Tianxing Fan, Zesong Yang, Hujun Bao, Guofeng Zhang, Zhaopeng Cui

Sequential Training of GANs Against GAN-Classifiers Reveals Correlated “Knowledge Gaps” Present Among Independently Trained GAN Instances

Arkanath Pathak, Nicholas Dufour

SparsePose: Sparse-View Camera Pose Regression and Refinement

Samarth Sinha, Jason Zhang, Andrea Tagliasacchi, Igor Gilitschenski, David Lindell

Teacher-Generated Spatial-Attention Labels Boost Robustness and Accuracy of Contrastive Models

Yushi Yao, Chang Ye, Gamaleldin F. Elsayed, Junfeng He

Workshops

Computer Vision for Mixed Reality

Speakers include: Ira Kemelmacher-Shlizerman

Workshop on Autonomous Driving (WAD)

Speakers include: Chelsea Finn

Multimodal Content Moderation (MMCM)

Organizers include: Chris Bregler

Speakers include: Mevan Babakar

Medical Computer Vision (MCV)

Speakers include: Shekoofeh Azizi

VAND: Visual Anomaly and Novelty Detection

Speakers include: Yedid Hoshen, Jie Ren

Structural and Compositional Learning on 3D Data

Organizers include: Leonidas Guibas

Speakers include: Andrea Tagliasacchi, Fei Xia, Amir Hertz

Fine-Grained Visual Categorization (FGVC10)

Organizers include: Kimberly Wilber, Sara Beery

Panelists include: Hartwig Adam

XRNeRF: Advances in NeRF for the Metaverse

Organizers include: Jonathan T. Barron

Speakers include: Ben Poole

OmniLabel: Infinite Label Spaces for Semantic Understanding via Natural Language

Organizers include: Golnaz Ghiasi, Long Zhao

Speakers include: Vittorio Ferrari

Large Scale Holistic Video Understanding

Organizers include: David Ross

Speakers include: Cordelia Schmid

New Frontiers for Zero-Shot Image Captioning Evaluation (NICE)

Speakers include: Cordelia Schmid

Computational Cameras and Displays (CCD)

Organizers include: Ulugbek Kamilov

Speakers include: Mauricio Delbracio

Gaze Estimation and Prediction in the Wild (GAZE)

Organizers include: Thabo Beeler

Speakers include: Erroll Wood

Face and Gesture Analysis for Health Informatics (FGAHI)

Speakers include: Daniel McDuff

Computer Vision for Animal Behavior Tracking and Modeling (CV4Animals)

Organizers include: Sara Beery

Speakers include: Arsha Nagrani

3D Vision and Robotics

Speakers include: Pete Florence

End-to-End Autonomous Driving: Perception, Prediction, Planning and Simulation (E2EAD)

Organizers include: Anurag Arnab

End-to-End Autonomous Driving: Emerging Tasks and Challenges

Speakers include: Sergey Levine

Multi-Modal Learning and Applications (MULA)

Speakers include: Aleksander Hołyński

Synthetic Data for Autonomous Systems (SDAS)

Speakers include: Lukas Hoyer

Vision Datasets Understanding

Organizers include: José Lezama

Speakers include: Vijay Janapa Reddi

Precognition: Seeing Through the Future

Organizers include: Utsav Prabhu

New Trends in Image Restoration and Enhancement (NTIRE)

Organizers include: Ming-Hsuan Yang

Generative Models for Computer Vision

Speakers include: Ben Mildenhall, Andrea Tagliasacchi

Adversarial Machine Learning on Computer Vision: Art of Robustness

Organizers include: Xinyun Chen

Speakers include: Deqing Sun

Media Forensics

Speakers include: Nicholas Carlini

Tracking and Its Many Guises: Tracking Any Object in Open-World

Organizers include: Paul Voigtlaender

3D Scene Understanding for Vision, Graphics, and Robotics

Speakers include: Andy Zeng

Computer Vision for Physiological Measurement (CVPM)

Organizers include: Daniel McDuff

Affective Behaviour Analysis In-the-Wild

Organizers include: Stefanos Zafeiriou

Ethical Considerations in Creative Applications of Computer Vision (EC3V)

Organizers include: Rida Qadri, Mohammad Havaei, Fernando Diaz, Emily Denton, Sarah Laszlo, Negar Rostamzadeh, Pamela Peter-Agbia, Eva Kozanecka

VizWiz Grand Challenge: Describing Images and Videos Taken by Blind People

Speakers include: Haoran Qi

Efficient Deep Learning for Computer Vision (see blog post)

Organizers include: Andrew Howard, Chas Leichner

Speakers include: Andrew Howard

Visual Copy Detection

Organizers include: Priya Goyal

Learning 3D with Multi-View Supervision (3DMV)

Speakers include: Ben Poole

Image Matching: Local Features and Beyond

Organizers include: Eduard Trulls

Vision for All Seasons: Adverse Weather and Lighting Conditions (V4AS)

Organizers include: Lukas Hoyer

Transformers for Vision (T4V)

Speakers include: Cordelia Schmid, Huiwen Chang

Scholars vs Big Models — How Can Academics Adapt?

Organizers include: Sara Beery

Speakers include: Jonathan T. Barron, Cordelia Schmid

ScanNet Indoor Scene Understanding Challenge

Speakers include: Tom Funkhouser

Computer Vision for Microscopy Image Analysis

Speakers include: Po-Hsuan Cameron Chen

Embedded Vision

Speakers include: Rahul Sukthankar

Sight and Sound

Organizers include: Arsha Nagrani, William Freeman

AI for Content Creation

Organizers include: Deqing Sun, Huiwen Chang, Lu Jiang

Speakers include: Ben Mildenhall, Tim Salimans, Yuanzhen Li

Computer Vision in the Wild

Organizers include: Xiuye Gu, Neil Houlsby

Speakers include: Boqing Gong, Anelia Angelova

Visual Pre-Training for Robotics

Organizers include: Mathilde Caron

Omnidirectional Computer Vision

Organizers include: Yi-Hsuan Tsai

Tutorials

All Things ViTs: Understanding and Interpreting Attention in Vision

Hila Chefer, Sayak Paul

Recent Advances in Anomaly Detection

Guansong Pang, Joey Tianyi Zhou, Radu Tudor Ionescu, Yu Tian, Kihyuk Sohn

Contactless Healthcare Using Cameras and Wireless Sensors

Wenjin Wang, Xuyu Wang, Jun Luo, Daniel McDuff

Object Localization for Free: Going Beyond Self-Supervised Learning

Oriane Simeoni, Weidi Xie, Thomas Kipf, Patrick Pérez

Prompting in Vision

Kaiyang Zhou, Ziwei Liu, Phillip Isola, Hyojin Bahng, Ludwig Schmidt, Sarah Pratt, Denny Zhou

* Work done while at Google