TUSHER CHAKRABORTY: Hi. Thank you for having me here, Gretchen, today. Thank you.

HUIZINGA: So because this show is all about abstracts, in just a few sentences, tell us about the problem your paper addresses and why we should care about it.

CHAKRABORTY: Yeah, so think of it this way: I’m a farmer living in a remote area, and I bought a sensor to monitor the soil quality of my farm. The big headache for me would be how to connect the sensor so that I can get access to the sensor data from anywhere. We all know that connectivity is a major bottleneck in remote areas. Now, what if, as a farmer, I could just click the power button of the sensor, and it gets connected from anywhere in the world? It’s pretty amazing, right? And that’s what our research is all about. Get your sensor devices connected from anywhere in the world with just the click of the power button. We call it one-click connectivity. Now, you might be wondering, what’s the secret sauce? It’s not magic; it’s direct-to-satellite connectivity. So these sensors directly get connected to the satellites overhead from anywhere on Earth. The satellites, which are orbiting around the earth, collect the data from the sensing devices and forward it to the ground stations in some other convenient parts of the world where these ground stations are connected to the internet.

HUIZINGA: So, Tusher, tell us what’s been tried before to address these issues and how your approach contributes to the literature and moves the science forward.

CHAKRABORTY: So satellite connectivity is nothing new and has been there for long. However, what sets us apart is our focus on democratizing space connectivity, making it affordable for everyone on the planet. So we are talking about the satellites that are at least 10 to 20 times cheaper and smaller than state-of-the-art satellites. So naturally, this ambitious vision comes with its own set of challenges. So when you try to make something cheaper and smaller, you’ll face lots of challenges that all these big satellites are not facing. So if I just go a bit technical, think of the antenna. So these big satellite antennas, they can actually focus on a particular part of the world. So this is something called beamforming. On the other hand, when we try to make the satellites cheaper and smaller, we can’t have that luxury. We can’t have beamforming capability. So what happens, they have an omnidirectional antenna. So it seems like … you can’t focus on a particular part of the earth; rather, you create a huge footprint all over the earth. So this is one of the challenges that you don’t face in the state-of-the-art satellites. And we try to solve these challenges because we want to make connectivity affordable with cheaper and smaller satellites.

HUIZINGA: Right. So as you’re describing this, it sounds like this is a universal problem, and people have obviously tried to make things smaller and more affordable in the past. How is yours different? What methodology did you use to resolve the problems, and how did you conduct the research?

CHAKRABORTY: OK, I’m thrilled that you asked this one because the research methodology was the most exciting part for me here. As a part of this research, we launched a satellite in a joint effort with a satellite company. Like, this is very awesome! So it was a hands-on experience with a real-deal satellite system. It was not a simulation-based system. The main goal here was to learn the challenges from real-world experience and come up with innovative solutions; at the same time, evaluate the solutions in the real world. So it was all about learning by doing, and let me tell you, it was quite the ride! [LAUGHTER] We didn’t do anything new when we launched the satellite. We just tried to see how industry today does this. We wanted to learn from them, hey, what’s the industry practice? We launched a satellite. And then we faced a lot of problems that today’s industry is facing. And from there, we learned, hey, like, you know, this is a problem the industry is facing; let’s go after this, and let’s solve this. And then we tried to come up with the solutions based on those problems. And this was our approach. We didn’t want to assume something beforehand. We wanted to learn from how industry is going today and help them. Like, hey, these are the problems you are facing, and we are here to help you out.

HUIZINGA: All right, so assuming you learned something and wanted to pass it along, what were your major findings?

CHAKRABORTY: OK, that’s a very good question. So I was talking about the challenges towards this democratization earlier, right? So one of the most pressing challenges: shortage of spectrum. So let me try to explain this from the high level. So we need hundreds of these satellites, hundreds of these small satellites, to provide 24-7 connectivity for millions of devices around the earth. Now, as I was saying, the footprint of a satellite on Earth can easily cover a massive area, somewhat similar to the size of California. So now with this large footprint, a satellite can talk with thousands of devices on Earth. You can just imagine, right? And at the same time, a device on Earth can talk with multiple satellites because we are talking about hundreds of these satellites. Now, things get tricky here. [LAUGHTER] We need to make sure that when a device and a satellite are talking, another nearby device or a satellite doesn’t interfere. Otherwise, there will be chaos, with no one hearing others properly. So when we were talking about this device and satellite chat, right, so what is that all about? In terms of communication, it is all about packet exchange. So the device sends some packet to the satellite; the satellite sends some packet to the device. Now, you can think of it this way: if multiple of these devices are talking with a satellite or multiple satellites are talking with a device, there will be a collision in this packet exchange if you try to send the packets at the same time. And if you do that, then your packets will collide, and you won’t be able to get any packet on the receiver end. So what we do, we try to send these packets on different frequencies. It’s like a different sound or different tone so that they don’t collide with each other. And, like, now, I said that you need different frequencies, but frequency is naturally limited. And the choice of frequency is even more limited. This is very expensive.
But if you have limited frequency and you want to resolve this collision, then you have a problem here. How do you do that? So we solve this problem by smartly looking at an artifact of these satellites. So these satellites are moving really fast around the earth. So when they are moving very fast around the earth, they create a unique signature on the frequency that they are using to talk with the devices on Earth. And we use this unique signature, and in physics, this unique signature is known as the Doppler signature. And now you don’t need a separate frequency to make them sound different, to have packets on different frequencies. You just need to recognize that unique signature to distinguish between satellites and distinguish between their communications and packets. So in that sense, there won’t be any packet collision. And this is what our findings are all about. So with this, now multiple devices and satellites can talk with each other at the same time without interference but using the same frequency.
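
To make the Doppler idea concrete, here is a minimal sketch, not the method from the paper. It assumes a hypothetical 400 MHz carrier, made-up radial velocities, and hypothetical names (`doppler_shift_hz`, `match_satellite`). It uses only the first-order approximation Δf ≈ f·v/c and simply matches an observed frequency offset to the closest predicted one.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0


def doppler_shift_hz(carrier_hz: float, radial_velocity_mps: float) -> float:
    """First-order Doppler shift seen on the ground.

    A positive radial velocity means the satellite is approaching the
    receiver, which raises the received frequency.
    """
    return carrier_hz * radial_velocity_mps / SPEED_OF_LIGHT_MPS


def match_satellite(observed_offset_hz: float, predicted_offsets_hz: dict) -> str:
    """Return the satellite whose predicted offset is closest to the observed one."""
    return min(
        predicted_offsets_hz,
        key=lambda name: abs(predicted_offsets_hz[name] - observed_offset_hz),
    )


# Illustrative values only: both satellites transmit on the same carrier,
# but they move differently relative to the ground station.
CARRIER_HZ = 400e6  # assumed carrier frequency
radial_velocities_mps = {"sat_a": 6000.0, "sat_b": -2000.0}  # assumed

predicted = {
    name: doppler_shift_hz(CARRIER_HZ, velocity)
    for name, velocity in radial_velocities_mps.items()
}

# A packet received about 7.9 kHz above the carrier is attributed to sat_a.
sender = match_satellite(7900.0, predicted)
```

With these assumed numbers the two predicted offsets are several kilohertz apart, which is why two transmissions on the same nominal frequency can still be told apart. A real receiver would have to track how the offset changes over a pass and cope with noise, which this sketch ignores.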

HUIZINGA: It sounds, like, very similar to a big room filled with a lot of people. Each person has their own voice, but in the mix, you, kind of, lose track of who’s talking and then you want to, kind of, tune in to that specific voice and say, that’s the one I’m listening to.

CHAKRABORTY: Yeah, I think you picked up the correct metaphor here! This is the scenario you can try to explain here. So, yeah, like what we are essentially doing, like, if you’re just in a room full of people and they are trying to talk with each other, and then if they’re using the same tone, no one will be able to distinguish one person from another.

HUIZINGA: Right …

CHAKRABORTY: Everyone will sound the same, and that will cause collisions. So you need to make sure that you can differentiate the tones …

HUIZINGA: Yeah …

CHAKRABORTY: … and the satellites differentiate their tones due to their fast movement. And we use our methodology to recognize that tone, which satellite is sending that tone.

HUIZINGA: So you sent up the experimental satellite to figure out what’s happening. Have you since tested it to see if it works?

CHAKRABORTY: Yeah, yeah, so we have tried it out, because this is a software solution, to be honest.

HUIZINGA: Ah.

CHAKRABORTY: As I was talking about, there is no hardware modification required at this point. So what we did, we just implemented this software in the ground stations, and then we tried to recognize which satellite is creating which sort of signature. That’s it!

HUIZINGA: Well, it seems like this research would have some solid real-world impact. So who would you say it helps most and how?

CHAKRABORTY: OK, that’s a very good one. So the majority of the earth still doesn’t have affordable connectivity. The lack of connectivity poses a big challenge to critical industries such as agriculture (the example that I gave), energy, and supply chain, hindering their ability to thrive and innovate. So our vision is clear: to bring 24-7 connectivity for devices anywhere on Earth with just a click of the power button. Moreover, affordability is at the heart of our mission, ensuring that this connectivity is accessible to all. So at the core, our efforts are geared towards empowering individuals and industries to unlock their full potential in an increasingly connected world.

HUIZINGA: If there was one thing you want our listeners to take away from this research, what would it be?

CHAKRABORTY: OK, if there is one thing I want you to take away from our work, it’s this: connectivity shouldn’t be a luxury; it’s a necessity. Whether you are a farmer in a remote village or a business owner in a city, access to reliable, affordable connectivity can transform your life and empower your endeavors. So our mission is to bring 24-7 connectivity to every corner of the globe with just a click of a button.

HUIZINGA: I like also how you say every corner of the globe, and I’m picturing a square! [LAUGHTER] OK, last question. Tusher, what’s next for research on satellite networks and Internet of Things? What big unanswered questions or unsolved problems remain in the field, and what are you planning to do about it?

CHAKRABORTY: Uh … where do I even begin? [LAUGHTER] Like, there are countless unanswered questions and unsolved problems in this field. But let me highlight one that we talked about here: limited spectrum. So as our space network expands, so does our need for spectrum. But what’s the tricky part here? We can’t just throw more and more spectrum at it. The problem is the chunk of spectrum that’s perfect for satellite communication is often already in use by the terrestrial networks. Now, a hard research question would be how we can make sure that the terrestrial and the satellite networks coexist in the same spectrum without interfering [with] each other. It’s a tough nut to crack, but it’s a challenge we are excited to tackle head-on as we continue to push the boundaries of research in this exciting field.

[MUSIC]

HUIZINGA: Tusher Chakraborty, thanks for joining us today, and to our listeners, thanks for tuning in. If you want to read this paper, you can find a link at aka.ms/abstracts. You can also read it on the Networked Systems Design and Implementation, or NSDI, website, and you can hear more about it at the NSDI conference this week. See you next time on Abstracts!

[MUSIC FADES]

Anthony Liguori is an AWS VP and Distinguished Engineer for EC2

Anthony Liguori is an AWS VP and Distinguished Engineer for EC2 Colm MacCárthaigh is an AWS VP and Distinguished Engineer for EC2

Colm MacCárthaigh is an AWS VP and Distinguished Engineer for EC2

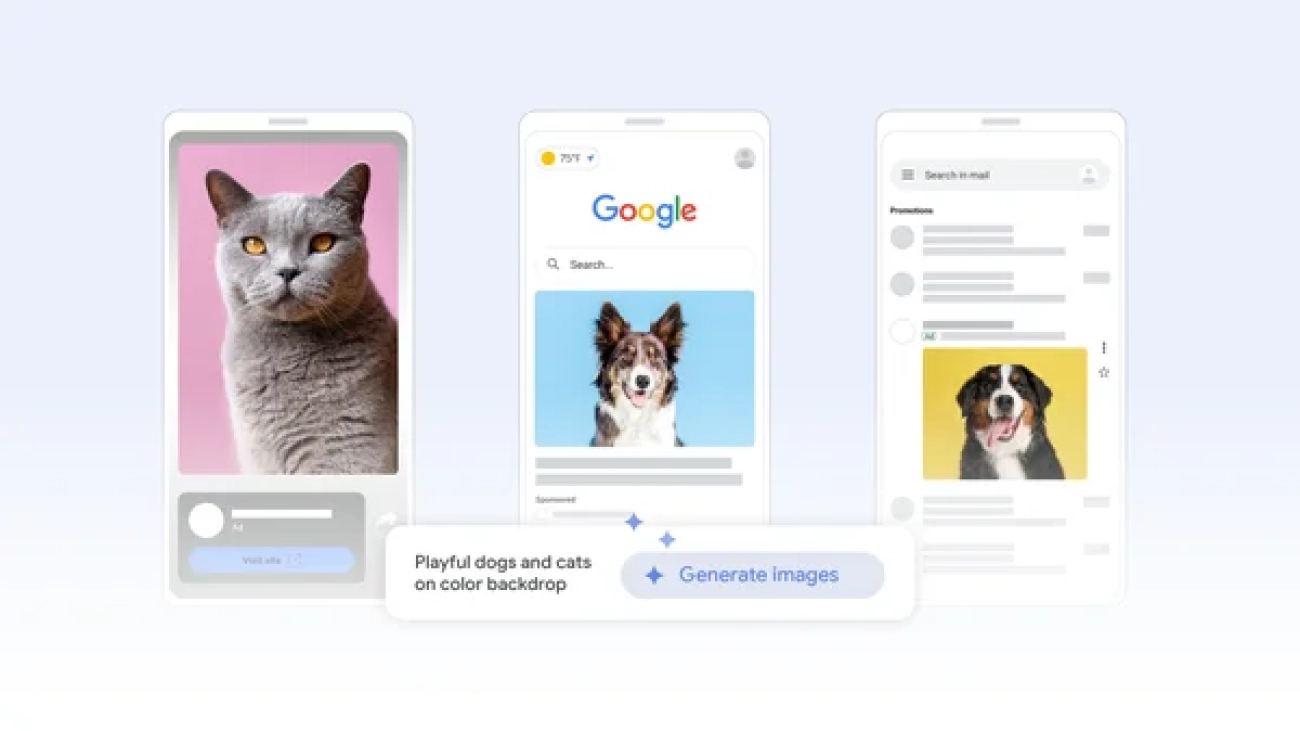

New generative image tools are coming to Demand Gen to help you create a variety of high-quality, stunning image assets with ease.

New generative image tools are coming to Demand Gen to help you create a variety of high-quality, stunning image assets with ease.