In this episode, I’m talking with Principal PM Manager Weishung Liu. Wei has used her love of storytelling and interest in people and their motivations to deliver meaningful products and customer experiences. This includes the creation of a successful line of Disney plush toys and contributions to the satellite internet system Starlink. With Microsoft, she helped develop Watch For, a real-time video analytics platform that has gone on to enhance gaming via streaming highlights and to support content moderation in products such as Xbox. Today, she’s facilitating connections and devising strategies to empower teams within Microsoft Research to maximize their reach. Here’s my conversation with Wei, beginning with her childhood in Silicon Valley.

JOHANNES GEHRKE: Hi, Wei. Welcome to What’s Your Story. You’re our principal PM manager here in the lab, and we’ll talk in a little while about, you know, what you’re doing here right now, but maybe let’s start with, how did you actually end up in tech? Where did you grow up?

WEISHUNG LIU: Oh, wow. OK. So this is a very long, long and, like, nonlinear story about how I got into tech. So I grew up in Silicon Valley, which one would assume means just, like, oh, yes, you grew up in Silicon Valley; therefore, you must be in the STEM field, and therefore, you will be in tech for the rest of your life.

GEHRKE: Yep, that’s, sort of, too familiar a story.

LIU: That’s a very linear story. And I totally actually wanted to rebel against that whole notion of going into tech. So I grew up in Silicon Valley and thought, like, man, I want to not do STEM.

GEHRKE: So did your parents want you to be either a doctor or engineer? Is that the … ?

LIU: Absolutely. It was either a doctor, engineer, or lawyer. So thankfully my sister went the PhD in psychology route, so she, kind of, checked that box for us. And so I was a little bit more free to pursue my very, very, very wide variety of interests. So a little bit of personal information about me. So I grew up a very sick child, and so I was hospitalized a lot. I was in the ER a lot. But that actually afforded me a lot of opportunities to be, sort of, an indoor-only child of reading and playing video games and all sorts of things that I would say, like, expanded my worldview. Like, it was just all sorts of different stories. Like, reading has stories; video games have stories.

GEHRKE: Tell us a story about reading and a story about video games. What …

LIU: Oh my goodness …

GEHRKE: … were your favorite set of books?

LIU: I was really interested in, like, historical fiction at the time. One book that I remember reading about—oh my gosh, it’s a very famous book, and I don’t remember the name anymore. However, it was about a young girl’s perspective of being, living in an internment camp, the Japanese internment camps, back during World War II, I believe, after Pearl Harbor.[1] And it was just kind of her diary and her perspective. It was almost like Diary of Anne Frank but from a Japanese American girl’s perspective instead. And I just loved, kind of, reading about different viewpoints and different eras and trying to understand, like, where do we overlap, how do things change over time, how does history repeat itself in some ways? And, and I love that. And then video games. So I was really into Japanese RPGs back in the day. So it’s funny. I started … my first console was a Mattel Intellivision II, and then it gradually went up to like Nintendo, Super Nintendo, all those, all those consoles. But I had a friend who I used to play RPGs with …

GEHRKE: So these were network RPGs or individual RPGs?

LIU: These were individual RPGs. This is, you know, when I was around 10, the internet appeared, so it probably dates me a little bit. Every time a new RPG came out like by—the company is now called Square Enix but back then it was called SquareSoft—or Nintendo like Zelda, he and I would immediately go out and buy the game or, you know, convince our parents at the time to buy the game, and then we would compete. So, like, this is not couch co-op; he was actually in Texas.

GEHRKE: Like long-distance co-op?

LIU: This is long-distance, long-distance gaming where we would compete to see who would beat the game first.

GEHRKE: Wow.

LIU: No, you’re not allowed to use walkthroughs. And he almost always beat me.

GEHRKE: But these games are like 60-hour, 80-hour games?

LIU: Yeah, like 60- or 80-hour games, but, like, you know, we got so good at them that, well, you had to figure out like how do you, kind of, bypass and get through the main quest as fast as possible. So that was always—

GEHRKE: So any of the side quests and things like that just … ?

LIU: Yeah, oh, yeah, no. So I’m actually a huge completionist, though, so I’d always go back after and do all the side quests to get, you know, we’ll just say “100 percent” achievement. I’m a little bit of an achievement machine that way. But so, like, that kind of stuff was always super fun for me. And so I spent so much of my time then—because I was, kind of, more homebound a lot—just exploring and being curious about things. And, and that got me into art and into design, and I thought, man, I’m going to be an architect someday because I love designing experiences, like spaces for people.

GEHRKE: You thought at that point in time like a real, like a building architect or an architect for like virtual worlds or so … ?

LIU: No, real, like a real physical space that people inhabit and experience. And so, like, I avoided as much STEM as I could in school. I couldn’t, just due to where I lived and grew up and the high school requirements that I had. But the minute I went to college, which happened to be at the University of Washington, which has a great architecture program, I was like, I’m never going to take another STEM class in my life.

GEHRKE: So you enrolled as an architecture major?

LIU: I enrolled as an architecture major, and I was like, I will do what we would call the “natural world” credits, which is kind of the STEM-like things. But I would intentionally find things that were not, like, hard science because I’m like, I’m never going to do this again. I’m never going to be in tech. All these people that are so obsessed with tech who, you know, went to MIT and Stanford, and I’m like, no, no, no, I’m going to be an architecture major.

GEHRKE: So you took, like, the physics for poets class or so …?

LIU: Stuff like that, right. [LAUGHS] Very, very similar. But I ended up just loving learning at school, which is very unsurprising. You know, I took, like, an Arabic poetry class. I took a French fairy tales class. And I just, kind of, explored college and all the things that it had to offer in terms of academics so much that I actually ended up deciding to get two degrees: one in industrial design, which is not too far away from architecture. Architecture is like with large spaces, like you build one building or design one building that lasts maybe 100 years. Industrial design, I, kind of, joke about it. It’s, you know, you design smaller form factors that sometimes, if they’re manufactured with plastics, last millions of years, [LAUGHS] and you build millions of them. But then I also ended up getting a degree in comparative religion, as well. Which meant that, like, my schooling and my class schedules were always a little bit odd because I’d go from, you know, like, the industrial design shop down in our design building and like making things with my hands and working at the bandsaw, and then I’d, you know, rush to this other class where we have like very fascinating philosophical debates about various things in, sort of, the comparative religion space. And I’d write, you know, 10-page essays and … about all sorts of things. And, you know, there’s, like, the study of death is a great example and how different cultures react to death. But, you know, that was as far away from STEM [LAUGHS] as I could have possibly gone.

GEHRKE: Right. I was just thinking, can you maybe explain to our listeners a little bit who may come a little bit more from the STEM field traditionally, what do you study in comparative [religion], and what is the field like?

LIU: So for me, it was really just, like, I took a lot of classes just trying to understand people. I really … and it sounds, kind of, silly to say it that way, but religion is really formed and shaped by people. And so for me, like, the types of classes that I took were, sort of, like studying Western religion, studying Eastern religion, studying the philosophy of religion, like or even—and this still, I still think about it from time to time—how do you define religion? And just even … there’s still so many scholarly debates about how to define, like, what is a “pure” definition of religion, and nobody can really still identify that yet. Is it, you know, because then there’s this distinction of spiritualism and being religious versus something else or just completely made-up, you know, pseudoscience, whatever, right. People have this wide spectrum of things that they describe. But it’s really around learning about the different foundations of religion. And then people tend to specialize. You know, they might specialize in a particular area like Hinduism or, you know, broadly speaking, Eastern religions, or people will, you know, start focusing on Western religions. Or sometimes I think about a specific topic like the intersection of, for example, religion and death or religion and art or even, you know, religion and violence. And there’s a broad spectrum of things that people start specializing in. And it’s very, it’s, sort of, very much in the mind but very much in the heart of how you understand that.

GEHRKE: Yeah, I can see how it even connects to industrial design because there you also want to capture the heart …

LIU: Yes.

GEHRKE: … the hearts of people, right.

LIU: Yep. And that’s kind of how I, how I describe, you know, when people are like, why did you major in that? Like, what do you even do with that? Did you even think about what career you would have with that? I’m like, no, I just really wanted to learn, and I really wanted to understand people. And I felt like religion is one way to understand, sort of, like, sociologically how people think and get into that deep, like, that deep feeling of faith and where does it come from and how does it manifest and how does it motivate people to do things in life. And to your point, it’s very similar to industrial design because you’re, you know, we talk about design thinking and you have to really deeply understand the user and the people that you’re designing for in order to create something that really lasts, that matters to them. So that’s, kind of, my, at least my undergrad experience. And in a very, very brief way, I’ll just kind of walk through or at least tell you the very nonlinear path that I took to get to where I am here now at Microsoft Research. So like the day after I graduated from the University of Washington, I moved to Florida.

GEHRKE: And just as a question: so you graduated from the University of Washington—did you have like a plan, you know, this is like the career I want to have?

LIU: Oh no! So here’s the funny thing about design, and I hope that, you know, my other, the designers who might be watching or listening [LAUGHS] to this might not get upset—hopefully don’t get upset with me about this—is I love the design thinking aspect of design, like understanding why people do the things they do, what types of habits can you build with the products—physical products? I was very obsessed with physical, tangible things at the time. And then I learned through, like, internships and talking to other designers who were, you know, already in the field that that’s not what they do. That they don’t go and like, oh, let’s go talk to people and understand deeply what they do. Like, there’s other people that do that. OK, well, what do you do? Well, I work in, you know, CAD, or I work on SolidWorks, or I do Rhino, and I do surfacing. I’m like, OK, what else? Who decides what gets made? Oh, that’s like, you know, a product manager or product—oh, what’s that? Who? What? What does that even mean? Like, tell me more about that.

GEHRKE: So it’s like the dichotomy that you see even here in the company where the engineers have to, sort of, build the things, but the product managers are …

LIU: But someone else is …

GEHRKE: … in the middle …

LIU: … someone else is, kind of, interpreting what the market and the users are saying, what the business is saying. And I was like, I like doing that because that’s more about understanding people and the business and the reason—the why. And so …

GEHRKE: Just before you go to your career, I mean, I must … I have to ask, what are some of the favorite things that you built during your undergrad? Because you said you really like to build physical things.

LIU: Oh my gosh!

GEHRKE: Maybe one or two things that you actually built …

LIU: Yeah …

GEHRKE: … that was, sort of, so fun.

LIU: So one of my projects was actually a Microsoft-sponsored project for one quarter, and all they showed up with—his name’s Steve Kaneko. He retired not too long ago from here. Steve showed up and said, I want you all to design a memory-sharing device.

GEHRKE: Interesting …

LIU: And that was it.

GEHRKE: So what is memory sharing? He didn’t define what that means?

LIU: He didn’t define it because as designers, that was our way of interpret—we had to interpret and understand what that meant for ourselves. And it was a very, very free-form exploration. And I thought … the place that I started from was … at the time, I was like, there’s like 6 or 7 billion people in the world. How many of them do I actually know? And then how many of them do I actually want to know or maybe I want to know better?

GEHRKE: To share a memory with …

LIU: To share my memories with, to share a part of me. Like, memories are …

GEHRKE: Pretty personal.

LIU: … who we are—or not who we are but parts of who we are—and drive who we become in some ways. And so I thought, you know, what would be cool is if you had a bracelet, and the bracelet were individual links, and each individual link was a photo, like a digital photo, very tiny digital photo, of something that you chose to share. And so, you know, I designed something at the time … like, the story I told was, like, well, you know, this woman who’s young decided to go to, you know, she’s taking the bus, and she put on her, like, “I wish to go to Paris” kind of theme, right. So she had a bunch of Parisian-looking things or something in that vein, right. And, you know, she gets on the bus and her bracelet vibrates. There’s, like, a haptic reaction from this bracelet. And that means that there’s someone else on the bus with this, you know, with a bracelet with their memories. It’s kind of an indicator that people want to share their stories with someone else. And, you know, wouldn’t it be great if, you know, this woman now sits down on the bus, because she sits next to the person who’s wearing it. Turns out to be an elderly woman who’s wearing, coincidentally, you know, her Paris bracelet, but it’s of her honeymoon with her deceased husband from many years ago. And, you know, like, think of the power of the stories that they could share with each other. That, you know, this woman, elderly woman, can share with, you know, this younger woman, who has aspirations to go, and the memories and the relationship that they can build from that. And so that was, kind of, my memory-sharing device at the time.

GEHRKE: I mean, it’s super interesting because, I mean, the way I think about this is that we have memory-sharing applications now like Facebook and Instagram and TikTok and so on, but they, the algorithm decides really …

LIU: Yes …

GEHRKE: … who to share it with and where and why to share it. Whereas here, it’s proximity, right? It somehow leads to this physical and personal connection afterwards, right? The connection is not like, OK, suddenly on my bracelet, her stories show up …

LIU: Yes …

GEHRKE: … but, you know, maybe we sit next to each other on the bus, and it vibrates, and then we start a conversation.

LIU: Exactly. You own, you know, whatever content you choose to have on your physical person, but you’re sharing yourself in a different way, and you’re sharing your memories and you’re sharing a moment. And it might just be a moment in time, right. It doesn’t have to be a long-lasting thing. That, you know, this elderly woman can say, hey, there’s this really great bistro that we tried on, you know, this particular street, and I hope it’s still there, because if you go, ask for this person or try this thing out and, like, what an incredible opportunity it is for this other woman, who, you know, maybe she does someday go to Paris and she does find it. And she thinks of that time, like, how grateful she was to have met, you know, this woman on the bus. And just for that brief whatever bus … however long that bus ride was, to have that connection, to learn something new about someone else, to share and receive a part of somebody else who you may never have known otherwise. And then that was, that was what I was thinking of, you know, in terms of a memory-sharing device was memory creates connections or it reinforces connections. So I guess very similarly to my people thing and being fascinated by people, like, this was my way of trying to connect people in a different way, in the space that they inhabit and not necessarily on their devices.

GEHRKE: And then what did Microsoft say to that? Was there like an end-of-quarter presentation?

LIU: Oh, yeah! There was a, there was a, you know, big old presentation. I can’t even remember which building we were at, but I think everybody was just like, wow, this is great. And that was it. [LAUGHTER]

GEHRKE: And that was it. It sounds like a really fascinating device.

LIU: Yeah, it was. And lots of people came up with all sorts of really cool things because everybody interpreted the, I’ll just say, the prompt differently, right.

GEHRKE: Right …

LIU: … And that was my interpretation of the prompt at the time.

GEHRKE: Well, super interesting.

LIU: Yeah.

GEHRKE: Coming back to, so OK, so you’ve done just a bunch of really amazing projects. You, sort of, it seems like you literally lived the notion of liberal education.

LIU: I did. I, like, even now I just love learning. I get my hands on all sorts of weird things. I picked up whittling as a random example.

GEHRKE: What is whittling? Do I even know what that is? [LAUGHS]

LIU: So whittling is basically carving shapes into wood. So … I’m also very accident prone, so there’s, like, lots of gloves I had to wear to protect my hands. But, you know, it was like, oh, I really just want to pick up whittling. And I literally did, you know. You can grab a stick and you can actually buy balsa wood that’s in a, in decent shape. But you can just start carving away at whatever … whatever you would like to form that piece of wood into, it can become that. So I made a cat, and then I made what I jokingly refer to as my fidget toy at home. It’s just a very smooth object. [LAUGHS]

GEHRKE: That you can hold and …

LIU: I just made it very round and smooth and you can just, kind of, like, rub it, and yeah, it’s …

GEHRKE: Super interesting.

LIU: … it’s … I pick up a lot of random things because it’s just fascinating to me. I learned a bunch of languages when I was in school. I learned Coptic when I was in school for no other reason than, hey, that sounds cool; you can read the Dead Sea Scrolls [LAUGHS] when you learn Coptic—OK!

GEHRKE: Wow. And so much, so important in today’s world, right, which is moving so fast, is a love for learning. And then especially directed in some areas.

LIU: Yeah.

GEHRKE: You know, that’s just really an awesome skill.

LIU: Yeah.

GEHRKE: And so you just graduated. You said you moved to Florida.

LIU: Oh, yes, yes. Yes. So, so about a month before this happened, right—it didn’t just spontaneously happen. A month before, I had a good friend from the architecture program who had said, hey, Wei, you know, I’m applying for this role in guest services at Disney. I was like, really? You can do that? And she’s like, yeah, yeah, yeah. So I was like, that sounds really cool. And I, you know, went to, like, the Disney careers site. I’m like one month or two months away from graduating. Still, like, not sure what I’m totally going to do because at that point, I’m like, I don’t think I want to be a designer because I don’t—the part that I love about it, the part that I have passion about, is not in the actual design of the object, but it’s about the understanding of why it needs to exist.

GEHRKE: The interconnection between the people and the design.

LIU: The people and the design, exactly. And so when I found, I found this, like, product development internship opportunity, and I was like, what does that even mean? That sounds cool. I get to …

GEHRKE: At Disney?

LIU: At Disney. And it was, like—and Disney’s tagline, the theme park merchandise’s tagline, was “creating tangible memories.” I was like, oh boy, this just checks all the boxes. So I applied, I interviewed, did a phone interview, and they hired me within 24 hours. They were like, we would like you to come. And I was like, I would absolutely love to move to Florida and work there. So, yeah, the day after I graduated from U-Dub, I drove all the way across the country from Seattle.

GEHRKE: You drove?

LIU: From Seattle with two cats.

GEHRKE: That must have been an interesting adventure by itself.

LIU: Oh, yes. With two cats in the car, let me tell you, it was fascinating. All the way to Florida, Orlando, Florida. And the day that I got there or, no, two days after I got there, I found out that I was going to be working in the toys area. So plush and dolls, which is, like, you can imagine just absolutely amazing. Making, like, stuffed toys that then—because my office was a mile down the road from Disney’s Animal Kingdom and therefore a couple miles away from Magic Kingdom or Hollywood Studios or EPCOT—I could actually go see, I’ll just say, the “fruits of my labor” instantly, and not only that, see it bring joy to children.

GEHRKE: So what is the path? So you would design something, and how quickly would it then actually end up in the park? Or how did you, I mean, how did you start the job?

LIU: What did I do there? Yeah, yeah …

GEHRKE: Well, what’s the interface between the people and the design here?

LIU: Yeah … so, so, really, I didn’t actually do any design. There was an entire group called Disney Design Group that does all the designing there. And so what I did was I understood, what do we need to make and why? What memories are we—what tangible memories do we want to create for people? Why does it matter to them? In many ways, it’s, sort of, like, it’s still a business, right. You’re creating tangible memories to generate revenue and increase the bottom line for the company. But … so my role was to understand what trends were happening: what were the opportunities? What were guests doing in the parks? What types of things are guests looking for? What are we missing in our SKU lineup, or stock-keeping-unit lineup, and then in which merchandising areas do they need to happen? And so I, actually, as part of my internship, my manager said, hey, I let every intern every time they’re here come up with any idea they want, and you just have to see it from start to execution—in addition to all the other stuff that I worked on. I was like, sounds good. And I came up with this idea that I was like, you know, it would be cool … Uglydolls was really popular at the time. Designer toys were getting really popular from Kidrobot, which was kind of, like, there was this vinyl thing and you can—it was just decorative of all different art styles on the same canvas. And I was like, you know, what if we did that with Mickey, and then, you know, what if the story that we’re telling is, you know, just for the parks—Walt Disney World and Disneyland—that there were aliens or monsters coming to visit the park, but they wanted to blend in and fit in? Well, how would they do that? Well, they clearly see Mickey heads everywhere, and Mickey is very popular here clearly, and so they try to dress up like Mickey, but they don’t do it quite well. So they got the shape right, but everything else about them is a little bit different, and they all have their own unique personalities and …

GEHRKE: You can tell a story around them …

LIU: You can tell a story—see, it’s all about stories. And then it … I got buy-in from everybody there, like, all the way up to the VP. I had to get brand because I was messing with the brand icon. But, you know, it became an entire line called Mickey Monsters at Disney. I still have them all. There were two—then it went from plush; it became consumables, which are like edible things. It went into key chains. It went, it was super … it was … I probably went a little bit too hard, or I took the, I think, I took the assignment very seriously. [LAUGHS]

GEHRKE: Yep, yep. Well, it seemed to be a huge success, as well.

LIU: Yeah. It did really well in the time that it was there. We did a test, and I was really, really proud of it. But you know, my—what I did though is, you know, very concretely was I started with an idea. I, you know, convinced and aligned with lots of people in various disciplines that this is something that we should try and experiment on. You know, worked with the designers to really design what this could look like. You know, scoped out what types of fabrics because there’s all sorts of different textures out there. Working with, kind of, our sourcing team to understand, like, which vendors do we want to work with. And then typically, in the plush industry, manufacturing back in the day could happen—and in terms of supply chain, manufacturing, and then delivery of product—could take about six months.

GEHRKE: OK …

LIU: And so when I was there, anything I worked on would, kind of, appear in six months, which is actually very cool. I mean, it’s not like software, where anything you work on is, you’re like boop, compile—oh look [there] it is. It depends on how fast your computer is. You know, it’s pretty instantaneous compared to six months to see the fruits of your labor. But it was a really, just such a great experience. And then seeing, you know, then going to the parks and seeing children with …

GEHRKE: Yeah, the stuff that you …

LIU: … the thing that I worked on, the thing that I had the idea on, and, like, them going like, Mom, I really want this.

GEHRKE: Right …

LIU: You know, we’re not really selling to the kids; we’re, kind of, selling to the parents.

GEHRKE: It’s a bit like this feeling that we can have here at Microsoft, right, if any of our ideas makes it into products …

LIU: Yup …

GEHRKE: … that are then used by 100 million people and hopefully bring them joy and connection.

LIU: Exactly. And that’s why, like, I just think Microsoft is great, because our portfolio is so broad, and so much of our work touches different parts of our lives. And I’ll even pick on, you know, like I have, you know, in my family, my daughter goes to school—clearly, obviously, she would go to school—but she used Flipgrid, now known as Flip, for a while. And I was like, hey, that’s cool. Like, she uses something that, you know, I don’t directly work on, but my company works on.

GEHRKE: Well, and you were involved with it through Watch For, right …

LIU: Yes, I was …

GEHRKE: … which did become the moderation for Flip.

LIU: Yep. Watch For, you know, helps to detect inappropriate content on Flip. And, you know, that’s super cool because now I’m like, oh, the work that I’m doing actually is directly impacting and helping people like my daughter and making a difference and, you know, keeping users safe from content that maybe we don’t want them to see. You know, other areas like Microsoft Word, I’m like, wow, this is a thing. Like, I’m at the company that makes the thing that I’ve used forever, and, you know, like, it’s just fascinating to see the types of things that we can touch here at Microsoft Research, for example. And how, you know, I, you know, Marie Kondo popularized the term “joy,” like, “sparking joy,” but …

GEHRKE: If you look at an item and if it doesn’t spark joy …

LIU: If it doesn’t spark joy, right …

GEHRKE: … then you know on which side it goes.

LIU: Exactly. But, but, you know, like, I’ve always felt like I want the things that I work on to create joy in people. And it was very obvious when you make toys that you see the joy on children’s faces with it. It’s a little bit different, but it’s so much more nuanced and rewarding when you also see, sort of, the products that, the types of things that we work on in research create joy. It’s, you know, it’s funny because I mentioned software is instantaneous in many ways, and then, you know, toys takes a little bit longer. But then, you know, in the types of research that we do, sometimes it takes a little bit longer than, a little bit longer [LAUGHS] …

GEHRKE: It takes years sometimes!

LIU: … than six months. Years to pay off. But, like, that return on that investment is so worth it. And, you know, I see that in, kind of, the work that lots of folks around MSR [Microsoft Research] do today. And knowing that even, sort of, the circles that I hang out in now do such crazy, cool, impactful things that help benefit the world. And, you know, it’s funny, like, never say never. I’m in tech and I love it, and I don’t have a STEM background. I didn’t get a STEM background. I didn’t get it, well, I don’t have a STEM degree. Like, I did not go—like, I can’t code my way out of a paper bag. But the fact that I can still be here and create impact and do meaningful work and, you know, work on things that create joy and positively impact society is, like, it speaks to me like stories speak to me.

GEHRKE: I mean, there’s so many elements that come together in what you’re saying. I mean, research is not a game of the person sitting in the lonely corner at her whiteboard, right? But it’s a team sport.

LIU: Yep.

GEHRKE: It requires many different people with many different skills, right? It requires the spark of ingenuity. It requires, you know, the deep scientific insight. It requires then the scaling and engineering. It requires the PM, right, to make actually the connection to the value, and the execution then requires the designer to actually create that joy with the user interface to seeing how it actually fits.

LIU: Exactly. And it’s fascinating that we sometimes talk about research being like a lonely journey. It can be, but it can also be such an empowering collaborative journey that you can build such incredible cool things when you bring people together—cross-disciplinary people together—to dream bigger and dream about new ideas and new ways of thinking. And, like, that’s why I also love talking to researchers here because they all have such unique perspectives and inner worlds and lives that are frankly so different from my own. And I think when they encounter me, they’re like, she’s very different from us, too.

GEHRKE: But I think these differences are our superpower, right, because …

LIU: Exactly. And that’s what brings us together.

GEHRKE: … they have to be bridged and that brings us together. Exactly. So how, I mean, if you think about Microsoft Research as over here. You’re here in Disney in Florida?

LIU: Yes, yes, yes. So …

GEHRKE: You had quite a few stops along the way.

LIU: I did have a lot of stops along the way.

GEHRKE: And very nonlinear also?

LIU: It was also very nonlinear. So Disney took me to the third, at the time, the third-largest toy company in the US, called JAKKS Pacific, where I worked on again, sort of, Disney-licensed and Mattel-licensed products, so “dress up and role play” toys is what we refer to them as. “Dress up” meaning, like, if you go to your local Target or Walmart or whatever, kind of, large store, they will have in their toy sections like dresses for Disney princesses, for example, or Disney fairies. Like, I worked on stuff like that, which is also very cool because, you know, usually around Halloween time here in the US is when I’m like, hey, I know that. And then that, kind of, took me to a video game accessory organization here in Woodinville.

GEHRKE: There’s the connection to tech starting to appear.

LIU: There’s a little bit of a connection to tech, where I was like, I love video games! And I got to work on audio products there, as well, like headphones. And it was the first time I started working on things that, I’ll just say, had electrons running through them. So I had already worked on things that were, like, both soft lines—we refer to a soft line as bags and things that require, like, fabrics and textiles—and then I worked on hard lines, which were things that are more, things that are more physically rigid, like plastics. And so I was like, OK, well, I’ve worked on hard-lines-like stuff, and now I’m going to work on hard lines with electrons running through them. That’s kind of neat. And I learned all sorts of things about electricity. I was like, oh, this is weird and fascinating and circuits and … . And then I was like, well, this is cool, but … what else is there? And it took me to not a very well-known company in some circles, but a company called Fluke Corporation. Fluke is best known for its digital multimeters, and I worked there on their thermal imaging cameras. So it’s, for people who don’t know, it’s kind of like Predator vision. You can see what’s hot; you can see what’s not. It’s very cool. And Fluke spoke to me because their, you know, not only is their tagline “they keep your world up and running”; a lot of the things that Fluke does, especially when I heard stories from, like, electricians and technicians who use Fluke products, are like, this Fluke saved my life. I’m like, it did? What? And they’re like, you know, I was in a high-voltage situation, and I just wasn’t paying attention. I, you know, didn’t ground properly. And then there was an incident. But, you know, my multimeter survived, and more importantly, I survived. And you’re like, wow, like, that’s, that’s really cool. And so while I was at Fluke, they asked me if I wanted to work on a new IoT project. And I was like, I don’t even know what IoT is.
“Internet of Things” … like, OK, well, you said “things” to me, and I like things. I like tangible things. Tell me more. And so that was, kind of, my first foray into things that had … of products with electrons on them with user interfaces and then also with software, like pure software, that were running on devices like your smartphones or your tablets or your computers. And so I started learning more about like, oh, what does software development look like? Oh, it’s a lot faster than hardware development. It’s kind of neat. And then that took me to SpaceX, of all places. It was super weird. Like, SpaceX was like, hey, do you want to come work in software here? I was like, but I’m not a rocket scientist. They’re like, you don’t need to be. I was like, huh, OK. And so I worked on Starlink before Starlink was a real thing. I worked on, kind of, the back-office systems for the ISP. I also worked on what we would refer to as our enterprise resource planning system that powers all of SpaceX. It’s called Warp Drive.

GEHRKE: That’s where you got all your software experience.

LIU: That’s where I learned all about software and working on complex systems, also monoliths and older systems, and how do you think about, you know, sometimes zero-fault-tolerance systems that also remain flexible for their users so they can move fast. And then from SpaceX, that took me to a startup called Likewise. It’s here in Bellevue. And then from the startup, I was like, I really like those people in Microsoft. I really want to work in research because they come up with all these cool ideas, and then they could do stuff with it. And I’m such an idea person, and maybe I’m pretty good at execution, but I love the idea side of things. And I discovered that over the course of my career, and that’s actually what brought me here to begin with.

GEHRKE: And that’s, sort of, your superpower that you bring now here. So if I think about a typical day, right, what do you do throughout, throughout your day? What is it, what is it to be a PM manager here at MSR?

LIU: So it’s funny because when I was just a PM and not a manager, I was more, kind of, figuring out, how do I make this product go? How do I make this product ship? How do I move things forward and empower organizations with the products that I—people and organizations on the planet to achieve more [with] what I’m working on? And now as a PM manager, I’m more empowering the people in my team to do that and thinking about uniquely like, who are they, what are their motivations, and then how do I help them grow, and then how do I help their products ship, and how do I help their teams cohere? And so really my day-to-day is so much less, like, being involved in the nitty-gritty details of any project at any point in time, but it’s really meeting with different people around Microsoft Research and just understanding, like, what’s going on and making sure that we’re executing on the impactful work that we want to move forward. You know, it’s boring to say it’s—it doesn’t sound very interesting. Like, mostly, it’s emails and meetings and talking, and, you know, talking to people one-on-one, occasionally writing documents and creating artifacts that matter. But more importantly, I would say it’s creating connections, helping uplift people, and making sure that they are moving and being empowered in the way that they feel that—to help them achieve more.

GEHRKE: That’s super interesting. Maybe in closing, do you have one piece of career advice for everybody, you know, anybody who’s listening? Because you have such an interesting nonlinear career, yet when you are at Disney you couldn’t probably … didn’t imagine that you would end up here at MSR, and you don’t know what, like, we had a little pre-discussion. You said you don’t know where you’re going to go next. So what’s your career advice for any listener?

LIU: I would say, you know, if you’re not sure, it’s OK to not be sure, and, you know, instead of asking yourself why, ask yourself why not. If you look at something and you’re like, hey, that job looks really cool, but I am so unqualified to do it for whatever reason you want to tell yourself, ask yourself why not. Even if it’s, you know, you’re going from toys to something in STEM, or, you know, I’m not a rocket scientist, but somehow, I can create value at SpaceX? Like, if you want to do it, ask yourself why not and try and see what happens. Because if you stop yourself at the start, before you even start trying, then you’re never going to find out what happens next.

[MUSIC]

GEHRKE: It’s just such an amazing note to end on. So thank you very much for the great conversation, Wei.

LIU: Yeah. Thanks, Johannes.

GEHRKE: To learn more about Wei or to see photos of her work and of her childhood in Silicon Valley, visit aka.ms/ResearcherStories.

[MUSIC FADES]

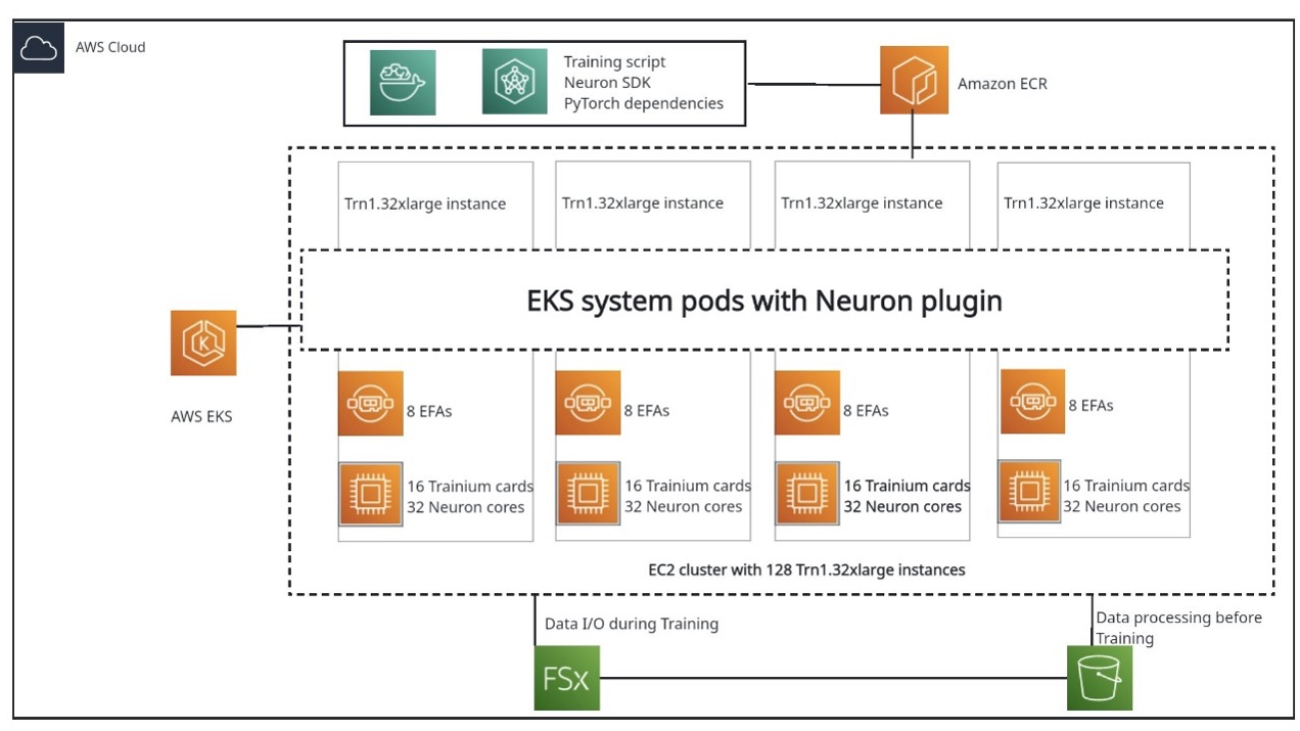

Maysara Hamdan is a Partner Solutions Architect based in Atlanta, Georgia. Maysara has over 15 years of experience building and architecting software applications and IoT connected products in the telecom and automotive industries. At AWS, Maysara helps partners build their cloud practices and grow their businesses. Maysara is passionate about new technologies and is always looking for ways to help partners innovate and grow.


Eric Bolme is a Specialist Solution Architect with AWS based on the East Coast of the United States. He has 8 years of experience building out a variety of deep learning and other AI use cases and focuses on Personalization and Recommendation use cases with AWS.

Jianying Lang is a Principal Solutions Architect at the AWS Worldwide Specialist Organization (WWSO). She has over 15 years of experience in the HPC and AI fields. At AWS, she focuses on helping customers deploy, optimize, and scale their AI/ML workloads on accelerated computing instances. She is passionate about combining techniques from the HPC and AI fields. Jianying holds a PhD in Computational Physics from the University of Colorado at Boulder.

Fei Chen has 15 years of industry experience leading teams in developing and productizing AI/ML at internet scale. At AWS, she leads the worldwide solution teams in Advanced Compute, including AI accelerators, HPC, IoT, visual and spatial compute, and emerging technology, focusing on technical innovations (AI and generative AI) in the aforementioned domains.

Haozheng Fan is a software engineer at AWS. He is interested in large language models (LLMs) in production, including pre-training, fine-tuning, and evaluation. His work spans from the framework application level to the hardware kernel level. He currently works on LLM training on novel hardware, with a focus on training efficiency and model quality.

Hao Zhou is a Research Scientist with Amazon SageMaker. Before that, he worked on developing machine learning methods for fraud detection for Amazon Fraud Detector. He is passionate about applying machine learning, optimization, and generative AI techniques to various real-world problems. He holds a PhD in Electrical Engineering from Northwestern University.

Yida Wang is a principal scientist in the AWS AI team of Amazon. His research interests are in systems, high-performance computing, and big data analytics. He currently works on deep learning systems, with a focus on compiling and optimizing deep learning models for efficient training and inference, especially large-scale foundation models. His mission is to bridge the high-level models from various frameworks and low-level hardware platforms, including CPUs, GPUs, and AI accelerators, so that different models can run with high performance on different devices.

Jun (Luke) Huan is a Principal Scientist at AWS AI Labs. Dr. Huan works on AI and data science. He has published more than 160 peer-reviewed papers in leading conferences and journals and has graduated 11 PhD students. He was a recipient of the NSF Faculty Early Career Development Award in 2009. Before joining AWS, he worked at Baidu Research as a distinguished scientist and the head of Baidu Big Data Laboratory. He founded StylingAI Inc., an AI startup, and worked as the CEO and Chief Scientist from 2019–2021. Before joining the industry, he was the Charles E. and Mary Jane Spahr Professor in the EECS Department at the University of Kansas. From 2015–2018, he worked as a program director at the US NSF, in charge of its big data program.

Dr. Changsha Ma is an AI/ML Specialist at AWS. She is a technologist with a PhD in Computer Science, a master’s degree in Education Psychology, and years of experience in data science and independent consulting in AI/ML. She is passionate about researching methodological approaches for machine and human intelligence. Outside of work, she loves hiking, cooking, hunting food, and spending time with friends and family.

Dr. Changsha Ma is an AI/ML Specialist at AWS. She is a technologist with a PhD in Computer Science, a master’s degree in Education Psychology, and years of experience in data science and independent consulting in AI/ML. She is passionate about researching methodological approaches for machine and human intelligence. Outside of work, she loves hiking, cooking, hunting food, and spending time with friends and families. Jun Shi is a Senior Solutions Architect at Amazon Web Services (AWS). His current areas of focus are AI/ML infrastructure and applications. He has over a decade experience in the FinTech industry as software engineer.

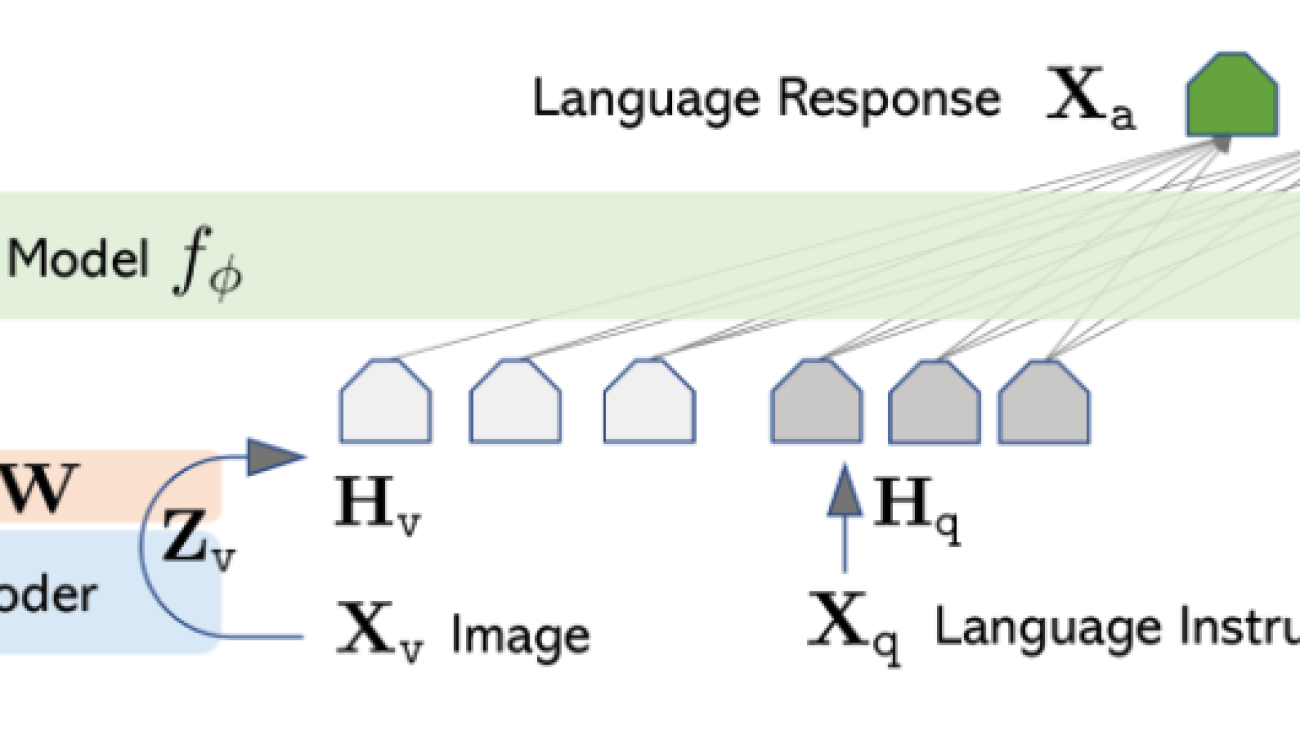

Jun Shi is a Senior Solutions Architect at Amazon Web Services (AWS). His current areas of focus are AI/ML infrastructure and applications. He has over a decade experience in the FinTech industry as software engineer. Alfred Shen is a Senior AI/ML Specialist at AWS. He has been working in Silicon Valley, holding technical and managerial positions in diverse sectors including healthcare, finance, and high-tech. He is a dedicated applied AI/ML researcher, concentrating on CV, NLP, and multimodality. His work has been showcased in publications such as EMNLP, ICLR, and Public Health.

Alfred Shen is a Senior AI/ML Specialist at AWS. He has been working in Silicon Valley, holding technical and managerial positions in diverse sectors including healthcare, finance, and high-tech. He is a dedicated applied AI/ML researcher, concentrating on CV, NLP, and multimodality. His work has been showcased in publications such as EMNLP, ICLR, and Public Health.