Learn how new Google AI on Android features can boost employee productivity, help developers build smarter tools and improve business workflows.

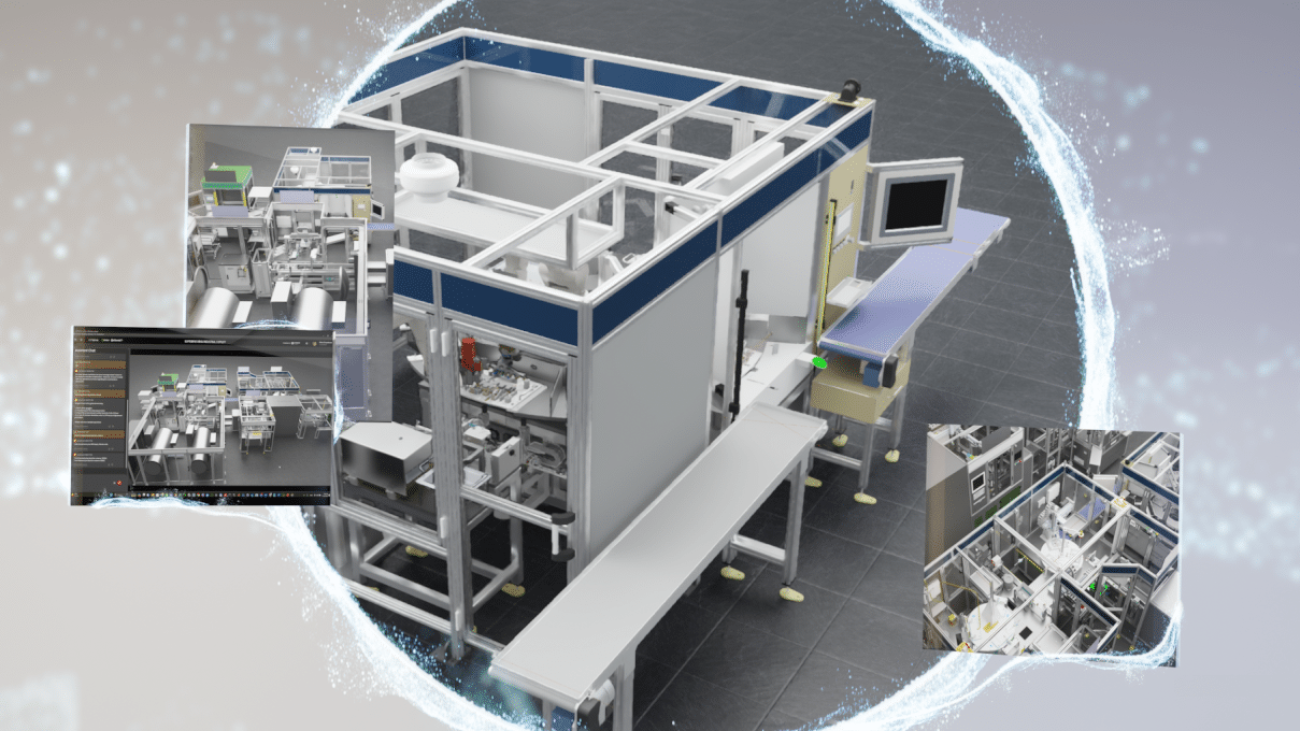

Into the Omniverse: SoftServe and Continental Drive Digitalization With OpenUSD and Generative AI

Editor’s note: This post is part of Into the Omniverse, a series focused on how artists, developers and enterprises can transform their workflows using the latest advances in OpenUSD and NVIDIA Omniverse.

Industrial digitalization is driving automotive innovation.

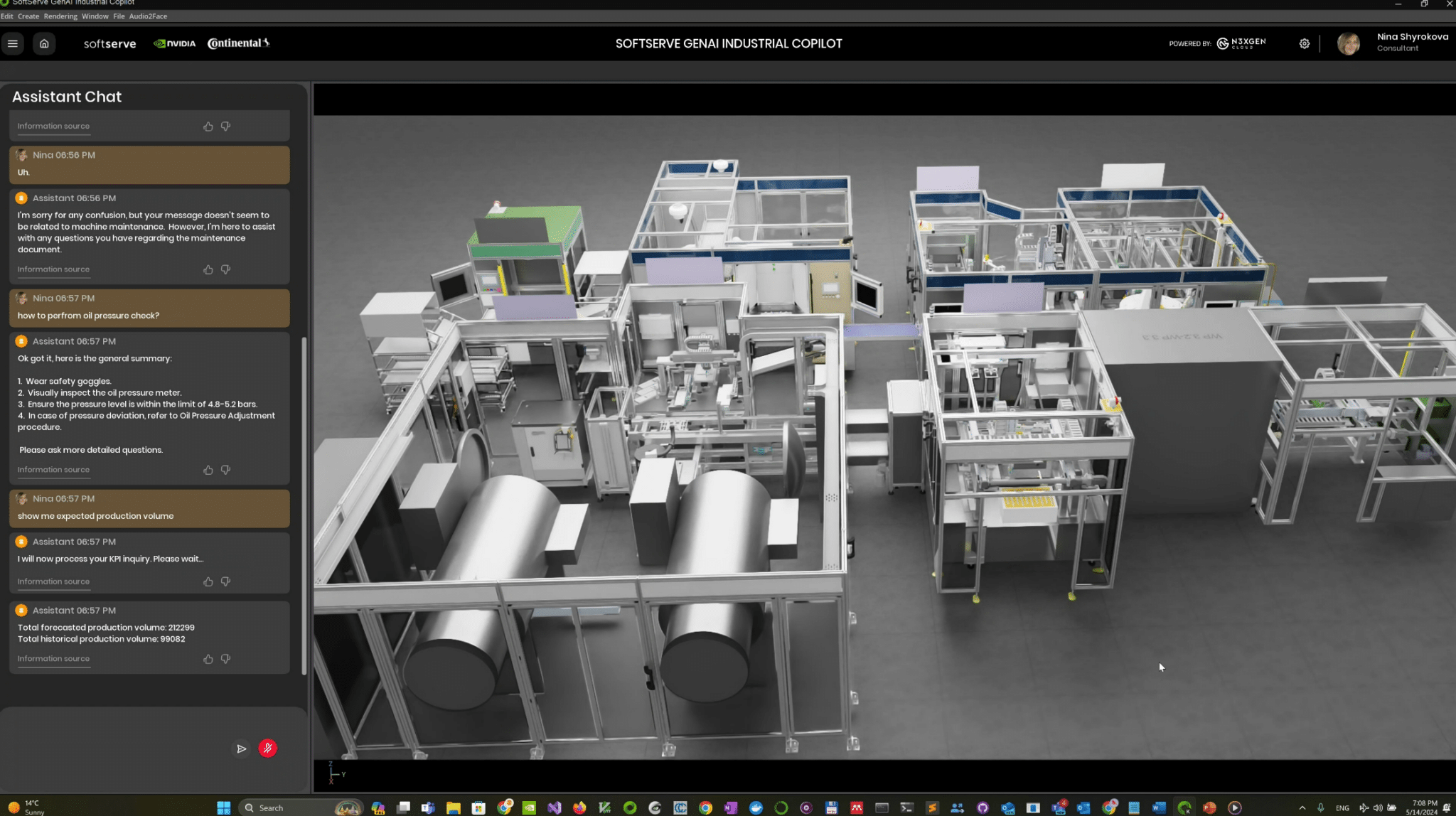

In response to the industry’s growing demand for seamless, connected driving experiences, SoftServe, a leading IT consulting and digital services provider, worked with Continental, a leading German automotive technology company, to develop Industrial Co-Pilot, a virtual agent powered by generative AI that enables engineers to streamline maintenance workflows.

SoftServe helps manufacturers like Continental to further optimize their operations by integrating the Universal Scene Description, or OpenUSD, framework into virtual factory solutions — such as Industrial Co-Pilot — developed on the NVIDIA Omniverse platform.

OpenUSD offers the flexibility and extensibility organizations need to harness the full potential of digital transformation, streamlining operations and driving efficiency. Omniverse is a platform of application programming interfaces, software development kits and services that enable developers to easily integrate OpenUSD and NVIDIA RTX rendering technologies into existing software tools and simulation workflows.

Realizing the Benefits of OpenUSD

SoftServe and Continental’s Industrial Co-Pilot brings together generative AI and immersive 3D visualization to help factory teams increase productivity during equipment and production line maintenance. With the copilot, engineers can oversee production lines and monitor the performance of individual stations or the shop floor.

They can also interact with the copilot to conduct root cause analysis and receive step-by-step work instructions and recommendations, leading to reduced documentation processes and improved maintenance procedures. It’s expected that these advancements will contribute to increased productivity and a 10% reduction in maintenance effort and downtime.

In a recent Omniverse community livestream, Benjamin Huber, who leads advanced automation and digitalization in the user experience business area at Continental, highlighted the significance of the company’s collaboration with SoftServe and its adoption of Omniverse.

The Omniverse platform equips Continental and SoftServe developers with the tools needed to build a new era of AI-enabled industrial applications and services. And by breaking down data silos and fostering multi-platform cooperation with OpenUSD, SoftServe and Continental developers enable engineers to work seamlessly across disciplines and systems, driving efficiency and innovation throughout their processes.

“Any engineer, no matter what tool they’re working with, can transform their data into OpenUSD and then interchange data from one discipline to another, and from one tool to another,” said Huber.

This sentiment was echoed by Vasyl Boliuk, senior lead and test automation engineer at SoftServe, who shared how OpenUSD and Omniverse — along with other NVIDIA technologies like NVIDIA Riva, NVIDIA NeMo and NVIDIA NIM microservices — enabled SoftServe and Continental teams to develop custom large language models and connect them to new 3D workflows.

“OpenUSD allows us to add any attribute or any piece of metadata we want to our applications,” he said.

Boliuk, Huber and other SoftServe and Continental representatives joined the livestream to share more about the potential unlocked by these OpenUSD-powered solutions.

By embracing cutting-edge technologies and fostering collaboration, SoftServe and Continental are helping reshape automotive manufacturing.

Get Plugged Into the World of OpenUSD

Watch SoftServe and Continental’s on-demand NVIDIA GTC talks to learn more about their virtual factory solutions and experience developing on NVIDIA Omniverse with OpenUSD:

- Transforming Factory Planning and Manufacturing Operations Within Digital Mega Plants

- Getting Started With Generative AI and OpenUSD for Industrial Metaverse Applications

Learn about the latest technologies driving the next industrial revolution by watching NVIDIA founder and CEO Jensen Huang’s COMPUTEX keynote on Sunday, June 2, at 7 p.m. Taiwan time.

Check out a new video series about how OpenUSD can improve 3D workflows. For more resources on OpenUSD, explore the Alliance for OpenUSD forum and visit the AOUSD website.

Get started with NVIDIA Omniverse by downloading the standard license for free, accessing OpenUSD resources and learning how Omniverse Enterprise can connect teams. Follow Omniverse on Instagram, Medium and X. For more, join the Omniverse community on the forums, Discord server, Twitch and YouTube channels.

Featured image courtesy of SoftServe.

Senua’s Story Continues: GeForce NOW Brings ‘Senua’s Saga: Hellblade II’ to the Cloud

Every week, GFN Thursday brings new games to the cloud, featuring some of the latest and greatest titles for members to play.

Leading the seven games joining GeForce NOW this week is the newest game in Ninja Theory’s Hellblade franchise, Senua’s Saga: Hellblade II. This day-and-date release expands the cloud gaming platform’s extensive library of over 1,900 games.

Members can also look forward to a new reward — a free in-game mount — for The Elder Scrolls Online starting Thursday, May 30. Get ready by opting into GeForce NOW’s Rewards program.

Senua Returns

In Senua’s Saga: Hellblade II, the sequel to the award-winning Hellblade: Senua’s Sacrifice, Senua returns in a brutal journey of survival through the myth and torment of Viking Iceland.

Intent on saving those who’ve fallen victim to the horrors of tyranny, Senua battles the forces of darkness within and without. Sink deep into the next chapter of Senua’s story, a crafted experience told through cinematic immersion, beautifully realized visuals and encapsulating sound.

Priority and Ultimate members can fully immerse themselves in Senua’s story with epic cinematic gameplay at higher resolutions and frame rates than free members. Ultimate members can stream at up to 4K 120 frames per second with exclusive access to GeForce RTX 4080 SuperPODs in the cloud, even on underpowered devices.

Level Up With New Games

Check out the full list of new games this week:

- Synergy (New release on Steam, May 21)

- Senua’s Saga: Hellblade II (New release on Steam and Xbox, available on PC Game Pass, May 21)

- Crown Wars: The Black Prince (New release on Steam, May 23)

- Serum (New release on Steam, May 23)

- Ships at Sea (New release on Steam, May 23)

- Exo One (Steam)

- Phantom Brigade (Steam)

What are you planning to play this weekend? Let us know on X or in the comments below.

“What’s a life lesson you learned from a game?” NVIDIA GeForce NOW (@NVIDIAGFN), May 22, 2024

Ideas: Designing AI for people with Abigail Sellen

Behind every emerging technology is a great idea propelling it forward. In the new Microsoft Research Podcast series, Ideas, members of the research community at Microsoft discuss the beliefs that animate their research, the experiences and thinkers that inform it, and the positive human impact it targets.

In this episode, host Gretchen Huizinga talks with Distinguished Scientist and Lab Director Abigail Sellen. The idea that computers could be designed for people is commonplace today, but when Sellen was pursuing an advanced degree in psychology, it was a novel one that set her on course for a career in human-centric computing. Today, Sellen and the teams she oversees are studying how AI could—and should—be designed for people, focusing on helping to ensure new developments support people in growing the skills and qualities they value. Sellen explores those efforts through the AI, Cognition, and the Economy initiative—or AICE, for short—a collective of interdisciplinary scientists examining the short- and long-term effects of generative AI on human cognition, organizational structures, and the economy.

Learn more:

- AI, Cognition, and the Economy (AICE) (initiative page)

- Responsible AI Principles and Approach | Microsoft AI

- The Rise of the AI Co-Pilot: Lessons for Design from Aviation and Beyond (publication, 2023)

- The Myth of the Paperless Office (book, 2003)

Transcript

[SPOT] GRETCHEN HUIZINGA: Hey, listeners. It’s host Gretchen Huizinga. Microsoft Research podcasts are known for bringing you stories about the latest in technology research and the scientists behind it. But if you want to dive even deeper, I encourage you to attend Microsoft Research Forum. Each episode is a series of talks and panels exploring recent advances in research, bold new ideas, and important discussions with the global research community in the era of general AI. The next episode is coming up on June 4, and you can register now at aka.ms/MyResearchForum. Now, here’s today’s show.

[END OF SPOT] [TEASER] [MUSIC PLAYS UNDER DIALOGUE] ABIGAIL SELLEN: I’m not saying that we shouldn’t take concerns seriously about AI or be hugely optimistic about the opportunities, but rather, my view on this is that we can do research to get, kind of, line of sight into the future and what is going to happen with AI. And more than this, we should be using research to not just get line of sight but to steer the future, right. We can actually help to shape it. And especially being at Microsoft, we have a chance to do that.

[TEASER ENDS] GRETCHEN HUIZINGA: You’re listening to Ideas, a Microsoft Research Podcast that dives deep into the world of technology research and the profound questions behind the code. I’m Dr. Gretchen Huizinga. In this series, we’ll explore the technologies that are shaping our future and the big ideas that propel them forward.

[MUSIC FADES]

My guest on this episode is Abigail Sellen, known by her friends and colleagues as Abi. A social scientist by training and an expert in human-computer interaction, Abi has a long list of accomplishments and honors, and she’s a fellow of many technical academies and societies. But today I’m talking to her in her role as distinguished scientist and lab director of Microsoft Research Cambridge, UK, where she oversees a diverse portfolio of research, some of which supports a new initiative centered around the big idea of AI, Cognition, and the Economy, also known as AICE. Abi Sellen. I’m so excited to talk to you today. Welcome to Ideas!

ABIGAIL SELLEN: Thanks! Me, too.

HUIZINGA: So before we get into an overview of the ideas behind AICE research, let’s talk about the big ideas behind you. Tell us your own research origin story, as it were, and if there was one, what big idea or animating “what if?” captured your imagination and inspired you to do what you’re doing today?

SELLEN: OK, well, you’re asking me to go back in the mists of time a little bit, but let me try. [LAUGHTER] So I would say, going … this goes back to my time when I started doing my PhD at UC San Diego. So I had just graduated as a psychologist from the University of Toronto, and I was going to go off and do a PhD in psychology with a guy called Don Norman. So back then, I really had very little interest in computers. And in fact, computers weren’t really a thing that normal people used. [LAUGHTER] They were things that you might, like, put punch cards into. Or, in fact, in my undergrad days, I actually programmed in hexadecimal, and it was horrible. But at UCSD, they were using computers everywhere, and it was, kind of, central to how everyone worked. And we even had email back then. So computers weren’t really for personal use, and it was clear that they were designed for engineers by engineers. And so they were horrible to use, people grappling with them, people were making mistakes. You could easily remove all your files just by doing rm *. So the big idea that was going around the lab at the time—and this was by a bunch of psychologists, not just Don, but other ones—was that we could design computers for people, for people to use, and take into account, you know, how people act and interact with things and what they want. And that was a radical idea at the time. And that was the start of this field called human-computer interaction, which is … you know, now we talk about designing computers for people and “user-friendly” and that’s a, kind of, like, normal thing, but back then …

HUIZINGA: Yeah …

SELLEN: … it was a radical idea. And so, to me, that changed everything for me to think about how we could design technology for people. And then, if I can, I’ll talk about one other thing that happened …

HUIZINGA: Yeah, please.

SELLEN: … during that time. So at that time, there was another gang of psychologists, people like Dave Rumelhart, Geoff Hinton, Jay McClelland, people like that, who were thinking about, how do we model human intelligence—learning, memory, cognition—using computers? And so these were psychologists thinking about, how do people represent ideas and knowledge, and how can we do that with computers?

HUIZINGA: Yeah …

SELLEN: And this was radical at the time because cognitive psychologists back then were thinking about … they did lots of, kind of, flow chart models of human cognition. And people like Dave Rumelhart did networks, neural networks, …

HUIZINGA: Ooh …

SELLEN: … and they were using what were then called spreading activation models of memory and things, which came from psychology. And that’s interesting because not only were they modeling human cognition in this, kind of, what they called parallel distributed processing, but they operationalized it. And that’s where Hinton and others came up with the back-propagation algorithm, and that was a huge leap forward in AI. So psychologists were actually directly responsible for the wave of AI we see today. A lot of computer scientists don’t know that. A lot of machine learning people don’t know that. But so, for me, long story short, that time in my life and doing my PhD at UC San Diego led to me understanding that social science, psychology in particular, and computing should be seen as things which mutually support one another and that can lead to huge breakthroughs in how we design computers and computer algorithms and how we do computing. So that, kind of, set the path for the rest of my career. And that was 40 years ago!

HUIZINGA: Did you have what we’ll call metacognition of that being an aha moment for you, and like, I’m going to embrace this, and this is my path forward? Or was it just, sort of, more iterative: these things interest you, you take the next step, these things interest you more, you take that step?

SELLEN: I think it was an aha moment at certain points. Like, for example, the day that Francis Crick walked into our seminar and started talking about biologically inspired models of computing, I thought, “Ooh, there’s something big going on here!”

HUIZINGA: Wow, yeah.

SELLEN: Because even then I knew that he was a big deal. So I knew there was something happening that was really, really interesting. I didn’t think so much about it from the point of view of, you know, I would have a career of helping to design human-centric computing, but more, wow, there’s a breakthrough in psychology and how we understand the human mind. And I didn’t realize at that time that that was going to lead to what’s happening in AI today.

HUIZINGA: Well, let’s talk about some of these people that were influential for you as a follow-up to the animating “big idea.” If I’m honest, Abi, my jaw dropped a little when I read your bio because it’s like a who’s who of human-centered computing and UX design. And now these people are famous. Maybe they weren’t so much at the time. But tell us about the influential people in your life, and how their ideas inspired you?

SELLEN: Yeah, sure, happy to. In fact, I’ll start with one person who is not a, sort of, HCI person, but my stepfather, John Senders, was this remarkable human being. He died three years ago at the age of 98. He worked almost to his dying day. Just an amazing man. He entered my life when I was about 13. He joined the family. And he went to Harvard. He trained with people like Skinner. He was taught by these, kind of, famous psychologists of the 20th century, and they were his friends and his colleagues, and he introduced me to a lot of them. You know, people like Danny Kahneman and, you know, Amos Tversky and Alan Baddeley, and all these people that, you know, I had learned about as an undergrad. But the main thing that John did for me was to open my eyes to how you could think about modeling humans as machines. And he really believed that. He was not only a psychologist, but he was an engineer. And he, sort of, kicked off or he was one of the founders of the field of human factors engineering. And that’s what human factors engineers do. They look at people, and they think, how can we mathematically model them? So, you know, we’d be sitting by a pool, and he’d say, “You can use information sampling to model the frequency with which somebody has to watch a baby as they go towards the pool. And it depends on their velocity and then their trajectory… !” [LAUGHTER] Or we go into a bank, and he’d say, “Abi, how would you use queuing theory to, you know, estimate the mean wait time?” Like, you know, so he got me thinking like that, and he recognized in me that I had this curiosity about the world and about people, but also, that I loved mathematics. So he was the first guy. Don Norman, I’ve already mentioned as my PhD supervisor, and I’ve said something about already how he, sort of, had this radical idea about designing computers for people. And I was fortunate to be there when the field of human-computer interaction was being born, and that was mainly down to him. 
And he’s just [an] incredible guy. He’s still going. He’s still working, consulting, and he wrote this famous book called The Psychology of Everyday Things, which now is, I think it’s been renamed The Design of Everyday Things, and he was really influential and been a huge supporter of mine. And then the third person I’ll mention is Bill Buxton. And …

HUIZINGA: Yeah …

SELLEN: Bill, Bill …

HUIZINGA: Bill, Bill, Bill! [LAUGHTER]

SELLEN: Yeah. I met Bill at, first, well, actually first at University of Toronto; when I was a grad student, I went up and told him his … the experiment he was describing was badly designed. And instead of, you know, brushing me off, he said, “Oh really, OK, I want to talk to you about that.” And then I met him at Apple later when I was an intern, and we just started working together. And he is, he’s just … amazing designer. Everything he does is based on, kind of, theory and deep thought. And he’s just so much fun. So I would say those three people have been big influences on me.

HUIZINGA: Yeah. What about Marilyn Tremaine? Was she a factor in what you did?

SELLEN: Yes, yeah, she was great. And Ron Baecker. So…

HUIZINGA: Yeah …

SELLEN: … after I did my PhD, I did a postdoc at Toronto in the Dynamic Graphics Project Lab. And they were building a media space, and they asked me to join them. And Marilyn and Ron and Bill were building this video telepresence media space, which was way ahead of its time.

HUIZINGA: Yeah.

SELLEN: So I worked with all three of them, and they were great fun.

HUIZINGA: Well, let’s talk about the research initiative AI, Cognition, and the Economy. For context, this is a global, interdisciplinary effort to explore the impact of generative AI on human cognition and thinking, work dynamics and practices, and labor markets and the economy. Now, we’ve already lined up some AICE researchers to come on the podcast and talk about specific projects, including pilot studies, workshops, and extended collaborations, but I’d like you to act as a, sort of, docent or tour guide for the initiative, writ large, and tell us why, particularly now, you think it’s important to bring this group of scientists together and what you hope to accomplish.

SELLEN: I think it’s important now because I think there are so many extreme views out there about how AI is going to impact people. A lot of hyperbole, right. So there’s a lot of fear about, you know, jobs going away, people being replaced, robots taking over the world. And there’s a lot of enthusiasm about how, you know, we’re all going to be more productive, have more free time, how it’s going to be the answer to all our problems. And so I think there are people at either end of that conversation. And I always … I love the Helen Fielding quote … I don’t know if you know Helen Fielding. She wrote…

HUIZINGA: Yeah, Bridget Jones’s Diary …

SELLEN: … Bridget Jones’s Diary. Yeah. [LAUGHTER] She says, “Nothing is either as bad or as good as it seems,” right. And I live by that because I think things are usually somewhere in the middle. So I’m not saying that we shouldn’t take concerns seriously about AI or be hugely optimistic about the opportunities, but rather, my view on this is that we can do research to get, kind of, line of sight into the future and what is going to happen with AI. And more than this, we should be using research to not just get line of sight but to steer the future, right. We can actually help to shape it. And especially being at Microsoft, we have a chance to do that. So what I mean here is that let’s begin by understanding first the capabilities of AI and get a good understanding of where it’s heading and the pace that it’s heading at because it’s changing so fast, right.

HUIZINGA: Mm-hmm …

SELLEN: And then let’s do some research looking at the impact, both in the short term and the long term, about its impact on tasks, on interaction, and, most importantly for me anyway, on people. Yeah, and then we can extrapolate out how this is going to impact jobs, skills, organizations, society at large, you know. So we get this, kind of, arc that we can trace, but we do it because we do research. We don’t just rely on the hyperbole and speculation, but we actually try and do it more systematically. And then I think the last piece here is that if we’re going to do this well and if we think about what AI’s impact can be, which we think is going to impact on a global scale, we need many different skills and disciplines. We need not just machine learning people and engineering and computer scientists at large, but we need designers, we need social scientists, we need even philosophers, and we need domain experts, right. So we need to bring all of these people together to do this properly.

HUIZINGA: Interesting. Well, let’s do break it down a little bit then. And I want to ask you a couple questions about each of the disciplines within the acronym A-I-C-E, or AICE. And I’ll start with AI and another author that we can refer to. Sci-fi author and futurist Arthur C. Clarke famously said that “any sufficiently advanced technology is indistinguishable from magic,” and for many people, AI systems seem to be magic. So in response to that, many in the industry have emphatically stated that AI is just a tool. But you’ve said things like AI is more a “collaborative copilot than a mere tool,” and recently, you said we might even think of it as a “very smart and intuitive butler.” So how do those ideas from the airline industry and Downton Abbey help us better understand and position AI and its role in our world?

SELLEN: Well, I’m going to use Wodehouse here in a minute as well, but um … so I think AI is different from many other tech developments in a number of important ways. One is, it has agency, right. So it can take initiative and do things on your behalf. It’s highly complex, and, you know, it’s getting more complex by the day. It changes. It’s dynamic. It’s probabilistic rather than deterministic, so it will give you different answers depending on when, you know, when you ask it and what you ask it. And it’s based on human-generated data. So it’s a vastly different kind of tool than HCI, as a field, has studied in the past. There are lots of downsides to that, right. One is it means it’s very hard to understand how it works under the hood, right …

HUIZINGA: Yeah …

SELLEN: … and understanding the output. It’s fraught with uncertainty because the output changes every time you use it. But then let’s think about the upsides, especially, large language models give us a way of conversationally interacting with AI like never before, right. So it really is a new interaction paradigm which has finally come of age. So I do think it’s going to get more personal over time and more anticipatory of our needs. And if we design it right, it can be like the perfect butler. So if you know P.G. Wodehouse, Jeeves and Wooster, you know, Jeeves knows that Bertie has had a rough night and has a hangover, so he’s there at the bedside with a tonic and a warm bath already ready for him. But he also knows what Wooster enjoys and what decisions should be left to him, and he knows when to get out of the way. He also knows when to be very discreet, right. So when I use that butler metaphor, I think about how it’s going to take time to get this right, but eventually, we may live in a world where AI helps us with good attention to privacy of getting that kind of partnership right between Jeeves and Wooster.

HUIZINGA: Right. Do you think that’s possible?

SELLEN: I don’t think we’ll ever get it exactly right, but if we have a conversational system where we can mutually shape the interaction, then even if Jeeves doesn’t get things right, Wooster can train him to do a better job.

HUIZINGA: Go back to the copilot analogy, which is a huge thing at Microsoft — in fact, they’ve got products named Copilot — and the idea of a copilot, which is, sort of, assuaging our fears that it would be the pilot …

SELLEN: Yeah …

HUIZINGA: … AI.

SELLEN: Yeah, yeah.

HUIZINGA: So how do we envision that in a way that … you say it’s more than a mere tool, but it’s more like a copilot?

SELLEN: Yeah, I actually like the copilot metaphor for what you’re alluding to, which is that the pilot is the one who has the final say, who has the, kind of, oversight of everything that’s happening and can step in. And also that the copilot is there in a supportive role, who kind of trains by dint of the fact that they work next to the pilot, and that they have, you know, specialist skills that can help.

HUIZINGA: Right …

SELLEN: So I really like that metaphor. I think there are other metaphors that we will explore in future and which will make sense for different contexts, but I think, as a metaphor for a lot of the things we’re developing today, it makes a lot of sense.

HUIZINGA: You know, it also feels like, in the conversation, words really matter in how people perceive what the tool is. So having these other frameworks to describe it and to implement it, I think, could be really helpful.

SELLEN: Yes, I agree.

[MUSIC BREAK] HUIZINGA: Well, let’s talk about intelligence for a second. One of the most interesting things about AI is it’s caused us to pay attention to other kinds of intelligence. As author Meghan O’Gieblyn puts it, “God, human, animal, machine … ” So why do you think, Abi, it’s important to understand the characteristics of each kind of intelligence, and how does that impact how we conceptualize, make, and use what we’re calling artificial intelligence?

SELLEN: Yeah, well, I actually prefer the term machine intelligence to artificial intelligence …

HUIZINGA: Me too! Thank you! [LAUGHTER]

SELLEN: Because the latter implies that there’s one kind of intelligence, and also, it does allude to the fact that that is human-like. You know, we’re trying to imitate the human. But if you think about animals, I think that’s really interesting. I mean, many of us have good relationships with our pets, right. And we know that they have a different kind of intelligence. And it’s different from ours, but that doesn’t mean we can’t understand it to some extent, right. And if you think about … animals are superhuman in many ways, right. They can do things we can’t. So whether it’s an ox pulling a plow or a dog who can sniff out drugs or ferrets who can, you know, thread electrical cables through pipes, they can do things. And bee colonies are fascinating to me, right. And they work as a, kind of, a crowd intelligence, or hive mind, right. [LAUGHTER] That’s where that comes from. And so in so many ways, animals are smarter than humans. But it doesn’t matter—like this “smarter than” thing also bugs me. It’s about being differently intelligent, right. And the reason I think that’s important when we think about machine intelligence is that machine intelligence is differently intelligent, as well. So the conversational interface allows us to explore the nature of that machine intelligence because we can speak to it in a kind of human-like way, but that doesn’t mean that it is intelligent in the same way a human is intelligent. And in fact, we don’t really want it to be, right.

HUIZINGA: Right …

SELLEN: Because we want it, we want it to be a partner with us, to do things that we can’t, you know, just like using the plow and the ox. That partnership works because the ox is stronger than we are. So I think machine intelligence is a much better word, and understanding it’s not human is a good thing. I do worry that, because it sounds like a human, it can seduce us into thinking it’s a human …

HUIZINGA: Yeah …

SELLEN: … and that can be problematic. You know, there are instances where people have been on, for example, dating sites and a bot is sounding like a human and people get fooled. So I think we don’t want to go down the path of fooling people. We want to be really careful about that.

HUIZINGA: Yeah, this idea of conflating different kinds of intelligences to our own … I think we can have a separate vision of animal intelligence, but machines are, like you say, kind of seductively built to be like us.

SELLEN: Yeah …

HUIZINGA: And so back to your comment about shaping how this technology moves forward and the psychology of it, how might we envision how we could shape, either through language or the way these machines operate, that we build in a “I’m not going to fool you” mechanism?

SELLEN: Well, I mean, there are things that we do at the, kind of, technical level in terms of guardrails and metaprompts, and we have guidelines around that. But there’s also the language that an AI character will use in terms of, you know, expressing thoughts and feelings and some suggestion of an inner life, which … these machines don’t have an inner life, right.

HUIZINGA: Right!

SELLEN: So … and one of the reasons we talk to people is we want to discover something about their inner life.

HUIZINGA: Yessss …

SELLEN: And so why would I talk to a machine to try and discover that? So I think there are things that we can do in terms of how we design these systems so that they’re not trying to deceive us. Unless we want them to deceive us. So if we want to be entertained or immersed, maybe that’s a good thing, right? That they deceive us. But we enter into that knowing that that’s what’s happening, and I think that’s the difference.

HUIZINGA: Well, let’s talk about the C in A-I-C-E, which is cognition. And we’ve just talked about other kinds of intelligence. Let’s broaden the conversation and talk about the impact of AI on humans themselves. Is there any evidence to indicate that machine intelligence actually has an impact on human intelligence, and if so, why is that an important data point?

SELLEN: Yeah, OK, great topic. This is one of my favorite topics. [LAUGHTER] So, well, let me just backtrack a little bit for a minute. A lot of the work that’s coming out today looking at the impact of AI on people is in terms of their productivity, in terms of how fast they can do something, how efficiently they can do a job, or the quality of the output of the tasks. And I do think that’s important to understand because, you know, as we deploy these new tools in peoples’ hands, we want to know what’s happening in terms of, you know, peoples’ productivity, workflow, and so on. But there’s far less of it on looking at the impact of using AI on people themselves and on how people think, on their cognitive processes, and how are these changing over time? Are they growing? Are they atrophying as they use them? And, relatedly, what’s happening to our skills? You know, over time, what’s going to be valued, and what’s going to drop away? And I think that’s important for all kinds of reasons. So if you think about generative AI, right, these are these AI systems that will write something for us or make a slide deck or a picture or a video. What they’re doing is they are taking the cognitive work of generation of an artifact or the effort of self-expression that most of us, in the old-fashioned world, will do, right—we write something, we make something—they’re doing that for us on our behalf. And so our job then is to think about how do we specify our intention to the machine, how do we talk to it to get it to do the things we want, and then how do we evaluate the output at the end? So it’s really radically shifting what we do, the work that we do, the cognitive and mental work that we do, when we engage with these tools. Now why is that a problem? Or should it be a problem? One concern is that many of us think and structure our thoughts through the process of making things, right. Through the process of writing or making something. 
So a big question for me is, if we’re removed from that process, how deeply will we learn or understand what we’re writing about? A second one is, you know, if we’re not deeply engaged in the process of generating these things, does that actually undermine our ability to evaluate the output when we do get presented with it?

HUIZINGA: Right …

SELLEN: Like, if it writes something for us and it’s full of problems and errors, if we stop writing for ourselves, are we going to be worse at, kind of, judging the output? Another one is, as we hand things over to more and more of these automated processes, will we start to blindly accept or over-rely on our AI assistants, right. And the aviation industry has known that for years …

HUIZINGA: Yeah …

SELLEN: … which is why they stick pilots in simulators. Because they rely on autopilot so much that they forget those key skills. And then another one is, kind of, longer term, which is like these new generations of people who are going to grow up with this technology, what are the fundamental skills that they’re going to need to not just to use the AI but to be kind of citizens of the world and also be able to judge the output of these AI systems? So the calculator, right, is a great example. When it was first introduced, there was a huge outcry around, you know, kids won’t be able to do math anymore! Or we don’t need to teach it anymore. Well, we do still teach it because when you use a calculator, you need to be able to see whether or not the output the machine is giving you is in the right ballpark, right.

HUIZINGA: Right …

SELLEN: You need to know the basics. And so what are the basics that kids are going to need to know? We just don’t have the answer to those questions. And then the last thing I’ll say on this, because I could go on for a long time, is we also know that there are changes in the brain when we use these new technologies. There are shifts in our cognitive skills, you know, things get better and things do deteriorate. So I think Susan Greenfield is famous for her work looking at what happens to the neural pathways in the age of the internet, for example. So she found that all the studies were pointing to the fact that reading online and on the internet meant that our visual-spatial skills were being boosted, but our capacity to do deep processing, mindful knowledge acquisition, critical thinking, reflection, were all decreasing over time. And I think any parent who has a teenager will know that focus of attention, flitting from one thing to another, multitasking, is, sort of, the order of the day. Well, not just for teenagers. I think all of us are suffering from this now. It’s much harder. I find it much harder to sit down and read something in a long, focused way …

HUIZINGA: Yeah …

SELLEN: … than I used to. So all of this long-winded answer is to say, we don’t understand what the impact of these new AI systems is going to be. We need to do research to understand it. And we need to do that research both looking at short-term impacts and long-term impacts. Not to say that this is all going to be bad, but we need to understand where it’s going so we can design around it.

HUIZINGA: You know, even as you asked each of those questions, Abi, I found myself answering it preemptively, “Yes. That’s going to happen. That’s going to happen.” [LAUGHS] And so even as you say all of this and you say we need research, do you already have some thinking about, you know, if research tells us the answer that we thought might be true already, do we have a plan in place or a thought process in place to address it?

SELLEN: Well, yes, and I think we’ve got some really exciting research going on in the company right now and in the AICE program, and I’m hoping your future guests will be able to talk more in-depth about these things. But we are looking at things like the impact of AI on writing, on comprehension, on mathematical abilities. But more than that. Not just understanding the impact on these skills and abilities, but how can we design systems better to help people think better, right?

HUIZINGA: Yeah …

SELLEN: To help them think more deeply, more creatively. I don’t think AI needs to necessarily de-skill us in the critical skills that we want and need. It can actually help us if we design them properly. And so that’s the other part of what we’re doing. It’s not just understanding the impact, but now saying, OK, now that we understand what’s going on, how do we design these systems better to help people deepen their skills, change the way that they think in ways that they want to change—in being more creative, thinking more deeply, you know, reading in different ways, understanding the world in different ways.

HUIZINGA: Right. Well, that is a brilliant segue into my next question. Because we’re on the last letter, E, in AICE: the economy. And that I think instills a lot of fear in people. To cite another author, since we’re on a citing authors roll, Clay Shirky, in his book Here Comes Everybody, writes about technical revolutions in general and the impact they have on existing economic paradigms. And he says, “Real revolutions don’t involve an orderly transition from point A to point B. Rather, they go from A, through a long period of chaos, and only then reach B. And in that chaotic period the old systems get broken long before the new ones become stable.” Let’s take Shirky’s idea and apply it to generative AI. If B equals the future of work, what’s getting broken in the period of transition from how things were to how things are going to be, what do we have to look forward to, and how do we progress toward B in a way that minimizes chaos?

SELLEN: Hmm … oh, those are big questions! [LAUGHS]

HUIZINGA: Too many questions! [LAUGHS]

SELLEN: Yeah, well, I mean, Shirky was right. Things take a long time to bed in, right. And much of what happens over time, I don’t think we can actually predict. You know, so who would have predicted echo chambers or the rise of deepfakes or, you know, the way social media could start revolutions in those early days of social media, right. So good and bad things happen, and a lot of it’s because it rolls out over time, it scales up, and then people get involved. And that’s the really unpredictable bit, is when people get involved en masse. I think we’re going to see the same thing with AI systems. They are going to take a long time to bed in, and its impact is going to be global, and it’s going to take a long time to unfold. So I think what we can do is, to some extent, we can see the glimmerings of what’s going to happen, right. So I think it’s the William Gibson quote is, you know, “The future’s already here; it’s just not evenly distributed,” or something like that, right. We can see some of the problems that are playing out, both in the hands of bad actors and things that will happen unintentionally. We can see those, and we can design for them, and we can do things about it because we are alert and we are looking to see what happens. And also, the good things, right. And all the good things that are playing out, …

HUIZINGA: Yeah …

SELLEN: … we can make the most of those. Other things we can do is, you know, at Microsoft, we have a set of responsible AI principles that we make sure all our products go through to make sure that we look into the future as much as we can, consider what the consequences might be, and then deploy things in very careful steps, evaluating as we go. And then, coming back to what I said earlier, doing deep research to try and get a better line of sight. So in terms of what’s going to happen with the future of work, I think, again, we need to steer it. Some of the things I talked about earlier in terms of making sure we build skills rather than undermine them, making sure we don’t over automate, making sure that we put agency in the hands of people. And always making sure that we design our AI experiences with human hope, aspirations, and needs in mind. If we do that, I think we’re on a good track, but we should always be vigilant, you know, to what’s evolving, what’s happening here.

HUIZINGA: Yeah …

SELLEN: I can’t really predict whether we’re headed for chaos or not. I don’t think we are, as long as we’re mindful.

HUIZINGA: Yeah. And it sounds like there’s a lot more involved outside of computer science, in terms of support systems and education and communication, to acclimatize people to a new kind of economy, which like you say, you can’t … I’m shocked that you can’t predict it, Abi. I was expecting that you could, but … [LAUGHTER]

SELLEN: Sorry.

HUIZINGA: Sorry! But yeah, I mean, do you see the ancillary industries, we’ll call them, in on this? And how can, you know, sort of, a lab in Cambridge, and labs around the world that are doing AI, how can they spread out to incorporate these other things to help the people who know nothing about what’s going on in your lab move forward here?

SELLEN: Well, I think, you know, there are lots of people that we need to talk to and to take account of. The word stakeholder … I hate that word stakeholder! I’m not sure why. [LAUGHTER] But anyway, stakeholders in this whole AI odyssey that we’re on … you know, public perceptions are one thing. I’m a member of a lot of societies where we do a lot of outreach and talks about AI and what’s going on, and I think that’s really, really important. And get people excited also about the possibilities of what could happen.

HUIZINGA: Yeah …

SELLEN: Because I think a lot of the media, a lot of the stories that get out there are very dystopian and scary, and it’s right that we are concerned and we are alert to possibilities, but I don’t think it does anybody any good to make people scared or anxious. And so I think there’s a lot we can do with the public. And there’s a lot we can do with, when I think about the future of work, different domains, you know, and talking to them about their needs and how they see AI fitting into their particular work processes.

HUIZINGA: So, Abi, we’re kind of [LAUGHS] dancing around these dystopian narratives, and whether they’re right or wrong, they have gained traction. So it’s about now that I ask all of my guests what could go wrong if you got everything right? So maybe you could present, in this area, some more hopeful, we’ll call them “-topias,” or preferred futures, if you will, around AI and how you and/or your lab and other people in the industry are preparing for them.

SELLEN: Well, again, I come back to the idea that the future is all around us to some extent, and we’re seeing really amazing breakthroughs, right, with AI. For example, scientific breakthroughs in terms of, you know, drug discovery, new materials to help tackle climate change, all kinds of things that are going to help us tackle some of the world’s biggest problems. Better understandings of the natural world, right, and how interventions can help us. New tools in the hands of low-literacy populations and support for, you know, different ways of working in different cultures. I think that’s another big area in which AI can help us. Personalization—personalized medicine, personalized tutoring systems, right. So we talked about education earlier. I think that AI could do a lot if we design it right to really help in education and help support people’s learning processes. So I think there’s a lot here, and there’s a lot of excitement—with good reason. Because we’re already seeing these things happening. And we should bear those things in mind when we start to get anxious about AI. And I personally am really, really excited about it. I’m excited about, you know, what the company I work for is doing in this area and other companies around the world. I think that it’s really going to help us in the long term, build new skills, see the world in new ways, you know, tackle some of these big problems.

HUIZINGA: I recently saw an ad—I’m not making this up—it was the quote-unquote “productivity app,” and it was simply a small wooden box filled with pieces of paper. And there was a young man who had a how-to video on how to use it on YouTube. [LAUGHS] He was clearly born into the digital age and found writing lists on paper to be a revolutionary idea. But I myself have toggled back and forth between what we’ll call the affordances of the digital world and the familiarity and comfort of the physical world. And you actually studied this and wrote about it in a book called The Myth of the Paperless Office. That was 20 years ago. Why did you do the work then, what’s changed in the ensuing years, and why in the age of AI do I love paper so much?

SELLEN: Yeah, so, that was quite a while ago now. It was a book that I cowrote with my husband. He’s a sociologist, so we, sort of, came together on that book, me as a psychologist and he as a sociologist. What we were responding to at the time was a lot of hype about the paperless office and the paperless future. At the time, I was working at EuroPARC, you know, which is the European sister lab of Xerox PARC. And so, obviously, they had big investment in this. And there were many people in that lab who really believed in the paperless office, and lots of great inventions came out of the fact that people were pursuing that vision. So that was a good side of that, but we also saw where things could go horribly wrong when you just took a paper-based system away and you just replaced it with a digital system.

HUIZINGA: Yeah …

SELLEN: I remember some of the disasters in air traffic control, for example, when they took the paper flight strips away and just made them all digital. And those are places where you don’t want to mess around with something that works.

HUIZINGA: Right.

SELLEN: You have to be really careful about how you introduce digital systems. Likewise, many people remember things that went wrong when hospitals tried to go paperless with health records being paperless. Now, those things are digital now, but we were talking about chaos earlier. There was a lot of chaos on the path. So what we’ve tried to say in that book to some extent is, let’s understand the work that paper is doing in these different work contexts and the affordances of paper. You know, what is it doing for people? Anything from, you know, I hand a document over to someone else; a physical document gives me the excuse to talk to that person …

HUIZINGA: Right…

SELLEN: … through to, you know, when I place a document on somebody’s desk, other people in the workplace can see that I’ve passed it on to someone else. Those kind of nuanced observations are useful because you then need to think, how’s the digital system going to replace that? Not in the same way, but it’s got to do the same job, right. So you need to talk to people, you need to understand the context of their work, and then you need to carefully plan out how you’re going to make the transition. So if we just try to inject AI into workflows or totally replace parts of workflows with AI without a really deep understanding of how that work is currently done, what the workers get from it, what is the value that the workers bring to that process, we could go through that chaos. And so it’s really important to get social scientists involved in this and good designers, and that’s where the, kind of, multidisciplinary thing really comes into its own. That’s where it’s really, really valuable.

HUIZINGA: Yeah … You know, it feels super important, that book, about a different thing, how it applies now and how you can take lessons from that arc to what you’re talking about with AI. I feel like people should go back and read that book.

SELLEN: I wouldn’t object! [LAUGHTER]

[MUSIC BREAK]

HUIZINGA: Let’s talk about some research ideas that are on the horizon. Lots of research is basically just incremental building on what’s been done before, but there are always those moonshot ideas that seem outrageous at first. Now, you’re a scientist and an inventor yourself, and you’re also a lab director, so you’ve seen a lot of ideas over the years. [LAUGHS] You’ve probably had a lot of ideas. Have any of them been outrageous in your mind? And if so, what was the most outrageous, and how did it work out?

SELLEN: OK, well, I’m a little reluctant to say this one, but I always believed that the dream of AI was outrageous. [LAUGHTER] So, you know, going back to those early days when, you know, I was a psychologist in the ’80s and seeing those early expert systems that were being built back then and trying to codify and articulate expert knowledge into machines to make them artificially intelligent, it just seemed like they were on a road to nowhere. I didn’t really believe in the whole vision of AI for many, many years. I think that when deep learning, that whole revolution’s kicked off, I never saw where it was heading. So I am, to this day, amazed by what these systems can do and never believed that these things would be possible. And so I was a skeptic, and I am no longer a skeptic, [LAUGHTER] with a proviso of everything else I’ve said before, but I thought it was an outrageous idea that these systems would be capable of what they’re now capable of.

HUIZINGA: You know, that’s funny because, going back to what you said earlier about your stepdad walking you around and asking you how you’d codify a human into a machine … was that just outrageous to you, or is that just part of the exploratory mode that your stepdad, kind of, brought you into?

SELLEN: Well, so, back then I was quite young, and I was willing to believe him, and I, sort of, signed up to that. But later, especially when I met my husband, a sociologist, I realized that I didn’t agree with any of that at all. [LAUGHTER] So we had great, I’ll say, “energetic” discussions with my stepdad after that, which was fun.

HUIZINGA: I bet.

SELLEN: But yeah, but so, it was how I used to think and then I went through this long period of really rejecting all of that. And part of that was, you know, seeing these AI systems really struggle and fail. And now here we are today. So yeah.

HUIZINGA: Yeah, I just had Rafah Hosn on the podcast and when we were talking about this “outrageous ideas” question, she said, “Well, I don’t really see much that’s outrageous.” And I said, “Wait a minute! You’re living in outrageous! You are in AI Frontiers at Microsoft Research.” Maybe it’s just because it’s so outrageous that it’s become normal?

SELLEN: Yeah …

HUIZINGA: And yeah, well … Well, finally, Abi, your mentor and adviser, Don Norman … you referred to a book that he wrote, and I know it as The Design of Everyday Things, and in it he wrote this: “Design is really an act of communication, which means having a deep understanding of the person with whom the designer is communicating.” So as we close, I’d love it if you’d speak to this statement in the context of AI, Cognition, and the Economy. How might we see the design of AI systems as an act of communication with people, and how do we get to a place where an understanding of deeply human qualities plays a larger role in informing these ideas, and ultimately the products, that emerge from a lab like yours?

SELLEN: So this is absolutely critical to getting AI development and design right. It’s deeply understanding people and what they need, what their aspirations are, what human values are we designing for. You know, I would say that as a social scientist, but I also believe that most of the technologists and computer scientists and machine learning people that I interact with on a daily basis also believe that. And that’s one thing that I love about the lab that I’m a part of, is that it’s very interdisciplinary. We’re always putting the, kind of, human-centric spin on things. And, you know, Don was right. And that’s what he’s been all about through his career. We really need to understand, who are we designing this technology for? Ultimately, it’s for people; it’s for society; it’s for the, you know, it’s for the common good. And so that’s what we’re all about. Also, I’m really excited to say we are becoming, as an organization, much more globally distributed. Just recently taken on a lab in Nairobi. And the cultural differences and the differences in different countries casts a whole new light on how these technologies might be used. And so I think that it’s not just about understanding different people’s needs but different cultures and different parts of the world and how this is all going to play out on a global scale.

HUIZINGA: Yeah … So just to, kind of, put a cap on it, when I said the term “deeply human qualities,” what I’m thinking about is the way we collaborate and work as a team with other people, having empathy and compassion, being innovative and creative, and seeking well-being and prosperity. Those are qualities that I have a hard time superimposing onto or into a machine. Do you think that AI can help us?

SELLEN: Yeah, I think all of these things that you just named are things which, as you say, are deeply human, and they are the aspects of our relationship with technology that we want to not only protect and preserve but support and amplify. And I think there are many examples I’ve seen in development and coming out which have that in mind, which seek to augment those different aspects of human nature. And that’s exciting. And we always need to keep that in mind as we design these new technologies.

HUIZINGA: Yeah. Well, Abi Sellen, I’d love to stay and chat with you for another couple hours, but how fun to have you on the show. Thanks for joining us today on Ideas.

SELLEN: It’s been great. I really enjoyed it. Thank you.

[MUSIC]

The post Ideas: Designing AI for people with Abigail Sellen appeared first on Microsoft Research.

Generating fashion product descriptions by fine-tuning a vision-language model with SageMaker and Amazon Bedrock

In the world of online retail, creating high-quality product descriptions for millions of products is a crucial but time-consuming task. Using machine learning (ML) and natural language processing (NLP) to automate product description generation has the potential to save manual effort and transform the way ecommerce platforms operate. One of the main advantages of high-quality product descriptions is the improvement in searchability. Customers can more easily locate products that have correct descriptions, because accurate descriptions allow the search engine to identify products that match not just the general category but also the specific attributes mentioned in the product description. For example, a product that has a description that includes words such as “long sleeve” and “cotton neck” will be returned if a consumer is looking for a “long sleeve cotton shirt.” Furthermore, having factual product descriptions can increase customer satisfaction by enabling a more personalized buying experience and improving the algorithms for recommending more relevant products to users, which raises the probability that users will make a purchase.

With the advancement of generative AI, we can use vision-language models (VLMs) to predict product attributes directly from images. Pre-trained image captioning or visual question answering (VQA) models perform well on describing everyday images but can’t capture the domain-specific nuances of ecommerce products needed to achieve satisfactory performance in all product categories. To solve this problem, this post shows you how to predict domain-specific product attributes from product images by fine-tuning a VLM on a fashion dataset using Amazon SageMaker, and then using Amazon Bedrock to generate product descriptions using the predicted attributes as input. So you can follow along, we’re sharing the code in a GitHub repository.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon through a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

You can use a managed service, such as Amazon Rekognition, to predict product attributes as explained in Automating product description generation with Amazon Bedrock. However, if you’re trying to extract specifics and detailed characteristics of your product or your domain (industry), fine-tuning a VLM on Amazon SageMaker is necessary.

Vision-language models

Since 2021, there has been a rise in interest in vision-language models (VLMs), which led to the release of solutions such as Contrastive Language-Image Pre-training (CLIP) and Bootstrapping Language-Image Pre-training (BLIP). When it comes to tasks such as image captioning, text-guided image generation, and visual question answering, VLMs have demonstrated state-of-the-art performance.

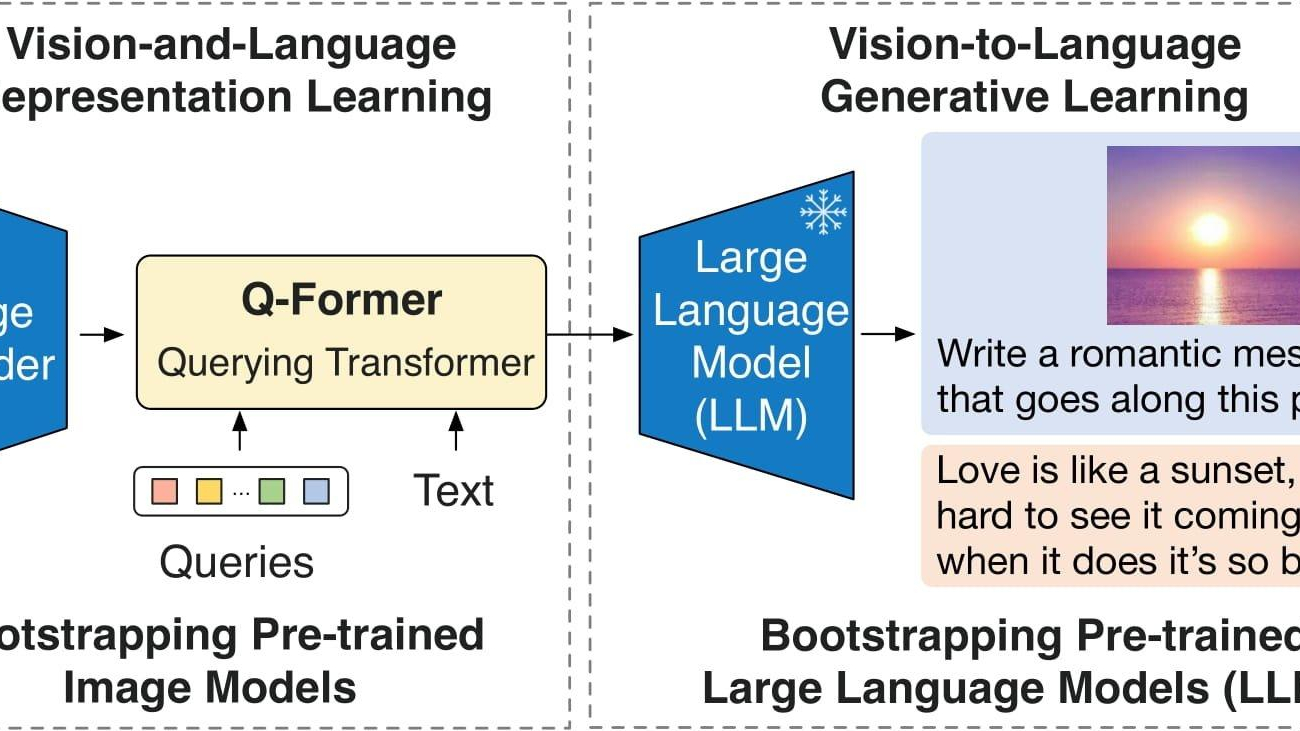

In this post, we use BLIP-2, which was introduced in BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models, as our VLM. BLIP-2 consists of three models: a CLIP-like image encoder, a Querying Transformer (Q-Former), and a large language model (LLM). We use a version of BLIP-2 that contains Flan-T5-XL as the LLM.

The following diagram illustrates the overview of BLIP-2:

Figure 1: BLIP-2 overview

The pre-trained version of the BLIP-2 model has been demonstrated in Build an image-to-text generative AI application using multimodality models on Amazon SageMaker and Build a generative AI-based content moderation solution on Amazon SageMaker JumpStart. In this post, we demonstrate how to fine-tune BLIP-2 for a domain-specific use case.

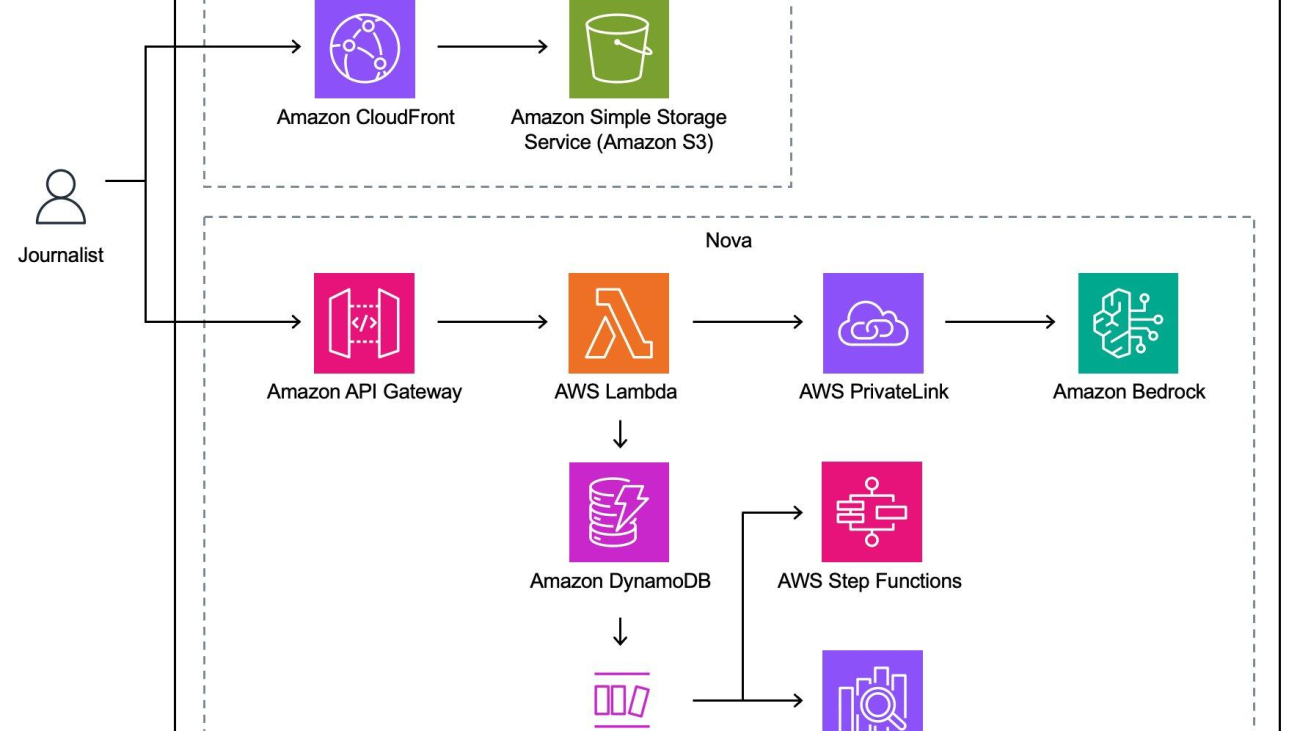

Solution overview

The following diagram illustrates the solution architecture.

Figure 2: High-level solution architecture

The high-level overview of the solution is:

- An ML scientist uses SageMaker notebooks to process and split the data into training and validation data.

- The datasets are uploaded to Amazon Simple Storage Service (Amazon S3) using the S3 client (a wrapper around an HTTP call).

- Then the SageMaker client is used to launch a SageMaker Training job, again a wrapper for an HTTP call.

- The training job manages the copying of the datasets from S3 to the training container, the training of the model, and the saving of its artifacts to S3.

- Then, through another call of the SageMaker client, an endpoint is generated, copying the model artifacts into the endpoint hosting container.

- The inference workflow is then invoked through an AWS Lambda request, which first makes an HTTP request to the SageMaker endpoint, and then uses that to make another request to Amazon Bedrock.

In the following sections, we demonstrate how to:

- Set up the development environment

- Load and prepare the dataset

- Fine-tune the BLIP-2 model to learn product attributes using SageMaker

- Deploy the fine-tuned BLIP-2 model and predict product attributes using SageMaker

- Generate product descriptions from predicted product attributes using Amazon Bedrock

Set up the development environment

An AWS account is needed with an AWS Identity and Access Management (IAM) role that has permissions to manage resources created as part of the solution. For details, see Creating an AWS account.

We use Amazon SageMaker Studio with the ml.t3.medium instance and the Data Science 3.0 image. However, you can also use an Amazon SageMaker notebook instance or any integrated development environment (IDE) of your choice.

Note: Be sure to set up your AWS Command Line Interface (AWS CLI) credentials correctly. For more information, see Configure the AWS CLI.

We use an ml.g5.2xlarge instance for SageMaker Training jobs and another ml.g5.2xlarge instance for SageMaker endpoints. Ensure sufficient capacity for this instance type in your AWS account by requesting a quota increase if required. Also check the pricing of the on-demand instances.

To replicate the solution demonstrated in this post, clone this GitHub repository. First, launch the notebook main.ipynb in SageMaker Studio by selecting the Image as Data Science and Kernel as Python 3. Then install all the required libraries listed in requirements.txt.

Load and prepare the dataset

For this post, we use the Kaggle Fashion Images Dataset, which contains 44,000 products with multiple category labels, descriptions, and high-resolution images. In this post, we want to demonstrate how to fine-tune a model to learn attributes such as fabric, fit, collar, pattern, and sleeve length of a shirt using the image and a question as inputs.

Each product is identified by an ID such as 38642, and there is a map to all the products in styles.csv. From here, we can fetch the image for this product from images/38642.jpg and the complete metadata from styles/38642.json. To fine-tune our model, we need to convert our structured examples into a collection of question and answer pairs. Our final dataset has the following format after processing for each attribute:

Id | Question | Answer
38642 | What is the fabric of the clothing in this picture? | Fabric: Cotton
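The conversion from structured metadata to question and answer pairs could be sketched as follows. This is a minimal illustration, not the code from the repository: the `QUESTIONS` mapping, the function names, and the shape of the attribute dictionary are assumptions, because the post does not show the layout of the JSON metadata.

```python
import csv
import io

# Hypothetical mapping from an attribute name to the question asked about it.
QUESTIONS = {
    "Fabric": "What is the fabric of the clothing in this picture?",
    "Sleeve Length": "What is the sleeve length of the clothing in this picture?",
}


def to_qa_rows(product_id, attributes):
    """Turn one product's attribute dict into (id, question, answer) rows."""
    rows = []
    for name, question in QUESTIONS.items():
        value = attributes.get(name)
        if value:  # skip attributes that are missing or empty for this product
            rows.append((product_id, question, f"{name}: {value}"))
    return rows


def rows_to_csv(rows):
    """Serialize the rows as CSV text with an Id/Question/Answer header."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["Id", "Question", "Answer"])
    writer.writerows(rows)
    return buffer.getvalue()


# Assumed input: attributes already extracted from the product's metadata.
rows = to_qa_rows(38642, {"Fabric": "Cotton", "Sleeve Length": "Long Sleeves"})
print(rows_to_csv(rows))
```

Each attribute becomes its own row, so one product image can contribute several training examples.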

Fine-tune the BLIP-2 model to learn product attributes using SageMaker

To launch a SageMaker Training job, we need the HuggingFace Estimator. SageMaker starts and manages all of the necessary Amazon Elastic Compute Cloud (Amazon EC2) instances for us, supplies the appropriate Hugging Face container, uploads the specified scripts, and downloads data from our S3 bucket to the container to /opt/ml/input/data.
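Inside the training container, an entry point script typically locates its data through environment variables that SageMaker sets for each input channel (`SM_CHANNEL_<NAME>`) and for the model output directory (`SM_MODEL_DIR`). The sketch below shows one way to read them; the channel names `images` and `input_file` and the `--epochs` hyperparameter are assumptions for illustration, not names confirmed by the post.

```python
import argparse
import os


def parse_args(argv=None):
    """Read data locations and hyperparameters the way a script-mode entry point would."""
    parser = argparse.ArgumentParser()
    # Channel names are hypothetical; each channel is mounted below /opt/ml/input/data.
    parser.add_argument(
        "--images-dir",
        default=os.environ.get("SM_CHANNEL_IMAGES", "/opt/ml/input/data/images"),
    )
    parser.add_argument(
        "--input-dir",
        default=os.environ.get("SM_CHANNEL_INPUT_FILE", "/opt/ml/input/data/input_file"),
    )
    # Artifacts written here are uploaded to Amazon S3 when the job finishes.
    parser.add_argument(
        "--model-dir",
        default=os.environ.get("SM_MODEL_DIR", "/opt/ml/model"),
    )
    # Hyperparameters arrive as command-line arguments; this one is hypothetical.
    parser.add_argument("--epochs", type=int, default=1)
    return parser.parse_args(argv)


# Explicit arguments override the environment, which is handy for local testing.
args = parse_args(["--images-dir", "/tmp/images", "--epochs", "3"])
print(args.images_dir, args.epochs)
```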

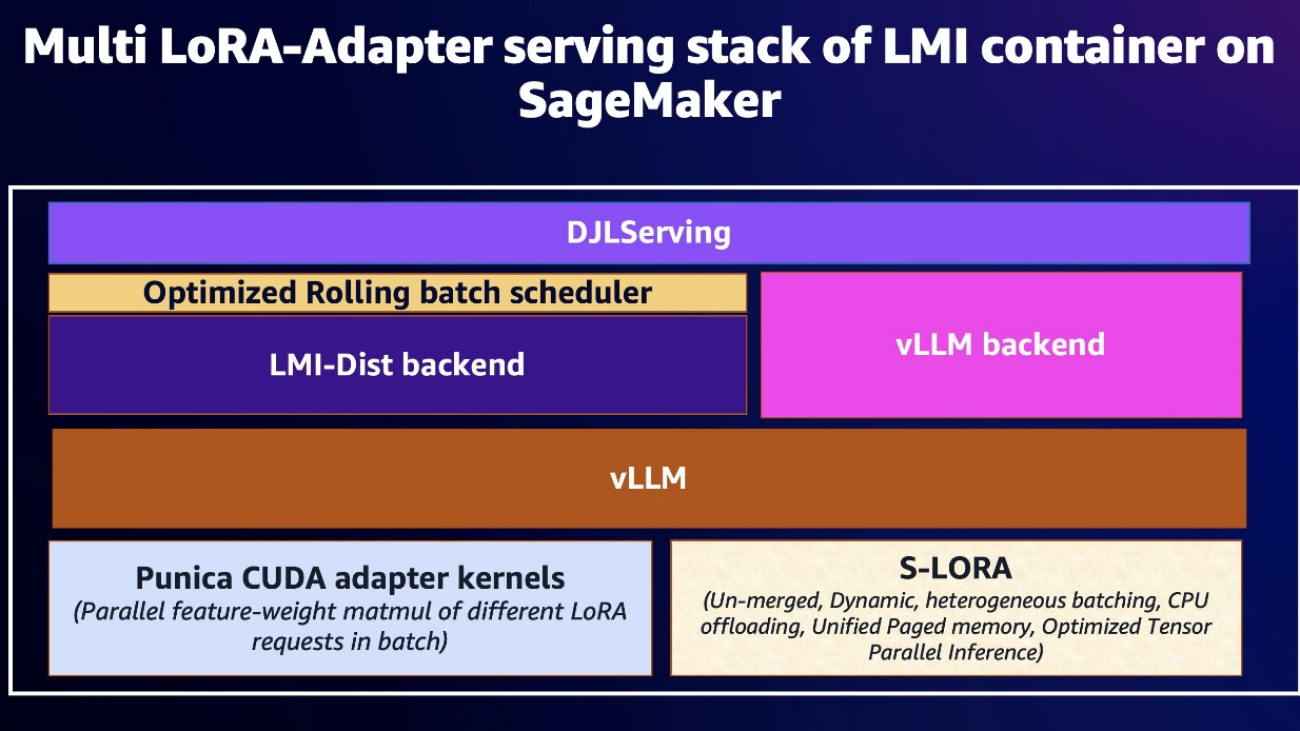

We fine-tune BLIP-2 using the Low-Rank Adaptation (LoRA) technique, which adds trainable rank decomposition matrices to every Transformer structure layer while keeping the pre-trained model weights frozen. This technique can increase training throughput, reduce the amount of GPU memory required by a factor of 3, and reduce the number of trainable parameters by a factor of 10,000. Despite using fewer trainable parameters, LoRA has been demonstrated to perform as well as or better than the full fine-tuning technique.
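To make the idea of rank decomposition concrete, here is a small pure-Python sketch. For a frozen weight matrix W of shape d x k, LoRA trains two small matrices B (d x r) and A (r x k) and computes y = Wx + B(Ax). The dimensions and rank below are illustrative assumptions, not values taken from the post, and the ratio shown is for a single matrix; the overall reduction depends on the model and on which layers are adapted.

```python
def lora_param_counts(d, k, r):
    """Return (parameters in the full d x k matrix, parameters trained by LoRA)."""
    full = d * k
    lora = r * (d + k)  # B is d x r, A is r x k
    return full, lora


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x):
    """Compute y = W x + B (A x); W stays frozen, only A and B would be trained."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    return [b + d for b, d in zip(base, delta)]


# Illustrative sizes: one 2048 x 2048 projection adapted with rank 8.
full, lora = lora_param_counts(2048, 2048, 8)
print(full, lora, full / lora)  # 4194304 32768 128.0

# Tiny numeric example with rank 1.
W = [[1, 0], [0, 1]]
A = [[1, 1]]
x = [2, 3]
print(lora_forward(W, A, [[0], [0]], x))  # B starts at zero, so the output is W x: [2, 3]
print(lora_forward(W, A, [[1], [0]], x))  # [7, 3]
```

Because B is initialized to zero in LoRA, the adapted model starts out identical to the pre-trained one, and training only moves it away from that point through the low-rank update.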

We prepared entrypoint_vqa_finetuning.py which implements fine-tuning of BLIP-2 with the LoRA technique using Hugging Face Transformers, Accelerate, and Parameter-Efficient Fine-Tuning (PEFT). The script also merges the LoRA weights into the model weights after training. As a result, you can deploy the model as a normal model without any additional code.
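To make the idea concrete, the following toy sketch shows the LoRA update on a single weight matrix and why merging the adapter into the base weights gives the same output. It uses plain Python lists and made-up numbers; it is not taken from entrypoint_vqa_finetuning.py, and the helper names (`matmul`, `add`, `lora_param_counts`) are assumptions for illustration only.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b, scale=1.0):
    """Return a + scale * b element-wise."""
    return [[x + scale * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def lora_param_counts(d, k, r):
    """Parameters in a full d x k weight versus its rank-r LoRA adapter."""
    return d * k, r * (d + k)


# Frozen weight W (3 x 3) and trainable low-rank factors B (3 x 1), A (1 x 3).
W = [[1.0, 0.0, 2.0], [0.0, 1.0, 0.0], [3.0, 0.0, 1.0]]
B = [[1.0], [0.0], [2.0]]
A = [[0.5, 0.0, 1.0]]
alpha, r = 2.0, 1
scale = alpha / r

x = [[1.0], [2.0], [3.0]]  # input as a column vector

# During training: frozen path plus scaled adapter path.
h_adapter = add(matmul(W, x), matmul(B, matmul(A, x)), scale)

# After training: fold the adapter into the weight once, then use it as usual.
W_merged = add(W, matmul(B, A), scale)
h_merged = matmul(W_merged, x)

print(h_adapter == h_merged)
print(lora_param_counts(4096, 4096, 8))
```

Because the merged matrix has the same shape as the original weight, the merged model can be served without any adapter-specific code, which is the property the deployment step relies on.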

We start the training job by calling the .fit() method and passing the Amazon S3 paths for the images and the input file.
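A training launch along these lines might look like the following sketch. Treat every concrete value as an assumption: the hyperparameter names, the `src` source directory, the instance type, the framework versions, and the channel names `images` and `input_file` are placeholders rather than the settings used in the repository. The SageMaker SDK is imported inside the function so the helper definitions can be read and tested without AWS access.

```python
def build_hyperparameters(epochs=3, lora_r=8, lora_alpha=32):
    """Hypothetical hyperparameters passed to the training script."""
    return {"epochs": epochs, "lora_r": lora_r, "lora_alpha": lora_alpha}


def channel_path(channel_name):
    """Where SageMaker mounts an input channel inside the training container."""
    return f"/opt/ml/input/data/{channel_name}"


def launch_training(role, images_s3_uri, input_file_s3_uri):
    """Create a HuggingFace estimator and start the training job."""
    # Lazy import: requires the sagemaker SDK and AWS credentials at call time.
    from sagemaker.huggingface import HuggingFace

    estimator = HuggingFace(
        entry_point="entrypoint_vqa_finetuning.py",
        source_dir="src",               # assumed location of the script
        role=role,
        instance_type="ml.g5.2xlarge",  # assumed GPU instance type
        instance_count=1,
        transformers_version="4.28",    # assumed container versions
        pytorch_version="2.0",
        py_version="py310",
        hyperparameters=build_hyperparameters(),
    )
    # Each key becomes a channel mounted under /opt/ml/input/data/<key>.
    estimator.fit({"images": images_s3_uri, "input_file": input_file_s3_uri})
    return estimator
```

Inside the container, the training script would then read its data from paths such as `channel_path("images")`.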

Deploy the fine-tuned BLIP-2 model and predict product attributes using SageMaker

We deploy the fine-tuned BLIP-2 model to a SageMaker real-time endpoint using the Hugging Face Inference Container. You can also use the large model inference (LMI) container, which is described in more detail in Build a generative AI-based content moderation solution on Amazon SageMaker JumpStart, a post that deploys a pre-trained BLIP-2 model. Here, we reference our fine-tuned model in Amazon S3 instead of the pre-trained model available on the Hugging Face Hub. We first create the model and then deploy the endpoint.

When the endpoint status becomes InService, we can invoke the endpoint for the instructed vision-to-language generation task with an input image and a question as a prompt:

The output response looks like the following:

{"Sleeve Length": "Long Sleeves"}
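An invocation of this kind can be sketched with the SageMaker runtime client. The request schema below (a base64-encoded `image` field and a `prompt` field) is an assumption for illustration; the actual schema is defined by the inference script deployed with the model, and `build_payload` and `invoke` are hypothetical helper names.

```python
import base64
import json


def build_payload(image_bytes, question):
    """Serialize an image and a question into a JSON request body.

    The field names are assumed; adapt them to the deployed inference script.
    """
    return json.dumps(
        {
            "image": base64.b64encode(image_bytes).decode("utf-8"),
            "prompt": question,
        }
    )


def invoke(endpoint_name, image_bytes, question):
    """Send the request to a SageMaker real-time endpoint and parse the reply."""
    # Lazy import: requires boto3 and AWS credentials at call time.
    import boto3

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=build_payload(image_bytes, question),
    )
    return json.loads(response["Body"].read())


payload = build_payload(b"fake-image-bytes", "What is the sleeve length of the shirt?")
```

Encoding the image as base64 keeps the whole request in a single JSON document, at the cost of roughly one third more bytes on the wire.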

Generate product descriptions from predicted product attributes using Amazon Bedrock

To get started with Amazon Bedrock, request access to the foundation models (they are not enabled by default). You can follow the steps in the documentation to enable model access. In this post, we use Anthropic’s Claude in Amazon Bedrock to generate product descriptions. Specifically, we use the model anthropic.claude-3-sonnet-20240229-v1:0 because it provides good performance and speed.

After creating the boto3 client for Amazon Bedrock, we create a prompt string that specifies that we want to generate product descriptions using the product attributes.

You are an expert in writing product descriptions for shirts. Use the data below to create product description for a website. The product description should contain all given attributes. Provide some inspirational sentences, for example, how the fabric moves. Think about what a potential customer wants to know about the shirts. Here are the facts you need to create the product descriptions: [Here we insert the predicted attributes by the BLIP-2 model]

The prompt and model parameters, including maximum number of tokens used in the response and the temperature, are passed to the body. The JSON response must be parsed before the resulting text is printed in the final line.
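The request and response handling described here can be sketched as follows, using the Anthropic Messages format that Claude 3 models expect on Amazon Bedrock. The helper names and the default `max_tokens` and `temperature` values are assumptions, and the model ID is written with the `:0` version suffix that Bedrock model IDs carry.

```python
import json

MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_body(prompt, max_tokens=512, temperature=0.5):
    """Build the JSON request body for a Claude 3 model on Amazon Bedrock."""
    return json.dumps(
        {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": max_tokens,      # assumed default
            "temperature": temperature,    # assumed default
            "messages": [
                {"role": "user", "content": [{"type": "text", "text": prompt}]}
            ],
        }
    )


def extract_text(response_body):
    """Parse the JSON response body and return the generated text."""
    return json.loads(response_body)["content"][0]["text"]


def generate_description(prompt):
    """Invoke the model and return the product description."""
    # Lazy import: requires boto3, AWS credentials, and model access.
    import boto3

    bedrock = boto3.client("bedrock-runtime")
    response = bedrock.invoke_model(modelId=MODEL_ID, body=build_body(prompt))
    return extract_text(response["body"].read())
```

A low temperature keeps the description close to the supplied attributes, while a higher value gives more varied wording.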

The generated product description response looks like the following:

"Classic Striped Shirt Relax into comfortable casual style with this classic collared striped shirt. With a regular fit that is neither too slim nor too loose, this versatile top layers perfectly under sweaters or jackets."

Conclusion

We’ve shown you how the combination of VLMs on SageMaker and LLMs on Amazon Bedrock presents a powerful solution for automating fashion product description generation. By fine-tuning the BLIP-2 model on a fashion dataset using Amazon SageMaker, you can predict domain-specific and nuanced product attributes directly from images. Then, using the capabilities of Amazon Bedrock, you can generate product descriptions from the predicted product attributes, enhancing the searchability and personalization of ecommerce platforms. As we continue to explore the potential of generative AI, LLMs and VLMs emerge as a promising avenue for revolutionizing content generation in the ever-evolving landscape of online retail. As a next step, you can try fine-tuning this model on your own dataset using the code provided in the GitHub repository to test and benchmark the results for your use cases.

About the Authors

Antonia Wiebeler is a Data Scientist at the AWS Generative AI Innovation Center, where she enjoys building proofs of concept for customers. Her passion is exploring how generative AI can solve real-world problems and create value for customers. When she is not coding, she enjoys running and competing in triathlons.

Daniel Zagyva is a Data Scientist at AWS Professional Services. He specializes in developing scalable, production-grade machine learning solutions for AWS customers. His experience extends across different areas, including natural language processing, generative AI, and machine learning operations.

Lun Yeh is a Machine Learning Engineer at AWS Professional Services. She specializes in NLP, forecasting, MLOps, and generative AI and helps customers adopt machine learning in their businesses. She graduated from TU Delft with a degree in Data Science & Technology.

Fotinos Kyriakides is an AI/ML Consultant at AWS Professional Services specializing in developing production-ready ML solutions and platforms for AWS customers. In his free time Fotinos enjoys running and exploring.

On Efficient and Statistical Quality Estimation for Data Annotation

Annotated data is an essential ingredient to train, evaluate, compare and productionalize machine learning models. It is therefore imperative that annotations are of high quality. For their creation, good quality management and thereby reliable quality estimates are needed. Then, if quality is insufficient during the annotation process, rectifying measures can be taken to improve it. For instance, project managers can use quality estimates to improve annotation guidelines, retrain annotators or catch as many errors as possible before release.

Quality estimation is often performed by having…

Apple Machine Learning Research

Create a multimodal assistant with advanced RAG and Amazon Bedrock

Retrieval Augmented Generation (RAG) models have emerged as a promising approach to enhance the capabilities of language models by incorporating external knowledge from large text corpora. However, despite their impressive performance in various natural language processing tasks, RAG models still face several limitations that need to be addressed.

Naive RAG models face limitations such as missing content, reasoning mismatch, and challenges in handling multimodal data. Although they can retrieve relevant information, they may struggle to generate complete and coherent responses when required information is absent, leading to incomplete or inaccurate outputs. Additionally, even with relevant information retrieved, the models may have difficulty correctly interpreting and reasoning over the content, resulting in inconsistencies or logical errors. Furthermore, effectively understanding and reasoning over multimodal data remains a significant challenge for these primarily text-based models.

In this post, we present a new approach named multimodal RAG (mmRAG) that tackles these limitations for practical generative artificial intelligence (AI) assistant use cases. Additionally, we examine potential solutions to enhance the capabilities of large language models (LLMs) and visual language models (VLMs) with advanced LangChain capabilities, enabling them to generate more comprehensive, coherent, and accurate outputs while effectively handling multimodal data. The solution uses Amazon Bedrock, a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies, providing a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Solution architecture

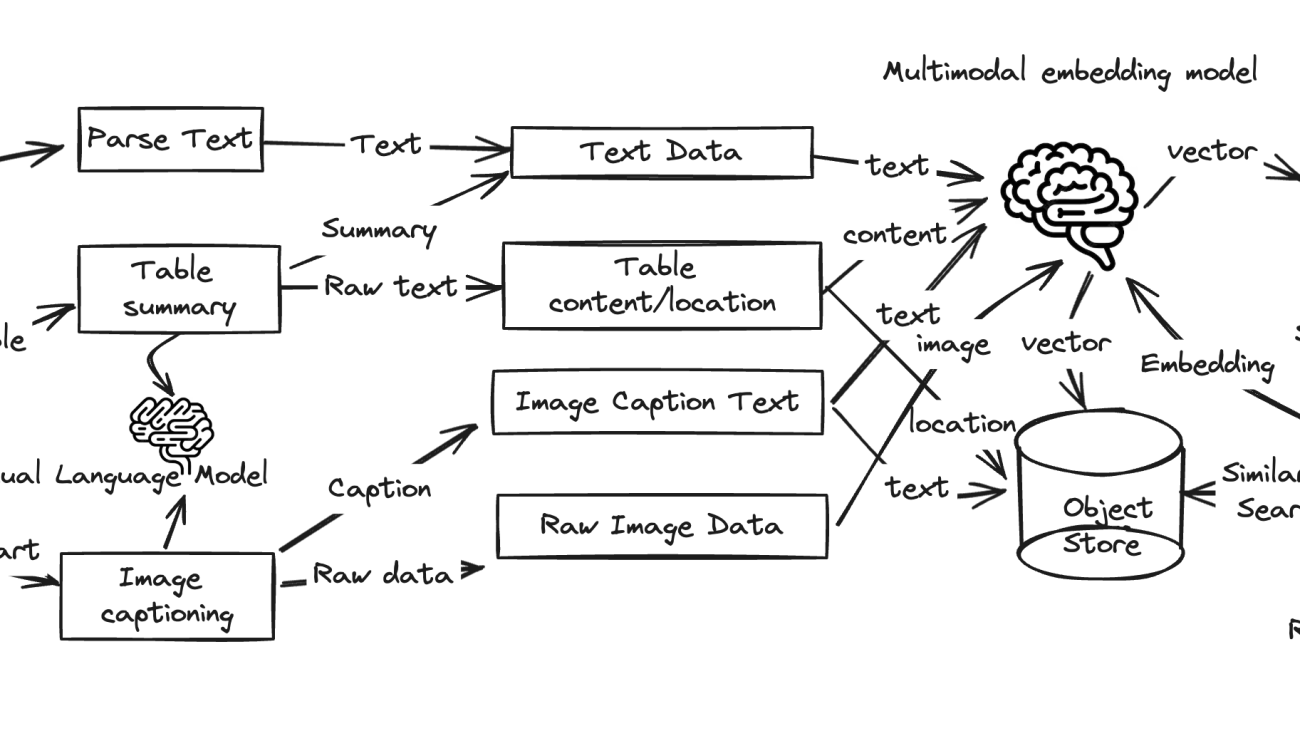

The mmRAG solution is based on a straightforward concept: extract each data type separately, use a VLM to generate text summaries of the different data types, embed the text summaries along with the raw data into a vector database, and store the raw unstructured data in a document store. A query then prompts the LLM to retrieve relevant vectors from both the vector database and the document store and to generate meaningful and accurate answers.

The following diagram illustrates the solution architecture.

The architecture diagram depicts the mmRAG architecture that integrates advanced reasoning and retrieval mechanisms. It combines text, table, and image (including chart) data into a unified vector representation, enabling cross-modal understanding and retrieval. The process begins with diverse data extractions from various sources such as URLs and PDF files by parsing and preprocessing text, table, and image data types separately, while table data is converted into raw text and image data into captions.

These parsed data streams are then fed into a multimodal embedding model, which encodes the various data types into uniform, high dimensional vectors. The resulting vectors, representing the semantic content regardless of original format, are indexed in a vector database for efficient approximate similarity searches. When a query is received, the reasoning and retrieval component performs similarity searches across this vector space to retrieve the most relevant information from the vast integrated knowledge base.
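The retrieval step can be illustrated with a toy example that pairs a vector index of summary embeddings with a document store holding the raw content. This is a simplified sketch, not the mmRAG implementation: the three-dimensional vectors and document IDs are made up, and it uses exact brute-force cosine similarity in place of the approximate search a vector database would perform.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Vector index: (document ID, embedding of that document's text summary).
index = [
    ("table-1", [0.9, 0.1, 0.0]),
    ("image-1", [0.1, 0.9, 0.1]),
    ("text-1", [0.0, 0.2, 0.9]),
]

# Document store: raw content keyed by the same IDs.
doc_store = {
    "table-1": "Raw text of a table",
    "image-1": "Raw bytes or caption of an image",
    "text-1": "Raw paragraph text",
}


def retrieve(query_vec, k=2):
    """Rank summaries by similarity, then return the matching raw documents."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [doc_store[doc_id] for doc_id, _ in ranked[:k]]


results = retrieve([0.8, 0.3, 0.0], k=1)
print(results)
```

Searching over summaries while returning raw documents lets a compact text embedding stand in for tables and images that are hard to embed directly.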

The retrieved multimodal representations are then used by the generation component to produce outputs such as text, images, or other modalities. The VLM component generates vector representations specifically for textual data, further enhancing the system’s language understanding capabilities. Overall, this architecture facilitates advanced cross-modal reasoning, retrieval, and generation by unifying different data modalities into a common semantic space.

Developers can access the mmRAG source code in the GitHub repo.

Configure Amazon Bedrock with LangChain

You start by configuring Amazon Bedrock to integrate with various components from the LangChain Community library. This allows you to work with the core FMs. You use the BedrockEmbeddings class to create two different embedding models: one for text (embedding_bedrock_text) and one for images (embeddings_bedrock_image). These embeddings represent textual and visual data in a numerical format, which is essential for various natural language processing (NLP) tasks.

Additionally, you use the LangChain BedrockChat class to create a VLM instance (chat_bedrock_claude3_haiku) from Anthropic Claude 3 Haiku and a chat instance based on a different model, Claude 3 Sonnet (chat_bedrock_claude3_sonnet). These instances are used for advanced query reasoning, argumentation, and retrieval tasks. See the following code snippet:

import boto3
from langchain_community.embeddings import BedrockEmbeddings
from langchain_community.chat_models.bedrock import BedrockChat

# Bedrock runtime client; assumes AWS credentials and Region are configured
boto3_bedrock = boto3.client("bedrock-runtime")

# Embedding models for text and for images
embedding_bedrock_text = BedrockEmbeddings(client=boto3_bedrock, model_id="amazon.titan-embed-g1-text-02")
embeddings_bedrock_image = BedrockEmbeddings(client=boto3_bedrock, model_id="amazon.titan-embed-image-v1")

# Inference parameters shared by both chat models
model_kwargs = {
    "max_tokens": 2048,
    "temperature": 0.0,
    "top_k": 250,
    "top_p": 1,
    "stop_sequences": ["\n\n\n"],
}

chat_bedrock_claude3_haiku = BedrockChat(
    model_id="anthropic.claude-3-haiku-20240307-v1:0",
    client=boto3_bedrock,
    model_kwargs=model_kwargs,
)

chat_bedrock_claude3_sonnet = BedrockChat(
    model_id="anthropic.claude-3-sonnet-20240229-v1:0",
    client=boto3_bedrock,
    model_kwargs=model_kwargs,
)

Parse content from data sources and embed both text and image data

In this section, we explore how to harness the power of Python to parse text, tables, and images from URLs and PDFs efficiently, using two powerful packages: Beautiful Soup and PyMuPDF. Beautiful Soup, a library designed for web scraping, makes it straightforward to sift through HTML and XML content, allowing you to extract the desired data from web pages. PyMuPDF offers an extensive set of functionalities for interacting with PDF files, enabling you to extract not just text but also tables and images with ease. See the following code:

from bs4 import BeautifulSoup as Soup
import fitz  # PyMuPDF

def parse_tables_images_from_urls(url: str):
    ...
    # Parse the HTML content using BeautifulSoup
    soup = Soup(response.content, 'html.parser')
    # Find all table elements
    tables = soup.find_all('table')
    # Find all image elements
    images = soup.find_all('img')
    ...

def parse_images_tables_from_pdf(pdf_path: str):
    ...
    pdf_file = fitz.open(pdf_path)
    # Iterate through each page
    for page_index in range(len(pdf_file)):
        # Select the page
        page = pdf_file[page_index]
        # Search for tables on the page and convert each one to a DataFrame
        tables = page.find_tables()
        for table in tables.tables:
            df = table.to_pandas()
        # Search for images on the page and extract the raw bytes of each one
        images = page.get_images()
        for image in images:
            xref = image[0]  # the first item of each image entry is its xref
            image_info = pdf_file.extract_image(xref)
            image_data = image_info["image"]
    ...

The following code snippets demonstrate how to generate image captions using Anthropic Claude 3 by invoking the bedrock_get_img_description utility function. Additionally, they showcase how to embed image pixels along with image captions using the Amazon Titan image embedding model amazon.titan-embed-image-v1 by calling the get_text_embedding function.

image_caption = bedrock_get_img_description(model_id,

prompt='You are an expert at analyzing images in great detail. Your task is to carefully examine the provided

image and generate a detailed, accurate textual description capturing all of the important elements and