Generating user intent from a sequence of user interface (UI) actions is a core challenge in comprehensive UI understanding. Recent advancements in multimodal large language models (MLLMs) have led to substantial progress in this area, but their demands for extensive model parameters, computing power, and high latency make them impractical for scenarios requiring lightweight, on-device solutions with low latency or heightened privacy. Additionally, the lack of high-quality datasets has hindered the development of such lightweight models. To address these challenges, we propose UI-JEPA, a… (Apple Machine Learning Research)

PyTorch Shanghai Meetup Notes

Summary

We were honored to host the PyTorch Shanghai Meetup on August 15, 2024. The Meetup received great attention from the industry. We invited senior PyTorch developers from Intel and Huawei as guest speakers, who shared their valuable experience and the latest technical trends. The event also attracted PyTorch enthusiasts from many technology companies and well-known universities. More than 40 participants gathered to discuss and exchange the latest applications and technological advances of PyTorch.

This Meetup not only strengthened the connection between PyTorch community members, but also provided a platform for local AI technology enthusiasts to learn, communicate and grow. We look forward to the next gathering to continue to promote the development of PyTorch technology in the local area.

1. PyTorch Foundation Updates

PyTorch Board member Fred Li shared the latest updates in the PyTorch community. He reviewed the development history of the PyTorch community, explained in detail the growth path of community developers, encouraged everyone to delve deeper into technology, and introduced matters related to the upcoming PyTorch Conference 2024.

2. Intel’s Journey with PyTorch: Democratizing AI with Ubiquitous Hardware and Open Software

PyTorch CPU module maintainer Jiong Gong shared six years of technical contributions from Intel to PyTorch and its ecosystem and explored the advancements Intel has made in both software and hardware to democratize AI, ensure accessibility, and optimize performance across a diverse range of Intel hardware platforms.

3. Exploring Multi-Backend Support in PyTorch Ecosystem: A Case Study of Ascend

Fengchun Hua, a PyTorch contributor from Huawei, took Huawei Ascend NPU as an example to demonstrate the latest achievements in multi-backend support for PyTorch applications. He introduced the hardware features of Huawei Ascend NPU and the infrastructure of CANN (Compute Architecture for Neural Networks), and explained the key achievements and innovations in native support work. He also shared the current challenges and the next work plan.

Yuanhao Ji, another PyTorch contributor from Huawei, then introduced the Autoload Device Extension proposal, explained its implementation details and value in improving the scalability of PyTorch, and introduced the latest work progress of the PyTorch Chinese community.

4. Intel XPU Backend for Inductor

Eikan is a PyTorch contributor from Intel. He focuses on the torch.compile stack for both Intel CPUs and GPUs. In this session, Eikan presented Intel’s efforts on torch.compile for Intel GPUs. He provided updates on the current status of Intel GPUs within PyTorch, covering both functionality and performance aspects. Additionally, Eikan used Intel GPU as a case study to demonstrate how to integrate a new backend into Inductor using Triton.

5. PyTorch PrivateUse1 Evolution Approaches and Insights

Jiawei Li, a PyTorch collaborator from Huawei, introduced PyTorch’s Dispatch mechanism and emphasized the limitations of DispatchKey. He took Huawei Ascend NPU as an example to share the best practices of the PyTorch PrivateUse1 mechanism. He mentioned that while using the PrivateUse1 mechanism, Huawei also submitted many improvements and bug fixes for the mechanism to the PyTorch community. He also noted that, because upstream CI does not cover out-of-tree devices, changes in upstream code may affect their stability and quality. The audience agreed with this point.

How Vidmob is using generative AI to transform its creative data landscape

This post was co-written with Mickey Alon from Vidmob.

Generative artificial intelligence (AI) can be vital for marketing because it enables the creation of personalized content and optimizes ad targeting with predictive analytics. Specifically, such data analysis can result in predicting trends and public sentiment while also personalizing customer journeys, ultimately leading to more effective marketing and driving business. For example, insights from creative data (advertising analytics) using campaign performance can not only uncover which creative works best but also help you understand the reasons behind its success.

In this post, we illustrate how Vidmob, a creative data company, worked with the AWS Generative AI Innovation Center (GenAIIC) team to uncover meaningful insights at scale within creative data using Amazon Bedrock. The collaboration involved the following steps:

- Use natural language to analyze and generate insights on performance data through different channels (such as TikTok, Meta, and Pinterest)

- Generate research information for context such as the value proposition, competitive differentiators, and brand identity of a specific client

Vidmob background

Vidmob is the Creative Data company that uses creative analytics and scoring software to make creative and media decisions for marketers and agencies as they strive to drive business results through improved creative effectiveness. Vidmob’s influence lies in its partnerships and native integrations across the digital ad landscape, its dozens of proprietary models, and its operation of a reinforcement learning from human feedback (RLHF) model for creativity.

Vidmob’s AI journey

Vidmob uses AI to not only enhance its creative data capabilities, but also pioneer advancements in the field of RLHF for creativity. By seamlessly integrating AI models such as Amazon Rekognition into its innovative stack, Vidmob has continually evolved to stay at the forefront of the creative data landscape.

This journey extends beyond the mere adoption of AI; Vidmob has consistently recognized the importance of curating a differentiated dataset to maximize the potential of its AI-driven solutions. Understanding the intrinsic value of data network effects, Vidmob constructed a product and operational system architecture designed to be the industry’s most comprehensive RLHF solution for marketing creatives.

Use case overview

Vidmob aims to revolutionize its analytics landscape with generative AI. The central goal is to empower customers to directly query and analyze their creative performance data through a chat interface. Over the past 8 years, Vidmob has amassed a wealth of data that provides deep insights into the value of creatives in ad campaigns and strategies for enhancing performance. Vidmob envisions making it effortless for customers to utilize this data to generate insights and make informed decisions about their creative strategies.

Currently, Vidmob and its customers rely on creative strategists to address these questions at the brand level, complemented by machine-generated normative insights at the industry or environment level. This process can take creative strategists many hours. To enhance the customer experience, Vidmob decided to partner with AWS GenAIIC to deliver these insights more quickly and automatically.

Vidmob partnered with AWS GenAIIC to analyze ad data to help Vidmob creative strategists understand the performance of customer ads. Vidmob’s ad data consists of tags created from Amazon Rekognition and other internal models. The chatbot built by AWS GenAIIC would take in this tag data and retrieve insights.

The following were key success criteria for the collaboration:

- Analyze and generate insights in a natural language based on performance data and other metadata

- Generate client company information to be used as initial research for a creative

- Create a scalable solution using Amazon Bedrock that can be integrated with Vidmob’s performance data

However, there were a few challenges in achieving these goals:

- Large language models (LLMs) are limited in the volume of data they can analyze to generate insights without hallucination. They are designed to predict and summarize text-based information and are less optimized for computing creative data at a terabyte scale.

- LLMs don’t have straightforward automatic evaluation techniques. Therefore, human evaluation was required for insights generated by the LLM.

- There are 50–100 creative questions that creative strategists would normally analyze, which means an asynchronous mechanism was needed to queue up these prompts, aggregate them, and provide the most meaningful insights.

Solution overview

The AWS team worked with Vidmob to build a serverless architecture for handling incoming questions from customers. They used the following services in the solution:

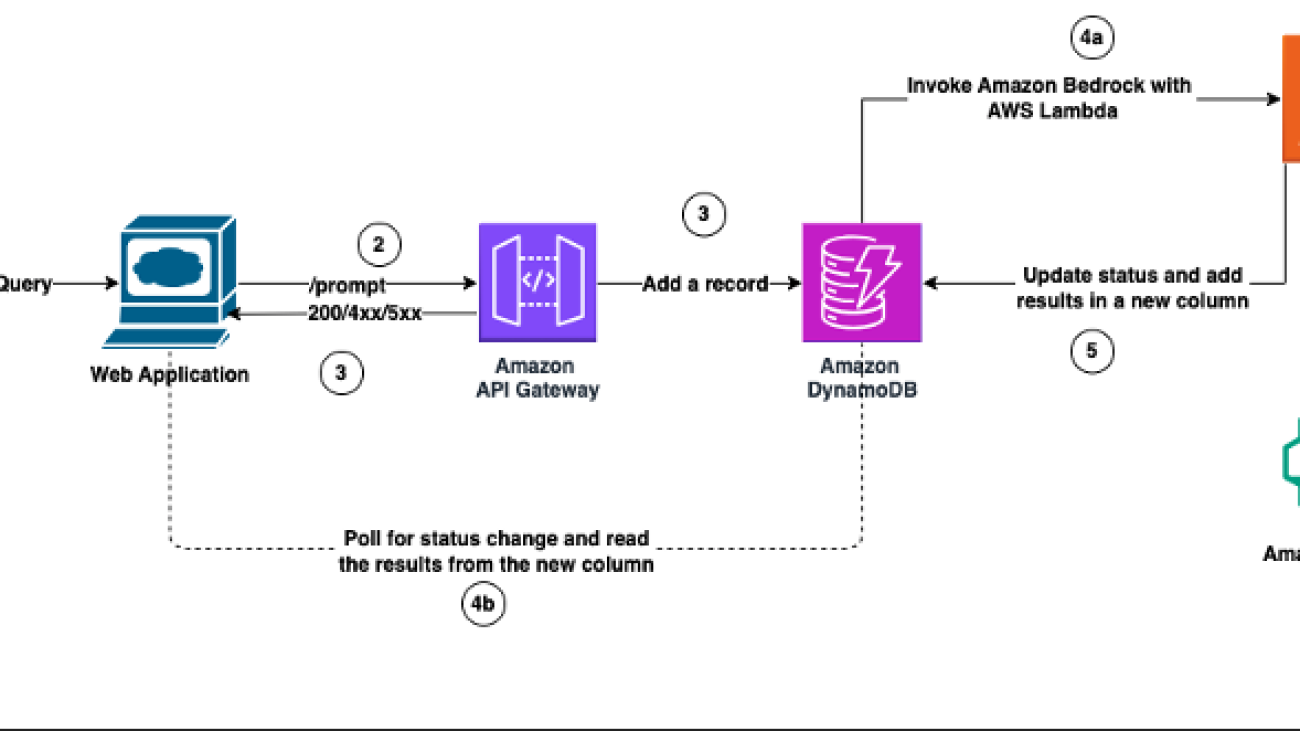

The following diagram illustrates the high-level workflow of the current solution:

The workflow consists of the following steps:

- The user navigates to Vidmob and asks a creative-related query.

- Amazon DynamoDB stores the query and the session ID, which are then passed to a Lambda function as a DynamoDB event notification.

- The Lambda function calls Amazon Bedrock, obtains an output from the user query, and sends it back to the Streamlit application for the user to view.

- The Lambda function updates the status after it receives the completed output from Amazon Bedrock.

In the following sections, we explore the details of the workflow, the dataset, and the results Vidmob achieved.
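The DynamoDB-to-Lambda-to-Bedrock steps in the workflow above can be sketched as a small handler. This is an illustration only, not Vidmob's implementation. The attribute names `session_id` and `query`, the `COMPLETE` status value, and the helper names are hypothetical. The client is passed in so the logic can be exercised without AWS access, and is assumed to behave like `boto3.client("bedrock-runtime")`.

```python
import json

MODEL_ID = "anthropic.claude-v2"  # Claude v2 on Amazon Bedrock, as named in the post


def build_claude_body(user_query, max_tokens=512):
    """Build a request body in the Claude v2 text-completion format."""
    return json.dumps({
        "prompt": f"\n\nHuman: {user_query}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
    })


def handle_stream_event(event, bedrock_client):
    """Answer each newly inserted query found in a DynamoDB stream event.

    `session_id` and `query` are hypothetical attribute names.
    """
    answers = []
    for record in event.get("Records", []):
        if record.get("eventName") != "INSERT":
            continue  # only newly stored queries trigger a model call
        image = record["dynamodb"]["NewImage"]
        response = bedrock_client.invoke_model(
            modelId=MODEL_ID,
            body=build_claude_body(image["query"]["S"]),
            contentType="application/json",
            accept="application/json",
        )
        completion = json.loads(response["body"].read())["completion"]
        answers.append({
            "session_id": image["session_id"]["S"],
            "answer": completion,
            "status": "COMPLETE",  # hypothetical status written back after completion
        })
    return answers
```

In a real Lambda function, the entry point would create the Bedrock client once at module load and pass it to `handle_stream_event`.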

Workflow details

After the user inputs a query, a prompt is automatically created and fed into a QA chatbot, which outputs a response. The main aspects of the LLM prompt include:

- Client description – Background information about the client. This includes the value proposition, brand identity, and competitive differentiators, which are generated by Anthropic Claude v2 on Amazon Bedrock.

- Aperture – Important aspects to take into account for a user question. For example, for a branding question such as “What is the best way to incorporate branding for my Meta creative,” the aperture might identify elements that include a logo, tagline, and sincere tone.

- Context – The filtered dataset of ad performance referenced by the QA bot.

- Question – The user query.
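As a rough illustration of how the four parts listed above might be combined into one prompt, consider the following sketch. The section titles, their order, and the function name `build_prompt` are assumptions. The post does not show the actual prompt template.

```python
def build_prompt(client_description, aperture, context, question):
    """Join the four prompt parts into a single labeled text block."""
    sections = [
        ("Client description", client_description),
        ("Aperture", aperture),
        ("Context", context),
        ("Question", question),
    ]
    # Each part gets its own labeled paragraph so the model can tell them apart.
    return "\n\n".join(f"{title}:\n{body.strip()}" for title, body in sections)


prompt = build_prompt(
    client_description="Example brand: value proposition, identity, differentiators.",
    aperture="logo, tagline, sincere tone",
    context="element,percent_lift\nlogo,4.2",
    question="What is the best way to incorporate branding for my Meta creative?",
)
```

Putting the question last keeps the user's request closest to the point where the model begins its answer, which is a common choice but not one the post confirms.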

The following screenshot shows the UI where the user can input the client and their ad-related question.

On the backend, a router determines the context (ad-related dataset) to use as a reference for answering the question. The choice depends on the question and the client and is made in the following steps:

- Determine whether the question should reference the objective dataset (general for an entire channel like TikTok, Meta, Pinterest) or placement dataset (specific sub-channels like Facebook Reels). For example, “What is the best way to incorporate branding in my Meta creative” is objective-based, whereas “What is the best way to incorporate branding for Facebook News Feed” is placement-based because it references a specific part of the Meta creative.

- Obtain the corresponding objective dataset for the client if the query is objective-based. If it’s placement-based, first filter the placement dataset to only columns that are relevant to the query and then pass in the resulting dataset.

- Pass the completed prompt to Anthropic’s Claude v2 model on Amazon Bedrock and display the outputs.
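The first two routing steps can be sketched as follows. The post does not say how the router classifies a question, so the keyword matching here is an assumption, as are the column names, the `element` key, and the function names.

```python
# Hypothetical mapping from a phrase in the question to a placement column.
PLACEMENT_COLUMNS = {
    "news feed": "Facebook News Feed",
    "reels": "Facebook Reels",
    "instream": "Facebook Instream",
    "facebook explore": "Facebook Explore",
}


def classify_question(question):
    """Return ("placement", column) if a sub-channel is named, else ("objective", None)."""
    lowered = question.lower()
    for keyword, column in PLACEMENT_COLUMNS.items():
        if keyword in lowered:
            return "placement", column
    return "objective", None


def select_context(question, objective_rows, placement_rows):
    """Pick the dataset the prompt should reference for this question."""
    kind, column = classify_question(question)
    if kind == "objective":
        return objective_rows
    # Placement-based: keep only the element name and the relevant sub-channel column.
    return [
        {"element": row["element"], column: row[column]}
        for row in placement_rows
        if column in row
    ]
```

The selected rows would then be serialized into the Context part of the prompt before the call to the model.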

The outputs are displayed as shown in the following screenshot.

Specifically, the outputs include the elements that best answer the question, why each element may be important, and each element’s corresponding percent lift for the creative.

Dataset

The dataset includes a set of ad-related data corresponding to a specific client. Specifically, Vidmob analyzes the client ad campaigns and extracts information related to the ads using various machine learning (ML) models and AWS services. The information about each campaign is collated into a single dataset (creative data). It notes how each element of a given creative performs under a certain metric; for example, how the CTA affects the view-through rate of the ad. The following two datasets were utilized:

- Creative strategist filtered performance data for each question – The dataset given was filtered by Vidmob creative strategists for their analysis. The filtered datasets include an element (such as logo or bright colors for a creative) as well as its corresponding average, percent lift (of a particular metric such as view-through rate), creative count, and impressions for each sub-channel (Facebook Explore, Reels, and so on).

- Unfiltered raw datasets – This dataset included objective-based and placement-based data for each client.

As we discussed earlier, there are two types of datasets for a particular client: objective-based and placement-based data. Objective data is used for answering generic user queries about ads for channels such as TikTok, Meta, or Pinterest, whereas placement data is used for answering specific questions about ads for sub-channels within Meta such as Facebook Reels, Instream, and News Feed. Questions such as “What are creative insights in my Meta creative” are more general and therefore reference the objective data, whereas questions such as “What are insights for Facebook News Feed” reference the News Feed statistics in the placement data.

The objective dataset includes elements and their corresponding average percent lift, creative count, p-values, and more for an entire channel, whereas placement data includes these same statistics for each sub-channel.

Results

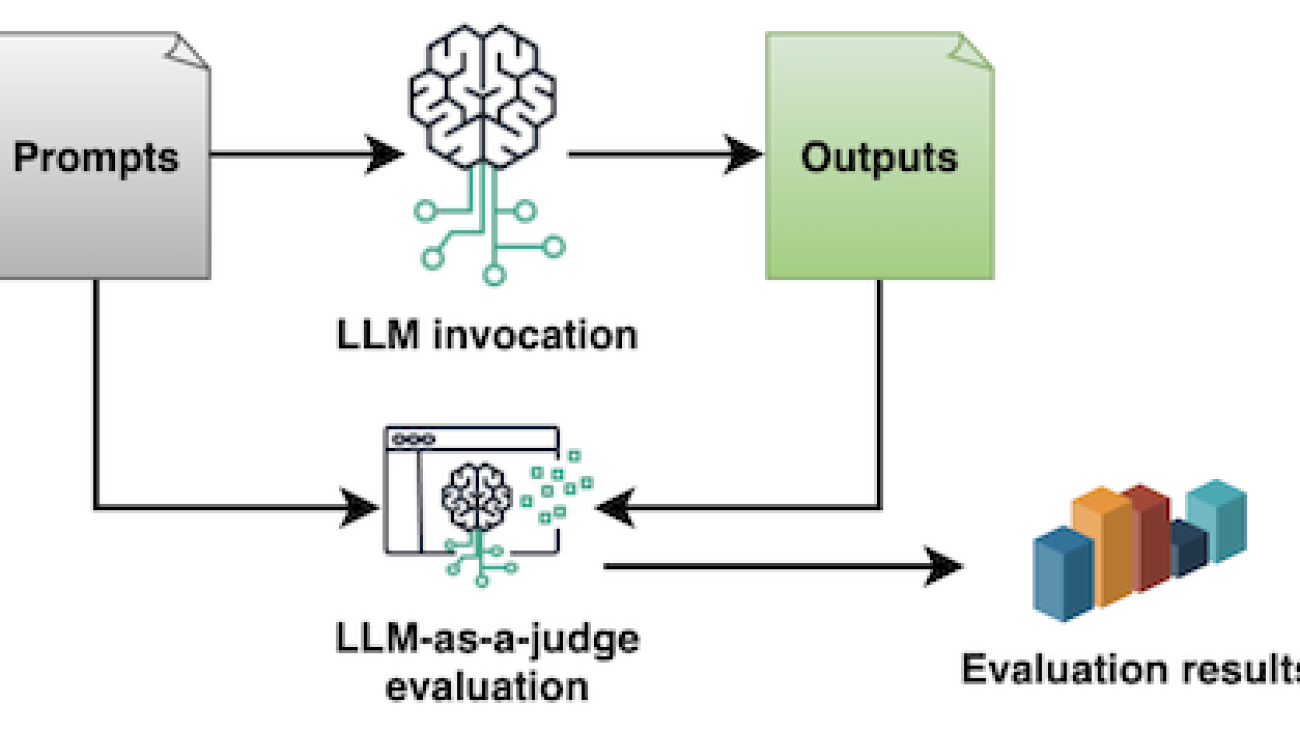

A set of questions were evaluated by the strategists for Vidmob, primarily for the following metrics:

- Accuracy – How closely the overall answer matches what you expect it to be

- Relevancy – How relevant the LLM-generated output is to the question (or in this case, the background information for the client)

- Clarity – How clear and understandable the outputs from the performance data and their insights are, and whether the LLM is making things up

The client background information for the prompt and a set of questions for the filtered and unfiltered data were evaluated.

Overall, the client background, generated by Anthropic’s Claude, outputted the value proposition, brand identity, and competitive differentiator for a given client. The accuracy and clarity were perfect, whereas relevancy was perfect for most samples. A score counts as perfect when subject matter experts give it 9/10 or 10/10 on the specific metric.

When answering a set of questions, the responses generally had high clarity. By adding extra tag information to filter the data, AWS GenAIIC incrementally improved the QA chatbot’s accuracy by 10% and its relevancy by 5%. Overall, Vidmob expects the time needed to generate insights for creative campaigns to drop from hours to minutes.

Conclusion

In this post, we shared how the AWS GenAIIC team used Anthropic’s Claude on Amazon Bedrock to extract and summarize insights from Vidmob’s performance data using zero-shot prompt engineering. With these services, creative strategists were able to understand client information through inherent knowledge of the LLM as well as answer user queries through added client background information and tag types such as messaging and branding. Such insights can be retrieved at scale and utilized for enhancing effective ad campaigns.

The success of this engagement gave Vidmob an opportunity to use generative AI to create more valuable insights for customers in less time, allowing for a more scalable solution.

This is just one of the ways AWS enables builders to deliver generative AI-based solutions. You can get started with Amazon Bedrock and see how it can be integrated in example code bases today. If you’re interested in working with the AWS Generative AI Innovation Center, reach out to AWS GenAIIC.

About the Authors

Mickey Alon is a serial entrepreneur and co-author of ‘Mastering Product-Led Growth.’ He co-founded Gainsight PX (Vista) and Insightera (Adobe), a real-time personalization engine. He previously led the global product development team at Marketo (Adobe) and currently serves as the CPTO at Vidmob, a leading creative intelligence platform powered by GenAI.


Suren Gunturu is a Data Scientist working in the Generative AI Innovation Center, where he works with various AWS customers to solve high-value business problems. He specializes in building ML pipelines using Large Language Models, primarily through Amazon Bedrock and other AWS Cloud services.


Gaurav Rele is a Senior Data Scientist at the Generative AI Innovation Center, where he works with AWS customers across different verticals to accelerate their use of generative AI and AWS Cloud services to solve their business challenges.


Vidya Sagar Ravipati is a Science Manager at the Generative AI Innovation Center, where he leverages his vast experience in large-scale distributed systems and his passion for machine learning to help AWS customers across different industry verticals accelerate their AI and cloud adoption.


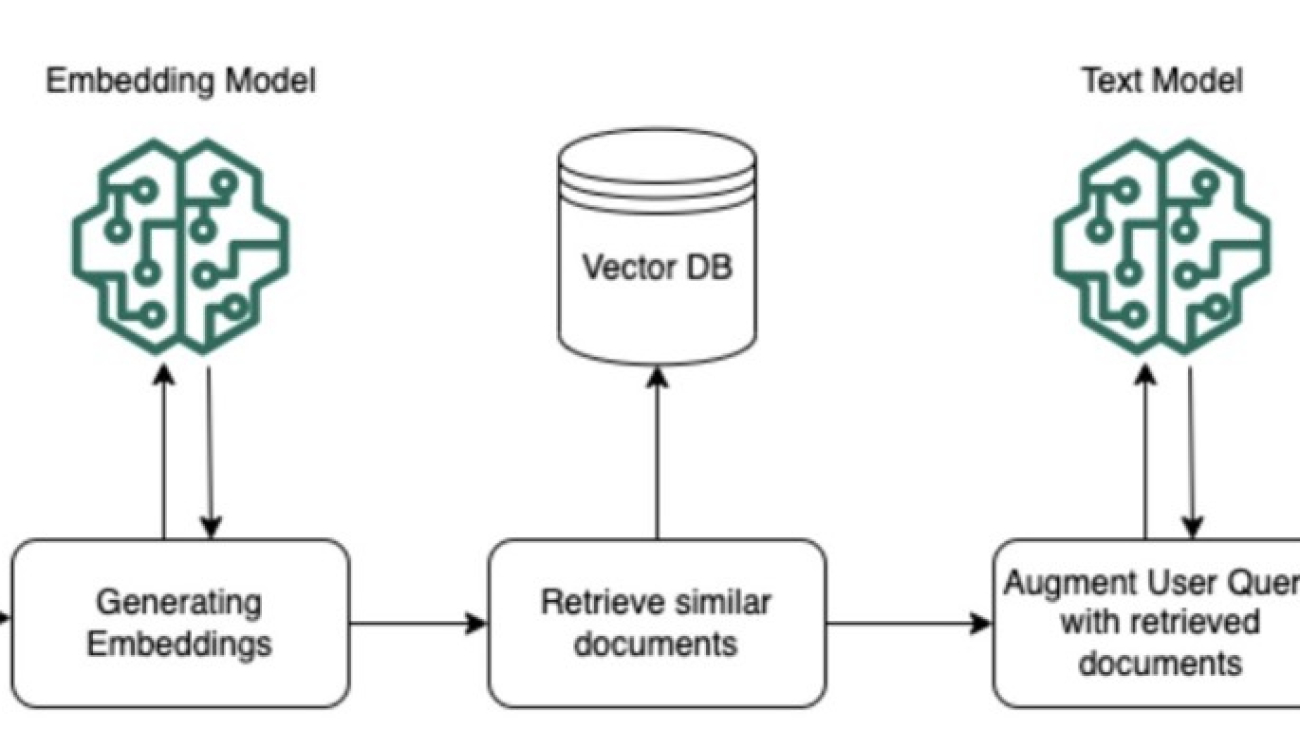

Fine-tune Llama 3 for text generation on Amazon SageMaker JumpStart

Generative artificial intelligence (AI) models have become increasingly popular and powerful, enabling a wide range of applications such as text generation, summarization, question answering, and code generation. However, despite their impressive capabilities, these models often struggle with domain-specific tasks or use cases due to their general training data. To address this challenge, fine-tuning these models on specific data is crucial for achieving optimal performance in specialized domains.

In this post, we demonstrate how to fine-tune the recently released Llama 3 models from Meta, specifically the llama-3-8b and llama-3-70b variants, using Amazon SageMaker JumpStart. The fine-tuning process is based on the scripts provided in the llama-recipes repo from Meta, utilizing techniques like PyTorch FSDP, PEFT/LoRA, and Int8 quantization for efficient fine-tuning of these large models on domain-specific datasets.

By fine-tuning the Meta Llama 3 models with SageMaker JumpStart, you can harness their improved reasoning, code generation, and instruction following capabilities tailored to your specific use cases.

Meta Llama 3 overview

Meta Llama 3 comes in two parameter sizes, 8B and 70B, both with an 8,000-token context length, and supports a broad range of use cases with improvements in reasoning, code generation, and instruction following. Meta Llama 3 uses a decoder-only transformer architecture and a new tokenizer with a 128,000-token vocabulary that improves model performance. In addition, Meta improved post-training procedures that substantially reduced false refusal rates, improved alignment, and increased diversity in model responses. You can now derive the combined advantages of Meta Llama 3 performance and MLOps controls with Amazon SageMaker features such as Amazon SageMaker Pipelines and Amazon SageMaker Debugger. In addition, the model will be deployed in an AWS secure environment under your virtual private cloud (VPC) controls, helping provide data security.

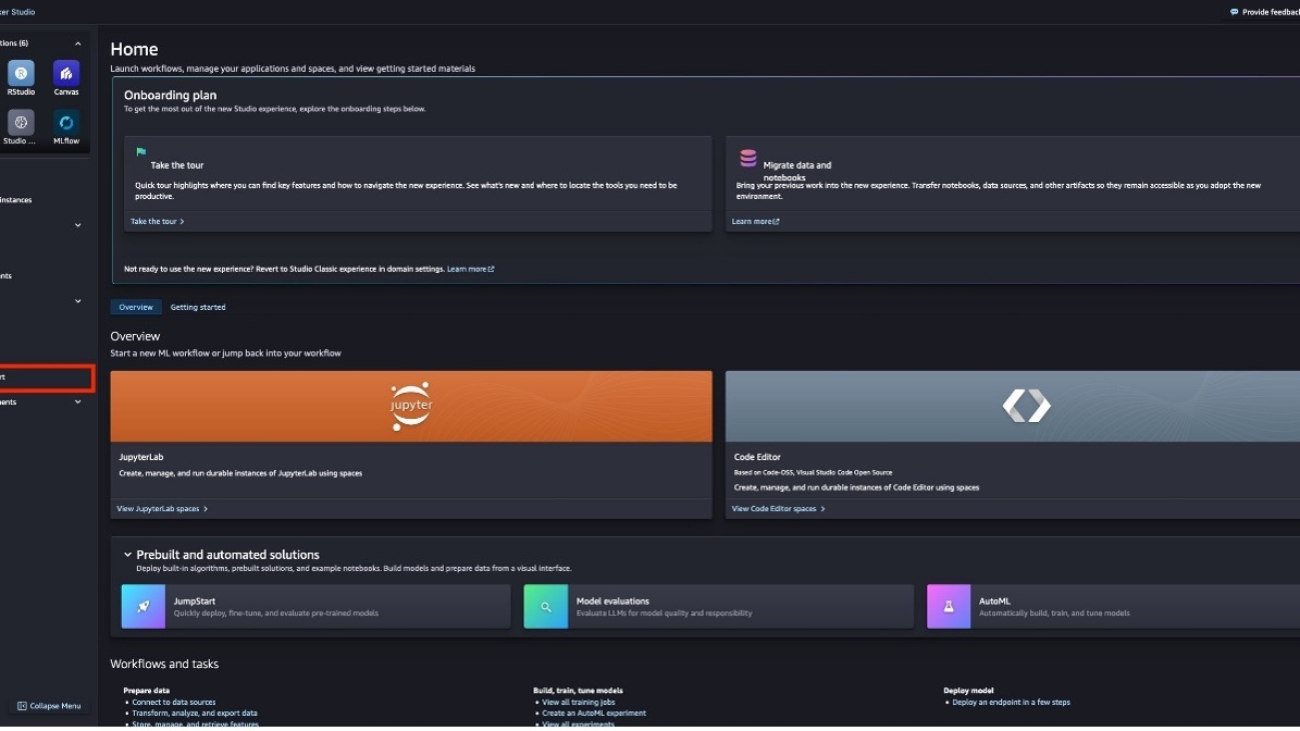

SageMaker JumpStart

SageMaker JumpStart is a powerful feature within the SageMaker machine learning (ML) environment that provides ML practitioners a comprehensive hub of publicly available and proprietary foundation models (FMs). With this managed service, ML practitioners get access to a growing list of cutting-edge models from leading model hubs and providers that they can deploy to dedicated SageMaker instances within a network isolated environment, and customize models using SageMaker for model training and deployment.

Prerequisites

To try out this solution using SageMaker JumpStart, you’ll need the following prerequisites:

- An AWS account that will contain all of your AWS resources.

- An AWS Identity and Access Management (IAM) role to access SageMaker. To learn more about how IAM works with SageMaker, refer to Identity and Access Management for Amazon SageMaker.

- Access to Amazon SageMaker Studio or a SageMaker notebook instance, or an interactive development environment (IDE) such as PyCharm or Visual Studio Code. We recommend using SageMaker Studio for straightforward deployment and inference.

Fine-tune Meta Llama 3 models

In this section, we discuss the steps to fine-tune Meta Llama 3 models. We’ll cover two approaches: using the SageMaker Studio UI for a no-code solution, and utilizing the SageMaker Python SDK.

No-code fine-tuning through the SageMaker Studio UI

SageMaker JumpStart provides access to publicly available and proprietary foundation models from third-party and proprietary providers. Data scientists and developers can quickly prototype and experiment with various ML use cases, accelerating the development and deployment of ML applications. It helps reduce the time and effort required to build ML models from scratch, allowing teams to focus on fine-tuning and customizing the models for their specific use cases. These models are released under different licenses designated by their respective sources. It’s essential to review and adhere to the applicable license terms before downloading or using these models to make sure they’re suitable for your intended use case.

You can access the Meta Llama 3 FMs through SageMaker JumpStart in the SageMaker Studio UI and the SageMaker Python SDK. In this section, we cover how to discover these models in SageMaker Studio.

SageMaker Studio is an IDE that offers a web-based visual interface for performing the ML development steps, from data preparation to model building, training, and deployment. For instructions on getting started and setting up SageMaker Studio, refer to Amazon SageMaker Studio.

When you’re in SageMaker Studio, you can access SageMaker JumpStart by choosing JumpStart in the navigation pane.

In the JumpStart view, you’re presented with the list of public models offered by SageMaker. You can explore other models from other providers in this view. To start using the Meta Llama 3 models, under Providers, choose Meta.

You’re presented with a list of the models available. Choose the Meta-Llama-3-8B-Instruct model.

Here you can view the model details, as well as train, deploy, optimize, and evaluate the model. For this demonstration, we choose Train.

On this page, you can point to the Amazon Simple Storage Service (Amazon S3) bucket containing the training and validation datasets for fine-tuning. In addition, you can configure deployment configuration, hyperparameters, and security settings for fine-tuning. Choose Submit to start the training job on a SageMaker ML instance.

Deploy the model

After the model is fine-tuned, you can deploy it using the model page on SageMaker JumpStart. The option to deploy the fine-tuned model will appear when fine-tuning is finished, as shown in the following screenshot.

You can also deploy the model from this view. You can configure endpoint settings such as the instance type, number of instances, and endpoint name. You will need to accept the End User License Agreement (EULA) before you can deploy the model.

Fine-tune using the SageMaker Python SDK

You can also fine-tune Meta Llama 3 models using the SageMaker Python SDK. A sample notebook with the full instructions can be found on GitHub. The following code example demonstrates how to fine-tune the Meta Llama 3 8B model:

The code sets up a SageMaker JumpStart estimator for fine-tuning the Meta Llama 3 large language model (LLM) on a custom training dataset. It configures the estimator with the desired model ID, accepts the EULA, enables instruction tuning by setting instruction_tuned="True", sets the number of training epochs, and initiates the fine-tuning process.
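A minimal sketch consistent with that description might look like the following. It assumes the SageMaker Python SDK is installed and AWS credentials are configured. The model ID string, the helper names, and the `train_data_location` argument (an Amazon S3 URI that you supply) are assumptions for illustration, so check the sample notebook for the exact values.

```python
def build_finetune_settings(model_id="meta-textgeneration-llama-3-8b", epochs=5):
    """Collect the estimator settings described in the text.

    The model ID is an assumed JumpStart identifier for Llama 3 8B.
    """
    return {
        "model_id": model_id,
        "environment": {"accept_eula": "true"},  # accepts the EULA
        "hyperparameters": {
            "instruction_tuned": "True",  # enable instruction tuning
            "epoch": str(epochs),         # number of training epochs
        },
    }


def launch_finetuning(settings, train_data_location):
    """Create the JumpStart estimator and start the fine-tuning job."""
    # Imported lazily so the settings helper works without the SDK installed.
    from sagemaker.jumpstart.estimator import JumpStartEstimator

    estimator = JumpStartEstimator(
        model_id=settings["model_id"],
        environment=settings["environment"],
    )
    estimator.set_hyperparameters(**settings["hyperparameters"])
    estimator.fit({"training": train_data_location})
    return estimator
```

Calling `launch_finetuning(build_finetune_settings(), "<your S3 training data URI>")` starts a billable training job, so review the instance type and dataset location first.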

When the fine-tuning job is complete, you can deploy the fine-tuned model directly from the estimator, as shown in the following code. As part of the deploy settings, you can define the instance type you want to deploy the model on. For the full list of deployment parameters, refer to the deploy parameters in the SageMaker SDK documentation.
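As a sketch of that deployment step, the helper below wraps the estimator's `deploy` call. The function name is hypothetical, and the default instance type is an assumption borrowed from the Llama 3 8B defaults listed later in this post.

```python
def deploy_finetuned(estimator, instance_type="ml.g5.12xlarge"):
    """Deploy a fitted estimator to a real-time endpoint and return its predictor.

    `estimator` is expected to be a fitted JumpStartEstimator.
    """
    return estimator.deploy(instance_type=instance_type)
```

The returned predictor is the object used for inference requests. Remember to delete the endpoint when you no longer need it to avoid ongoing charges.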

After the endpoint is up and running, you can perform an inference request against it using the predictor object as follows:
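A hedged sketch of such a request follows. The payload keys `inputs` and `parameters` with `max_new_tokens` follow the common text generation container convention. The response shape (a dict, or a list of dicts, with a `generated_text` key) is an assumption to verify against your endpoint, and the helper names are hypothetical.

```python
def build_payload(prompt, max_new_tokens=256):
    """Build the JSON payload for a text generation request."""
    return {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}


def generate(predictor, prompt, max_new_tokens=256):
    """Send the prompt to the endpoint and return the generated text, if present."""
    response = predictor.predict(build_payload(prompt, max_new_tokens))
    # Some containers wrap the result in a single-element list.
    if isinstance(response, list):
        response = response[0]
    return response.get("generated_text")
```

Here `predictor` is the object returned when the fine-tuned model is deployed from the estimator.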

For the full list of predictor parameters, refer to the predictor object in the SageMaker SDK documentation.

Fine-tuning technique

Language models such as Meta Llama are more than 10 GB or even 100 GB in size. Fine-tuning such large models requires instances with significantly higher CUDA memory. Furthermore, training these models can be very slow due to their size. Therefore, for efficient fine-tuning, we use the following optimizations:

- Low-Rank Adaptation (LoRA) – This is a type of parameter-efficient fine-tuning (PEFT) for large models. With this method, we freeze the whole model and add only a small set of adjustable parameters or layers to it. For instance, instead of training all 8 billion parameters for Llama 3 8B, we can fine-tune less than 1% of the parameters. This significantly reduces the memory requirement because we need to store gradients, optimizer states, and other training-related information for only 1% of the parameters. Furthermore, this helps reduce both training time and cost. For more details on this method, refer to LoRA: Low-Rank Adaptation of Large Language Models.

- Int8 quantization – Even with optimizations such as LoRA, models like Meta Llama 70B require significant computational resources for training. To reduce the memory footprint during training, we can employ Int8 quantization. Quantization typically reduces the precision of the floating-point data types. Although this decreases the memory required to store model weights, it can potentially degrade the performance due to loss of information. Int8 quantization uses only a quarter of the bits of full-precision (32-bit) training, yet it doesn’t incur significant degradation in performance. Instead of simply dropping bits, Int8 quantization rounds the data from one type to another, preserving the essential information while optimizing memory usage. To learn about Int8 quantization, refer to LLM.int8(): 8-bit Matrix Multiplication for Transformers at Scale.

- Fully Sharded Data Parallel (FSDP) – This is a type of data parallel training algorithm that shards the model’s parameters across data parallel workers and can optionally offload part of the training computation to the CPUs. Although the parameters are sharded across different GPUs, computation of each microbatch is local to the GPU worker. It shards parameters more uniformly and achieves optimized performance through communication and computation overlapping during training.
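To make the LoRA and Int8 savings above concrete, the following back-of-the-envelope sketch counts the trainable LoRA parameters and the weight storage at different precisions. It assumes the default hyperparameters from this post (rank 8 applied to q_proj and v_proj) and the published Llama 3 8B dimensions (hidden size 4096, key/value projection width 1024, 32 layers). The 8 billion figure is the rounded parameter count, so treat the results as estimates.

```python
def lora_param_count(in_features, out_features, rank):
    """LoRA adds two low-rank matrices: (in x rank) and (rank x out)."""
    return rank * (in_features + out_features)


HIDDEN = 4096        # assumed Llama 3 8B hidden size
KV_DIM = 1024        # assumed width of the v_proj output (grouped-query attention)
LAYERS = 32          # assumed number of transformer layers
RANK = 8             # lora_r default from this post
TOTAL_PARAMS = 8e9   # rounded model size

per_layer = (
    lora_param_count(HIDDEN, HIDDEN, RANK)    # q_proj adapter
    + lora_param_count(HIDDEN, KV_DIM, RANK)  # v_proj adapter
)
trainable = per_layer * LAYERS
trainable_fraction = trainable / TOTAL_PARAMS


def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to store the weights, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


fp32_gb = weight_memory_gb(TOTAL_PARAMS, 4)  # 32-bit floats
int8_gb = weight_memory_gb(TOTAL_PARAMS, 1)  # 8-bit integers

print(f"trainable LoRA parameters: {trainable:,} ({trainable_fraction:.4%} of the model)")
print(f"weights in FP32: {fp32_gb:.0f} GB, in Int8: {int8_gb:.0f} GB")
```

Under these assumptions the adapters hold about 3.4 million parameters, well under 1% of the model, and Int8 storage needs a quarter of the memory of 32-bit storage.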

The following table compares different methods with the two Meta Llama 3 models.

| Model | Default Instance Type | Supported Instance Types with Default Configuration | Default Setting | LoRA + FSDP | LoRA + No FSDP | Int8 Quantization + LoRA + No FSDP |
| --- | --- | --- | --- | --- | --- | --- |
| Llama 3 8B | ml.g5.12xlarge | ml.g5.12xlarge, ml.g5.24xlarge, ml.g5.48xlarge | LoRA + FSDP | Yes | Yes | Yes |
| Llama 3 70B | ml.g5.48xlarge | ml.g5.48xlarge | Int8 + LoRA + No FSDP | No | No | Yes |

Fine-tuning of Meta Llama models is based on scripts provided by the llama-recipes GitHub repo from Meta.

Training dataset format

SageMaker JumpStart currently supports datasets in both domain adaptation format and instruction tuning format. In this section, we specify an example dataset in both formats. For more details, refer to the Dataset formatting section in the appendix.

Domain adaptation format

The Meta Llama 3 text generation model can be fine-tuned on domain-specific datasets, enabling it to generate relevant text and tackle various natural language processing (NLP) tasks within a particular domain using few-shot prompting. This fine-tuning process involves providing the model with a dataset specific to the target domain. The dataset can be in various formats, such as CSV, JSON, or TXT files. For example, if you want to fine-tune the model for the domain of financial reports and filings, you could provide it with a text file containing SEC filings from a company like Amazon. The following is an excerpt from such a filing:

Instruction tuning format

In instruction fine-tuning, the model is fine-tuned for a set of NLP tasks described using instructions. This helps improve the model’s performance for unseen tasks with zero-shot prompts. In instruction tuning dataset format, you specify the template.json file describing the input and the output formats and the train.jsonl file with the training data item in each line.

The template.json file always has the following JSON format:

For instance, the following table shows the template.json and train.jsonl files for the Dolly and Dialogsum datasets.

| Dataset | Use Case | template.json | train.jsonl |
| --- | --- | --- | --- |
| Dolly | Question Answering | { "prompt": "Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n### Instruction:\n{instruction}\n\n### Input:\n{context}\n\n", "completion": " {response}" } | { "instruction": "Who painted the Two Monkeys", "context": "Two Monkeys or Two Chained Monkeys is a 1562 painting by Dutch and Flemish Renaissance artist Pieter Bruegel the Elder. The work is now in the Gemäldegalerie (Painting Gallery) of the Berlin State Museums.", "response": "The two Monkeys or Two Chained Monkeys is a 1562 painting by Dutch and Flemish Renaissance artist Pieter Bruegel the Elder. The work is now in the Gemaeldegalerie (Painting Gallery) of the Berlin State Museums." } |
| Dialogsum | Text Summarization | { "prompt": "Below is a Instruction that holds conversation which describes discussion between two people.Write a response that appropriately summarizes the conversation.\n\n### Instruction:\n{dialogue}\n\n", "completion": " {summary}" } | { "dialogue": "#Person1#: Where do these flower vases come from? \n#Person2#: They are made a town nearby. The flower vases are made of porcelain and covered with tiny bamboo sticks. \n#Person1#: Are they breakable? \n#Person2#: No. They are not only ornmamental, but also useful. \n#Person1#: No wonder it’s so expensive. ", "summary": "#Person2# explains the flower vases’ materials and advantages and #Person1# understands why they’re expensive." } |
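To illustrate how a template.json entry and one train.jsonl line fit together, the sketch below fills the template placeholders with the fields of a record. It uses Python string formatting as a stand-in, which is an assumption. The post does not describe how SageMaker JumpStart applies the template internally, and the function names and shortened sample text are hypothetical.

```python
import json

# A template in the two-key shape shown in the table: "prompt" and "completion".
template = {
    "prompt": (
        "Below is an instruction that describes a task, paired with an input "
        "that provides further context. Write a response that appropriately "
        "completes the request.\n\n### Instruction:\n{instruction}\n\n"
        "### Input:\n{context}\n\n"
    ),
    "completion": " {response}",
}


def load_jsonl(text):
    """Parse JSON Lines text: one JSON object per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def render_example(template, record):
    """Fill the {placeholders} in the template with the record's fields."""
    return {
        "prompt": template["prompt"].format(**record),
        "completion": template["completion"].format(**record),
    }


train_jsonl = (
    '{"instruction": "Who painted the Two Monkeys", '
    '"context": "Two Monkeys is a 1562 painting by Pieter Bruegel the Elder.", '
    '"response": "Pieter Bruegel the Elder."}\n'
)
records = load_jsonl(train_jsonl)
example = render_example(template, records[0])
```

Each placeholder name in template.json must match a key in every train.jsonl record. With this formatting approach a missing key raises a `KeyError`, which makes such mismatches easy to spot before training.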

Supported hyperparameters for training

The fine-tuning process for Meta Llama 3 models allows you to customize various hyperparameters, each of which can influence factors such as memory consumption, training speed, and the performance of the fine-tuned model. At the time of writing this post, the following are the default hyperparameter values. For the most up-to-date information, refer to the SageMaker Studio console, because these values may be subject to change.

- epoch – The number of passes that the fine-tuning algorithm takes through the training dataset. Must be an integer greater than 1. Default is 5.

- learning_rate – The rate at which the model weights are updated after working through each batch of training examples. Must be a positive float greater than 0. Default is 0.0001.

- lora_r – Lora R dimension. Must be a positive integer. Default is 8.

- lora_alpha – Lora Alpha. Must be a positive integer. Default is 32.

- target_modules – Target modules for LoRA fine-tuning. You can specify a subset of ['q_proj','v_proj','k_proj','o_proj','gate_proj','up_proj','down_proj'] modules as a string separated by a comma without any spaces. Default is q_proj,v_proj.

- lora_dropout – Lora Dropout. Must be a positive float between 0 and 1. Default is 0.05.

- instruction_tuned – Whether to instruction-train the model or not. At most one of instruction_tuned and chat_dataset can be True. Must be True or False. Default is False.

- chat_dataset – If True, dataset is assumed to be in chat format. At most one of instruction_tuned and chat_dataset can be True. Default is False.

- add_input_output_demarcation_key – For an instruction tuned dataset, if this is True, a demarcation key ("### Response:\n") is added between the prompt and completion before training. Default is True.

- per_device_train_batch_size – The batch size per GPU core/CPU for training. Default is 1.

- per_device_eval_batch_size – The batch size per GPU core/CPU for evaluation. Default is 1.

- max_train_samples – For debugging purposes or quicker training, truncate the number of training examples to this value. Value -1 means using all of the training samples. Must be a positive integer or -1. Default is -1.

- max_val_samples – For debugging purposes or quicker training, truncate the number of validation examples to this value. Value -1 means using all of the validation samples. Must be a positive integer or -1. Default is -1.

- seed – Random seed that will be set at the beginning of training. Default is 10.

- max_input_length – Maximum total input sequence length after tokenization. Sequences longer than this will be truncated. If -1, max_input_length is set to the minimum of 1024 and the maximum model length defined by the tokenizer. If set to a positive value, max_input_length is set to the minimum of the provided value and the model_max_length defined by the tokenizer. Must be a positive integer or -1. Default is -1.

- validation_split_ratio – If validation channel is None, ratio of train-validation split from the train data must be between 0–1. Default is 0.2.

- train_data_split_seed – If validation data is not present, this fixes the random splitting of the input training data to training and validation data used by the algorithm. Must be an integer. Default is 0.

- preprocessing_num_workers – The number of processes to use for preprocessing. If None, the main process is used for preprocessing. Default is None.

- int8_quantization – If True, the model is loaded with 8-bit precision for training. Default for 8B is False. Default for 70B is True.

- enable_fsdp – If True, training uses FSDP. Default for 8B is True. Default for 70B is False.
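Several of the constraints listed above can be checked locally before a training job is submitted. The following is a minimal sketch of such a check, not part of the SageMaker SDK; the function name check_hyperparameters is hypothetical, and it assumes hyperparameter values may be passed either as strings or as native Python values.

```python
ALLOWED_TARGET_MODULES = {
    "q_proj", "v_proj", "k_proj", "o_proj", "gate_proj", "up_proj", "down_proj",
}


def is_true(value) -> bool:
    """Interpret True or the string 'True' (any casing) as true."""
    return str(value).lower() == "true"


def check_hyperparameters(hp: dict) -> list:
    """Return a list of problems found in a hyperparameter dictionary (hypothetical helper)."""
    problems = []

    # At most one of instruction_tuned and chat_dataset can be True.
    if is_true(hp.get("instruction_tuned", False)) and is_true(hp.get("chat_dataset", False)):
        problems.append("instruction_tuned and chat_dataset cannot both be True")

    # target_modules is a comma-separated string without spaces.
    modules = str(hp.get("target_modules", "q_proj,v_proj"))
    if " " in modules:
        problems.append("target_modules must not contain spaces")
    unknown = [m for m in modules.replace(" ", "").split(",") if m not in ALLOWED_TARGET_MODULES]
    if unknown:
        problems.append(f"unknown target_modules: {unknown}")

    # max_input_length must be a positive integer or -1.
    max_input_length = int(hp.get("max_input_length", -1))
    if max_input_length != -1 and max_input_length <= 0:
        problems.append("max_input_length must be a positive integer or -1")

    return problems


print(check_hyperparameters({"instruction_tuned": "True", "chat_dataset": "True"}))
```

A dictionary that passes the check could then be handed to the estimator's hyperparameter setter; the check only covers the rules stated in the list above and is not exhaustive.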

Instance types and compatible hyperparameters

The memory requirement during fine-tuning may vary based on several factors:

- Model type – The 8B model has the smallest GPU memory requirement and the 70B model has the largest memory requirement

- Max input length – A higher value of input length leads to processing more tokens at a time and as such requires more CUDA memory

- Batch size – A larger batch size requires larger CUDA memory and therefore requires larger instance types

- Int8 quantization – If using Int8 quantization, the model is loaded into low precision mode and therefore requires less CUDA memory

To help you get started, we provide a set of combinations of different instance types, hyperparameters, and model types that can be successfully fine-tuned. You can select a configuration as per your requirements and availability of instance types. We fine-tuned both models on a variety of settings for three epochs on a subset of the Dolly dataset with summarization examples.

8B model

| Instance Type | Max Input Length | Per Device Batch Size | Int8 Quantization | Enable FSDP | Time Taken (Minutes) |

| ml.g4dn.12xlarge | 1024 | 2 | TRUE | FALSE | 202 |

| ml.g4dn.12xlarge | 2048 | 2 | TRUE | FALSE | 192 |

| ml.g4dn.12xlarge | 1024 | 2 | FALSE | TRUE | 98 |

| ml.g4dn.12xlarge | 1024 | 4 | TRUE | FALSE | 200 |

| ml.g5.12xlarge | 2048 | 2 | TRUE | FALSE | 73 |

| ml.g5.12xlarge | 1024 | 2 | TRUE | FALSE | 88 |

| ml.g5.12xlarge | 2048 | 2 | FALSE | TRUE | 24 |

| ml.g5.12xlarge | 1024 | 2 | FALSE | TRUE | 35 |

| ml.g5.12xlarge | 2048 | 4 | TRUE | FALSE | 72 |

| ml.g5.12xlarge | 1024 | 4 | TRUE | FALSE | 83 |

| ml.g5.12xlarge | 1024 | 4 | FALSE | TRUE | 25 |

| ml.g5.12xlarge | 1024 | 8 | TRUE | FALSE | 83 |

| ml.g5.24xlarge | 2048 | 2 | TRUE | FALSE | 73 |

| ml.g5.24xlarge | 1024 | 2 | TRUE | FALSE | 86 |

| ml.g5.24xlarge | 2048 | 2 | FALSE | TRUE | 24 |

| ml.g5.24xlarge | 1024 | 2 | FALSE | TRUE | 35 |

| ml.g5.24xlarge | 2048 | 4 | TRUE | FALSE | 72 |

| ml.g5.24xlarge | 1024 | 4 | TRUE | FALSE | 83 |

| ml.g5.24xlarge | 1024 | 4 | FALSE | TRUE | 25 |

| ml.g5.24xlarge | 1024 | 8 | TRUE | FALSE | 82 |

| ml.g5.48xlarge | 2048 | 2 | TRUE | FALSE | 73 |

| ml.g5.48xlarge | 1024 | 2 | TRUE | FALSE | 87 |

| ml.g5.48xlarge | 2048 | 2 | FALSE | TRUE | 27 |

| ml.g5.48xlarge | 1024 | 2 | FALSE | TRUE | 48 |

| ml.g5.48xlarge | 2048 | 4 | TRUE | FALSE | 71 |

| ml.g5.48xlarge | 1024 | 4 | TRUE | FALSE | 82 |

| ml.g5.48xlarge | 1024 | 4 | FALSE | TRUE | 32 |

| ml.g5.48xlarge | 1024 | 8 | TRUE | FALSE | 81 |

| ml.p3dn.24xlarge | 2048 | 2 | TRUE | FALSE | 104 |

| ml.p3dn.24xlarge | 1024 | 2 | TRUE | FALSE | 114 |

70B model

| Instance Type | Max Input Length | Per Device Batch Size | Int8 Quantization | Enable FSDP | Time Taken (Minutes) |

| ml.g5.48xlarge | 1024 | 1 | TRUE | FALSE | 461 |

| ml.g5.48xlarge | 2048 | 1 | TRUE | FALSE | 418 |

| ml.g5.48xlarge | 1024 | 2 | TRUE | FALSE | 423 |

Recommendations on instance types and hyperparameters

Regarding the accuracy of the fine-tuned model, keep in mind the following:

- Larger models such as 70B provide better performance than 8B

- Performance without Int8 quantization is better than performance with Int8 quantization

Note the following training time and CUDA memory requirements:

- Setting int8_quantization=True decreases the memory requirement and leads to faster training.

- Decreasing per_device_train_batch_size and max_input_length reduces the memory requirement and therefore can be run on smaller instances. However, setting very low values may increase the training time.

- If you’re not using Int8 quantization (int8_quantization=False), use FSDP (enable_fsdp=True) for faster and efficient training.
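The timings reported in the 8B table can be put side by side to compare the two modes. The following sketch pairs the ml.g5.12xlarge rows by max input length and batch size. The numbers are copied from the table; the helper name pair_by_setting is hypothetical.

```python
# (max_input_length, per_device_batch_size, int8_quantization, enable_fsdp, minutes)
# Rows for ml.g5.12xlarge, copied from the 8B table.
rows = [
    (2048, 2, True, False, 73),
    (1024, 2, True, False, 88),
    (2048, 2, False, True, 24),
    (1024, 2, False, True, 35),
    (2048, 4, True, False, 72),
    (1024, 4, True, False, 83),
    (1024, 4, False, True, 25),
    (1024, 8, True, False, 83),
]


def pair_by_setting(rows):
    """Group timings by (max_input_length, batch size) and keep settings measured in both modes."""
    by_setting = {}
    for max_len, batch, int8, fsdp, minutes in rows:
        mode = "int8" if int8 else "fsdp"
        by_setting.setdefault((max_len, batch), {})[mode] = minutes
    return {key: modes for key, modes in by_setting.items() if len(modes) == 2}


paired = pair_by_setting(rows)
for (max_len, batch), minutes in sorted(paired.items()):
    print(f"max_input_length={max_len}, batch={batch}: "
          f"int8={minutes['int8']} min, fsdp={minutes['fsdp']} min")
```

In these measurements, the FSDP runs without Int8 quantization finished faster than the Int8 runs at the same settings on this instance type.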

When choosing the instance type, consider the following:

- At the time of writing this post, the G5 instances provided the most efficient training among the supported instance types. However, because AWS regularly updates and introduces new instance types, we recommend that you validate the recommended instance type for Meta Llama 3 fine-tuning in the SageMaker documentation or SageMaker console before proceeding.

- Training time largely depends on the number of GPUs and the CUDA memory available. Training time on instances with the same number of GPUs (for example, ml.g5.2xlarge and ml.g5.4xlarge) is therefore roughly the same, so you can use the more cost-effective instance for training (ml.g5.2xlarge).

To learn about the cost of training per instance, refer to Amazon EC2 G5 Instances.

If your dataset is in instruction tuning format, where each sample consists of an instruction (input) and the desired model response (completion), and these input+completion sequences are short (for example, 50–100 words), using a high value for max_input_length can lead to poor performance. This is because the model may struggle to focus on the relevant information when dealing with a large number of padding tokens, and it can also lead to inefficient use of computational resources. The default value of -1 corresponds to a max_input_length of 1024 for Llama models. We recommend setting max_input_length to a smaller value (for example, 200–400) when working with datasets containing shorter input+completion sequences to mitigate these issues and potentially improve the model’s performance and efficiency.
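The rule for resolving max_input_length, and the effect of a large value on short samples, can be sketched as follows. This is an illustration under stated assumptions: the tokenizer limit of 8192 and the token counts are made-up example values, and padding_fraction assumes every sequence is padded to max_input_length, which may not match the actual training script.

```python
def effective_max_input_length(max_input_length: int, model_max_length: int) -> int:
    """Resolve max_input_length as described in the hyperparameter list."""
    if max_input_length == -1:
        return min(1024, model_max_length)
    if max_input_length <= 0:
        raise ValueError("max_input_length must be a positive integer or -1")
    return min(max_input_length, model_max_length)


def padding_fraction(token_counts, max_input_length: int) -> float:
    """Share of token slots that hold padding, assuming padding to max_input_length."""
    real_tokens = sum(min(count, max_input_length) for count in token_counts)
    total_slots = len(token_counts) * max_input_length
    return 1 - real_tokens / total_slots


# Assumed values for illustration only.
model_max_length = 8192
token_counts = [120, 150, 90]  # prompt + completion lengths of three short samples

for setting in (-1, 256):
    length = effective_max_input_length(setting, model_max_length)
    print(setting, length, round(padding_fraction(token_counts, length), 3))
```

Measuring the token counts of your own prompt and completion pairs with the model tokenizer gives a more reliable basis for choosing the value than a fixed range.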

Lastly, due to the high demand of the G5 instances, you may experience unavailability of these instances in your AWS Region with the error “CapacityError: Unable to provision requested ML compute capacity. Please retry using a different ML instance type.” If you experience this error, retry the training job or try a different Region.

Issues when fine-tuning large models

In this section, we discuss two issues when fine-tuning very large models.

Disable output compression

By default, the output of a training job is a trained model that is compressed in a .tar.gz format before it’s uploaded to Amazon S3. However, for large models like the 70B model, this compression step can be time-consuming, taking more than 4 hours. To mitigate this delay, it’s recommended to use the disable_output_compression feature supported by the SageMaker training environment. When disable_output_compression is set to True, the model is uploaded without any compression, which can significantly reduce the time taken for large model artifacts to be uploaded to Amazon S3. The uncompressed model can then be used directly for deployment or further processing. The following code shows how to pass this parameter into the SageMaker JumpStart estimator:
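A minimal sketch of that call, assuming the SageMaker Python SDK is installed and AWS credentials are configured. The model ID is an assumption for illustration; use the ID from your own fine-tuning setup. The keyword arguments live in a separate hypothetical helper so they can be inspected without contacting AWS.

```python
def estimator_kwargs(model_id: str) -> dict:
    """Keyword arguments for a JumpStart estimator with output compression disabled."""
    return {
        "model_id": model_id,
        # Accept the end-user license agreement for the model.
        "environment": {"accept_eula": "true"},
        # Upload the trained model to Amazon S3 without creating a .tar.gz archive.
        "disable_output_compression": True,
    }


def make_estimator(model_id: str):
    """Create the estimator; requires the SageMaker Python SDK."""
    # Imported lazily so that estimator_kwargs works without the SDK installed.
    from sagemaker.jumpstart.estimator import JumpStartEstimator

    return JumpStartEstimator(**estimator_kwargs(model_id))


# Assumed model ID, for illustration only.
kwargs = estimator_kwargs("meta-textgeneration-llama-3-70b")
print(kwargs)
```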

SageMaker Studio kernel timeout issue

Due to the size of the Meta Llama 3 70B model, the training job may take several hours to complete. The SageMaker Studio kernel is only used to initiate the training job, and its status doesn’t affect the ongoing training process. After the training job starts, the compute resources allocated for the job will continue running the training process, regardless of whether the SageMaker Studio kernel remains active or times out. If the kernel times out during the lengthy training process, you can still deploy the endpoint after training is complete using the training job name with the following code:
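A minimal sketch of this step, assuming the SageMaker Python SDK is installed and AWS credentials are configured. The wrapper function name is hypothetical, and the arguments in the commented call are placeholders to be replaced with your own values.

```python
def deploy_from_training_job(training_job_name: str, model_id: str):
    """Re-attach to a finished training job and deploy its model (hypothetical wrapper)."""
    # Imported lazily; requires the SageMaker Python SDK and AWS credentials.
    from sagemaker.jumpstart.estimator import JumpStartEstimator

    attached_estimator = JumpStartEstimator.attach(training_job_name, model_id)
    attached_estimator.logs()  # display the logs of the training job
    return attached_estimator.deploy()  # returns a predictor for the new endpoint


# Example call (placeholders, not executed here):
# predictor = deploy_from_training_job("<training-job-name>", "<model-id>")
```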

To find the training job name, navigate to the SageMaker console and under Training in the navigation pane, choose Training jobs. Identify the training job name and substitute it in the preceding code.

Clean up

To prevent incurring unnecessary charges, it’s recommended to clean up the deployed resources when you’re done using them. You can remove the deployed model with the following code:
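A minimal sketch of the cleanup, assuming predictor is the object returned by the deploy call of the SageMaker Python SDK. The wrapper name clean_up is hypothetical.

```python
def clean_up(predictor) -> None:
    """Delete the model and the endpoint behind a SageMaker predictor."""
    predictor.delete_model()
    predictor.delete_endpoint()


# Example call (not executed here):
# clean_up(predictor)
```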

Conclusion

In this post, we discussed fine-tuning Meta Llama 3 models using SageMaker JumpStart. We showed that you can use the SageMaker JumpStart console in SageMaker Studio or the SageMaker Python SDK to fine-tune and deploy these models. We also discussed the fine-tuning technique, instance types, and supported hyperparameters. In addition, we outlined recommendations for optimized training based on various tests we carried out.

The results for fine-tuning the two models over two datasets are shown in the appendix at the end of this post. As we can see from these results, fine-tuning improves summarization compared to non-fine-tuned models.

As a next step, you can try fine-tuning these models on your own dataset using the code provided in the GitHub repository to test and benchmark the results for your use cases.

About the Authors

Ben Friebe is a Senior Solutions Architect at Amazon Web Services, based in Brisbane, Australia. He likes computers.

Pavan Kumar Rao Navule is a Solutions Architect at Amazon Web Services, where he works with ISVs in India to help them innovate on the AWS platform. He specializes in architecting AI/ML and generative AI services at AWS. Pavan is a published author of the book “Getting Started with V Programming.” In his free time, Pavan enjoys listening to the great magical voices of Sia and Rihanna.

Khush Patel is a Solutions Architect at Amazon Web Services based out of Houston, Texas. He’s passionate about working with customers to deliver business value using technology. He has extensive experience with customers working with machine learning and generative AI workloads. In his free time, Khush enjoys watching sports and reading.

Dr. Farooq Sabir is a Senior Artificial Intelligence and Machine Learning Specialist Solutions Architect at AWS. He holds PhD and MS degrees in Electrical Engineering from the University of Texas at Austin and an MS in Computer Science from Georgia Institute of Technology. He has over 15 years of work experience and also likes to teach and mentor college students. At AWS, he helps customers formulate and solve their business problems in data science, machine learning, computer vision, artificial intelligence, numerical optimization, and related domains. Based in Dallas, Texas, he and his family love to travel and go on long road trips.

Appendix

This appendix provides additional information about performance benchmarking and dataset formatting.

Performance benchmarking

In this section, we provide results for fine-tuning the two Meta Llama 3 models (8B and 70B) on two different datasets: Dolly and Dialogsum. For the Dolly dataset, our task is to summarize a paragraph of text, whereas for Dialogsum, we are fine-tuning the model to summarize a discussion between two people. In the following tables, we show the input to the model (prompt and instructions), ground truth (summary), response from the pre-trained Meta Llama 3 model, and response from the fine-tuned Meta Llama 3 model for each of the models. We show inference results for five data points. As the following tables show, the summaries improve for both datasets when we fine-tune the models.

Results for fine-tuning the Meta Llama 3 8B text generation model on the Dolly dataset

| Inputs | Ground Truth | Response from Non-Fine-Tuned Model | Response from Fine-Tuned Model |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n### Instruction:\nCan you generate a 2 lines abstract of this text?\n\n### Input:\nIn physics, gravity (from Latin gravitas ‘weight’) is a fundamental interaction which causes mutual attraction between all things with mass or energy. Gravity is, by far, the weakest of the four fundamental interactions, approximately 1038 times weaker than the strong interaction, 1036 times weaker than the electromagnetic force and 1029 times weaker than the weak interaction. As a result, it has no significant influence at the level of subatomic particles. However, gravity is the most significant interaction between objects at the macroscopic scale, and it determines the motion of planets, stars, galaxies, and even light.\n\nOn Earth, gravity gives weight to physical objects, and the Moon’s gravity is responsible for sublunar tides in the oceans (the corresponding antipodal tide is caused by the inertia of the Earth and Moon orbiting one another). Gravity also has many important biological functions, helping to guide the growth of plants through the process of gravitropism and influencing the circulation of fluids in multicellular organisms. Investigation into the effects of weightlessness has shown that gravity may play a role in immune system function and cell differentiation within the human body.\n\nThe gravitational attraction between the original gaseous matter in the universe allowed it to coalesce and form stars which eventually condensed into galaxies, so gravity is responsible for many of the large-scale structures in the universe. Gravity has an infinite range, although its effects become weaker as objects get farther away.\n\nGravity is most accurately described by the general theory of relativity (proposed by Albert Einstein in 1915), which describes gravity not as a force, but as the curvature of spacetime, caused by the uneven distribution of mass, and causing masses to move along geodesic lines. The most extreme example of this curvature of spacetime is a black hole, from which nothing—not even light—can escape once past the black hole’s event horizon However, for most applications, gravity is well approximated by Newton’s law of universal gravitation, which describes gravity as a force causing any two bodies to be attracted toward each other, with magnitude proportional to the product of their masses and inversely proportional to the square of the distance between them.\n\n\n\n### Response:\n | In general physics theory (Newton), Gravity is a mutual attraction between objects based on their respective masses and the square of the distance which separates them. In the relativity theory (Einstein), it’s a curvature of the spacetime generated by the objects themselves. | Gravity is a fundamental interaction which causes mutual attraction between all things with mass or energy. It is the weakest of the four fundamental interactions, but it is the most significant interaction between objects at the macroscopic scale, and it determines the motion of planets, stars, galaxies, and even light. Gravity gives weight to physical objects, and the Moon’s gravity is responsible for sublunar tides in the oceans. It also has many important biological functions, helping to guide the growth of plants through the process | Gravity is a fundamental interaction which causes mutual attraction between all things with mass or energy. Gravity is, by far, the weakest of the four fundamental interactions, approximately 1038 times weaker than the strong interaction, 1036 times weaker than the electromagnetic force and 1029 times weaker than the weak interaction. Gravity is most accurately described by the general theory of relativity (proposed by Albert Einstein in 1915), which describes gravity not as a force, but as the curvature of spacetime |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n### Instruction:\nWhat is ProSavin\n\n### Input:\nProSavin is an experimental drug believed to be of use in the treatment of Parkinson’s disease. It is administered to the striatum in the brain, inducing production of dopamine.\n\nIt is manufactured by Oxford BioMedica. Results from a Phase I/II clinical trial were published in the Lancet and showed safety, but little efficacy. ProSavin was superseded by AXO-Lenti-PD (OXB-102), an optimized version of the drug.\n\n\n\n### Response:\n | ProSavin is an experimental drug believed to be of use in the treatment of Parkinson’s disease. It is administered to the striatum in the brain, inducing production of dopamine. | ProSavin is an experimental drug believed to be of use in the treatment of Parkinson’s disease. It is administered to the striatum in the brain, inducing production of dopamine.\n\nIt is manufactured by Oxford BioMedica. Results from a Phase I/II clinical trial were published in the Lancet and showed safety, but little efficacy. ProSavin was superseded by AXO-Lenti-PD (OXB-102), an optimized version of the drug. | ProSavin is an experimental drug believed to be of use in the treatment of Parkinson’s disease. It is administered to the striatum in the brain, inducing production of dopamine.\n\nIt is manufactured by Oxford BioMedica. Results from a Phase I/II clinical trial were published in the Lancet and showed safety, but little efficacy. ProSavin was superseded by AXO-Lenti-PD (OXB-102), an optimized version of the drug. |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n### Instruction:\nWho were the Beatles?\n\n### Input:\nThe Beatles were an English rock band, formed in Liverpool in 1960, that comprised John Lennon, Paul McCartney, George Harrison and Ringo Starr. They are regarded as the most influential band of all time and were integral to the development of 1960s counterculture and popular music’s recognition as an art form. Rooted in skiffle, beat and 1950s rock ‘n’ roll, their sound incorporated elements of classical music and traditional pop in innovative ways; the band also explored music styles ranging from folk and Indian music to psychedelia and hard rock. As pioneers in recording, songwriting and artistic presentation, the Beatles revolutionised many aspects of the music industry and were often publicised as leaders of the era’s youth and sociocultural movements.\n\n\n\n### Response:\n | The Beatles were a rock band comprised of 4 musicians. They were pioneers who had a huge influence on the music industry and wider sociocultural movements. | The Beatles were an English rock band, formed in Liverpool in 1960, that comprised John Lennon, Paul McCartney, George Harrison and Ringo Starr. They are regarded as the most influential band of all time and were integral to the development of 1960s counterculture and popular music’s recognition as an art form. Rooted in skiffle, beat and 1950s rock ‘n’ roll, their sound incorporated elements of classical music and traditional pop in innovative ways; the | The Beatles were an English rock band, formed in Liverpool in 1960, that comprised John Lennon, Paul McCartney, George Harrison and Ringo Starr. They are regarded as the most influential band of all time and were integral to the development of 1960s counterculture and popular music’s recognition as an art form. Rooted in skiffle, beat and 1950s rock ‘n’ roll, their sound incorporated elements of classical music and traditional pop in innovative ways; the |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n### Instruction:\nSummarize what Alpha Phi is.\n\n### Input:\nAlpha Phi International Women’s Fraternity (ΑΦ, also known as APhi) is an international sorority with 172 active chapters and over 250,000 initiated members.\n\nFounded at Syracuse University in Syracuse, New York on September 18, 1872, it is the fourth Greek-letter organization founded for women, and the first women’s fraternity founded in the northeast.\n\nAlpha Phi is a member of the National Panhellenic Conference, the governing council of 26 women’s fraternities. Alpha Phi’s international headquarters are located in Evanston, Illinois.\n\n\n\n### Response:\n | Alpha Phi is one of the first women’s fraternities founded in the Northeast. They have over 172 active chapters at College campuses and over 250,000 initiated members. Alpha Phi was founded at Syracuse University in Syracuse, New York on September 18, 1872. | Alpha Phi is an international sorority with 172 active chapters and over 250,000 initiated members. Founded at Syracuse University in Syracuse, New York on September 18, 1872, it is the fourth Greek-letter organization founded for women, and the first women’s fraternity founded in the northeast. Alpha Phi is a member of the National Panhellenic Conference, the governing council of 26 women’s fraternities. Alpha Phi’s international headquarters are located in Evanston, Illinois | Alpha Phi is an international sorority with 172 active chapters and over 250,000 initiated members. It was founded at Syracuse University in Syracuse, New York on September 18, 1872, and is the fourth Greek-letter organization founded for women, and the first women’s fraternity founded in the northeast. Alpha Phi is a member of the National Panhellenic Conference, the governing council of 26 women’s fraternities. Alpha Phi’s international headquarters are located in Evanston |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n### Instruction:\nplease give me a short description about SVB collapse\n\n### Input:\nOn Friday, March 10, 2023, Silicon Valley Bank (SVB) failed after a bank run, marking the second-largest bank failure in United States history and the largest since the 2007–2008 financial crisis. It was one of three March 2023 United States bank failures.\n\n\n\n### Response:\n | It’s due to risk mismanagement of SVB Bank, where the bank bet on long dated treasury bond. When the bank runs happen, SVB don’t have a choice but to sell those long dated bond at a steep discount and incur huge lossses. | Silicon Valley Bank (SVB) was a bank that failed in 2023. It was one of the largest banks in the United States, and its failure had a significant impact on the financial markets. The bank was founded in 1983 and was headquartered in Santa Clara, California. It was known for its focus on the technology industry and its support of startups and venture capital firms. However, in 2023, the bank faced a number of challenges, including a decline in the value | Silicon Valley Bank (SVB) failed after a bank run, marking the second-largest bank failure in United States history and the largest since the 2007–2008 financial crisis. It was one of three March 2023 United States bank failures. |

Results for fine-tuning the Meta Llama 3 8B text generation model on the Dialogsum dataset

| Inputs | Ground Truth | Response from Non-Fine-Tuned Model | Response from Fine-Tuned Model |

| Below is a Instruction that holds conversation which describes discussion between two people.Write a response that appropriately summarizes the conversation.\n\n### Instruction:\n#Person1#: Good moring, madam. Can I help you? \n#Person2#: Good moring. I am Sunfang. Yesterday Mr. Smith told me to come for a job interview this morning. Is he available now? \n#Person1#: Yes, he is waiting for you in Room 123. \n#Person2#: Thank you very much. May I come in? \n#Person3#: Come in, please. \n#Person2#: Good moring, Mr. Smith. I am Sunfang coming for an interview. \n#Person3#: Nice to see you. Take a seat, please. \n#Person2#: Thank you. \n#Person3#: We’ve been looking over your application. I see you’ve got several years experience in secretarial work. What kind of work did you do in your previous job? \n#Person2#: I mainly did typing, filing, answering telephone calls and such routine work in the beginning. I started the job as a junior secretary, but within the year I was promoted to senior secretary of Southeast Company. I drafted business correspondence for the general manager. \n#Person3#: Then what’s the reason that promoted you to leave Southeast Company? \n#Person2#: Last month the company moved to Guangzhou for further development. I was reluctant to relocate because I love Nanjing so much. \n#Person3#: Tell me what you know about our company. \n#Person2#: Your company is the leading dealer in the import and export of cosmetics. Also, you have a reputation for being a good company to work for and you are in last year’s top 500 Employers list. \n#Person3#: Tell me three positive qualities your coworkers would use to describe you and your work style. \n#Person2#: First they’d say I am very punctual. They’d also say I am a cheerful person to work with I don’t bring my problems to work. I try to have a positive attitude about work. And thirdly, they’d say I am flexible I accept change easily. \n#Person3#: Tell me something about your education. \n#Person2#: I graduated from Yangzhou Junior College. Now I am attending evening classes in Nanjing Radio & TV University three times a week. My major is International Trade. I’ve already passed over half of the compulsory courses and five optional courses, and expect to receive a B. A. degree next year. \n#Person3#: I see you are an ambitious young lady. I wish you can make greater progress in the future. \n\n\n\n### Response:\n | #Person1# leads Sunfang to Mr. Smith for an interview. Sunfang tells Mr. Smith she was promoted fast in her previous job. She wants to work for Mr. Smith because her old company moved but she didn’t want to relocate, and because She thinks Mr. Smith’s company plays a leading role. Sunfang thinks her colleagues might describe her as being punctual, positive and flexible. Mr. Smith is impressed. | #Person1#: Good moring, madam. Can I help you? \n#Person2#: Good moring. I am Sunfang. Yesterday Mr. Smith told me to come for a job interview this morning. Is he available now? \n#Person1#: Yes, he is waiting for you in Room 123. \n#Person2#: Thank you very much. May I come in? \n#Person3#: Come in, please. \n#Person2#: Good moring, Mr. Smith. I am Sunfang coming for an interview. \n#Person3#: Nice to see you. Take a seat, please. \n#Person2#: Thank you. \n#Person3#: We’ve been looking over your application. I see you’ve got several years experience in secretarial work. What kind of work did you do in your previous job? \n#Person2#: I mainly did typing, filing, answering telephone calls and such routine work in the beginning. I started the job as a junior secretary, but within the year I was promoted to senior secretary of Southeast Company. I drafted business correspondence for the general manager. \n#Person3#: Then what’s the reason that promoted you to leave Southeast Company? \n#Person2#: Last month the company moved to Guangzhou for further development. I was reluctant to relocate because I love Nanjing so much. \n#Person3#: Tell me what you know about our company. \n#Person2#: Your company | Sunfang comes for a job interview with Mr. Smith. She tells Mr. Smith about her previous job and her work experience. She also tells Mr. Smith about her company and her education. |

| Below is a Instruction that holds conversation which describes discussion between two people.Write a response that appropriately summarizes the conversation.\n\n### Instruction:\n#Person1#: Well, Jenny, the school year is almost over. We just have two more weeks before exams. What do you plan to do this summer?\n#Person2#: I’m going to teach English to some immigrants in the university’s community service program.\n#Person1#: That sounds interesting. Don’t you need to speak a foreign language for a job like that?\n#Person2#: No, you don’t. You just have to present the language simply and give the students a chance to practice speaking.\n#Person1#: Come to think of it, that’s the way I was taught to speak Chinese. But speaking didn’t help me learn to read and write Chinese.\n#Person2#: My students won’t want to read and write English, at lease not now. They are more interested in speaking.\n#Person1#: You sound very knowledgeable about all this. How do you know so much?\n#Person2#: I took a Teaching English as a Second Language course last year when you were in China. I’ve also talked with the experienced teachers quite a lot. I think I would like to be an ESL teacher when I graduate.\n\n\n\n### Response:\n | Jenny plans to teach English to some immigrants in the university’s community service program this summer and she tells #Person1# that students are more interested in speaking. | #Person1#: Well, Jenny, the school year is almost over. We just have two more weeks before exams. What do you plan to do this summer?\n#Person2#: I’m going to teach English to some immigrants in the university’s community service program.\n#Person1#: That sounds interesting. Don’t you need to speak a foreign language for a job like that?\n#Person2#: No, you don’t. You just have to present the language simply and give the students a chance to practice speaking.\n#Person1#: Come to think of it, that’s the way I was taught to speak Chinese. But speaking didn’t help me learn to read and write Chinese.\n#Person2#: My students won’t want to read and write English, at lease not now. They are more interested in speaking.\n#Person1#: You sound very knowledgeable about all this. How do you know so much?\n#Person2#: I took a Teaching English as a Second Language course last year when you were in China. I’ve also talked with the experienced teachers quite a lot. I think I would like to be an ESL teacher when I graduate.\n | Jenny tells #Person1# she’s going to teach English to some immigrants in the university’s community service program. Jenny thinks speaking English is the most important part and she’s knowledgeable about it. |

| Below is a Instruction that holds conversation which describes discussion between two people.Write a response that appropriately summarizes the conversation.\n\n### Instruction:\n#Person1#: Can I help you?\n#Person2#: Yes, I am looking for a pair of gloves. I think I wear size six.\n#Person1#: The gloves are on this counter. Let’s see… How about the blue pair? The color suits you and they are washable, too.\n#Person2#: Oh, I’ll try them on. They seem to fit. How much shall I pay you?\n#Person1#: They are on sales this week. They have been reduced to twenty-five dollars.\n#Person2#: All right. I will take them.\n#Person1#: They are very nice.\n#Person2#: Thank you.\n\n\n\n### Response:\n | #Person2# buys a pair of blue gloves of size six with #Person1#’s assistance. | #Person1#: Can I help you?\n#Person2#: Yes, I am looking for a pair of gloves. I think I wear size six.\n#Person1#: The gloves are on this counter. Let’s see… How about the blue pair? The color suits you and they are washable, too.\n#Person2#: Oh, I’ll try them on. They seem to fit. How much shall I pay you?\n#Person1#: They are on sales this week. They have been reduced to twenty-five dollars.\n#Person2#: All right. I will take them.\n#Person1#: They are very nice.\n#Person2#: Thank you.\n | #Person2# buys a pair of gloves with #Person1#’s help. |

| Below is a Instruction that holds conversation which describes discussion between two people.Write a response that appropriately summarizes the conversation.\n\n### Instruction:\n#Person1#: How is your business on the Internet, Steven?\n#Person2#: Don’t mention it. I lost a bunch of money on it this year. I think it is on the brink of bankruptcy.\n#Person1#: I am sorry to hear that. It shows that you should buy companies, not ideas.\n#Person2#: I changed my strategy around too. I am investing in good companies now.\n#Person1#: Yes, I agree with you. I am going to find a good company and invest in it soon.\n#Person2#: It’s a good idea. But please be careful when you invest this time.\n#Person1#: Yes, I will. I should learn more from you. After all I am green hand on it.\n#Person2#: If you have any question, you can ask me.\n#Person1#: OK. My wife sent me a message and asked me to go home just now. I am leaving.\n#Person2#: All right. Let’s chat next time. Bye-bye.\n#Person1#: Bye.\n\n\n\n### Response:\n | Steven lost much money on Internet business so he’s now investing in good companies. #Person1# wants to invest too but #Person1# is green hand on investment. Steven’s willing to help. | #Person1#: How is your business on the Internet, Steven?\n#Person2#: Don’t mention it. I lost a bunch of money on it this year. I think it is on the brink of bankruptcy.\n#Person1#: I am sorry to hear that. It shows that you should buy companies, not ideas.\n#Person2#: I changed my strategy around too. I am investing in good companies now.\n#Person1#: Yes, I agree with you. I am going to find a good company and invest in it soon.\n#Person2#: It’s a good idea. But please be careful when you invest this time.\n#Person1#: Yes, I will. I should learn more from you. After all I am green hand on it.\n#Person2#: If you have any question, you can ask me.\n#Person1#: OK. My wife sent me a message and asked me to go home just now. I am leaving.\n#Person2#: All right. Let’s chat next time. Bye-bye.\n#Person1#: Bye.\n | Steven lost a bunch of money on the Internet and changed his strategy to invest in good companies. #Person1# agrees and will be careful when investing. |

| Below is a Instruction that holds conversation which describes discussion between two people.Write a response that appropriately summarizes the conversation.\n\n### Instruction:\n#Person1#: Which language do you speak?\n#Person2#: Well, I studied French at school, but I’ve forgotten most of it. I learnt some Russian while I was studying there and now I study Spanish in evening classes. Do you speak Japanese at home with your parents.\n#Person1#: Yes, I do. I’m learning Chinese now on the internet.\n#Person2#: How do you find studying a language on the internet?\n#Person1#: It’s not easy, particularly with listening and speaking, because sometimes the connection isn’t too good.\n#Person2#: I bet you find the character difficult to write!\n#Person1#: Actually, many of the characters are the same or very similar in both Chinese and Japanese, so they’re easy for me.\n#Person2#: So, you can read and write much better than you speak and understand other speakers, right?\n#Person1#: Yes. How are the Spanish classes going?\n#Person2#: In a way, it’s similar to your experience of learning Chinese. Some Spanish words are very similar to English ones. But I need to practise listening to native speakers and saying the words myself.\n\n\n\n### Response:\n | #Person1# is learning Chinese on the internet and finds listening and speaking difficult, but thinks writing is easy. #Person2# thinks #Person2#’s experience of learning Spanish is similar to #Person1#’s. | #Person1#: Which language do you speak?\n#Person2#: Well, I studied French at school, but I’ve forgotten most of it. I learnt some Russian while I was studying there and now I study Spanish in evening classes. Do you speak Japanese at home with your parents.\n#Person1#: Yes, I do. I’m learning Chinese now on the internet.\n#Person2#: How do you find studying a language on the internet?\n#Person1#: It’s not easy, particularly with listening and speaking, because sometimes the connection isn’t too good.\n#Person2#: I bet you find the character difficult to write!\n#Person1#: Actually, many of the characters are the same or very similar in both Chinese and Japanese, so they’re easy for me.\n#Person2#: So, you can read and write much better than you speak and understand other speakers, right?\n#Person1#: Yes. How are the Spanish classes going?\n#Person2#: In a way, it’s similar to your experience of learning Chinese. Some Spanish words are very similar to English ones. But I need to practise listening to native speakers and saying the words myself.\n | #Person1# and #Person2# are talking about the languages they speak. #Person1# is learning Chinese on the internet and #Person2# is learning Spanish. They both find it difficult to learn the language. |
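The Inputs cells in the table above all share one prompt layout: a fixed preamble, the dialogue under `### Instruction:`, and an empty `### Response:` section for the model to complete. The following is a minimal sketch of assembling such a prompt. The newline placement is inferred from the table rows, and the names `DIALOGSUM_TEMPLATE` and `build_dialogsum_prompt` are illustrative, not taken from the original post.

```python
# Preamble copied verbatim from the Inputs column, including its original
# wording ("a Instruction", "people.Write"), so the prompt matches the rows.
# Assumption: the blank lines around each section are inferred from the table.
DIALOGSUM_TEMPLATE = (
    "Below is a Instruction that holds conversation which describes "
    "discussion between two people.Write a response that appropriately "
    "summarizes the conversation.\n\n"
    "### Instruction:\n{dialogue}\n\n\n\n"
    "### Response:\n"
)


def build_dialogsum_prompt(dialogue: str) -> str:
    """Insert a dialogue into the summarization prompt layout."""
    return DIALOGSUM_TEMPLATE.format(dialogue=dialogue)


if __name__ == "__main__":
    sample = "#Person1#: Can I help you?\n#Person2#: Yes, I am looking for a pair of gloves."
    print(build_dialogsum_prompt(sample))
```

Keeping the template as a single constant makes it easier to send the same layout at inference time that the rows show, which matters because instruction-tuned models tend to be sensitive to prompt formatting.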

Results for fine-tuning the Meta Llama 3 70B text generation model on the Dolly dataset

| Inputs | Ground Truth | Response from Non-Fine-Tuned Model | Response from Fine-Tuned Model |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n### Instruction:\nCan you give me a short summary of the follow paragraph about the history of electronic music?\n\n### Input:\nDuring the 1960s, digital computer music was pioneered, innovation in live electronics took place, and Japanese electronic musical instruments began to influence the music industry. In the early 1970s, Moog synthesizers and drum machines helped popularize synthesized electronic music. The 1970s also saw electronic music begin to have a significant influence on popular music, with the adoption of polyphonic synthesizers, electronic drums, drum machines, and turntables, through the emergence of genres such as disco, krautrock, new wave, synth-pop, hip hop, and EDM. In the early 1980s mass-produced digital synthesizers, such as the Yamaha DX7, became popular, and MIDI (Musical Instrument Digital Interface) was developed. In the same decade, with a greater reliance on synthesizers and the adoption of programmable drum machines, electronic popular music came to the fore. During the 1990s, with the proliferation of increasingly affordable music technology, electronic music production became an established part of popular culture. In Berlin starting in 1989, the Love Parade became the largest street party with over 1 million visitors, inspiring other such popular celebrations of electronic music.\n\n\n\n### Response:\n | With the advent of new electronic instruments in the 60s and 70s, namely synthesizers and drum machines, electronic music quickly gained mainstream popularity through genres like disco and hip hop. As the technology progressed through the 80s and 90s, more affordable instruments led electronic music to be even more widely embraced, cementing its status in pop culture and leading to the rise of large electronic music events around the globe. | The history of electronic music can be traced back to the 1960s, when digital computer music was pioneered, innovation in live electronics took place, and Japanese electronic musical instruments began to influence the music industry. In the early 1970s, Moog synthesizers and drum machines helped popularize synthesized electronic music. The 1970s also saw electronic music begin to have a significant influence on popular music, with the adoption of polyphonic synthesizers, electronic drums, drum machines, and | Electronic music has been around since the 1960s. In the 1970s, Moog synthesizers and drum machines helped popularize synthesized electronic music. In the 1980s, digital synthesizers became popular and MIDI was developed. In the 1990s, electronic music production became an established part of popular culture. |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n### Instruction:\nWho was Nike in Greek Mythology?\n\n### Input:\nIn Greek mythology, Nike (/ˈnaɪki/ (listen); Ancient Greek: Νίκη, lit. ‘victory’, ancient: [nǐː.kɛː], modern: [ˈni.ci]) was a goddess who personified victory in any field including art, music, war, and athletics. She is often portrayed in Greek art as Winged Victory in the motion of flight; however, she can also appear without wings as “Wingless Victory” when she is being portrayed as an attribute of another deity such as Athena. In Greek literature Nike is described as both an attribute and attendant to the gods Zeus and Athena. Nike gained this honored role beside Zeus during the Titanomachy where she was one of the first gods to offer her allegiance to Zeus. At Athens, Nike became a servant to Athena as well as an attribute of her due to the prominent status Athena held in her patron city. The fusion of the two goddesses at Athens has contributed to the ambiguity surrounding Nike’s origins. It is unclear whether she originated from a character trait of the Greek goddess Athena or has always existed as an independent deity. Her origin story in Greek mythology is also slightly ambiguous, with the Theogony claiming Nike to be the daughter of Styx and Pallas while the Homeric Hymns describe Ares as being Nike’s father. Her Roman equivalent was Victoria.\n\n\n\n### Response:\n | Nike is the god of ‘victory’ in Greek mythology, and is often portrayed in art Winged Victory in the motion of flight. | Nike was the Greek goddess of victory. She was the daughter of the Titan Pallas and the goddess Styx, and the sister of Kratos (Strength), Bia (Force), and Zelus (Rivalry). She was depicted as a winged goddess, and was often shown holding a wreath of victory or a palm branch. She was also associated with the goddess Athena, and was often depicted as her companion. Nike was worshipped throughout Greece, and her cult was particularly strong | Nike was the Greek goddess of victory. She was often portrayed in Greek art as Winged Victory in the motion of flight. Nike gained this honored role beside Zeus during the Titanomachy where she was one of the first gods to offer her allegiance to Zeus. At Athens, Nike became a servant to Athena as well as an attribute of her due to the prominent status Athena held in her patron city. |
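The Dolly rows above differ from a plain instruction prompt in that each input carries two fields, an instruction and a supporting passage under `### Input:`. As a rough sketch of how such an input could be put together, assuming the section spacing inferred from the table rows (the names `DOLLY_TEMPLATE` and `build_dolly_prompt` are hypothetical and do not come from the original post):

```python
# Preamble copied from the Inputs column of the Dolly table.
# Assumption: the number of newlines between sections is inferred from the rows.
DOLLY_TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. Write a response that appropriately "
    "completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{context}\n\n\n\n"
    "### Response:\n"
)


def build_dolly_prompt(instruction: str, context: str) -> str:
    """Combine an instruction and its context passage into one prompt string."""
    return DOLLY_TEMPLATE.format(instruction=instruction, context=context)


if __name__ == "__main__":
    print(
        build_dolly_prompt(
            "Who was Nike in Greek Mythology?",
            "In Greek mythology, Nike was a goddess who personified victory.",
        )
    )
```

Because the context is passed as a format argument rather than spliced into the template text, braces or other special characters inside the passage are left untouched.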