A recap of some of the biggest scientific breakthroughs over the past two years.

Distilling Llama3.1 8B into 1B in torchtune

In this blog, we present a case study on distilling a Llama 3.1 8B model into Llama 3.2 1B using torchtune’s knowledge distillation recipe. We demonstrate how knowledge distillation (KD) can be used in post-training to improve instruction-following task performance and showcase how users can leverage the recipe.

What is Knowledge Distillation?

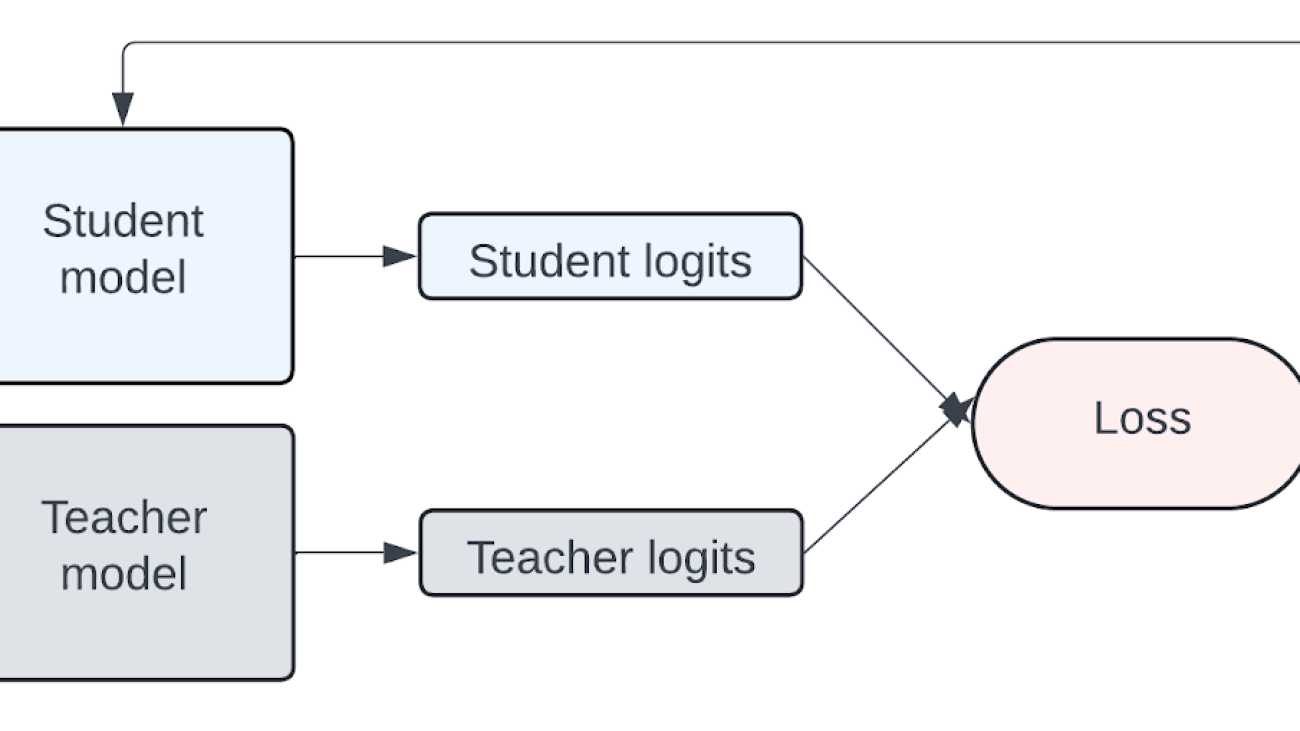

Knowledge Distillation is a widely used compression technique that transfers knowledge from a larger (teacher) model to a smaller (student) model. Larger models have more parameters and more capacity for knowledge, but this larger capacity also makes them more computationally expensive to deploy. Knowledge distillation can be used to compress the knowledge of a larger model into a smaller model. The idea is that the performance of smaller models can be improved by learning from a larger model’s outputs.

How does Knowledge Distillation work?

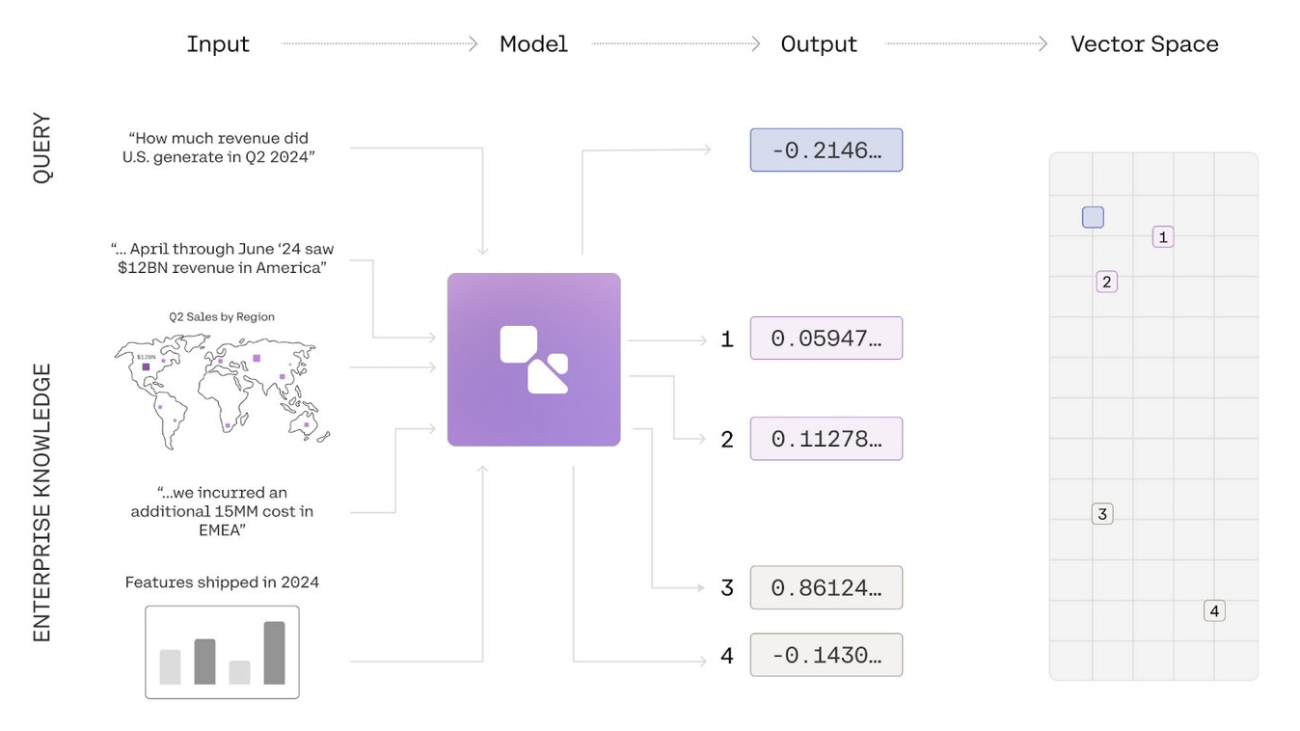

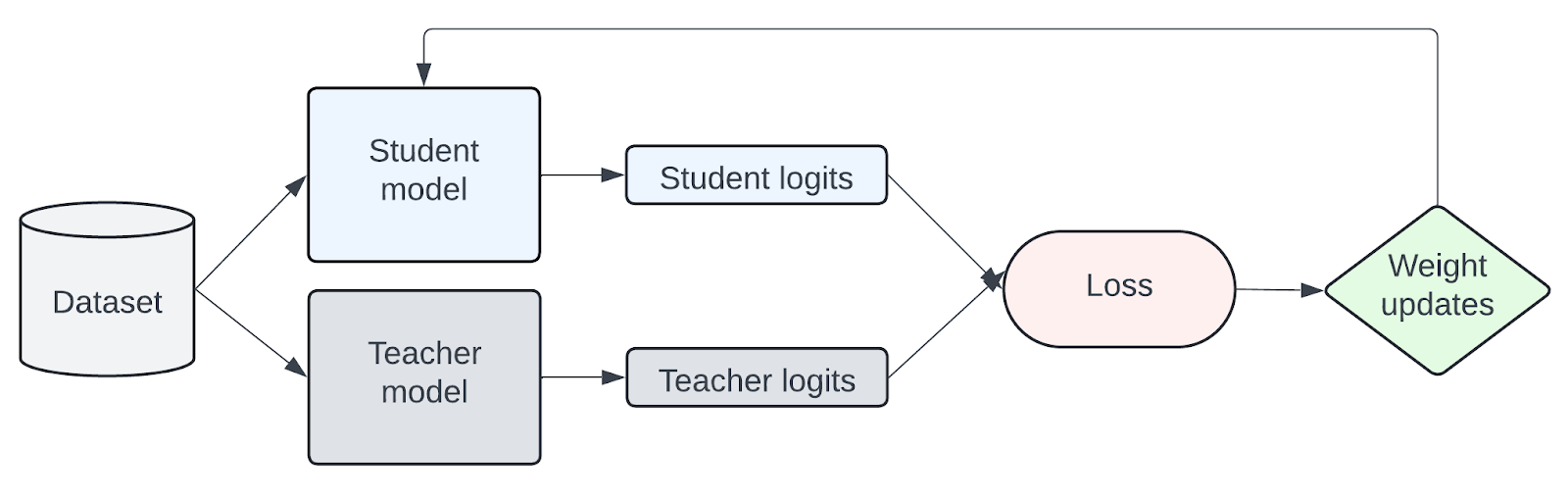

Knowledge is transferred from the teacher to the student model by training on a transfer set where the student is trained to imitate the token-level probability distributions of the teacher. The assumption is that the teacher model’s distribution is similar to that of the transfer dataset. The diagram below is a simplified representation of how KD works.

Figure 1: Simplified representation of knowledge transfer from teacher to student model

As knowledge distillation for LLMs is an active area of research, there are papers, such as MiniLLM, DistiLLM, AKL, and Generalized KD, investigating different loss approaches. In this case study, we focus on the standard cross-entropy (CE) loss with the forward Kullback-Leibler (KL) divergence loss as the baseline. Forward KL divergence aims to minimize the difference between the two models by forcing the student’s distribution to align with the teacher’s entire distribution.
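For reference, the forward KL term can be written in its standard textbook form. The symbols are introduced here for illustration and are not taken from torchtune’s implementation: $p_T$ is the teacher’s next-token distribution, $p_S$ is the student’s, $V$ is the shared vocabulary, and $x_{<t}$ is the context before position $t$.

$$
\mathrm{KL}\left(p_T \,\|\, p_S\right) = \sum_{v \in V} p_T(v \mid x_{<t}) \, \log \frac{p_T(v \mid x_{<t})}{p_S(v \mid x_{<t})}
$$

Because each term is weighted by $p_T$, the student is penalized wherever the teacher assigns probability and the student does not, which is why this direction of the divergence pushes the student to cover the teacher’s whole distribution.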

Why is Knowledge Distillation useful?

The idea of knowledge distillation is that a smaller model can achieve better performance using a teacher model’s outputs as an additional signal than it could training from scratch or with supervised fine-tuning. For instance, Llama 3.2 lightweight 1B and 3B text models incorporated logits from Llama 3.1 8B and 70B to recover performance after pruning. In addition, for fine-tuning on instruction-following tasks, research in LLM distillation demonstrates that knowledge distillation methods can outperform supervised fine-tuning (SFT) alone.

| Model | Method | DollyEval (GPT-4 Eval) | Self-Inst (GPT-4 Eval) | S-NI (Rouge-L) |
|---|---|---|---|---|
| Llama 7B | SFT | 73.0 | 69.2 | 32.4 |
| Llama 7B | KD | 73.7 | 70.5 | 33.7 |
| Llama 7B | MiniLLM | 76.4 | 73.1 | 35.5 |
| Llama 1.1B | SFT | 22.1 | – | 27.8 |
| Llama 1.1B | KD | 22.2 | – | 28.1 |
| Llama 1.1B | AKL | 24.4 | – | 31.4 |
| OpenLlama 3B | SFT | 47.3 | 41.7 | 29.3 |
| OpenLlama 3B | KD | 44.9 | 42.1 | 27.9 |
| OpenLlama 3B | SeqKD | 48.1 | 46.0 | 29.1 |
| OpenLlama 3B | DistiLLM | 59.9 | 53.3 | 37.6 |

Table 1: Comparison of knowledge distillation approaches to supervised fine-tuning

Below is a simplified example of how knowledge distillation differs from supervised fine-tuning.

| Supervised fine-tuning | Knowledge distillation |

|---|---|

|

|
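As a minimal sketch of the contrast (plain Python on a toy three-token vocabulary, not torchtune’s recipe code): supervised fine-tuning scores the student only against the ground-truth token, while distillation also evaluates the teacher on the same input and adds a forward KL term weighted by a KD ratio. The function names and toy logits are illustrative assumptions.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over one token's vocabulary logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def cross_entropy(student_logits, label):
    """Class loss: negative log-probability the student gives the true token."""
    return -log_softmax(student_logits)[label]


def forward_kl(teacher_logits, student_logits):
    """KD loss: KL(teacher || student) for one token position."""
    t_logp = log_softmax(teacher_logits)
    s_logp = log_softmax(student_logits)
    return sum(math.exp(t) * (t - s) for t, s in zip(t_logp, s_logp))


def sft_loss(student_logits, label):
    """Supervised fine-tuning: only the ground-truth label is used."""
    return cross_entropy(student_logits, label)


def kd_loss(student_logits, teacher_logits, label, kd_ratio=0.5):
    """Distillation: blend the class loss with the teacher-matching loss."""
    class_loss = cross_entropy(student_logits, label)
    distill_loss = forward_kl(teacher_logits, student_logits)
    return (1 - kd_ratio) * class_loss + kd_ratio * distill_loss


# Toy example: the true next token is index 0 (values are made up).
student = [1.0, 0.5, -0.5]
teacher = [2.0, 1.0, -1.0]
print("SFT loss:", round(sft_loss(student, 0), 4))
print("KD loss: ", round(kd_loss(student, teacher, 0), 4))
```

In a real training loop both losses would be averaged over every token position in a batch and the teacher would be run without gradients; the sketch keeps a single position so the difference between the two objectives stays visible.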

KD recipe in torchtune

With torchtune, we can easily apply knowledge distillation to Llama3, as well as other LLM model families, using torchtune’s KD recipe. The objective for this recipe is to fine-tune Llama3.2-1B on the Alpaca instruction-following dataset by distilling from Llama3.1-8B. This recipe focuses on post-training and assumes the teacher and student models have already been pre-trained.

First, we have to download the model weights. To be consistent with other torchtune fine-tuning configs, we will use the instruction tuned models of Llama3.1-8B as teacher and Llama3.2-1B as student.

tune download meta-llama/Meta-Llama-3.1-8B-Instruct --output-dir /tmp/Meta-Llama-3.1-8B-Instruct --ignore-patterns "original/consolidated.00.pth" --hf_token <HF_TOKEN>

tune download meta-llama/Llama-3.2-1B-Instruct --output-dir /tmp/Llama-3.2-1B-Instruct --ignore-patterns "original/consolidated.00.pth" --hf_token <HF_TOKEN>

In order for the teacher model distribution to be similar to the Alpaca dataset, we will fine-tune the teacher model using LoRA. Based on our experiments, shown in the next section, we’ve found that KD performs better when the teacher model is already fine-tuned on the target dataset.

tune run lora_finetune_single_device --config llama3_1/8B_lora_single_device

Finally, we can run the following command to distill the fine-tuned 8B model into the 1B model on a single GPU. For this case study, we used a single A100 80GB GPU. We also have a distributed recipe for running on multiple devices.

tune run knowledge_distillation_single_device --config llama3_2/knowledge_distillation_single_device

Ablation studies

In this section, we demonstrate how changing configurations and hyperparameters can affect performance. By default, our configuration uses the LoRA fine-tuned 8B teacher model, downloaded 1B student model, learning rate of 3e-4 and KD loss ratio of 0.5. For this case study, we fine-tuned on the alpaca_cleaned_dataset and evaluated the models on truthfulqa_mc2, hellaswag and commonsense_qa tasks through the EleutherAI LM evaluation harness. Let’s take a look at the effects of:

- Using a fine-tuned teacher model

- Using a fine-tuned student model

- Hyperparameter tuning of KD loss ratio and learning rate

Using a fine-tuned teacher model

The default settings in the config use the fine-tuned teacher model. Now, let’s take a look at the effects of not fine-tuning the teacher model first.

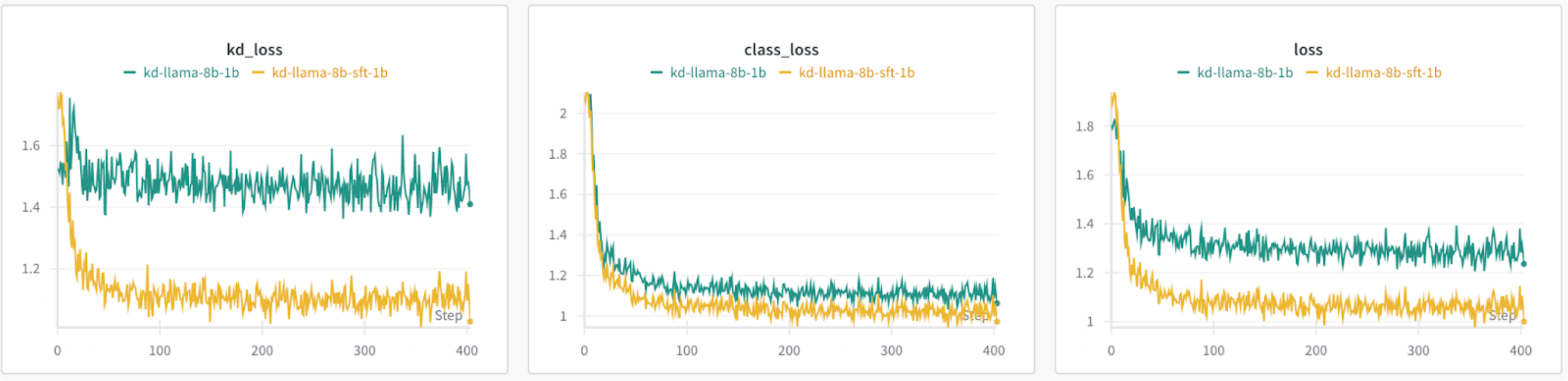

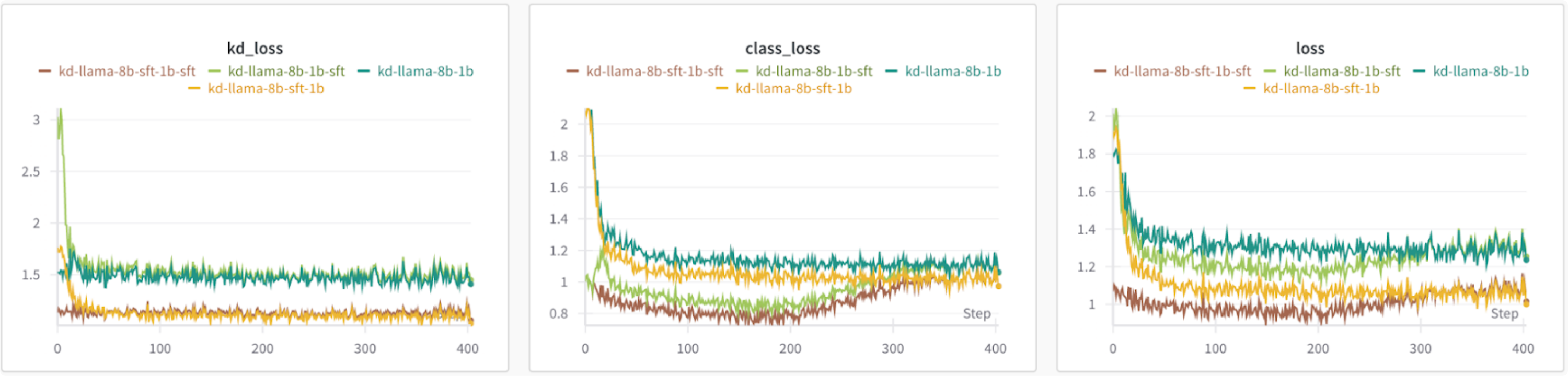

Taking a look at the losses, using the baseline 8B as teacher results in a higher loss than using the fine-tuned teacher model. The KD loss also remains relatively constant, suggesting that the teacher model should have a distribution similar to that of the transfer dataset.

Figure 2: (left to right) KD loss from forward KL divergence, class loss from cross entropy, total loss: even combination of KD and class loss.

In our benchmarks, we can see that supervised fine-tuning of the 1B model achieves better accuracy than the baseline 1B model. Compared with that fine-tuned 1B model, KD with the fine-tuned 8B teacher gives comparable results on truthfulqa and improvements on hellaswag and commonsense. With the baseline 8B as the teacher, we see improvement over the baseline 1B model across all metrics, but the gains are smaller than with the fine-tuned teacher.

| Model | TruthfulQA (mc2) | hellaswag (acc) | hellaswag (acc_norm) | commonsense (acc) |
|---|---|---|---|---|
| Baseline Llama 3.1 8B | 0.5401 | 0.5911 | 0.7915 | 0.7707 |
| Fine-tuned Llama 3.1 8B using LoRA | 0.5475 | 0.6031 | 0.7951 | 0.7789 |
| Baseline Llama 3.2 1B | 0.4384 | 0.4517 | 0.6064 | 0.5536 |
| Fine-tuned Llama 3.2 1B using LoRA | 0.4492 | 0.4595 | 0.6132 | 0.5528 |
| KD using baseline 8B as teacher | 0.444 | 0.4576 | 0.6123 | 0.5561 |
| KD using fine-tuned 8B as teacher | 0.4481 | 0.4603 | 0.6157 | 0.5569 |

Table 2: Comparison between using baseline and fine-tuned 8B as teacher model

Using a fine-tuned student model

For these experiments, we look at the effects of KD when the student model is already fine-tuned. We analyze the effects using different combinations of baseline and fine-tuned 8B and 1B models.

Based on the loss graphs, using a fine-tuned teacher model results in a lower loss irrespective of whether the student model is fine-tuned or not. It’s also interesting to note that the class loss starts to increase when using a fine-tuned student model.

Figure 3: Comparing losses of different teacher and student model initializations

Using the fine-tuned student model boosts accuracy even further for truthfulqa, but the accuracy drops for hellaswag and commonsense. Using a fine-tuned teacher model and baseline student model achieved the best results on the hellaswag and commonsense datasets. Based on these findings, the best configuration will change depending on which evaluation dataset and metric you are optimizing for.

| Model | TruthfulQA (mc2) | hellaswag (acc) | hellaswag (acc_norm) | commonsense (acc) |
|---|---|---|---|---|
| Baseline Llama 3.1 8B | 0.5401 | 0.5911 | 0.7915 | 0.7707 |
| Fine-tuned Llama 3.1 8B using LoRA | 0.5475 | 0.6031 | 0.7951 | 0.7789 |
| Baseline Llama 3.2 1B | 0.4384 | 0.4517 | 0.6064 | 0.5536 |
| Fine-tuned Llama 3.2 1B using LoRA | 0.4492 | 0.4595 | 0.6132 | 0.5528 |
| KD using baseline 8B and baseline 1B | 0.444 | 0.4576 | 0.6123 | 0.5561 |
| KD using baseline 8B and fine-tuned 1B | 0.4508 | 0.448 | 0.6004 | 0.5274 |
| KD using fine-tuned 8B and baseline 1B | 0.4481 | 0.4603 | 0.6157 | 0.5569 |
| KD using fine-tuned 8B and fine-tuned 1B | 0.4713 | 0.4512 | 0.599 | 0.5233 |

Table 3: Comparison using baseline and fine-tuned teacher and student models

Hyperparameter tuning: learning rate

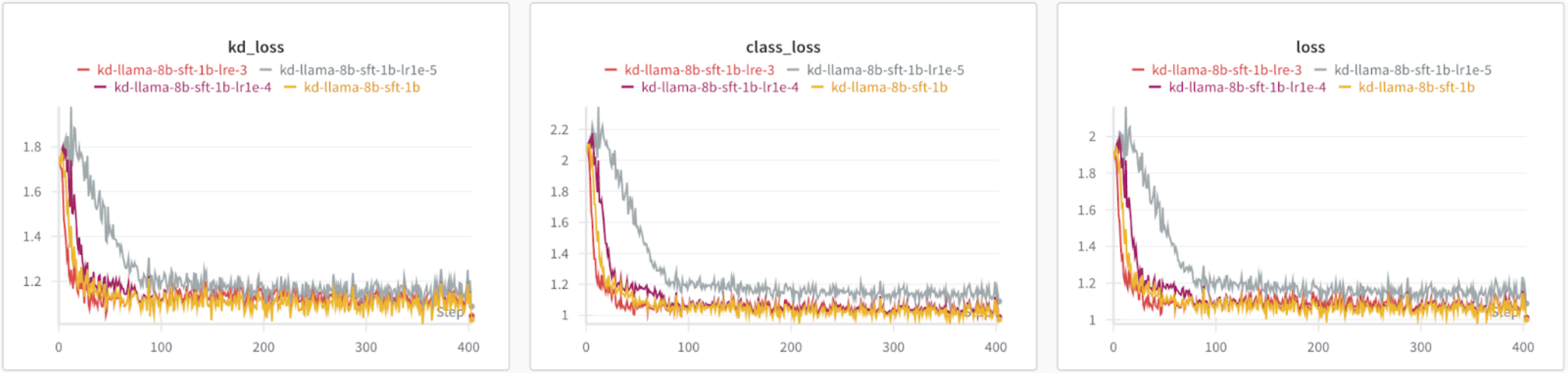

By default, the recipe has a learning rate of 3e-4. For these experiments, we changed the learning rate from as high as 1e-3 to as low as 1e-5.

Based on the loss graphs, all learning rates result in similar losses except for 1e-5, which has a higher KD and class loss.

Figure 4: Comparing losses of different learning rates

Based on our benchmarks, the optimal learning rate changes depending on which metric and tasks you are optimizing for.

| Model | learning rate | TruthfulQA (mc2) | hellaswag (acc) | hellaswag (acc_norm) | commonsense (acc) |
|---|---|---|---|---|---|
| Baseline Llama 3.1 8B | – | 0.5401 | 0.5911 | 0.7915 | 0.7707 |
| Fine-tuned Llama 3.1 8B using LoRA | – | 0.5475 | 0.6031 | 0.7951 | 0.7789 |
| Baseline Llama 3.2 1B | – | 0.4384 | 0.4517 | 0.6064 | 0.5536 |
| Fine-tuned Llama 3.2 1B using LoRA | – | 0.4492 | 0.4595 | 0.6132 | 0.5528 |
| KD using fine-tuned 8B and baseline 1B | 3e-4 | 0.4481 | 0.4603 | 0.6157 | 0.5569 |
| KD using fine-tuned 8B and baseline 1B | 1e-3 | 0.4453 | 0.4535 | 0.6071 | 0.5258 |
| KD using fine-tuned 8B and baseline 1B | 1e-4 | 0.4489 | 0.4606 | 0.6156 | 0.5586 |
| KD using fine-tuned 8B and baseline 1B | 1e-5 | 0.4547 | 0.4548 | 0.6114 | 0.5487 |

Table 4: Effects of tuning learning rate

Hyperparameter tuning: KD ratio

By default, the KD ratio is set to 0.5, which gives even weighting to both the class and KD loss. In these experiments, we look at the effects of different KD ratios, where 0 only uses the class loss and 1 only uses the KD loss.

Overall, the benchmark results show that for these tasks and metrics, higher KD ratios perform slightly better.

| Model | kd_ratio (lr=3e-4) | TruthfulQA (mc2) | hellaswag (acc) | hellaswag (acc_norm) | commonsense (acc) |
|---|---|---|---|---|---|
| Baseline Llama 3.1 8B | – | 0.5401 | 0.5911 | 0.7915 | 0.7707 |
| Fine-tuned Llama 3.1 8B using LoRA | – | 0.5475 | 0.6031 | 0.7951 | 0.7789 |
| Baseline Llama 3.2 1B | – | 0.4384 | 0.4517 | 0.6064 | 0.5536 |
| Fine-tuned Llama 3.2 1B using LoRA | – | 0.4492 | 0.4595 | 0.6132 | 0.5528 |
| KD using fine-tuned 8B and baseline 1B | 0.25 | 0.4485 | 0.4595 | 0.6155 | 0.5602 |
| KD using fine-tuned 8B and baseline 1B | 0.5 | 0.4481 | 0.4603 | 0.6157 | 0.5569 |
| KD using fine-tuned 8B and baseline 1B | 0.75 | 0.4543 | 0.463 | 0.6189 | 0.5643 |
| KD using fine-tuned 8B and baseline 1B | 1.0 | 0.4537 | 0.4641 | 0.6177 | 0.5717 |

Table 5: Effects of tuning KD ratio

Looking Ahead

In this blog, we presented a study on how to distill LLMs through torchtune using the forward KL divergence loss on Llama 3.1 8B and Llama 3.2 1B logits. There are many directions for future exploration to further improve performance and offer more flexibility in distillation methods.

- Expand KD loss offerings. The KD recipe uses the forward KL divergence loss. However, aligning the student distribution to the whole teacher distribution may not be effective, as mentioned above. There are multiple papers, such as MiniLLM, DistiLLM, and Generalized KD, that introduce new KD losses and policies to address this limitation and have been shown to outperform the standard use of cross entropy with forward KL divergence loss. For instance, MiniLLM uses reverse KL divergence to prevent the student from over-estimating low-probability regions of the teacher. DistiLLM introduces a skewed KL loss and an adaptive training policy.

- Enable cross-tokenizer distillation. The current recipe requires the teacher and student model to use the same tokenizer, which limits the ability to distill across different LLM families. There has been research on cross-tokenizer approaches (e.g. Universal Logit Distillation) that we could explore.

- Expand distillation to multimodal LLMs and encoder models. A natural extension of the KD recipe is to expand to multimodal LLMs. Similar to deploying more efficient LLMs, there’s also a need to deploy smaller and more efficient multimodal LLMs. In addition, there has been work in demonstrating LLMs as encoder models (e.g. LLM2Vec). Distillation from LLMs as encoders to smaller encoder models may also be a promising direction to explore.

Enhancing JEPAs with Spatial Conditioning: Robust and Efficient Representation Learning

This paper was accepted at the Self-Supervised Learning – Theory and Practice (SSLTP) Workshop at NeurIPS 2024.

Image-based Joint-Embedding Predictive Architecture (IJEPA) offers an attractive alternative to Masked Autoencoder (MAE) for representation learning using the Masked Image Modeling framework. IJEPA drives representations to capture useful semantic information by predicting in latent rather than input space. However, IJEPA relies on carefully designed context and target windows to avoid representational collapse. The encoder modules in IJEPA cannot adaptively modulate the type of… (Apple Machine Learning Research)

Generalization on the Unseen, Logic Reasoning and Degree Curriculum

This paper considers the learning of logical (Boolean) functions with a focus on the generalization on the unseen (GOTU) setting, a strong case of out-of-distribution generalization. This is motivated by the fact that the rich combinatorial nature of data in certain reasoning tasks (e.g., arithmetic/logic) makes representative data sampling challenging, and learning successfully under GOTU gives a first vignette of an ‘extrapolating’ or ‘reasoning’ learner. We study how different network architectures trained by (S)GD perform under GOTU and provide both theoretical and experimental evidence… (Apple Machine Learning Research)

Duo-LLM: A Framework for Studying Adaptive Computation in Large Language Models

This paper was accepted at the Efficient Natural Language and Speech Processing (ENLSP) Workshop at NeurIPS 2024.

Large Language Models (LLMs) typically generate outputs token by token using a fixed compute budget, leading to inefficient resource utilization. To address this shortcoming, recent advancements in mixture of expert (MoE) models, speculative decoding, and early exit strategies leverage the insight that computational demands can vary significantly based on the complexity and nature of the input. However, identifying optimal routing patterns for dynamic execution remains an open… (Apple Machine Learning Research)

Recurrent Drafter for Fast Speculative Decoding in Large Language Models

We present Recurrent Drafter (ReDrafter), an advanced speculative decoding approach that achieves state-of-the-art speedup for large language model (LLM) inference. The performance gains are driven by three key aspects: (1) leveraging a recurrent neural network (RNN) as the draft model conditioning on the LLM’s hidden states, (2) applying a dynamic tree attention algorithm over beam search results to eliminate duplicated prefixes in candidate sequences, and (3) training through knowledge distillation from the LLM. ReDrafter accelerates Vicuna inference in MT-Bench by up to 3.5x with a PyTorch… (Apple Machine Learning Research)

Considerations for addressing the core dimensions of responsible AI for Amazon Bedrock applications

The rapid advancement of generative AI promises transformative innovation, yet it also presents significant challenges. Concerns about legal implications, accuracy of AI-generated outputs, data privacy, and broader societal impacts have underscored the importance of responsible AI development. Responsible AI is a practice of designing, developing, and operating AI systems guided by a set of dimensions with the goal to maximize benefits while minimizing potential risks and unintended harm. Our customers want to know that the technology they are using was developed in a responsible way. They also want resources and guidance to implement that technology responsibly in their own organization. Most importantly, they want to make sure the technology they roll out is for everyone’s benefit, including end-users. At AWS, we are committed to developing AI responsibly, taking a people-centric approach that prioritizes education, science, and our customers, integrating responsible AI across the end-to-end AI lifecycle.

What constitutes responsible AI is continually evolving. For now, we consider eight key dimensions of responsible AI: fairness, explainability, privacy and security, safety, controllability, veracity and robustness, governance, and transparency. These dimensions make up the foundation for developing and deploying AI applications in a responsible and safe manner.

At AWS, we help our customers transform responsible AI from theory into practice—by giving them the tools, guidance, and resources to get started with purpose-built services and features, such as Amazon Bedrock Guardrails. In this post, we introduce the core dimensions of responsible AI and explore considerations and strategies on how to address these dimensions for Amazon Bedrock applications. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Safety

The safety dimension in responsible AI focuses on preventing harmful system output and misuse. It focuses on steering AI systems to prioritize user and societal well-being.

Amazon Bedrock is designed to facilitate the development of secure and reliable AI applications by incorporating various safety measures. In the following sections, we explore different aspects of implementing these safety measures and provide guidance for each.

Addressing model toxicity with Amazon Bedrock Guardrails

Amazon Bedrock Guardrails supports AI safety by working towards preventing the application from generating or engaging with content that is considered unsafe or undesirable. These safeguards can be created for multiple use cases and implemented across multiple FMs, depending on your application and responsible AI requirements. For example, you can use Amazon Bedrock Guardrails to filter out harmful user inputs and toxic model outputs, block or mask sensitive information in user inputs and model outputs, or help prevent your application from responding to unsafe or undesired topics.

Content filters can be used to detect and filter harmful or toxic user inputs and model-generated outputs. By implementing content filters, you can help prevent your AI application from responding to inappropriate user behavior, and make sure your application provides only safe outputs. This can also mean providing no output at all, in situations where certain user behavior is unwanted. Content filters support six categories: hate, insults, sexual content, violence, misconduct, and prompt injections. Filtering is done based on confidence classification of user inputs and FM responses across each category. You can adjust filter strengths to determine the sensitivity of filtering harmful content. Increasing a filter’s strength increases the probability of filtering unwanted content.

Denied topics are a set of topics that are undesirable in the context of your application. These topics will be blocked if detected in user queries or model responses. You define a denied topic by providing a natural language definition of the topic along with a few optional example phrases of the topic. For example, if a medical institution wants to make sure their AI application avoids giving any medication or medical treatment-related advice, they can define the denied topic as “Information, guidance, advice, or diagnoses provided to customers relating to medical conditions, treatments, or medication” and optional input examples like “Can I use medication A instead of medication B,” “Can I use Medication A for treating disease Y,” or “Does this mole look like skin cancer?” Developers will need to specify a message that will be displayed to the user whenever denied topics are detected, for example “I am an AI bot and cannot assist you with this problem, please contact our customer service/your doctor’s office.” Avoiding specific topics that aren’t toxic by nature but can potentially be harmful to the end-user is crucial when creating safe AI applications.

Word filters are used to configure filters to block undesirable words, phrases, and profanity. Such words can include offensive terms or undesirable outputs, like product or competitor information. You can add up to 10,000 items to the custom word filter to filter out topics you don’t want your AI application to produce or engage with.

Sensitive information filters are used to block or redact sensitive information such as personally identifiable information (PII) or your specified context-dependent sensitive information in user inputs and model outputs. This can be useful when you have requirements for sensitive data handling and user privacy. If the AI application doesn’t process PII, your users and your organization are safer from accidental or intentional misuse or mishandling of PII. The filter is configured to block sensitive information requests; upon such detection, the guardrail will block content and display a preconfigured message. You can also choose to redact or mask sensitive information, which will either replace the data with an identifier or delete it completely.
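To make the four guardrail policy types concrete, here is a hedged sketch that assembles a single configuration as a plain Python dictionary. The key names follow our understanding of the request shape accepted by the boto3 `create_guardrail` call and should be checked against the current API reference; the helper name, the guardrail name, the blocked word, and the chosen PII types are illustrative assumptions. The denied topic reuses the medical example from the text.

```python
def build_guardrail_request(name, blocked_message):
    """Assemble one request dict covering the four policy types described above."""
    harmful = ["HATE", "INSULTS", "SEXUAL", "VIOLENCE", "MISCONDUCT"]
    filters = [
        {"type": category, "inputStrength": "HIGH", "outputStrength": "HIGH"}
        for category in harmful
    ]
    # Assumption: prompt-attack detection is applied to user input only.
    filters.append(
        {"type": "PROMPT_ATTACK", "inputStrength": "HIGH", "outputStrength": "NONE"}
    )
    return {
        "name": name,
        "contentPolicyConfig": {"filtersConfig": filters},
        "topicPolicyConfig": {
            "topicsConfig": [
                {
                    "name": "Medical advice",
                    "definition": (
                        "Information, guidance, advice, or diagnoses provided "
                        "to customers relating to medical conditions, "
                        "treatments, or medication"
                    ),
                    "examples": [
                        "Can I use medication A instead of medication B",
                        "Does this mole look like skin cancer?",
                    ],
                    "type": "DENY",
                }
            ]
        },
        "wordPolicyConfig": {
            # "ExampleCompetitor" is a placeholder custom word.
            "wordsConfig": [{"text": "ExampleCompetitor"}],
            "managedWordListsConfig": [{"type": "PROFANITY"}],
        },
        "sensitiveInformationPolicyConfig": {
            "piiEntitiesConfig": [
                {"type": "EMAIL", "action": "ANONYMIZE"},  # mask
                {"type": "US_SOCIAL_SECURITY_NUMBER", "action": "BLOCK"},
            ]
        },
        "blockedInputMessaging": blocked_message,
        "blockedOutputsMessaging": blocked_message,
    }


request = build_guardrail_request(
    "demo-guardrail",
    "I am an AI bot and cannot assist you with this problem.",
)
print(sorted(request))
```

With AWS credentials configured, a dictionary like this could be passed as keyword arguments to `create_guardrail` on a boto3 `bedrock` client. Building it as data first makes the policy easy to review and version before anything is created.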

Measuring model toxicity with Amazon Bedrock model evaluation

Amazon Bedrock provides a built-in capability for model evaluation. Model evaluation is used to compare different models’ outputs and select the most appropriate model for your use case. Model evaluation jobs support common use cases for large language models (LLMs) such as text generation, text classification, question answering, and text summarization. You can choose to create either an automatic model evaluation job or a model evaluation job that uses a human workforce. For automatic model evaluation jobs, you can either use built-in datasets across three predefined metrics (accuracy, robustness, toxicity) or bring your own datasets. For human-in-the-loop evaluation, which can be done by either AWS managed or customer managed teams, you must bring your own dataset.

If you are planning on using automated model evaluation for toxicity, start by defining what constitutes toxic content for your specific application. This may include offensive language, hate speech, and other forms of harmful communication. Automated evaluations come with curated datasets to choose from. For toxicity, you can use either RealToxicityPrompts or BOLD datasets, or both. If you bring your custom model to Amazon Bedrock, you can implement scheduled evaluations by integrating regular toxicity assessments into your development pipeline at key stages of model development, such as after major updates or retraining sessions. For early detection, implement custom testing scripts that run toxicity evaluations on new data and model outputs continuously.
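A custom testing script of this kind can be as small as a threshold check over toxicity scores. The sketch below assumes the scores (between 0 and 1) come from whatever toxicity scorer you already use; the function name, the thresholds, and the sample scores are hypothetical.

```python
def toxicity_gate(scores, threshold=0.5, max_toxic_fraction=0.01):
    """Fail the check when too many outputs score at or above the threshold."""
    toxic = sum(1 for score in scores if score >= threshold)
    fraction = toxic / len(scores) if scores else 0.0
    return {"toxic_fraction": fraction, "passed": fraction <= max_toxic_fraction}


# Hypothetical scores for four model outputs; one of them is flagged.
result = toxicity_gate([0.1, 0.2, 0.9, 0.05])
print(result)
```

Running a check like this after each retraining session, and failing the pipeline when `passed` is false, is one way to turn the scheduled assessments described above into an automated gate.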

Amazon Bedrock and its safety capabilities help developers create AI applications that prioritize safety and reliability, thereby fostering trust and enforcing ethical use of AI technology. You should experiment and iterate on chosen safety approaches to achieve your desired performance. Diverse feedback is also important, so think about implementing human-in-the-loop testing to assess model responses for safety and fairness.

Controllability

Controllability focuses on having mechanisms to monitor and steer AI system behavior. It refers to the ability to manage, guide, and constrain AI systems to make sure they operate within desired parameters.

Guiding AI behavior with Amazon Bedrock Guardrails

To provide direct control over what content the AI application can produce or engage with, you can use Amazon Bedrock Guardrails, which we discussed under the safety dimension. This allows you to steer and manage the system’s outputs effectively.

You can use content filters to manage AI outputs by setting sensitivity levels for detecting harmful or toxic content. By controlling how strictly content is filtered, you can steer the AI’s behavior to help avoid undesirable responses. This allows you to guide the system’s interactions and outputs to align with your requirements. Defining and managing denied topics helps control the AI’s engagement with specific subjects. By blocking responses related to defined topics, you help AI systems remain within the boundaries set for their operation.

Amazon Bedrock Guardrails can also guide the system’s behavior for compliance with content policies and privacy standards. Custom word filters allow you to block specific words, phrases, and profanity, giving you direct control over the language the AI uses. And managing how sensitive information is handled, whether by blocking or redacting it, allows you to control the AI’s approach to data privacy and security.

Monitoring and adjusting performance with Amazon Bedrock model evaluation

To assess and adjust AI performance, you can look at Amazon Bedrock model evaluation. This helps systems operate within desired parameters and meet safety and ethical standards. You can explore both automatic and human-in-the-loop evaluation. These evaluation methods help you monitor and guide model performance by assessing how well models meet safety and ethical standards. Regular evaluations allow you to adjust and steer the AI’s behavior based on feedback and performance metrics.

Integrating scheduled toxicity assessments and custom testing scripts into your development pipeline helps you continuously monitor and adjust model behavior. This ongoing control helps AI systems to remain aligned with desired parameters and adapt to new data and scenarios effectively.

Fairness

The fairness dimension in responsible AI considers the impacts of AI on different groups of stakeholders. Achieving fairness requires ongoing monitoring, bias detection, and adjustment of AI systems to maintain impartiality and justice.

To help with fairness in AI applications that are built on top of Amazon Bedrock, application developers should explore model evaluation and human-in-the-loop validation for model outputs at different stages of the machine learning (ML) lifecycle. Measuring bias presence before and after model training as well as at model inference is the first step in mitigating bias. When developing an AI application, you should set fairness goals, metrics, and potential minimum acceptable thresholds to measure performance across different qualities and demographics applicable to the use case. On top of these, you should create remediation plans for potential inaccuracies and bias, which may include modifying datasets, finding and removing the root cause of bias, introducing new data, and potentially retraining the model.
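One simple way to express a fairness goal with a minimum acceptable threshold is to compare a metric across groups. The sketch below uses per-group accuracy and the gap between the best and worst group; the metric choice, the thresholds, and the sample records are assumptions for illustration, not a Bedrock feature.

```python
from collections import defaultdict


def group_accuracy(records):
    """Compute accuracy per group from (group, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / totals[group] for group in totals}


def check_fairness_goal(records, min_accuracy, max_gap):
    """Check every group clears a floor and groups stay within max_gap."""
    accuracy = group_accuracy(records)
    gap = max(accuracy.values()) - min(accuracy.values())
    passed = min(accuracy.values()) >= min_accuracy and gap <= max_gap
    return {"accuracy": accuracy, "gap": gap, "passed": passed}


# Hypothetical evaluation results for two groups.
records = [("group_a", True), ("group_a", True), ("group_b", True), ("group_b", False)]
report = check_fairness_goal(records, min_accuracy=0.7, max_gap=0.1)
print(report)
```

A failing report would then trigger the remediation plan, for example by adding data for the weaker group and re-running the same check after retraining.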

Amazon Bedrock provides a built-in capability for model evaluation, as we explored under the safety dimension. For general text generation evaluation for measuring model robustness and toxicity, you can use the built-in fairness dataset Bias in Open-ended Language Generation Dataset (BOLD), which focuses on five domains: profession, gender, race, religious ideologies, and political ideologies. To assess fairness for other domains or tasks, you must bring your own custom prompt datasets.

Transparency

The transparency dimension in generative AI focuses on understanding how AI systems make decisions, why they produce specific results, and what data they’re using. Maintaining transparency is critical for building trust in AI systems and fostering responsible AI practices.

To help meet the growing demand for transparency, AWS introduced AWS AI Service Cards, a dedicated resource aimed at enhancing customer understanding of our AI services. AI Service Cards serve as a cornerstone of responsible AI documentation, consolidating essential information in one place. They provide comprehensive insights into the intended use cases, limitations, responsible AI design principles, and best practices for deployment and performance optimization of our AI services. They are part of a comprehensive development process we undertake to build our services in a responsible way.

At the time of writing, we offer the following AI Service Cards for Amazon Bedrock models:

Service cards for other Amazon Bedrock models can be found directly on the provider’s website. Each card details the service’s specific use cases, the ML techniques employed, and crucial considerations for responsible deployment and use. These cards evolve iteratively based on customer feedback and ongoing service enhancements, so they remain relevant and informative.

An additional effort in providing transparency is the Amazon Titan Image Generator invisible watermark. Images generated by Amazon Titan come with this invisible watermark by default. This watermark detection mechanism enables you to identify images produced by Amazon Titan Image Generator, an FM designed to create realistic, studio-quality images in large volumes and at low cost using natural language prompts. By using watermark detection, you can enhance transparency around AI-generated content, mitigate the risks of harmful content generation, and reduce the spread of misinformation.

Content creators, news organizations, risk analysts, fraud detection teams, and more can use this feature to identify and authenticate images created by Amazon Titan Image Generator. The detection system also provides a confidence score, allowing you to assess the reliability of the detection even if the original image has been modified. Simply upload an image to the Amazon Bedrock console, and the API will detect watermarks embedded in images generated by the Amazon Titan model, including both the base model and customized versions. This tool not only supports responsible AI practices, but also fosters trust and reliability in the use of AI-generated content.

Veracity and robustness

The veracity and robustness dimension in responsible AI focuses on achieving correct system outputs, even with unexpected or adversarial inputs. The main focus of this dimension is to address possible model hallucinations. Model hallucinations occur when an AI system generates false or misleading information that appears to be plausible. Robustness in AI systems makes sure model outputs are consistent and reliable under various conditions, including unexpected or adverse situations. A robust AI model maintains its functionality and delivers consistent and accurate outputs even when faced with incomplete or incorrect input data.

Measuring accuracy and robustness with Amazon Bedrock model evaluation

As introduced in the AI safety and controllability dimensions, Amazon Bedrock provides tools for evaluating AI models in terms of toxicity, robustness, and accuracy. This makes sure the models don’t produce harmful, offensive, or inappropriate content and can withstand various inputs, including unexpected or adversarial scenarios.

Accuracy evaluation helps AI models produce reliable and correct outputs across various tasks and datasets. In the built-in evaluation, accuracy is measured against a TREX dataset and the algorithm calculates the degree to which the model’s predictions match the actual results. The actual metric for accuracy depends on the chosen use case; for example, in text generation, the built-in evaluation calculates a real-world knowledge score, which examines the model’s ability to encode factual knowledge about the real world. This evaluation is essential for maintaining the integrity, credibility, and effectiveness of AI applications.

Robustness evaluation makes sure the model maintains consistent performance across diverse and potentially challenging conditions. This includes handling unexpected inputs, adversarial manipulations, and varying data quality without significant degradation in performance.

Methods for achieving veracity and robustness in Amazon Bedrock applications

There are several techniques that you can consider when using LLMs in your applications to maximize veracity and robustness:

- Prompt engineering – You can instruct the model to only engage in discussion about things that it knows and not generate any new information.

- Chain-of-thought (CoT) – This technique involves the model generating intermediate reasoning steps that lead to the final answer, improving the model’s ability to solve complex problems by making its thought process transparent and logical. For example, you can ask the model to explain why it used certain information and created a certain output. This is a powerful method to reduce hallucinations. When you ask the model to explain the process it used to generate the output, the model has to identify the different steps taken and the information used, which in itself reduces hallucination. To learn more about CoT and other prompt engineering techniques for Amazon Bedrock LLMs, see General guidelines for Amazon Bedrock LLM users.

- Retrieval Augmented Generation (RAG) – This helps reduce hallucination by providing the model with the right context and augmenting generated outputs with internal data. With RAG, you can provide the context to the model and tell the model to only reply based on the provided context, which leads to fewer hallucinations. With Amazon Bedrock Knowledge Bases, you can implement the RAG workflow from ingestion to retrieval and prompt augmentation. The information retrieved from the knowledge bases is provided with citations to improve AI application transparency and minimize hallucinations.

- Fine-tuning and pre-training – There are different techniques for improving model accuracy for a specific context, like fine-tuning and continued pre-training. Instead of providing internal data through RAG, with these techniques, you add data straight to the model as part of its dataset. This way, you can customize several Amazon Bedrock FMs by pointing them to datasets that are saved in Amazon Simple Storage Service (Amazon S3) buckets. For fine-tuning, you can take anything between a few dozen and hundreds of labeled examples and train the model with them to improve performance on specific tasks. The model learns to associate certain types of outputs with certain types of inputs. You can also use continued pre-training, in which you provide the model with unlabeled data, familiarizing the model with certain inputs for it to associate and learn patterns. This includes, for example, data from a specific topic that the model doesn’t have enough domain knowledge of, thereby increasing the model’s accuracy in that domain. Both of these customization options make it possible to create an accurate customized model without collecting large volumes of annotated data, resulting in reduced hallucination.

- Inference parameters – You can also look into the inference parameters, which are values that you can adjust to modify the model response. There are multiple inference parameters that you can set, and they affect different capabilities of the model. For example, if you want the model to get creative with the responses or generate completely new information, such as in the context of storytelling, you can raise the temperature parameter. A higher temperature flattens the probability distribution over candidate next words, so the model is more likely to select words that it would otherwise consider less probable.

- Contextual grounding – Lastly, you can use the contextual grounding check in Amazon Bedrock Guardrails. Amazon Bedrock Guardrails provides mechanisms within the Amazon Bedrock service that allow developers to set content filters and specify denied topics to control allowed text-based user inputs and model outputs. You can detect and filter hallucinations in model responses if they are not grounded (factually inaccurate or add new information) in the source information or are irrelevant to the user’s query. For example, you can block or flag responses in RAG applications if the model response deviates from the information in the retrieved passages or doesn’t answer the question by the user.
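To make the effect of the temperature parameter from the list above more concrete, the following is a minimal sketch of temperature-scaled softmax sampling probabilities. The scores are made-up values for three candidate words, not output from any Amazon Bedrock model, and the function name is our own.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.1]
low = softmax_with_temperature(logits, 0.2)   # sharp: top word dominates
high = softmax_with_temperature(logits, 2.0)  # flat: other words gain mass
```

With a low temperature almost all probability sits on the top-scoring word, which favors consistent answers. With a high temperature the less likely words receive more probability, which favors variety.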

Model providers and tuners might not mitigate these hallucinations, but can inform the user that they might occur. This could be done by adding some disclaimers about using AI applications at the user’s own risk. We currently also see advances in research in methods that estimate uncertainty based on the amount of variation (measured as entropy) between multiple outputs. These new methods have proved much better at spotting when a question was likely to be answered incorrectly than previous methods.
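As a rough illustration of the entropy idea, the sketch below samples the same question several times and measures how much the answers disagree. It groups answers by exact match after lowercasing and trimming, which is a deliberate simplification; the research methods mentioned above group answers by meaning instead. The function name is hypothetical.

```python
import math
from collections import Counter


def answer_entropy(answers):
    """Shannon entropy (in bits) of the distribution of distinct answers.

    0.0 means every sampled answer agreed; larger values mean more
    disagreement, which can be used as a signal of uncertainty.
    """
    normalized = [a.strip().lower() for a in answers]
    counts = Counter(normalized)
    total = len(normalized)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Five hypothetical samples for the same question.
consistent = ["Paris", "paris", "Paris ", "PARIS", "Paris"]
scattered = ["1947", "1952", "1938", "1947", "1961"]
```

An application could flag or withhold answers when the entropy exceeds a threshold chosen on validation data.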

Explainability

The explainability dimension in responsible AI focuses on understanding and evaluating system outputs. By using an explainable AI framework, humans can examine the models to better understand how they produce their outputs. For the explainability of the output of a generative AI model, you can use techniques like training data attribution and CoT prompting, which we discussed under the veracity and robustness dimension.

For customers wanting to see attribution of information in completion, we recommend using RAG with an Amazon Bedrock knowledge base. Attribution works with RAG because the possible attribution sources are included in the prompt itself. Information retrieved from the knowledge base comes with source attribution to improve transparency and minimize hallucinations. Amazon Bedrock Knowledge Bases manages the end-to-end RAG workflow for you. When using the RetrieveAndGenerate API, the output includes the generated response, the source attribution, and the retrieved text chunks.
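The following sketch shows one way to call RetrieveAndGenerate with boto3 and pull the answer and its sources out of the response. The knowledge base ID and model ARN are placeholders you would supply, the helper names are our own, and the response fields accessed here reflect our reading of the API, so check them against the current boto3 documentation.

```python
def ask_knowledge_base(question, knowledge_base_id, model_arn, client=None):
    """Call RetrieveAndGenerate and return the raw response dictionary."""
    if client is None:
        import boto3  # imported lazily so the parsing helper works offline

        client = boto3.client("bedrock-agent-runtime")
    return client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,  # placeholder value
                "modelArn": model_arn,  # placeholder value
            },
        },
    )


def extract_answer_and_sources(response):
    """Return the generated text and a list of retrieved source chunks."""
    answer = response["output"]["text"]
    sources = []
    for citation in response.get("citations", []):
        for ref in citation.get("retrievedReferences", []):
            sources.append(
                {
                    "text": ref.get("content", {}).get("text", ""),
                    "location": ref.get("location", {}),
                }
            )
    return answer, sources
```

Showing the `sources` list next to the answer in the UI lets users verify each claim against the underlying document.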

Security and privacy

If there is one thing that is absolutely critical to every organization using generative AI technologies, it is making sure everything you do is and remains private, and that your data is protected at all times. The security and privacy dimension in responsible AI focuses on making sure data and models are obtained, used, and protected appropriately.

Built-in security and privacy of Amazon Bedrock

With Amazon Bedrock, if we look from a data privacy and localization perspective, AWS does not store your data—if we don’t store it, it can’t leak, it can’t be seen by model vendors, and it can’t be used by AWS for any other purpose. The only data we store is operational metrics—for example, for accurate billing, AWS collects metrics on how many tokens you send to a specific Amazon Bedrock model and how many tokens you receive in a model output. And, of course, if you create a fine-tuned model, we need to store that in order for AWS to host it for you. Data used in your API requests remains in the AWS Region of your choosing—API requests to the Amazon Bedrock API to a specific Region will remain completely within that Region.

If we look at data security, a common adage is that if it moves, encrypt it. Communications to, from, and within Amazon Bedrock are encrypted in transit—Amazon Bedrock doesn’t have a non-TLS endpoint. Another adage is that if it doesn’t move, encrypt it. Your fine-tuning data and model will by default be encrypted using AWS managed AWS Key Management Service (AWS KMS) keys, but you have the option to use your own KMS keys.

When it comes to identity and access management, AWS Identity and Access Management (IAM) controls who is authorized to use Amazon Bedrock resources. For each model, you can explicitly allow or deny access to actions. For example, one team or account could be allowed to provision capacity for Amazon Titan Text, but not Anthropic models. You can be as broad or as granular as you need to be.
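As an illustration of per-model access control, the sketch below builds an IAM policy document that allows invoking Amazon Titan Text models and explicitly denies Anthropic models. It uses the model invocation action rather than the capacity provisioning example above, and the Region, statement IDs, and model ID patterns are assumptions to adapt to your account.

```python
import json


def build_model_access_policy(region="us-east-1"):
    """Return an IAM policy document as a Python dictionary."""
    base = f"arn:aws:bedrock:{region}::foundation-model"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowTitanText",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                # Assumed pattern covering the Titan Text model IDs.
                "Resource": f"{base}/amazon.titan-text-*",
            },
            {
                "Sid": "DenyAnthropic",
                "Effect": "Deny",
                "Action": ["bedrock:InvokeModel"],
                # Assumed pattern covering all Anthropic model IDs.
                "Resource": f"{base}/anthropic.*",
            },
        ],
    }


policy_json = json.dumps(build_model_access_policy(), indent=2)
```

An explicit Deny takes precedence over any Allow, so the second statement holds even if another attached policy grants broader Amazon Bedrock access.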

Looking at network data flows for Amazon Bedrock API access, it’s important to remember that traffic is encrypted at all times. If you’re using Amazon Virtual Private Cloud (Amazon VPC), you can use AWS PrivateLink to provide your VPC with private connectivity through the regional network direct to the frontend fleet of Amazon Bedrock, mitigating exposure of your VPC to internet traffic with an internet gateway. Similarly, from a corporate data center perspective, you can set up a VPN or AWS Direct Connect connection to privately connect to a VPC, and from there you can have that traffic sent to Amazon Bedrock over PrivateLink. This should negate the need for your on-premises systems to send Amazon Bedrock related traffic over the internet. Following AWS best practices, you secure PrivateLink endpoints using security groups and endpoint policies to control access to these endpoints following Zero Trust principles.

Let’s also look at network and data security for Amazon Bedrock model customization. The customization process will first load your requested baseline model, then securely read your customization training and validation data from an S3 bucket in your account. Connection to data can happen through a VPC using a gateway endpoint for Amazon S3. That means bucket policies that you have can still be applied, and you don’t have to open up wider access to that S3 bucket. A new model is built, which is then encrypted and delivered to the customized model bucket—at no time does a model vendor have access to or visibility of your training data or your customized model. At the end of the training job, we also deliver output metrics relating to the training job to an S3 bucket that you had specified in the original API request. As mentioned previously, both your training data and customized model can be encrypted using a customer managed KMS key.

Best practices for privacy protection

The first thing to keep in mind when implementing a generative AI application is data encryption. As mentioned earlier, Amazon Bedrock uses encryption in transit and at rest. For encryption at rest, you have the option to choose your own customer managed KMS keys over the default AWS managed KMS keys. Depending on your company’s requirements, you might want to use a customer managed KMS key. For encryption in transit, we recommend using TLS 1.3 to connect to the Amazon Bedrock API.

For terms and conditions and data privacy, it’s important to read the terms and conditions of the models (EULA). Model providers are responsible for setting up these terms and conditions, and you as a customer are responsible for evaluating these and deciding if they’re appropriate for your application. Always make sure you read and understand the terms and conditions before accepting, including when you request model access in Amazon Bedrock. You should make sure you’re comfortable with the terms. Make sure your test data has been approved by your legal team.

For privacy and copyright, it is the responsibility of the provider and the model tuner to make sure the data used for training and fine-tuning is legally available and can actually be used to fine-tune and train those models. It is also the responsibility of the model provider to make sure the data they’re using is appropriate for the models. Public data doesn’t automatically mean public for commercial usage. That means you can’t use this data to fine-tune something and show it to your customers.

To protect user privacy, you can use the sensitive information filters in Amazon Bedrock Guardrails, which we discussed under the safety and controllability dimensions.

Lastly, when automating with generative AI (for example, with Amazon Bedrock Agents), make sure you’re comfortable with the model making automated decisions and consider the consequences of the application providing wrong information or actions. Therefore, consider risk management here.

Governance

The governance dimension makes sure AI systems are developed, deployed, and managed in a way that aligns with ethical standards, legal requirements, and societal values. Governance encompasses the frameworks, policies, and rules that direct AI development and use in a way that is safe, fair, and accountable. Setting and maintaining governance for AI allows stakeholders to make informed decisions around the use of AI applications. This includes transparency about how data is used, the decision-making processes of AI, and the potential impacts on users.

Robust governance is the foundation upon which responsible AI applications are built. AWS offers a range of services and tools that can empower you to establish and operationalize AI governance practices. AWS has also developed an AI governance framework that offers comprehensive guidance on best practices across vital areas such as data and model governance, AI application monitoring, auditing, and risk management.

When looking at auditability, Amazon Bedrock integrates with the AWS generative AI best practices framework v2 from AWS Audit Manager. With this framework, you can start auditing your generative AI usage within Amazon Bedrock by automating evidence collection. This provides a consistent approach for tracking AI model usage and permissions, flagging sensitive data, and alerting on issues. You can use collected evidence to assess your AI application across eight principles: responsibility, safety, fairness, sustainability, resilience, privacy, security, and accuracy.

For monitoring and auditing purposes, you can use Amazon Bedrock built-in integrations with Amazon CloudWatch and AWS CloudTrail. You can monitor Amazon Bedrock using CloudWatch, which collects raw data and processes it into readable, near real-time metrics. CloudWatch helps you track usage metrics such as model invocations and token count, and helps you build customized dashboards for audit purposes either across one or multiple FMs in one or multiple AWS accounts. CloudTrail is a centralized logging service that provides a record of user and API activities in Amazon Bedrock. CloudTrail collects API data into a trail, which needs to be created inside the service. A trail enables CloudTrail to deliver log files to an S3 bucket.

Amazon Bedrock also provides model invocation logging, which is used to collect model input data, prompts, model responses, and request IDs for all invocations in your AWS account used in Amazon Bedrock. This feature provides insights on how your models are being used and how they are performing, enabling you and your stakeholders to make data-driven and responsible decisions around the use of AI applications. Model invocation logs need to be enabled, and you can decide whether you want to store this log data in an S3 bucket or CloudWatch logs.
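A minimal sketch of enabling model invocation logging with boto3 follows. The bucket name, log group, and role ARN are placeholders, the helper names are our own, and the configuration keys reflect our understanding of the API, so verify them against the current boto3 reference before use.

```python
def build_logging_config(bucket_name=None, key_prefix="bedrock-logs",
                         log_group_name=None, role_arn=None):
    """Build a logging configuration for S3, CloudWatch Logs, or both."""
    if not bucket_name and not log_group_name:
        raise ValueError("provide an S3 bucket, a CloudWatch log group, or both")
    config = {"textDataDeliveryEnabled": True}
    if bucket_name:
        config["s3Config"] = {"bucketName": bucket_name, "keyPrefix": key_prefix}
    if log_group_name:
        if not role_arn:
            raise ValueError("CloudWatch delivery needs a role ARN")
        config["cloudWatchConfig"] = {
            "logGroupName": log_group_name,
            "roleArn": role_arn,
        }
    return config


def enable_invocation_logging(config, client=None):
    """Apply the configuration to the account in the client's Region."""
    if client is None:
        import boto3  # imported lazily so the builder can be tested offline

        client = boto3.client("bedrock")
    client.put_model_invocation_logging_configuration(loggingConfig=config)
```

Because the logs contain prompts and responses, restrict access to the destination bucket or log group as tightly as you would the application data itself.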

From a compliance perspective, Amazon Bedrock is in scope for common compliance standards, including ISO, SOC, FedRAMP moderate, PCI, ISMAP, and CSA STAR Level 2, and is Health Insurance Portability and Accountability Act (HIPAA) eligible. You can also use Amazon Bedrock in compliance with the General Data Protection Regulation (GDPR). Amazon Bedrock is included in the Cloud Infrastructure Service Providers in Europe Data Protection Code of Conduct (CISPE CODE) Public Register. This register provides independent verification that Amazon Bedrock can be used in compliance with the GDPR. For the most up-to-date information about whether Amazon Bedrock is within the scope of specific compliance programs, see AWS services in Scope by Compliance Program and choose the compliance program you’re interested in.

Implementing responsible AI in Amazon Bedrock applications

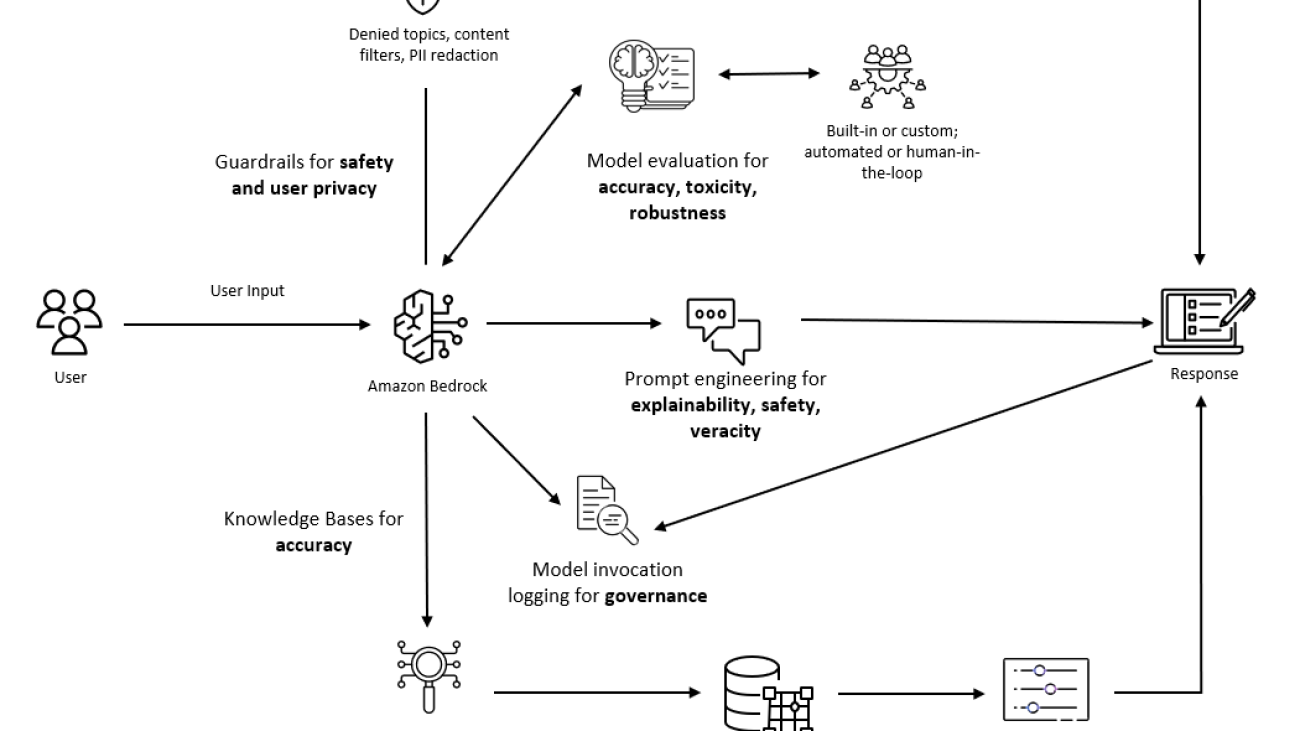

When building applications in Amazon Bedrock, consider your application context, needs, and behaviors of your end-users. Also, look into your organization’s needs, legal and regulatory requirements, and metrics you want or need to collect when implementing responsible AI. Take advantage of managed and built-in features available. The following diagram outlines various measures you can implement to address the core dimensions of responsible AI. This is not an exhaustive list, but rather a proposition of how the measures mentioned in this post could be combined together. These measures include:

- Model evaluation – Use model evaluation to assess fairness, accuracy, toxicity, robustness, and other metrics to evaluate your chosen FM and its performance.

- Amazon Bedrock Guardrails – Use Amazon Bedrock Guardrails to establish content filters, denied topics, word filters, sensitive information filters, and contextual grounding. With guardrails, you can guide model behavior by denying any unsafe or harmful topics or words and protect the safety of your end-users.

- Prompt engineering – Utilize prompt engineering techniques, such as CoT, to improve explainability, veracity and robustness, and safety and controllability of your AI application. With prompt engineering, you can set a desired structure for the model response, including tone, scope, and length of responses. You can emphasize safety and controllability by adding denied topics to the prompt template.

- Amazon Bedrock Knowledge Bases – Use Amazon Bedrock Knowledge Bases for end-to-end RAG implementation to decrease hallucinations and improve accuracy of the model for internal data use cases. Using RAG will improve veracity and robustness, safety and controllability, and explainability of your AI application.

- Logging and monitoring – Maintain comprehensive logging and monitoring to enforce effective governance.

Diagram outlining the various measures you can implement to address the core dimensions of responsible AI.

Conclusion

Building responsible AI applications requires a deliberate and structured approach, iterative development, and continuous effort. Amazon Bedrock offers a robust suite of built-in capabilities that support the development and deployment of responsible AI applications. By providing customizable features and the ability to integrate your own datasets, Amazon Bedrock enables developers to tune AI solutions to their specific application contexts and align them with organizational requirements for responsible AI. This flexibility makes sure AI applications are not only effective, but also ethical and aligned with best practices for fairness, safety, transparency, and accountability.

Implementing AI by following the responsible AI dimensions is key for developing and using AI solutions transparently, and without bias. Responsible development of AI will also help with AI adoption across your organization and build reliability with end customers. The broader the use and impact of your application, the more important following the responsibility framework becomes. Therefore, consider and address the responsible use of AI early on in your AI journey and throughout its lifecycle.

To learn more about the responsible use of ML framework, refer to the following resources:

- Responsible Use of ML

- AWS generative AI best practices framework v2

- Building Generative AI prompt chaining workflows with human in the loop

- Foundation Model Evaluations Library

About the Authors

Laura Verghote is a senior solutions architect for public sector customers in EMEA. She works with customers to design and build solutions in the AWS Cloud, bridging the gap between complex business requirements and technical solutions. She joined AWS as a technical trainer and has wide experience delivering training content to developers, administrators, architects, and partners across EMEA.

Maria Lehtinen is a solutions architect for public sector customers in the Nordics. She works as a trusted cloud advisor to her customers, guiding them through cloud system development and implementation with strong emphasis on AI/ML workloads. She joined AWS through an early-career professional program and has previous work experience as a cloud consultant at one of the AWS Advanced Consulting Partners.

From RAG to fabric: Lessons learned from building real-world RAGs at GenAIIC – Part 2

In Part 1 of this series, we defined the Retrieval Augmented Generation (RAG) framework to augment large language models (LLMs) with a text-only knowledge base. We gave practical tips, based on hands-on experience with customer use cases, on how to improve text-only RAG solutions, from optimizing the retriever to mitigating and detecting hallucinations.

This post focuses on doing RAG on heterogeneous data formats. We first introduce routers and how they can help manage diverse data sources. We then give tips on how to handle tabular data and conclude with multimodal RAG, focusing specifically on solutions that handle both text and image data.

Overview of RAG use cases with heterogeneous data formats

After a first wave of text-only RAG, we saw an increase in customers wanting to use a variety of data for Q&A. The challenge here is to retrieve the relevant data source to answer the question and correctly extract information from that data source. Use cases we have worked on include:

- Technical assistance for field engineers – We built a system that aggregates information about a company’s specific products and field expertise. This centralized system consolidates a wide range of data sources, including detailed reports, FAQs, and technical documents. The system integrates structured data, such as tables containing product properties and specifications, with unstructured text documents that provide in-depth product descriptions and usage guidelines. A chatbot enables field engineers to quickly access relevant information, troubleshoot issues more effectively, and share knowledge across the organization.

- Oil and gas data analysis – Before beginning operations at a well, an oil and gas company will collect and process a diverse range of data to identify potential reservoirs, assess risks, and optimize drilling strategies. The data sources may include seismic surveys, well logs, core samples, geochemical analyses, and production histories, with some of it in industry-specific formats. Each category necessitates specialized generative AI-powered tools to generate insights. We built a chatbot that can answer questions across this complex data landscape, so that oil and gas companies can make faster and more informed decisions, improve exploration success rates, and decrease time to first oil.

- Financial data analysis – The financial sector uses both unstructured and structured data for market analysis and decision-making. Unstructured data includes news articles, regulatory filings, and social media, providing qualitative insights. Structured data consists of stock prices, financial statements, and economic indicators. We built a RAG system that combines these diverse data types into a single knowledge base, allowing analysts to efficiently access and correlate information. This approach enables nuanced analysis by combining numerical trends with textual insights to identify opportunities, assess risks, and forecast market movements.

- Industrial maintenance – We built a solution that combines maintenance logs, equipment manuals, and visual inspection data to optimize maintenance schedules and troubleshooting. This multimodal approach integrates written reports and procedures with images and diagrams of machinery, allowing maintenance technicians to quickly access both descriptive information and visual representations of equipment. For example, a technician could query the system about a specific machine part, receiving both textual maintenance history and annotated images showing wear patterns or common failure points, enhancing their ability to diagnose and resolve issues efficiently.

- Ecommerce product search – We built several solutions to enhance the search capabilities on ecommerce websites to improve the shopping experience for customers. Traditional search engines rely mostly on text-based queries. By integrating multimodal (text and image) RAG, we aimed to create a more comprehensive search experience. The new system can handle both text and image inputs, allowing customers to upload photos of desired items and receive precise product matches.

Using a router to handle heterogeneous data sources

In RAG systems, a router is a component that directs incoming user queries to the appropriate processing pipeline based on the query’s nature and the required data type. This routing capability is crucial when dealing with heterogeneous data sources, because different data types often require distinct retrieval and processing strategies.

Consider a financial data analysis system. For a qualitative question like “What caused inflation in 2023?”, the router would direct the query to a text-based RAG that retrieves relevant documents and uses an LLM to generate an answer based on textual information. However, for a quantitative question such as “What was the average inflation in 2023?”, the router would direct the query to a different pipeline that fetches and analyzes the relevant dataset.

The router accomplishes this through intent detection, analyzing the query to determine the type of data and analysis required to answer it. In systems with heterogeneous data, this process makes sure each data type is processed appropriately, whether it’s unstructured text, structured tables, or multimodal content. For instance, analyzing large tables might require prompting the LLM to generate Python or SQL and running it, rather than passing the tabular data to the LLM. We give more details on that aspect later in this post.

In practice, the router module can be implemented with an initial LLM call. The following is an example prompt for a router, following the example of financial analysis with heterogeneous data. To avoid adding too much latency with the routing step, we recommend using a smaller model, such as Anthropic’s Claude Haiku on Amazon Bedrock.
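A minimal sketch of such a router prompt is shown below as a Python template. The two data source names and their descriptions are illustrative assumptions for the financial analysis example, and the wording is ours rather than a tested production prompt.

```python
ROUTER_PROMPT_TEMPLATE = """You are a router for a financial analysis assistant.
Pick the single data source best suited to answer the user's question.

<data_sources>
<source>
<name>Analyst reports</name>
<description>Text documents with qualitative commentary on markets and the economy.</description>
</source>
<source>
<name>Economic indicators</name>
<description>Tabular time series such as inflation, GDP, and unemployment.</description>
</source>
</data_sources>

<question>
{question}
</question>

First explain your choice inside <reason> tags.
Then give the exact name of the chosen data source inside <data_source> tags."""


def build_router_prompt(question):
    """Fill the user question into the router prompt."""
    return ROUTER_PROMPT_TEMPLATE.format(question=question)


prompt = build_router_prompt("What was the average inflation in 2023?")
```

Keeping the source descriptions short and mutually exclusive tends to make the routing decision easier for a small model.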

Prompting the LLM to explain the routing logic may help with accuracy, by forcing the LLM to “think” about its answer, and also for debugging purposes, to understand why a category might not be routed properly.

The prompt uses XML tags following Anthropic’s Claude best practices. Note that in this example prompt we used <data_source> tags but something similar such as <category> or <label> could also be used. Asking the LLM to also structure its response with XML tags allows us to parse out the category from the LLM answer, which can be done with the following code:
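One simple way to do this parsing is a regular expression over the response text, sketched below. The helper name `extract_tag` is our own, and the sample response is invented for illustration.

```python
import re


def extract_tag(text, tag):
    """Return the content of the first <tag>...</tag> pair, or None."""
    pattern = rf"<{re.escape(tag)}>(.*?)</{re.escape(tag)}>"
    match = re.search(pattern, text, re.DOTALL)
    return match.group(1).strip() if match else None


# Invented example of what a router response might look like.
llm_response = (
    "<reason>The question asks for a computed average over a time series.</reason>\n"
    "<data_source>Economic indicators</data_source>"
)
category = extract_tag(llm_response, "data_source")
reason = extract_tag(llm_response, "reason")
```

Returning `None` when the tag is missing lets the caller decide whether to retry the LLM call or fall back to a default pipeline.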

From a user’s perspective, if the LLM fails to provide the right routing category, the user can explicitly ask for the data source they want to use in the query. For instance, instead of saying “What caused inflation in 2023?”, the user could disambiguate by asking “What caused inflation in 2023 according to analysts?”, and instead of “What was the average inflation in 2023?”, the user could ask “What was the average inflation in 2023? Look at the indicators.”

Another option for a better user experience is to add an option to ask for clarifications in the router, if the LLM finds that the query is too ambiguous. We can add this as an additional “data source” in the router using the following code:
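As a sketch of that idea, the code below adds a Clarifications entry to a dictionary of data sources and renders the dictionary into the XML block used in a router prompt. All names and descriptions are illustrative assumptions.

```python
DATA_SOURCES = {
    "Analyst reports": "Text documents with qualitative commentary on markets.",
    "Economic indicators": "Tabular time series such as inflation and GDP.",
    # Extra pseudo data source that lets the model ask the user for details.
    "Clarifications": (
        "Use this if the question is too ambiguous to route. "
        "Put a clarifying question for the user inside the <reason> tags."
    ),
}


def render_data_sources(sources):
    """Render the data sources as an XML block for the router prompt."""
    lines = ["<data_sources>"]
    for name, description in sources.items():
        lines.append("<source>")
        lines.append(f"<name>{name}</name>")
        lines.append(f"<description>{description}</description>")
        lines.append("</source>")
    lines.append("</data_sources>")
    return "\n".join(lines)


data_sources_block = render_data_sources(DATA_SOURCES)
```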

We use an associated example:

If in the LLM’s response, the data source is Clarifications, we can then directly return the content of the <reason> tags to the user for clarifications.
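The handling described above could look like the following sketch. The example response is invented, and the function and key names are hypothetical.

```python
import re


def _tag(text, tag):
    """Return the content of the first <tag>...</tag> pair, or None."""
    match = re.search(rf"<{tag}>(.*?)</{tag}>", text, re.DOTALL)
    return match.group(1).strip() if match else None


def handle_router_response(llm_response):
    """Decide whether to route the query or ask the user to clarify."""
    source = _tag(llm_response, "data_source")
    if source is None:
        raise ValueError("router response has no <data_source> tag")
    if source == "Clarifications":
        # The reason holds the clarifying question, so show it to the user.
        return {"action": "ask_user", "message": _tag(llm_response, "reason")}
    return {"action": "route", "data_source": source}


# Invented router output for the ambiguous question "What about inflation?"
example_response = (
    "<reason>Do you want analysts' commentary on inflation, "
    "or the inflation figures for a specific period?</reason>"
    "<data_source>Clarifications</data_source>"
)
decision = handle_router_response(example_response)
```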

An alternative approach to routing is to use the native tool use capability (also known as function calling) available within the Bedrock Converse API. In this scenario, each category or data source would be defined as a ‘tool’ within the API, enabling the model to select and use these tools as needed. Refer to this documentation for a detailed example of tool use with the Bedrock Converse API.
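A hedged sketch of tool-based routing with the Converse API follows. The tool names, descriptions, and the single `query` input are assumptions for illustration, and forcing a tool call with the `any` tool choice is only supported by some models, so confirm support for the model you use.

```python
def build_tool_config(data_sources):
    """Describe each data source as a tool the model can select."""
    tools = []
    for name, description in data_sources.items():
        tools.append(
            {
                "toolSpec": {
                    "name": name,
                    "description": description,
                    "inputSchema": {
                        "json": {
                            "type": "object",
                            "properties": {"query": {"type": "string"}},
                            "required": ["query"],
                        }
                    },
                }
            }
        )
    # "any" asks the model to pick one of the tools instead of replying in text.
    return {"tools": tools, "toolChoice": {"any": {}}}


def selected_tool(response):
    """Return (tool name, tool input) from a Converse response, or None."""
    for block in response["output"]["message"]["content"]:
        if "toolUse" in block:
            return block["toolUse"]["name"], block["toolUse"]["input"]
    return None


def route_with_converse(question, model_id, data_sources, client=None):
    """Send the question to the model and return the tool it selected."""
    if client is None:
        import boto3  # imported lazily so the helpers can be tested offline

        client = boto3.client("bedrock-runtime")
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": question}]}],
        toolConfig=build_tool_config(data_sources),
    )
    return selected_tool(response)
```

Compared with parsing XML tags, this approach returns a structured selection, at the cost of tying the router to models that support tool use.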

Using LLM code generation abilities for RAG with structured data

Consider an oil and gas company analyzing a dataset of daily oil production. The analyst may ask questions such as “Show me all wells that produced oil on June 1st 2024,” “What well produced the most oil in June 2024?”, or “Plot the monthly oil production for well XYZ for 2024.” Each question requires different treatment, with varying complexity. The first one involves filtering the dataset to return all wells with production data for that specific date. The second one requires computing the monthly production values from the daily data, then finding the maximum and returning the well ID. The third one requires computing the monthly average for well XYZ and then generating a plot.

LLMs don’t perform well at analyzing tabular data when it’s added directly to the prompt as raw text. A simple way to improve the LLM’s handling of tables is to add them to the prompt in a more structured format, such as markdown or XML. However, this method only works if the question doesn’t require complex quantitative reasoning and the table is small enough. In other cases, we can’t reliably use an LLM to analyze tabular data, even when it is provided in a structured format in the prompt.
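For small tables, the structured formatting mentioned above can be done with a few lines of code. The following sketch renders a list of row dictionaries as a markdown table; the column names and values are invented.

```python
def rows_to_markdown(rows):
    """Render a list of row dictionaries as a markdown table."""
    if not rows:
        return ""
    headers = list(rows[0].keys())
    lines = [
        "| " + " | ".join(headers) + " |",
        "| " + " | ".join("---" for _ in headers) + " |",
    ]
    for row in rows:
        lines.append("| " + " | ".join(str(row.get(h, "")) for h in headers) + " |")
    return "\n".join(lines)


# Invented daily production rows.
rows = [
    {"well_id": "A-1", "date": "2024-06-01", "oil_bbl": 120},
    {"well_id": "B-7", "date": "2024-06-01", "oil_bbl": 0},
]
table = rows_to_markdown(rows)
```

The resulting string can be placed in the prompt in place of the raw text of the table.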

On the other hand, LLMs are notably good at code generation; for instance, Anthropic’s Claude 3.5 Sonnet has 92% accuracy on the HumanEval code benchmark. We can take advantage of that capability by asking the LLM to write Python (if the data is stored in a CSV, Excel, or Parquet file) or SQL (if the data is stored in a SQL database) code that performs the required analysis. Popular libraries LlamaIndex and LangChain both offer out-of-the-box solutions for text-to-SQL and text-to-Pandas pipelines for quick prototyping. However, for better control over prompts, code execution, and outputs, it might be worth writing your own pipeline. Out-of-the-box solutions will typically prompt the LLM to write Python or SQL code to answer the user’s question, then parse and run the code from the LLM’s response, and finally send the code output back to the LLM for a final answer.

Going back to the oil and gas data analysis use case, take the question “Show me all wells that produced oil on June 1st 2024.” There could be hundreds of entries in the dataframe. In that case, a custom pipeline that directly returns the code output to the UI (the filtered dataframe for the date of June 1st 2024, with oil production greater than 0) would be more efficient than sending it to the LLM for a final answer. If the filtered dataframe is large, the additional call might cause high latency and even risk causing hallucinations. Writing your custom pipelines also allows you to perform some sanity checks on the code, to verify, for instance, that the code generated by the LLM will not create issues (such as modifying existing files or databases).
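One possible form of such a sanity check is a static scan of the generated code before running it, sketched below with Python's `ast` module. The denylists are illustrative assumptions, and a scan like this is not a security sandbox; generated code should still run in an isolated environment with limited permissions.

```python
import ast

# Illustrative denylists; extend them to match your own risk assessment.
BLOCKED_MODULES = {"os", "sys", "subprocess", "shutil", "pathlib", "socket"}
BLOCKED_CALLS = {"open", "exec", "eval", "compile", "__import__"}
BLOCKED_METHODS = {"to_csv", "to_excel", "to_parquet", "to_sql", "to_pickle"}


def find_violations(code):
    """Return a list of reasons why the generated code should not be run."""
    try:
        tree = ast.parse(code)
    except SyntaxError as err:
        return [f"syntax error: {err.msg}"]
    violations = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name.split(".")[0] in BLOCKED_MODULES:
                    violations.append(f"import of {alias.name}")
        elif isinstance(node, ast.ImportFrom):
            if (node.module or "").split(".")[0] in BLOCKED_MODULES:
                violations.append(f"import from {node.module}")
        elif isinstance(node, ast.Call):
            func = node.func
            if isinstance(func, ast.Name) and func.id in BLOCKED_CALLS:
                violations.append(f"call to {func.id}()")
            elif isinstance(func, ast.Attribute) and func.attr in BLOCKED_METHODS:
                violations.append(f"call to .{func.attr}()")
    return violations
```

If the list is non-empty, the pipeline can reject the code and either ask the LLM to regenerate it or return an error to the user.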

The following is an example of a prompt that can be used to generate Pandas code for data analysis:

We can then parse the code out from the <code> tags in the LLM response and run it using exec in Python. The following code is a full example:

Because we explicitly prompt the LLM to store the final result in the result variable, we know it will be stored in the local_vars dictionary under that key, and we can retrieve it that way. We can then either directly return this result to the user, or send it back to the LLM to generate its final response. Sending the variable back to the user directly can be useful if the request requires filtering and returning a large dataframe, for instance. Directly returning the variable to the user removes the risk of hallucination that can occur with large inputs and outputs.
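The parse-and-run step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the original sample: the function names and the LLM response are hypothetical, and a plain list of dicts stands in for the pandas dataframe so the sketch runs without third-party packages. The same pattern applies when `df` is a real dataframe.

```python
import re


def extract_code(llm_response):
    """Return the text between the first <code>...</code> pair, or None if absent."""
    match = re.search(r"<code>(.*?)</code>", llm_response, re.DOTALL)
    return match.group(1).strip() if match else None


def run_generated_code(code, data):
    """Run generated code with `df` bound to the data and return its `result` variable."""
    local_vars = {"df": data}
    # exec is not a security boundary: only run code you have checked,
    # or run it in an isolated environment.
    exec(code, {}, local_vars)
    if "result" not in local_vars:
        raise KeyError("generated code did not define `result`")
    return local_vars["result"]


# Hypothetical LLM response; a real one would come from your model call.
llm_response = (
    "Here is the code:\n"
    "<code>\n"
    "result = [row for row in df if row['oil_bbl'] > 0]\n"
    "</code>"
)
# Stand-in for the dataframe, made up for illustration.
wells = [
    {"well": "A-1", "oil_bbl": 120},
    {"well": "B-7", "oil_bbl": 0},
]
code = extract_code(llm_response)
result = run_generated_code(code, wells)
```

Here `result` holds only the rows with non-zero production, and it can be returned to the UI directly without another LLM call.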

Multimodal RAG

An emerging trend in generative AI is multimodality, with models that can use text, images, audio, and video. In this post, we focus exclusively on mixing text and image data sources.

In an industrial maintenance use case, consider a technician facing an issue with a machine. To troubleshoot, they might need visual information about the machine, not just a textual guide.

In ecommerce, using multimodal RAG can enhance the shopping experience not only by allowing users to input images to find visually similar products, but also by providing more accurate and detailed product descriptions from visuals of the products.

We can group multimodal text and image RAG questions into three categories:

- Image retrieval based on text input – For example:
  - “Show me a diagram to repair the compressor on the ice cream machine.”
  - “Show me red summer dresses with floral patterns.”
- Text retrieval based on image input – For example:
  - A technician might take a picture of a specific part of the machine and ask, “Show me the manual section for this part.”
- Image retrieval based on text and image input – For example:
  - A customer could upload an image of a dress and ask, “Show me similar dresses.” or “Show me items with a similar pattern.”

As with traditional RAG pipelines, the retrieval component is the basis of these solutions. Constructing a multimodal retriever requires having an embedding strategy that can handle this multimodality. There are two main options for this.

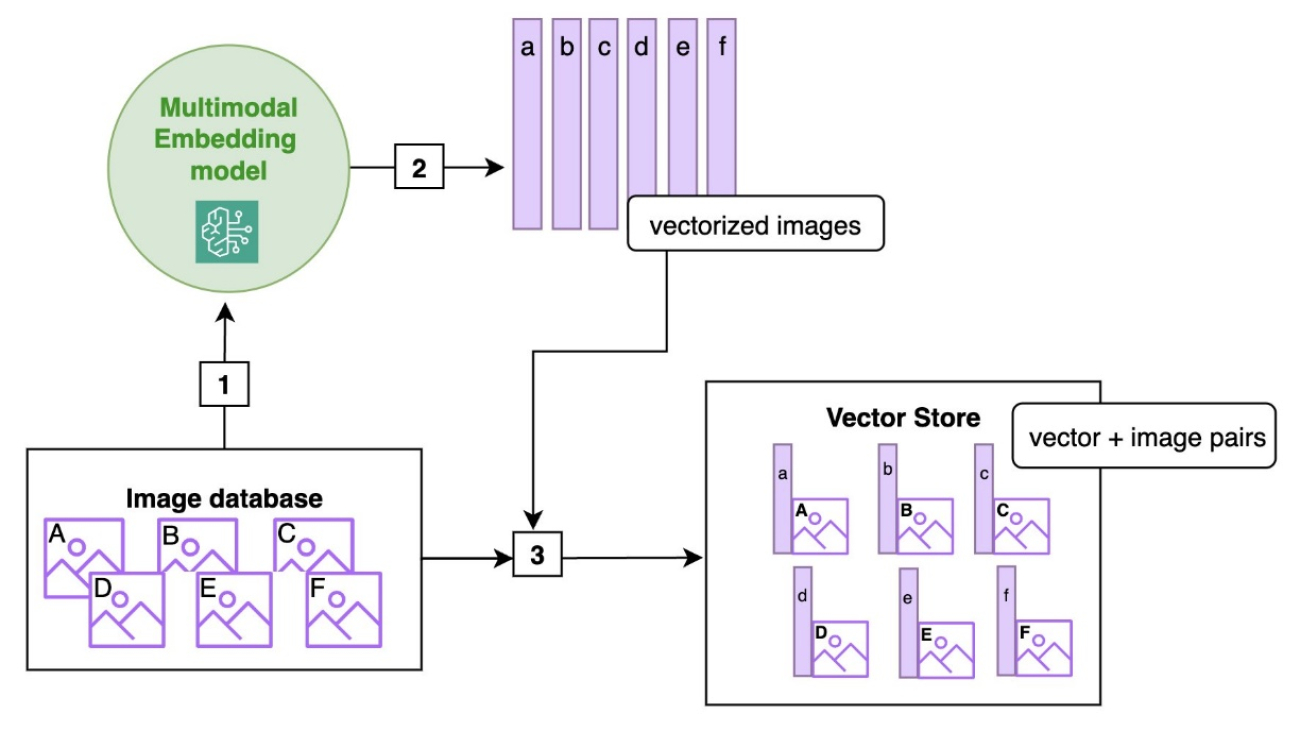

First, you could use a multimodal embedding model such as Amazon Titan Multimodal Embeddings, which can embed both images and text into a shared vector space. This allows for direct comparison and retrieval of text and images based on semantic similarity. This simple approach is effective for finding images that match a high-level description or for matching images of similar items. For instance, a query like “Show me summer dresses” would return a variety of images that fit that description. It’s also suitable for queries where the user uploads a picture and asks, “Show me dresses similar to that one.”

The following diagram shows the ingestion logic with a multimodal embedding. The images in the database are sent to a multimodal embedding model that returns vector representations of the images. The images and the corresponding vectors are paired up and stored in the vector database.

At retrieval time, the user query (which can be text or image) is passed to the multimodal embedding model, which returns a vectorized user query that is used by the retriever module to search for images that are close to the user query, in the embedding distance. The closest images are then returned.
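To make the “closest in embedding distance” step concrete, here is a minimal sketch of nearest-neighbor retrieval using cosine similarity. The three-dimensional vectors and file names are made up for illustration; in a real system the vectors would come from the multimodal embedding model and the search would be handled by the vector database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vector, index, k=2):
    """index is a list of (image_id, vector) pairs; return the k most similar image ids."""
    scored = [(cosine_similarity(query_vector, vec), image_id) for image_id, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [image_id for _, image_id in scored[:k]]


# Toy vectors standing in for real image embeddings.
index = [
    ("red_dress.jpg", [0.9, 0.1, 0.0]),
    ("blue_jeans.jpg", [0.0, 0.2, 0.9]),
    ("pink_dress.jpg", [0.8, 0.3, 0.1]),
]
query = [1.0, 0.0, 0.0]  # stand-in for the embedded user query
closest = top_k(query, index, k=2)
```

Because text and images share one vector space, the same `top_k` call works whether the query vector came from a text prompt or from an uploaded picture.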

Alternatively, you could use a multimodal foundation model (FM) such as Anthropic’s Claude v3 Haiku, Sonnet, or Opus, or Claude Sonnet 3.5, all available on Amazon Bedrock, to generate a caption for each image; the caption is then used for retrieval. Specifically, the generated image description is embedded using a traditional text embedding model (for example, Amazon Titan Text Embeddings V2) and stored in a vector store along with the image as metadata.

Captions can capture finer details in images, and can be guided to focus on specific aspects such as color, fabric, pattern, shape, and more. This would be better suited for queries where the user uploads an image and looks for similar items but only in some aspects (such as uploading a picture of a dress, and asking for skirts in a similar style). This would also work better to capture the complexity of diagrams in industrial maintenance.

The following figure shows the ingestion logic with a multimodal FM and text embedding. The images in the database are sent to a multimodal FM that returns image captions. The image captions are then sent to a text embedding model and converted to vectors. The images are paired up with the corresponding vectors and captions and stored in the vector database.

At retrieval time, the user query (text) is passed to the text embedding model, which returns a vectorized user query that is used by the retriever module to search for captions that are close to the user query, in the embedding distance. The images corresponding to the closest captions are then returned, optionally with the caption as well. If the user query contains an image, we need to use a multimodal LLM to describe that image similarly to the previous ingestion steps.
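The caption-based flow can be sketched end to end with the model calls injected as plain functions. Everything below is illustrative: `toy_caption` and `toy_embed` are stand-ins for a multimodal FM and a text embedding model, the records list stands in for the vector database, and similarity is a simple dot product.

```python
def ingest_images(images, caption_fn, embed_fn):
    """Caption each image, embed the caption, and return records for a vector store."""
    records = []
    for image_id, image_bytes in images.items():
        caption = caption_fn(image_bytes)
        records.append({"image_id": image_id, "caption": caption, "vector": embed_fn(caption)})
    return records


def retrieve(records, embed_fn, query_text=None, query_image=None, caption_fn=None, k=1):
    """Search captions by text; an image query is captioned first, as at ingestion time."""
    if query_image is not None:
        query_text = caption_fn(query_image)
    query_vector = embed_fn(query_text)

    def score(record):
        return sum(q * v for q, v in zip(query_vector, record["vector"]))

    ranked = sorted(records, key=score, reverse=True)
    return [(r["image_id"], r["caption"]) for r in ranked[:k]]


# Toy stand-ins for the real models (assumptions for illustration only).
VOCAB = ["red", "blue", "dress", "jeans", "floral"]
FAKE_CAPTIONS = {b"img-1": "red floral dress", b"img-2": "blue jeans"}


def toy_embed(text):
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]


def toy_caption(image_bytes):
    return FAKE_CAPTIONS[image_bytes]


records = ingest_images({"dress.jpg": b"img-1", "jeans.jpg": b"img-2"}, toy_caption, toy_embed)
by_text = retrieve(records, toy_embed, query_text="red dress")
by_image = retrieve(records, toy_embed, query_image=b"img-2", caption_fn=toy_caption)
```

Note the extra captioning call on the image-query path: this is the additional latency that the caption-based approach incurs for image inputs.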

Example with a multimodal embedding model

The following is a code sample performing ingestion with Amazon Titan Multimodal Embeddings as described earlier. The embedded image is stored in an OpenSearch index with a k-nearest neighbors (k-NN) vector field.

The following is the code sample performing the retrieval with Amazon Titan Multimodal Embeddings:

In the response, we have the images that are closest to the user query in embedding space, thanks to the multimodal embedding.
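As a rough sketch of the ingestion and retrieval steps described above, the following builds the request bodies involved, without making any network calls. The model ID, embedding size, and index field names are assumptions to verify against the Amazon Bedrock and OpenSearch documentation; the helper function names are hypothetical.

```python
import base64
import json

# Assumed values; check them against the Bedrock model documentation.
TITAN_MULTIMODAL_MODEL_ID = "amazon.titan-embed-image-v1"
EMBEDDING_DIM = 1024
VECTOR_FIELD = "image_vector"  # hypothetical index field name


def titan_multimodal_body(text=None, image_bytes=None):
    """JSON body for an InvokeModel call that embeds text, an image, or both."""
    if text is None and image_bytes is None:
        raise ValueError("provide text, image_bytes, or both")
    body = {"embeddingConfig": {"outputEmbeddingLength": EMBEDDING_DIM}}
    if text is not None:
        body["inputText"] = text
    if image_bytes is not None:
        body["inputImage"] = base64.b64encode(image_bytes).decode("utf-8")
    return json.dumps(body)


def knn_index_body():
    """Index definition with a k-NN vector field plus the image location."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                VECTOR_FIELD: {"type": "knn_vector", "dimension": EMBEDDING_DIM},
                "image_path": {"type": "keyword"},
            }
        },
    }


def knn_query_body(query_vector, k=5):
    """k-NN search body returning the k documents closest to the query vector."""
    return {"size": k, "query": {"knn": {VECTOR_FIELD: {"vector": query_vector, "k": k}}}}
```

With boto3, the embedding body would typically be passed to the Bedrock runtime client’s `invoke_model(modelId=..., body=...)`, and the vector read from the `embedding` key of the JSON response. With the opensearch-py client, `knn_index_body()` would be passed to `indices.create` and `knn_query_body(...)` to `search`.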

Example with a multimodal FM

The following is a code sample performing the ingestion described earlier. It uses Anthropic’s Claude Sonnet 3 to caption the image first, and then Amazon Titan Text Embeddings to embed the caption. You could also use another multimodal FM such as Anthropic’s Claude Sonnet 3.5, Haiku 3, or Opus 3 on Amazon Bedrock. The image, caption embedding, and caption are stored in an OpenSearch index. At retrieval time, we embed the user query using the same Amazon Titan Text Embeddings model and perform a k-NN search on the OpenSearch index to retrieve the relevant image.

The following is code to perform the retrieval step using text embeddings:

This returns the images whose captions are closest to the user query in the embedding space, thanks to the text embeddings. In the response, we get both the images and the corresponding captions for downstream use.
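For the captioning approach, the two model requests can be sketched as request-body builders. This is an illustration under assumptions rather than the original sample: the body shapes follow the Anthropic Messages format on Amazon Bedrock and the Titan Text Embeddings V2 parameters as best understood here, the default captioning prompt is made up, and the likely model IDs (`anthropic.claude-3-sonnet-20240229-v1:0` and `amazon.titan-embed-text-v2:0`) should be checked against the Bedrock documentation.

```python
import base64
import json

# Hypothetical default instruction; adapt it to the aspects you care about.
DEFAULT_CAPTION_PROMPT = (
    "Describe this image in detail, including color, fabric, pattern, and shape."
)


def claude_caption_body(image_bytes, media_type="image/jpeg", instructions=None, max_tokens=300):
    """Request body asking a Claude 3 model on Bedrock to caption one image."""
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image",
                 "source": {"type": "base64", "media_type": media_type, "data": encoded}},
                {"type": "text", "text": instructions or DEFAULT_CAPTION_PROMPT},
            ],
        }],
    })


def titan_text_embedding_body(text, dimensions=1024):
    """Request body for embedding a caption or a user query as text."""
    return json.dumps({"inputText": text, "dimensions": dimensions, "normalize": True})
```

Changing the `instructions` argument is how the captions can be steered toward specific aspects, which is the main customization lever of this approach.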

Comparative table of multimodal approaches

The following table provides a comparison between using multimodal embeddings and using a multimodal LLM for image captioning, across several key factors. Multimodal embeddings offer faster ingestion and are generally more cost-effective, making them suitable for large-scale applications where speed and efficiency are crucial. On the other hand, using a multimodal LLM for captions, though slower and less cost-effective, provides more detailed and customizable results, which is particularly useful for scenarios requiring precise image descriptions. Considerations such as latency for different input types, customization needs, and the level of detail required in the output should guide the decision-making process when selecting your approach.

| Factor | Multimodal Embeddings | Multimodal LLM for Captions |
| --- | --- | --- |
| Speed | Faster ingestion | Slower ingestion due to additional LLM call |
| Cost | More cost-effective | Less cost-effective |
| Detail | Basic comparison based on embeddings | Detailed captions highlighting specific features |
| Customization | Less customizable | Highly customizable with prompts |
| Text Input Latency | Same as multimodal LLM | Same as multimodal embeddings |
| Image Input Latency | Faster, no extra processing required | Slower, requires extra LLM call to generate image caption |
| Best Use Case | General use, quick and efficient data handling | Precise searches needing detailed image descriptions |

Conclusion

Building real-world RAG systems with heterogeneous data formats presents unique challenges, but also unlocks powerful capabilities for natural language interactions with complex data sources. By employing techniques like intent detection, code generation, and multimodal embeddings, you can create intelligent systems that understand queries, retrieve relevant information from structured and unstructured data sources, and provide coherent responses. The key to success lies in breaking down the problem into modular components and using the strengths of FMs for each component. Intent detection helps route queries to the appropriate processing logic, and code generation enables quantitative reasoning and analysis on structured data sources. Multimodal embeddings and multimodal FMs help you bridge the gap between text and visual data, so you can integrate images and other media into your knowledge bases.

Get started with FMs and embedding models in Amazon Bedrock to build RAG solutions that seamlessly integrate tabular, image, and text data for your organization’s unique needs.

About the Author

Aude Genevay is a Senior Applied Scientist at the Generative AI Innovation Center, where she helps customers tackle critical business challenges and create value using generative AI. She holds a PhD in theoretical machine learning and enjoys turning cutting-edge research into real-world solutions.

Cohere Embed multimodal embeddings model is now available on Amazon SageMaker JumpStart

The Cohere Embed multimodal embeddings model is now generally available on Amazon SageMaker JumpStart. This model is the newest Cohere Embed 3 model, which is now multimodal and capable of generating embeddings from both text and images, enabling enterprises to unlock real value from their vast amounts of data that exist in image form.

In this post, we discuss the benefits and capabilities of this new model with some examples.

Overview of multimodal embeddings and multimodal RAG architectures

Multimodal embeddings are mathematical representations that integrate information not only from text but from multiple data modalities (such as product images, graphs, and charts) into a unified vector space. This integration allows for seamless interaction and comparison between different types of data. As foundation models (FMs) advance, they increasingly require the ability to interpret and generate content across various modalities to better mimic human understanding and communication. This trend toward multimodality enhances the capabilities of AI systems in tasks like cross-modal retrieval, where a query in one modality (such as text) retrieves data in another modality (such as images or design files).

Multimodal embeddings can enable personalized recommendations by understanding user preferences and matching them with the most relevant assets. For instance, in ecommerce, product images are a critical factor influencing purchase decisions. Multimodal embeddings models can enhance personalization through visual similarity search, where users can upload an image or select a product they like, and the system finds visually similar items. In the case of retail and fashion, multimodal embeddings can capture stylistic elements, enabling the search system to recommend products that fit a particular aesthetic, such as “vintage,” “bohemian,” or “minimalist.”

Multimodal Retrieval Augmented Generation (MM-RAG) is emerging as a powerful evolution of traditional RAG systems, addressing limitations and expanding capabilities across diverse data types. Traditionally, RAG systems were text-centric, retrieving information from large text databases to provide relevant context for language models. However, as data becomes increasingly multimodal in nature, extending these systems to handle various data types is crucial to provide more comprehensive and contextually rich responses. MM-RAG systems that use multimodal embeddings models to encode both text and images into a shared vector space can simplify retrieval across modalities. MM-RAG systems can also enable enhanced customer service AI agents that can handle queries that involve both text and images, such as product defects or technical issues.

Cohere Multimodal Embed 3: Powering enterprise search across text and images

Cohere’s embeddings model, Embed 3, is an industry-leading AI search model that is designed to transform semantic search and generative AI applications. Cohere Embed 3 is now multimodal and capable of generating embeddings from both text and images. This enables enterprises to unlock real value from their vast amounts of data that exist in image form. Businesses can now build systems that accurately search important multimodal assets such as complex reports, ecommerce product catalogs, and design files to boost workforce productivity.

Cohere Embed 3 translates input data into long strings of numbers that represent the meaning of the data. These numerical representations are then compared to each other to determine similarities and differences. Cohere Embed 3 places both text and image embeddings in the same space for an integrated experience.

The following figure illustrates an example of this workflow. This figure is simplified for illustrative purposes. In practice, the numerical representations of data (seen in the output column) are far longer and the vector space that stores them has a higher number of dimensions.

This similarity comparison enables applications to retrieve enterprise data that is relevant to an end-user query. In addition to being a fundamental component of semantic search systems, Cohere Embed 3 is useful in RAG systems because it gives generative models like the Command R series the most relevant context to inform their responses.

All businesses, across industry and size, can benefit from multimodal AI search. Specifically, customers are interested in the following real-world use cases: