Amazon has introduced two new creative content generation models on Amazon Bedrock: Amazon Nova Canvas for image generation and Amazon Nova Reel for video creation. These models transform text and image inputs into custom visuals, opening up creative opportunities for both professional and personal projects. Nova Canvas, a state-of-the-art image generation model, creates professional-grade images from text and image inputs, ideal for applications in advertising, marketing, and entertainment. Similarly, Nova Reel, a cutting-edge video generation model, supports the creation of short videos from text and image inputs, complete with camera motion controls using natural language. Although these models are powerful tools for creative expression, their effectiveness relies heavily on how well users can communicate their vision through prompts.

This post dives deep into prompt engineering for both Nova Canvas and Nova Reel. We share proven techniques for describing subjects, environments, lighting, and artistic style in ways the models can understand. For Nova Reel, we explore how to effectively convey camera movements and transitions through natural language. Whether you’re creating marketing content, prototyping designs, or exploring creative ideas, these prompting strategies can help you unlock the full potential of the visual generation capabilities of Amazon Nova.

Solution overview

To get started with Nova Canvas and Nova Reel, you can either use the Image/Video Playground on the Amazon Bedrock console or access the models through APIs. For detailed setup instructions, including account requirements, model access, and necessary permissions, refer to Creative content generation with Amazon Nova.

Generate images with Nova Canvas

Prompting for image generation models like Amazon Nova Canvas is both an art and a science. Unlike large language models (LLMs), Nova Canvas doesn’t reason or interpret command-based instructions explicitly. Instead, it transforms prompts into images based on how well the prompt captures the essence of the desired image. Writing effective prompts is key to unlocking the full potential of the model for various use cases. In this post, we explore how to craft effective prompts, examine essential elements of good prompts, and dive into typical use cases, each with compelling example prompts.

Essential elements of good prompts

A good prompt serves as a descriptive image caption rather than command-based instructions. It should provide enough detail to clearly describe the desired outcome while maintaining brevity (limited to 1,024 characters). Instead of giving command-based instructions like “Make a beautiful sunset,” you can achieve better results by describing the scene as if you’re looking at it: “A vibrant sunset over mountains, with golden rays streaming through pink clouds, captured from a low angle.” Think of it as painting a vivid picture with words to guide the model effectively.

Effective prompts start by clearly defining the main subject and action:

- Subject – Clearly define the main subject of the image. Example: “A blue sports car parked in front of a grand villa.”

- Action or pose – Specify what the subject is doing or how it is positioned. Example: “The car is angled slightly towards the camera, its doors open, showcasing its sleek interior.”

Add further context:

- Environment – Describe the setting or background. Example: “A grand villa overlooking Lake Como, surrounded by manicured gardens and sparkling lake waters.”

After you define the main focus of the image, you can refine the prompt further by specifying additional attributes such as visual style, framing, lighting, and technical parameters. For instance:

- Lighting – Include lighting details to set the mood. Example: “Soft, diffused lighting from a cloudy sky highlights the car’s glossy surface and the villa’s stone facade.”

- Camera position and framing – Provide information about perspective and composition. Example: “A wide-angle shot capturing the car in the foreground and the villa’s grandeur in the background, with Lake Como visible beyond.”

- Style – Mention the visual style or medium. Example: “Rendered in a cinematic style with vivid, high-contrast details.”

Finally, you can use a negative prompt to exclude specific elements or artifacts from your composition, aligning it more closely to your vision:

- Negative prompt – Use the negativeText parameter to exclude unwanted elements in your main prompt. For instance, to remove any birds or people from the image, rather than adding “no birds or people” to the prompt, add “birds, people” to the negative prompt instead. Avoid using negation words like “no,” “not,” or “without” in both the prompt and negative prompt because they might lead to unintended consequences.
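The character limit and the advice about negation words can be checked before a prompt is submitted. The following is a minimal sketch in Python; the check_image_prompt helper and its warning messages are hypothetical illustrations and not part of any AWS SDK.

```python
import re

MAX_PROMPT_CHARS = 1024  # Nova Canvas prompt limit stated in this post
NEGATION_WORDS = ("no", "not", "without")  # words this post advises against


def check_image_prompt(prompt: str, negative_prompt: str = "") -> list[str]:
    """Return human-readable warnings; an empty list means no issues were found."""
    warnings = []
    if len(prompt) > MAX_PROMPT_CHARS:
        warnings.append(f"prompt is {len(prompt)} characters; limit is {MAX_PROMPT_CHARS}")
    for label, text in (("prompt", prompt), ("negative prompt", negative_prompt)):
        for word in NEGATION_WORDS:
            # Match whole words only, so "notable" or "northern" are not flagged.
            if re.search(rf"\b{word}\b", text, flags=re.IGNORECASE):
                warnings.append(f'{label} contains the negation word "{word}"')
    return warnings


# The first prompt uses a negation word; the second moves it to the negative prompt.
print(check_image_prompt("A quiet beach at dawn with no birds or people"))
print(check_image_prompt("A quiet beach at dawn", negative_prompt="birds, people"))
```

The first call reports the word “no” in the prompt, and the second call returns an empty list because the unwanted elements are listed in the negative prompt instead.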

Let’s bring all these elements together with an example prompt with a basic and enhanced version that shows these techniques in action.

| Basic | Enhanced |
| --- | --- |
| A car in front of a house | A blue luxury sports car parked in front of a grand villa overlooking Lake Como. The setting features meticulously manicured gardens, with the lake’s sparkling waters and distant mountains in the background. The car’s sleek, polished surface reflects the surrounding elegance, enhanced by soft, diffused lighting from a cloudy sky. A wide-angle shot capturing the car, villa, and lake in harmony, rendered in a cinematic style with vivid, high-contrast details. |
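The prompt elements described earlier (subject, action, environment, lighting, framing, and style) can also be assembled programmatically. The following is a minimal sketch; the ImagePrompt class and its field names are hypothetical and only illustrate one way to keep each element separate while iterating.

```python
from dataclasses import dataclass


@dataclass
class ImagePrompt:
    """Holds one prompt element per field; only the subject is required."""
    subject: str
    action: str = ""
    environment: str = ""
    lighting: str = ""
    framing: str = ""
    style: str = ""

    def render(self) -> str:
        """Join the non-empty elements into one caption-style prompt."""
        parts = [self.subject, self.action, self.environment,
                 self.lighting, self.framing, self.style]
        sentences = [p.strip().rstrip(".") + "." for p in parts if p.strip()]
        return " ".join(sentences)


prompt = ImagePrompt(
    subject="A blue sports car parked in front of a grand villa",
    environment="Manicured gardens and the sparkling waters of Lake Como in the background",
    lighting="Soft, diffused lighting from a cloudy sky",
    framing="A wide-angle shot capturing the car in the foreground",
    style="Rendered in a cinematic style with vivid, high-contrast details",
).render()
print(prompt)
print(len(prompt) <= 1024)  # stays within the character limit stated in this post
```

Keeping the elements in separate fields makes it easier to change one attribute, such as lighting, while leaving the rest of the prompt untouched.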

Example image generation prompts

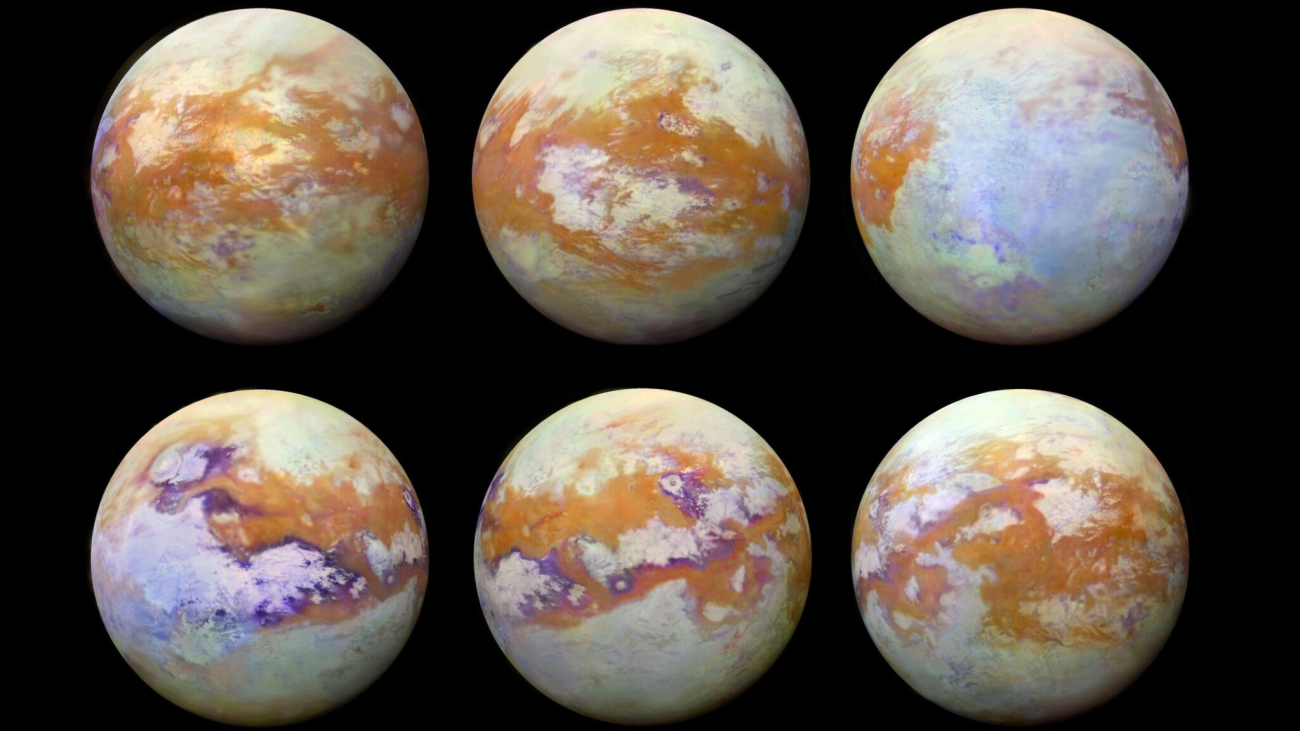

By mastering these techniques, you can create stunning visuals for a wide range of applications using Amazon Nova Canvas. The following images have been generated using Nova Canvas at a 1280×720 pixel resolution with a CFG scale of 6.5 and seed of 0 for reproducibility (see Request and response structure for image generation for detailed explanations of these parameters). This resolution also matches the image dimensions expected by Nova Reel, allowing seamless integration for video experimentation. Let’s examine some example prompts and their resulting images to see these techniques in action.
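For readers using the API rather than the playground, the settings above could be expressed as a request body along the following lines. This is a sketch under stated assumptions: the field names taskType, textToImageParams, imageGenerationConfig, and numberOfImages, as well as the model ID in the comment, reflect our understanding of the Amazon Bedrock request structure and should be verified against Request and response structure for image generation. The build_canvas_request helper is hypothetical.

```python
import json


def build_canvas_request(prompt: str, negative_prompt: str = "", seed: int = 0) -> dict:
    """Build a text-to-image request body with the settings used in this post."""
    params = {"text": prompt}
    if negative_prompt:
        params["negativeText"] = negative_prompt
    return {
        "taskType": "TEXT_IMAGE",          # assumed field name and value
        "textToImageParams": params,       # assumed field name
        "imageGenerationConfig": {         # assumed field name
            "numberOfImages": 1,
            "width": 1280,
            "height": 720,
            "cfgScale": 6.5,
            "seed": seed,
        },
    }


request = build_canvas_request(
    "An overhead shot of premium over-ear headphones resting on a reflective surface",
    negative_prompt="birds, people",
)
body = json.dumps(request)
print(json.dumps(request, indent=2))

# With AWS credentials and model access in place, the body could then be sent
# with boto3 (assumed model ID; check the Amazon Bedrock console for the exact value):
#   boto3.client("bedrock-runtime").invoke_model(
#       modelId="amazon.nova-canvas-v1:0", body=body)
```

Building the body separately from the network call makes it possible to log or compare requests while iterating on prompts.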

- Aerial view of sparse arctic tundra landscape, expansive white terrain with meandering frozen rivers and scattered rock formations. High-contrast black and white composition showcasing intricate patterns of ice and snow, emphasizing texture and geological diversity. Bird’s-eye perspective capturing the abstract beauty of the arctic wilderness.

- An overhead shot of premium over-ear headphones resting on a reflective surface, showcasing the symmetry of the design. Dramatic side lighting accentuates the curves and edges, casting subtle shadows that highlight the product’s premium build quality.

- An angled view of a premium matte metal water bottle with bamboo accents, showcasing its sleek profile. The background features a soft blur of a serene mountain lake. Golden hour sunlight casts a warm glow on the bottle’s surface, highlighting its texture. Captured with a shallow depth of field for product emphasis.

- Watercolor scene of a cute baby dragon with pearlescent mint-green scales crouched at the edge of a garden puddle, tiny wings raised. Soft pastel flowers and foliage frame the composition. Loose, wet-on-wet technique for a dreamy atmosphere, with sunlight glinting off ripples in the puddle.

- Abstract figures emerging from digital screens, glitch art aesthetic with RGB color shifts, fragmented pixel clusters, high contrast scanlines, deep shadows cast by volumetric lighting.

- An intimate portrait of a seasoned fisherman, his face filling the frame. His thick gray beard is flecked with sea spray, and his knit cap is pulled low over his brow. The warm glow of sunset bathes his weathered features in golden light, softening the lines of his face while still preserving the character earned through years at sea. His eyes reflect the calm waters of the harbor behind him.

Generate video with Nova Reel

Video generation models work best with descriptive prompts rather than command-based instructions. When crafting prompts, focus on what you want to see rather than telling the model what to do. Write a detailed caption or scene description, as if you were describing a video that already exists. Describing the main subjects, what they’re doing, the setting around them, how the scene is lit, the overall artistic style, and any camera movements helps create more accurate results. For example, instead of saying “create a dramatic scene,” you could describe “a stormy beach at sunset with crashing waves and dark clouds, filmed with a slow aerial shot moving over the coastline.” The more specific and descriptive you are about the visual elements you want, the better the output is likely to be.

When writing a video generation prompt for Nova Reel, be mindful of the following requirements and best practices:

- Prompts must be no longer than 512 characters.

- For best results, place camera movement descriptions at the start or end of your prompt.

- Specify what you want to include rather than what to exclude. For example, instead of “fruit basket with no bananas,” say “fruit basket with apples, oranges, and pears.”

When describing camera movements in your video prompts, be specific about the type of motion you want—whether it’s a smooth dolly shot (moving forward/backward), a pan (sweeping left/right), or a tilt (moving up/down). For more dynamic effects, you can request aerial shots, orbit movements, or specialized techniques like dolly zooms. You can also specify the speed of the movement; refer to camera controls for more ideas.
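The length limit and the camera placement guidance above can be captured in a small helper. The following is a minimal sketch; build_video_prompt is a hypothetical function that only assembles and validates text, and it does not call any AWS API.

```python
MAX_VIDEO_PROMPT_CHARS = 512  # Nova Reel prompt limit stated in this post


def build_video_prompt(scene: str, camera: str = "", camera_first: bool = False) -> str:
    """Combine a scene description with a camera movement placed at the start or end."""
    scene = scene.strip().rstrip(".")
    camera = camera.strip().rstrip(".")
    if not camera:
        parts = [scene]
    elif camera_first:
        parts = [camera, scene]
    else:
        parts = [scene, camera]
    prompt = ". ".join(parts) + "."
    if len(prompt) > MAX_VIDEO_PROMPT_CHARS:
        raise ValueError(
            f"prompt is {len(prompt)} characters; limit is {MAX_VIDEO_PROMPT_CHARS}")
    return prompt


print(build_video_prompt(
    "A stormy beach at sunset with crashing waves and dark clouds",
    camera="Slow aerial shot moving over the coastline",
))
```

Passing camera_first=True moves the camera description to the start of the prompt, which makes it easy to compare both placements for the same scene.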

Example video generation prompts

Let’s look at some of the outputs from Amazon Nova Reel.

Generate image-based video using Nova Reel

In addition to the fundamental text-to-video, Amazon Nova Reel also supports an image-to-video feature that allows you to use an input reference image to guide video generation. Using image-based prompts for video generation offers significant advantages: it speeds up your creative iteration process and provides precise control over the final output. Instead of relying solely on text descriptions, you can visually define exactly how you want your video to start. These images can come from Nova Canvas or from other sources where you have appropriate rights to use them. This approach has two main strategies:

- Simple camera motion – Start with your reference image to establish the visual elements and style, then add minimal prompts focused purely on camera movement, such as “dolly forward.” This approach keeps the scene largely static while creating dynamic motion through camera direction.

- Dynamic transformation – This strategy involves describing specific actions and temporal changes in your scene. Detail how elements should evolve over time, framing your descriptions as summaries of the desired transformation rather than step-by-step commands. This allows for more complex scene evolution while maintaining the visual foundation established by your reference image.

This method streamlines your creative workflow by using your image as the visual foundation. Rather than spending time refining text prompts to achieve the right look, you can quickly create an image in Nova Canvas (or source it elsewhere) and use it as your starting point in Nova Reel. This approach leads to faster iterations and more predictable results compared to pure text-to-video generation.
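As a rough illustration of the image-to-video workflow, a request could pair a short camera prompt with a reference image. This is a sketch under stated assumptions: the field names taskType, textToVideoParams, images, and videoGenerationConfig, the duration and frame rate values, and the API call named in the closing comment reflect our understanding of the Amazon Bedrock API and should be verified against the Amazon Nova documentation. The build_reel_request helper is hypothetical.

```python
import base64
from typing import Optional


def build_reel_request(prompt: str, image_bytes: Optional[bytes] = None, seed: int = 0) -> dict:
    """Build a video request; include a reference image when one is provided."""
    params = {"text": prompt}
    if image_bytes is not None:
        params["images"] = [{                 # assumed field name and layout
            "format": "png",
            "source": {"bytes": base64.b64encode(image_bytes).decode("utf-8")},
        }]
    return {
        "taskType": "TEXT_VIDEO",             # assumed field name and value
        "textToVideoParams": params,          # assumed field name
        "videoGenerationConfig": {            # assumed field name and values
            "durationSeconds": 6,
            "fps": 24,
            "dimension": "1280x720",          # matches the image size used in this post
            "seed": seed,
        },
    }


# Placeholder bytes stand in for a real 1280x720 PNG created with Nova Canvas.
placeholder_image = b"\x89PNG placeholder"
request = build_reel_request("dolly forward", image_bytes=placeholder_image)
print(sorted(request["textToVideoParams"].keys()))

# Video generation runs asynchronously; we believe the request is submitted with
# the bedrock-runtime start_async_invoke operation and an S3 output location.
```

The simple camera motion strategy maps to a very short prompt such as “dolly forward,” whereas the dynamic transformation strategy would use a longer description of how the scene changes.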

Let’s take some images we created earlier with Amazon Nova Canvas and use them as reference frames to generate videos with Amazon Nova Reel.

Tying it all together

You can transform your storytelling by combining multiple generated video clips into a captivating narrative. Although Nova Reel handles the video generation, you can further enhance the content using preferred video editing software to add thoughtful transitions, background music, and narration. This combination creates an immersive journey that transports viewers into compelling storytelling. The following example showcases Nova Reel’s capabilities in creating engaging visual narratives. Each clip is crafted with professional lighting and cinematic quality.

Best practices

Consider the following best practices:

- Refine iteratively – Start simple and refine your prompt based on the output.

- Be specific – Provide detailed descriptions for better results.

- Diversify adjectives – Avoid overusing generic descriptors like “beautiful” or “amazing”; opt for specific terms like “serene” or “ornate.”

- Refine prompts with AI – Use Amazon Nova understanding models (Pro, Lite, or Micro) to help you convert your high-level idea into a refined prompt based on best practices. Generative AI can also help you quickly generate variations of your prompt so you can experiment with different combinations of style, framing, and other creative choices. Experiment with community-built tools like the Amazon Nova Canvas Prompt Refiner in PartyRock, the Amazon Nova Canvas Prompting Assistant, and the Nova Reel Prompt Optimizer.

- Maintain a templatized prompt library – Keep a catalog of effective prompts and their results to refine and adapt over time. Create reusable templates for common scenarios to save time and maintain consistency.

- Learn from others – Explore community resources and tools to see effective prompts and adapt them.

- Follow trends – Monitor updates or new model features, because prompt behavior might change with new capabilities.
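A templatized prompt library, as suggested in the best practices above, can start as a dictionary of named templates. The following is a minimal sketch using Python’s standard string.Template; the template name, placeholder names, and render_prompt helper are hypothetical.

```python
from string import Template

# Each entry is a reusable prompt with $placeholders for the parts that vary.
PROMPT_TEMPLATES = {
    "product_shot": Template(
        "An angled view of $product, showcasing its $feature. "
        "The background features a soft blur of $backdrop. "
        "$lighting. Captured with a shallow depth of field for product emphasis."
    ),
}


def render_prompt(name: str, **fields: str) -> str:
    """Fill a named template; raises KeyError if the name or a placeholder is missing."""
    return PROMPT_TEMPLATES[name].substitute(**fields)


print(render_prompt(
    "product_shot",
    product="a premium matte metal water bottle with bamboo accents",
    feature="sleek profile",
    backdrop="a serene mountain lake",
    lighting="Golden hour sunlight casts a warm glow on the bottle's surface",
))
```

Storing the filled-in values alongside the generated images makes it easier to see which substitutions produced the best results.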

Fundamentals of prompt engineering for Amazon Nova

Effective prompt engineering for Amazon Nova Canvas and Nova Reel follows an iterative process, designed to refine your input and achieve the desired output. This process can be visualized as a logical flow chart, as illustrated in the following diagram.

The process consists of the following steps:

1. Begin by crafting your initial prompt, focusing on descriptive elements such as subject, action, environment, lighting, style, and camera position or motion.

2. After generating the first output, assess whether it aligns with your vision. If the result is close to what you want, proceed to the next step. If not, return to Step 1 and refine your prompt.

3. When you’ve found a promising direction, maintain the same seed value. This maintains consistency in subsequent generations while you make minor adjustments.

4. Make small, targeted changes to your prompt. These could involve adjusting descriptors, adding or removing elements, and more.

5. Generate a new output with your refined prompt, keeping the seed value constant.

6. Evaluate the new output. If it’s satisfactory, move to the next step. If not, return to Step 4 and continue refining.

7. When you’ve achieved a satisfactory result, experiment with different seed values to create variations of your successful prompt. This allows you to explore subtle differences while maintaining the core elements of your desired output.

8. Select the best variations from your generated options as your final output.

This iterative approach allows for systematic improvement of your prompts, leading to more accurate and visually appealing results from Nova Canvas and Nova Reel models. Remember, the key to success lies in making incremental changes, maintaining consistency where needed, and being open to exploring variations after you’ve found a winning formula.
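The two phases of this process, refining the prompt under a fixed seed and then varying the seed under a fixed prompt, can be sketched as operations on a request dictionary. The helper names and the dictionary layout below are hypothetical and assumed for illustration; no model is called.

```python
import copy


def with_prompt(request: dict, prompt: str) -> dict:
    """Return a copy with a refined prompt and the seed left unchanged."""
    updated = copy.deepcopy(request)
    updated["textToImageParams"]["text"] = prompt
    return updated


def seed_variations(request: dict, seeds: list[int]) -> list[dict]:
    """Return one copy per seed with the prompt left unchanged."""
    variations = []
    for seed in seeds:
        variant = copy.deepcopy(request)
        variant["imageGenerationConfig"]["seed"] = seed
        variations.append(variant)
    return variations


# Assumed request layout: a prompt under textToImageParams, a seed under imageGenerationConfig.
base = {
    "textToImageParams": {"text": "A car in front of a house"},
    "imageGenerationConfig": {"seed": 0},
}

# Refinement phase: change the wording, keep the seed constant.
refined = with_prompt(base, "A blue luxury sports car parked in front of a grand villa")

# Variation phase: keep the successful prompt, explore different seeds.
variants = seed_variations(refined, [1, 2, 3])
print([v["imageGenerationConfig"]["seed"] for v in variants])  # [1, 2, 3]
```

Because each helper returns a copy, the original request stays available for comparison against later refinements.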

Conclusion

Understanding effective prompt engineering for Nova Canvas and Nova Reel unlocks exciting possibilities for creating stunning images and compelling videos. By following the best practices outlined in this guide—from crafting descriptive prompts to iterative refinement—you can transform your ideas into production-ready visual assets.

Ready to start creating? Visit the Amazon Bedrock console today to experiment with Nova Canvas and Nova Reel in the Amazon Bedrock Playground or using the APIs. For detailed specifications, supported features, and additional examples, refer to the following resources:

- Creative content generation with Amazon Nova

- Prompting best practices for Amazon Nova content creation models

About the authors

Yanyan Zhang is a Senior Generative AI Data Scientist at Amazon Web Services, where she has been working on cutting-edge AI/ML technologies as a Generative AI Specialist, helping customers use generative AI to achieve their desired outcomes. Yanyan graduated from Texas A&M University with a PhD in Electrical Engineering. Outside of work, she loves traveling, working out, and exploring new things.

Kris Schultz has spent over 25 years bringing engaging user experiences to life by combining emerging technologies with world class design. In his role as Senior Product Manager, Kris helps design and build AWS services to power Media & Entertainment, Gaming, and Spatial Computing.

Sanju Sunny is a Digital Innovation Specialist with Amazon ProServe. He engages with customers in a variety of industries around Amazon’s distinctive customer-obsessed innovation mechanisms in order to rapidly conceive, validate and prototype new products, services and experiences.

Nitin Eusebius is a Sr. Enterprise Solutions Architect at AWS, experienced in Software Engineering, Enterprise Architecture, and AI/ML. He is deeply passionate about exploring the possibilities of generative AI. He collaborates with customers to help them build well-architected applications on the AWS platform, and is dedicated to solving technology challenges and assisting with their cloud journey.

Vishal Naik is a Sr. Solutions Architect at Amazon Web Services (AWS). He is a builder who enjoys helping customers accomplish their business needs and solve complex challenges with AWS solutions and best practices. His core area of focus includes Generative AI and Machine Learning. In his spare time, Vishal loves making short films on time travel and alternate universe themes.

Sumeet Tripathi is an Enterprise Support Lead (TAM) at AWS in North Carolina. He has over 17 years of experience in technology across various roles. He is passionate about helping customers reduce operational challenges and friction. His focus areas are AI/ML and the Energy & Utilities segment. Outside of work, he enjoys traveling with family and watching cricket and movies.

Syed Jaffry is a Principal Solutions Architect with AWS. He advises software companies on AI and helps them build modern, robust and secure application architectures on AWS.

Amit Kumar Agrawal is a Senior Solutions Architect at AWS where he has spent over 5 years working with large ISV customers. He helps organizations build and operate cost-efficient and scalable solutions in the cloud, driving their business and technical outcomes.

Tej Nagabhatla is a Senior Solutions Architect at AWS, where he works with a diverse portfolio of clients ranging from ISVs to large enterprises. He specializes in providing architectural guidance across a wide range of topics around AI/ML, security, storage, containers, and serverless technologies. He helps organizations build and operate cost-efficient, scalable cloud applications. In his free time, Tej enjoys music, playing basketball, and traveling.

Muni Annachi, a Senior DevOps Consultant at AWS, boasts over a decade of expertise in architecting and implementing software systems and cloud platforms. He specializes in guiding non-profit organizations to adopt DevOps CI/CD architectures, adhering to AWS best practices and the AWS Well-Architected Framework. Beyond his professional endeavors, Muni is an avid sports enthusiast and tries his luck in the kitchen.

Ajay Raghunathan is a Machine Learning Engineer at AWS. His current work focuses on architecting and implementing ML solutions at scale. He is a technology enthusiast and a builder with a core area of interest in AI/ML, data analytics, serverless, and DevOps. Outside of work, he enjoys spending time with family, traveling, and playing football.

Arun Dyasani is a Senior Cloud Application Architect at AWS. His current work focuses on designing and implementing innovative software solutions. His role centers on crafting robust architectures for complex applications, leveraging his deep knowledge and experience in developing large-scale systems.

Shweta Singh is a Senior Product Manager in the Amazon SageMaker Machine Learning platform team at AWS, leading the SageMaker Python SDK. She has worked in several product roles in Amazon for over 5 years. She has a Bachelor of Science degree in Computer Engineering and a Masters of Science in Financial Engineering, both from New York University.

Jenna Eun is a Principal Practice Manager for the Health and Advanced Compute team at AWS Professional Services. Her team focuses on designing and delivering data, ML, and advanced computing solutions for the public sector, including federal, state and local governments, academic medical centers, nonprofit healthcare organizations, and research institutions.

Meenakshi Ponn Shankaran is a Principal Domain Architect at AWS in the Data & ML Professional Services Org. He has extensive expertise in designing and building large-scale data lakes, handling petabytes of data. Currently, he focuses on delivering technical leadership to AWS US Public Sector clients, guiding them in using innovative AWS services to meet their strategic objectives and unlock the full potential of their data.