Formula 1® (F1) races are high-stakes affairs where operational efficiency is paramount. During these live events, F1 IT engineers must triage critical issues across its services, such as network degradation to one of its APIs. This impacts downstream services that consume data from the API, including products such as F1 TV, which offer live and on-demand coverage of every race as well as real-time telemetry. Determining the root cause of these issues and preventing it from happening again takes significant effort. Due to the event schedule and change freeze periods, it can take up to 3 weeks to triage, test, and resolve a critical issue, requiring investigations across teams including development, operations, infrastructure, and networking.

“We used to have a recurring issue with the web API system, which was slow to respond and provided inconsistent outputs. Teams spent around 15 full engineer days to iteratively resolve the issue over several events: reviewing logs, inspecting anomalies, and iterating on the fixes,” says Lee Wright, head of IT Operations at Formula 1. Recognizing this challenge as an opportunity for innovation, F1 partnered with Amazon Web Services (AWS) to develop an AI-driven solution using Amazon Bedrock to streamline issue resolution. In this post, we show you how F1 created a purpose-built root cause analysis (RCA) assistant to empower users such as operations engineers, software developers, and network engineers to troubleshoot issues, narrow down on the root cause, and significantly reduce the manual intervention required to fix recurrent issues during and after live events. We’ve also provided a GitHub repo for a general-purpose version of the accompanying chat-based application.

Users can ask the RCA chat-based assistant questions using natural language prompts, with the solution troubleshooting in the background, identifying potential reasons for the incident and recommending next steps. The assistant is connected to internal and external systems, with the capability to query various sources such as SQL databases, Amazon CloudWatch logs, and third-party tools to check the live system health status. Because the solution doesn’t require domain-specific knowledge, it even allows engineers of different disciplines and levels of expertise to resolve issues.

“With the RCA tool, the team could narrow down the root cause and implement a solution within 3 days, including deployments and testing over a race weekend. The system not only saves time on active resolution, it also routes the issue to the correct team to resolve, allowing teams to focus on other high-priority tasks, like building new products to enhance the race experience,” adds Wright. By using generative AI, engineers can receive a response within 5–10 seconds on a specific query and reduce the initial triage time from more than a day to less than 20 minutes. The end-to-end time to resolution has been reduced by as much as 86%.

Implementing the root cause analysis solution architecture

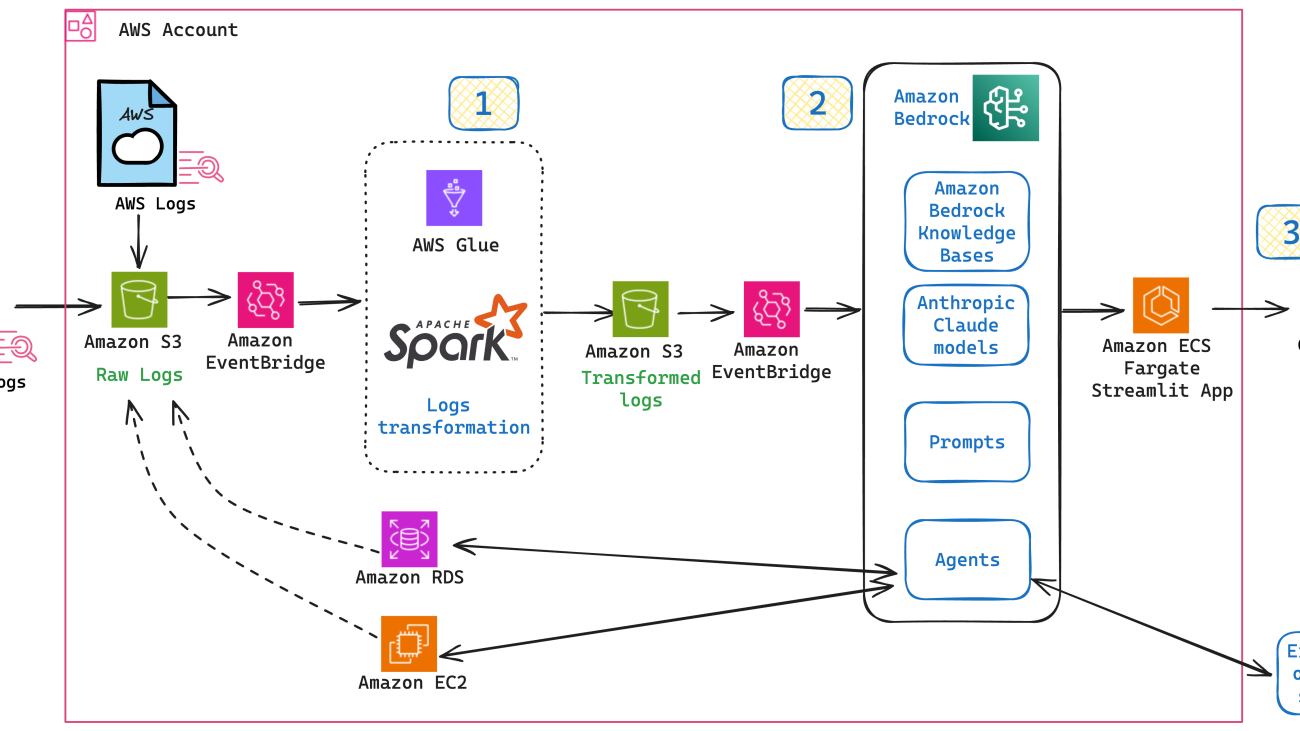

In collaboration with the AWS Prototyping team, F1 embarked on a 5-week prototype to demonstrate the feasibility of this solution. The objective was to use AWS to replicate and automate the current manual troubleshooting process for two candidate systems. As a starting point, the team reviewed real-life issues, drafting a flowchart outlining 1) the troubleshooting process, 2) teams and systems involved, 3) required live checks, and 4) logs investigations required for each scenario. The following is a diagram of the solution architecture.

To handle the log data efficiently, raw logs were centralized into an Amazon Simple Storage Service (Amazon S3) bucket. An Amazon EventBridge schedule checked this bucket hourly for new files and triggered log transformation extract, transform, and load (ETL) pipelines built using AWS Glue and Apache Spark. The transformed logs were stored in a separate S3 bucket, while another EventBridge schedule fed these transformed logs into Amazon Bedrock Knowledge Bases, an end-to-end managed Retrieval Augmented Generation (RAG) workflow capability, allowing the chat assistant to query them efficiently. Amazon Bedrock Agents facilitates interaction with internal systems such as databases and Amazon Elastic Compute Cloud (Amazon EC2) instances and external systems such as Jira and Datadog. Anthropic’s Claude 3 models (the latest model at the time of development) were used to orchestrate and generate high-quality responses, maintaining accurate and relevant information from the chat assistant. Finally, the chat application is hosted in an AWS Fargate for Amazon Elastic Container Service (Amazon ECS) service, providing scalability and reliability to handle variable loads without compromising performance.

The following sections further explain the main components of the solution: ETL pipelines to transform the log data, agentic RAG implementation, and the chat application.

Creating ETL pipelines to transform log data

Preparing your data to provide quality results is the first step in an AI project. AWS helps you improve your data quality over time so you can innovate with trust and confidence. Amazon CloudWatch gives you visibility into system-wide performance and allows you to set alarms, automatically react to changes, and gain a unified view of operational health.

For this solution, AWS Glue and Apache Spark handled data transformations from these logs and other data sources to improve the chatbot’s accuracy and cost efficiency. AWS Glue helps you discover, prepare, and integrate your data at scale. For this project, there was a simple three-step process for the log data transformation. The following is a diagram of the data processing flow.

|

- Data standardization: Schemas, types and formats – Conforming the data to a unified format helps the chat assistant understand the data more thoroughly, improving output accuracy. To enable Amazon Bedrock Knowledge Bases to ingest data consumed from different sources and formats (such as structure, schema, column names, timestamp formats), the data must first be standardized.

- Data filtering: Removing unnecessary data – To improve the chat assistant’s performance further, it’s important to reduce the amount of data to scan. A simple way to do that is to determine which data columns wouldn’t be used by the chat assistant. This removed a considerable amount of data in the ETL process even before ingesting into the knowledge base. Plus, it reduced costs in the embeddings process because less data is used to transform and tokenize into the vector database. All this helps improve the chat assistant’s accuracy, performance, and cost. For example, the chat assistant doesn’t need all the headers from some HTTP requests, but it does need the host and user agent.

- Data aggregation: Reducing data size – Users only need to know by the minute when a problem occurred, so aggregating data at the minute level helped to reduce the data size. For example, when there are 60 data points per minute with API response times, data was aggregated to a single data point per minute. This single aggregated event contains attributes such as the maximum time taken to fulfill a request, focusing the chat assistant to identify if the response time was high—again reducing the data needed to analyze the issue.

Building the RCA assistant with Amazon Bedrock Agents and Amazon Bedrock Knowledge Bases

Amazon Bedrock was used to build an agentic (agent-based) RAG solution for the RCA assistant. Amazon Bedrock Agents streamlines workflows and automates repetitive tasks. Agents uses the reasoning capability of foundation models (FMs) to break down user-requested tasks into multiple steps. They use the provided instruction to create an orchestration plan and then carry out the plan by invoking company APIs and accessing knowledge bases using RAG to provide a final response to the end user.

Knowledge bases are essential to the RAG framework, querying business data sources and adding relevant context to answer your questions. Amazon Bedrock Agents also allows interaction with internal and external systems, such as querying database statuses to check their health, querying Datadog for live application monitoring, and raising Jira tickets for future analysis and investigation. Anthropic’s Claude 3 Sonnet model was selected for informative and comprehensive answers and the ability to understand diversified questions. For example, it can correctly interpret user input date formats such as “2024-05-10” or “10th May 2024.”

Amazon Bedrock Agents integrates with Amazon Bedrock Knowledge Bases, providing the end user with a single and consolidated frontend. The RCA agent considers the tools and knowledge bases available, then intelligently and autonomously creates an execution plan. After the agent receives documents from the knowledge base and responses from tool APIs, it consolidates the information to feed it to the large language model (LLM) and generate the final response. The following diagram illustrates the orchestration flow.

Systems security

With Amazon Bedrock, you have full control over the data used to customize the FMs for generative AI applications such as RCA. Data is encrypted in transit and at rest. Identity-based policies provide further control over your data, helping you manage what actions roles can perform, on which resources, and under what conditions.

To evaluate the system health of RCA, the agent runs a series of checks, such as AWS Boto3 API calls (for example, boto3_client.describe_security_groups, to determine if an IP address is allowed to access system) or database SQL queries (SQL: sys.dm_os_schedulers, to query the database system metrics such as CPU, memory or user locks).

To help protect these systems against potential hallucinations or even prompt injections, agents aren’t allowed to create their own database queries or system health checks on the fly. Instead, a series of controlled SQL queries and API checks were implemented, following the principle of least privilege (PoLP). This layer also validates the input and output schema (see Powertools docs), making sure this aspect is also controlled. To learn more about protecting your application, refer to the ArXiv paper, From Prompt Injections to SQL Injection Attacks. The following code is an example.

"""

- Health Checks: one explicit function per Health Check, to avoid potential LLM hallucinations or risky syntax errors.

- DB is KMS-encrypted and behind private subnets. Connection uses Least-Privileges and Secrets Manager

- Schema is protected using OpenAPI, via AWS Lambda Powertools BedrockAgentResolver

"""

from typing import List, Annotated

from helpers import run_sql_query, check_ec2_port_access

from aws_lambda_powertools.event_handler.bedrock_agent import BedrockAgentResolver

from aws_lambda_powertools.event_handler.openapi.params import Query, Body

from aws_lambda_powertools import Metrics, Tracer, Logger

from aws_lambda_powertools.metrics import MetricUnit

# Initialize Agents, Metrics, Loggers and Tracers

app = BedrockAgentResolver()

metrics = Metrics(namespace="rca-stack-api-logs", service="HealthChecks")

tracer = Tracer()

logger = Logger(level='INFO')

@tracer.capture_method

@app.get("/checkDatabaseCPUMemory", description='Checks the CPU and Memory usage, for the Database server.')

def check_db_cpu_memory() -> Annotated[List, Body(description='Returns Database CPU and Memory metrics')]:

response = run_sql_query('db_cpu_memory')

metrics.add_metric(name="DBCpuMemory", unit=MetricUnit.Count, value=1)

logger.info(response)

return response

Frontend application: The chat assistant UI

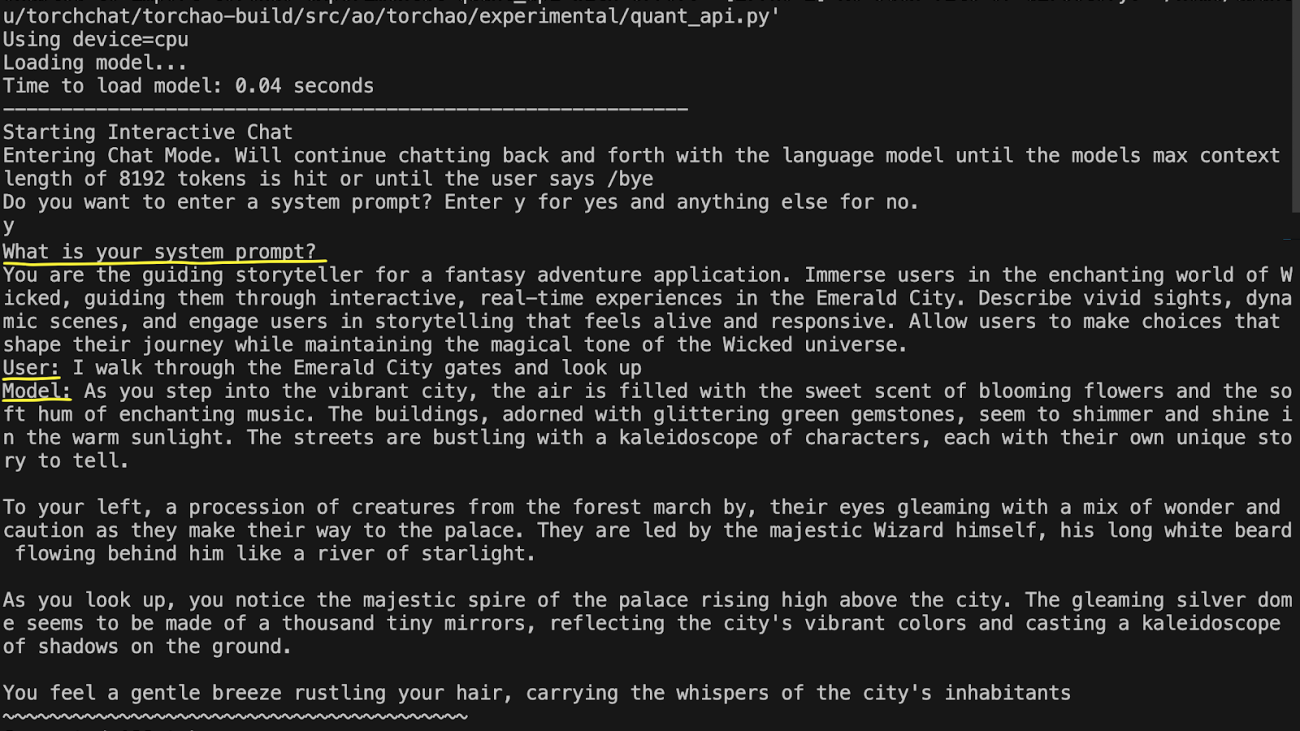

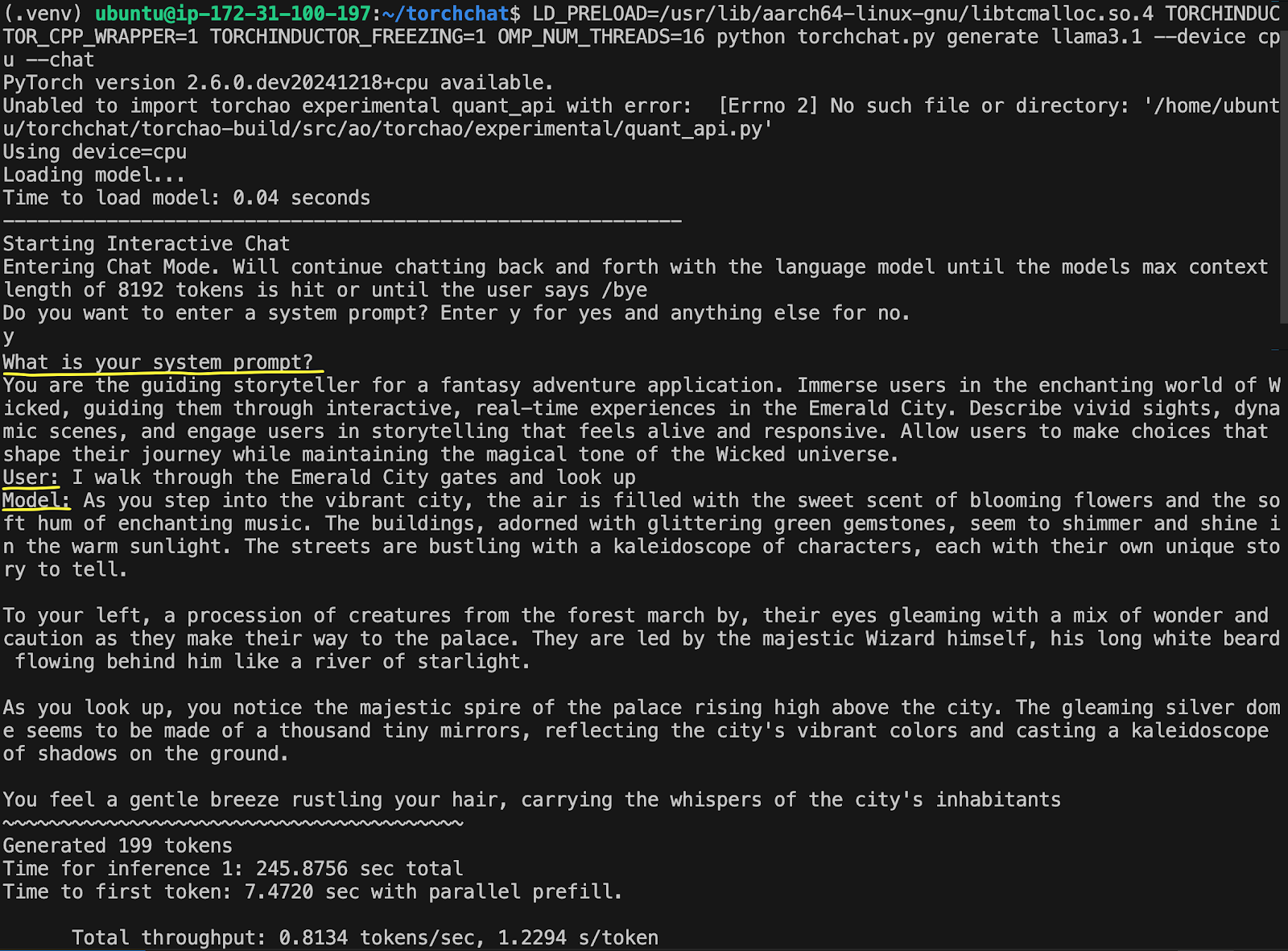

The chat assistant UI was developed using the Streamlit framework, which is Python-based and provides simple yet powerful application widgets. In the Streamlit app, users can test their Amazon Bedrock agent iterations seamlessly by providing or replacing the agent ID and alias ID. In the chat assistant, the full conversation history is displayed, and the conversation can be reset by choosing Clear. The response from the LLM application consists of two parts. On the left is the final neutral response based on the user’s questions. On the right is the trace of LLM agent orchestration plans and executions, which is hidden by default to keep the response clean and concise. The trace can be reviewed and examined by the user to make sure that the correct tools are invoked and the correct documents are retrieved by the LLM chatbot.

A general-purpose version of the chat-based application is available from this GitHub repo, where you can experiment with the solution and modify it for additional use cases.

In the following demo, the scenario involves user complaints that they can’t connect to F1 databases. Using the chat assistant, users can check if the database driver version they’re using is supported by the server. Additionally, users can verify EC2 instance network connectivity by providing the EC2 instance ID and AWS Region. These checks are performed by API tools accessible by the agent. Furthermore, users can troubleshoot website access issues by checking system logs. In the demo, users provide an error code and date, and the chat assistant retrieves relevant logs from Amazon Bedrock Knowledge Bases to answer their questions and provide information for future analysis.

Technical engineers can now query to investigate system errors and issues using natural language. It’s integrated with existing incident management tools (such as Jira) to facilitate seamless communication and ticket creation. In most cases, the chat assistant can quickly identify the root cause and provide remediation recommendations, even if multiple issues are present. When warranted, particularly challenging issues are automatically escalated to the F1 engineering team for investigation, allowing engineers to better prioritize their tasks.

Conclusion

In this post, we explained how F1 and AWS have developed a root cause analysis (RCA) assistant powered by Amazon Bedrock to reduce manual intervention and accelerate the resolution of recurrent operational issues during races from weeks to minutes. The RCA assistant enables the F1 team to spend more time on innovation and improving its services, ultimately delivering an exceptional experience for fans and partners. The successful collaboration between F1 and AWS showcases the transformative potential of generative AI in empowering teams to accomplish more in less time.

Learn more about how AWS helps F1 on and off the track.

About the Author

Carlos Contreras is a Senior Big Data and Generative AI Architect, at Amazon Web Services. Carlos specializes in designing and developing scalable prototypes for customers, to solve their most complex business challenges, implementing RAG and Agentic solutions with Distributed Data Processing techniques.

Carlos Contreras is a Senior Big Data and Generative AI Architect, at Amazon Web Services. Carlos specializes in designing and developing scalable prototypes for customers, to solve their most complex business challenges, implementing RAG and Agentic solutions with Distributed Data Processing techniques.

Hin Yee Liu is a Senior Prototyping Engagement Manager at Amazon Web Services. She helps AWS customers to bring their big ideas to life and accelerate the adoption of emerging technologies. Hin Yee works closely with customer stakeholders to identify, shape and deliver impactful use cases leveraging Generative AI, AI/ML, Big Data, and Serverless technologies using agile methodologies. In her free time, she enjoys knitting, travelling and strength training.

Hin Yee Liu is a Senior Prototyping Engagement Manager at Amazon Web Services. She helps AWS customers to bring their big ideas to life and accelerate the adoption of emerging technologies. Hin Yee works closely with customer stakeholders to identify, shape and deliver impactful use cases leveraging Generative AI, AI/ML, Big Data, and Serverless technologies using agile methodologies. In her free time, she enjoys knitting, travelling and strength training.

Olga Miloserdova is an Innovation Lead at Amazon Web Services, where she supports executive leadership teams across industries to drive innovation initiatives leveraging Amazon’s customer-centric Working Backwards methodology.

Olga Miloserdova is an Innovation Lead at Amazon Web Services, where she supports executive leadership teams across industries to drive innovation initiatives leveraging Amazon’s customer-centric Working Backwards methodology.

Ying Hou, PhD is a Senior GenAI Prototyping Architect at AWS, where she collaborates with customers to build cutting-edge GenAI applications, specialising in RAG and agentic solutions. Her expertise spans GenAI, ASR, Computer Vision, NLP, and time series prediction models. When she’s not architecting AI solutions, she enjoys spending quality time with her family, getting lost in novels, and exploring the UK’s national parks.

Ying Hou, PhD is a Senior GenAI Prototyping Architect at AWS, where she collaborates with customers to build cutting-edge GenAI applications, specialising in RAG and agentic solutions. Her expertise spans GenAI, ASR, Computer Vision, NLP, and time series prediction models. When she’s not architecting AI solutions, she enjoys spending quality time with her family, getting lost in novels, and exploring the UK’s national parks.

Read More

Today we’re announcing new creative capabilities in Google Ads to help brands generate lifestyle imagery.

Today we’re announcing new creative capabilities in Google Ads to help brands generate lifestyle imagery.

Today Google is launching an AI co-scientist, a new AI system built on Gemini 2.0 designed to aid scientists in creating novel hypotheses and research plans. Researchers…

Today Google is launching an AI co-scientist, a new AI system built on Gemini 2.0 designed to aid scientists in creating novel hypotheses and research plans. Researchers…

Carlos Contreras is a Senior Big Data and Generative AI Architect, at Amazon Web Services. Carlos specializes in designing and developing scalable prototypes for customers, to solve their most complex business challenges, implementing RAG and Agentic solutions with Distributed Data Processing techniques.

Carlos Contreras is a Senior Big Data and Generative AI Architect, at Amazon Web Services. Carlos specializes in designing and developing scalable prototypes for customers, to solve their most complex business challenges, implementing RAG and Agentic solutions with Distributed Data Processing techniques. Hin Yee Liu is a Senior Prototyping Engagement Manager at Amazon Web Services. She helps AWS customers to bring their big ideas to life and accelerate the adoption of emerging technologies. Hin Yee works closely with customer stakeholders to identify, shape and deliver impactful use cases leveraging Generative AI, AI/ML, Big Data, and Serverless technologies using agile methodologies. In her free time, she enjoys knitting, travelling and strength training.

Hin Yee Liu is a Senior Prototyping Engagement Manager at Amazon Web Services. She helps AWS customers to bring their big ideas to life and accelerate the adoption of emerging technologies. Hin Yee works closely with customer stakeholders to identify, shape and deliver impactful use cases leveraging Generative AI, AI/ML, Big Data, and Serverless technologies using agile methodologies. In her free time, she enjoys knitting, travelling and strength training. Olga Miloserdova is an Innovation Lead at Amazon Web Services, where she supports executive leadership teams across industries to drive innovation initiatives leveraging Amazon’s customer-centric Working Backwards methodology.

Olga Miloserdova is an Innovation Lead at Amazon Web Services, where she supports executive leadership teams across industries to drive innovation initiatives leveraging Amazon’s customer-centric Working Backwards methodology. Ying Hou, PhD is a Senior GenAI Prototyping Architect at AWS, where she collaborates with customers to build cutting-edge GenAI applications, specialising in RAG and agentic solutions. Her expertise spans GenAI, ASR, Computer Vision, NLP, and time series prediction models. When she’s not architecting AI solutions, she enjoys spending quality time with her family, getting lost in novels, and exploring the UK’s national parks.

Ying Hou, PhD is a Senior GenAI Prototyping Architect at AWS, where she collaborates with customers to build cutting-edge GenAI applications, specialising in RAG and agentic solutions. Her expertise spans GenAI, ASR, Computer Vision, NLP, and time series prediction models. When she’s not architecting AI solutions, she enjoys spending quality time with her family, getting lost in novels, and exploring the UK’s national parks.

An overview of our latest expert tools in Flood Hub and our partnerships to support vulnerable communities.

An overview of our latest expert tools in Flood Hub and our partnerships to support vulnerable communities.