[MUSIC FADES]

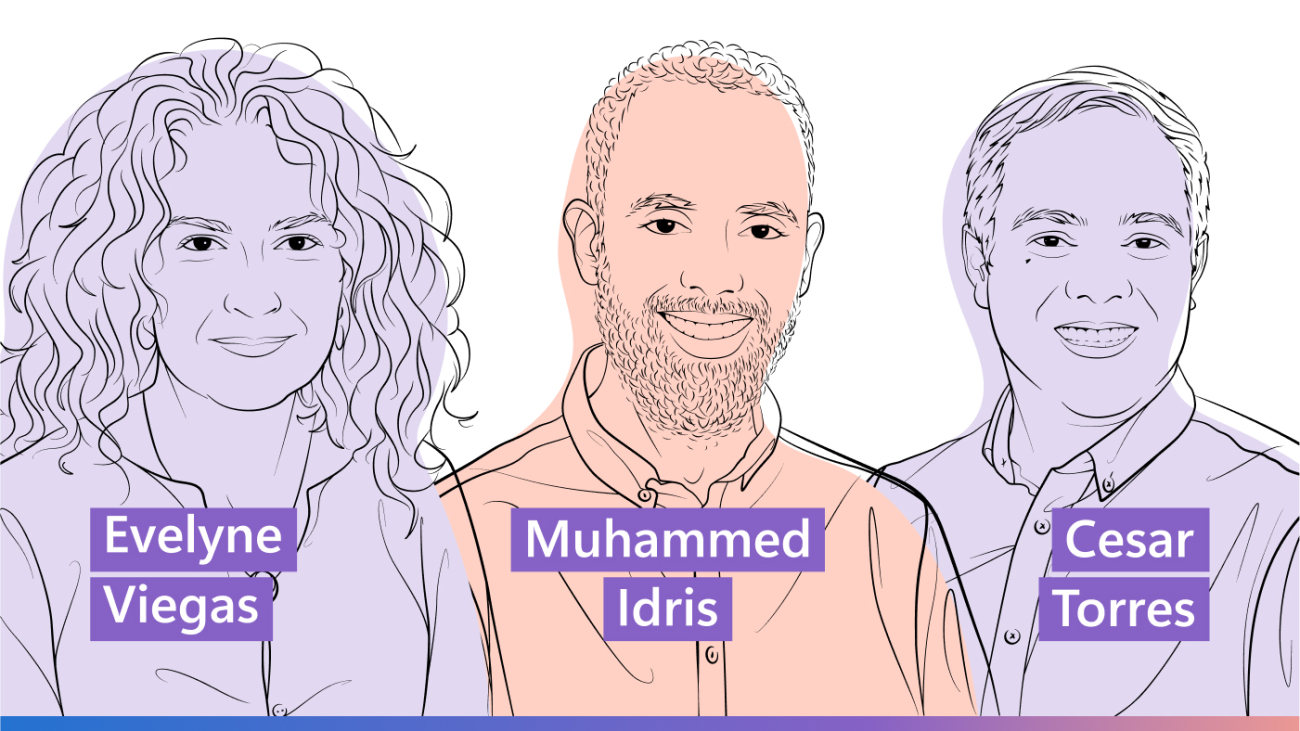

I’m excited to share the mic today with three guests to talk about a really cool program called Accelerating Foundation Models Research, or AFMR for short. With me is Cesar Torres, an assistant professor of computer science at the University of Texas, Arlington, and the director of a program called The Hybrid Atelier. More on that soon. I’m also joined by Muhammed Idris, an assistant professor of medicine at the Morehouse School of Medicine. And finally, I welcome Evelyne Viegas, a technical advisor at Microsoft Research. Cesar, Muhammed, Evelyne, welcome to Ideas!

EVELYNE VIEGAS: Pleasure.

CESAR TORRES: Thank you.

MUHAMMED IDRIS: Thank you.

HUIZINGA: So I like to start these episodes with what I’ve been calling the “research origin story” and since there are three of you, I’d like you each to give us a brief overview of your work. And if there was one, what big idea or larger than life person inspired you to do what you’re doing today? Cesar let’s start with you and then we’ll have Muhammed and Evelyne give their stories as well.

CESAR TORRES: Sure, thanks for having me. So, I work at the frontier of creativity especially thinking about how technology could support or augment the ways that we manipulate our world and our ideas. And I would say that the origin of why I happened into this space can really come back down to a “bring your kid to work” day. [LAUGHTER] My dad, who worked at a maquiladora, which is a factory on the border, took me over – he was an accountant – and so he first showed me the accountants and he’s like look at the amazing work that these folks are doing. But the reality is that a lot of what they do is hidden behind spreadsheets and so it wasn’t necessarily the most engaging. Suffice to say I did not go into accounting like my dad! [LAUGHTER] But then he showed us the chemical engineer in the factory, and he would tell me this chemical engineer holds the secret formula to the most important processes in the entire company. But again, it was this black box, right? And I got a little bit closer when I looked at this process engineer who was melting metal and pulling it out of a furnace making solder and I thought wow, that’s super engaging but at the same time it’s like it was hidden behind machinery and heat and it was just unattainable. And so finally I saw my future career and it was a factory line worker who was opening boxes. And the way that she opened boxes was incredible. Every movement, every like shift of weight was so perfectly coordinated. And I thought, here is the peak of human ability. [LAUGHTER] This was a person who had just like found a way to leverage her surroundings, to leverage her body, the material she was working with. And I thought, this is what I want to study. I want to study how people acquire skills. And I realized … that moment, I realized just how important the environment and visibility was to being able to acquire skills. 
And so from that moment, everything that I’ve done to this point has been trying to develop technologies that could get everybody to develop a skill in the same way that I saw that factory line worker that day.

HUIZINGA: Wow, well, we’ll get to the specifics on what you’re doing now and how that’s relevant in a bit. But thank you for that. So Muhammed, what’s the big idea behind your work and how did you get to where you are today?

MUHAMMED IDRIS: Yeah, no. First off, Cesar, I think it’s a really cool story. I wish I had an origin story [LAUGHTER] from when I was a kid, and I knew exactly what my life’s work was going to be. Actually, my story, I figured out my “why” much later. Actually, my background was in finance. And I started my career in the hedge fund space at a company called BlackRock, really large financial institution you might have heard of. Then I went off and I did a PhD at Penn State. And I fully intended on going back. I was going to basically be working in spreadsheets for the rest of my life. But actually during my postdoc at the time I was living in Montreal, I actually had distant relatives of mine who were coming to Montreal to apply for asylum and it was actually in helping them navigate the process, that it became clear to me, you know, the role, it was very obvious to me, the role that technology can play in helping people help themselves. And kind of the big idea that I realized is that, you know, oftentimes, you know, the world kind of provides a set of conditions, right, that strip away our rights and our dignity and our ability to really fend for ourselves. But it was so amazing to see, you know, 10-, 12-year-old kids who, just because they had a phone, were able to help their families navigate what shelter to go to, how to apply for school, and more importantly, how do they actually start the rest of their lives? And so actually at the time, I, you know, got together a few friends, and, you know, we started to think about, well, you know, all of this information is really sitting on a bulletin board somewhere. How can we digitize it? And so we put together a pretty, I would say, bad-ass team, interdisciplinary team, included developers and refugees, and we built a prototype over a weekend. And essentially what happened was we built this really cool platform called Atar. 
And in many ways, I would say that it was the first real solution that leveraged a lot of the natural language processing capabilities that everyone is using today to actually help people help themselves. And it did that in three really important ways. The first way is that people could essentially ask what they needed help with in natural language. And so we had some algorithms developed that would allow us to identify somebody’s intent. Taking that information then, we had a set of models that would then ask you a set of questions to understand your circumstances and determine your eligibility for resources. And then from that, we’d create a customized checklist for them with everything that they needed to know, where to go, what to bring, and who to talk to in order to accomplish that thing. And it was amazing to see how that very simple prototype that we developed over a weekend really became a lifeline for a lot of people. And so that’s really, I think, what motivated my work in terms of trying to combine data science, emerging technologies like AI and machine learning, with the sort of community-based research that I think is important for us to truly identify applications where, in my world right now, it’s really studying health disparities.
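
[EDITOR'S NOTE: The three steps described above (identify intent from a natural-language request, ask follow-up questions to determine eligibility, then produce a customized checklist) can be pictured with a minimal sketch. This is not the Atar implementation, which the conversation does not detail. The keyword lists, question names, and checklist entries below are all hypothetical placeholders, and simple keyword matching stands in for the trained models the team would have used.]

```python
from typing import Dict, List, Optional

# Hypothetical keyword lists standing in for a trained intent model.
INTENT_KEYWORDS: Dict[str, List[str]] = {
    "shelter": ["shelter", "place to stay", "housing"],
    "school": ["school", "enroll", "class"],
}

# Hypothetical follow-up questions asked once an intent is known.
FOLLOW_UP_QUESTIONS: Dict[str, List[str]] = {
    "shelter": ["has_children"],
    "school": ["child_age"],
}


def detect_intent(message: str) -> Optional[str]:
    """Step 1: map a free-text request to an intent (keyword match only)."""
    text = message.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return None


def build_checklist(intent: str, answers: Dict[str, object]) -> List[str]:
    """Steps 2 and 3: use follow-up answers to tailor a checklist.

    Every entry here is placeholder text, not real guidance.
    """
    if intent == "shelter":
        steps = [
            "Where to go: nearest intake office",
            "What to bring: identification documents",
        ]
        if answers.get("has_children"):
            steps.append("Who to talk to: family services worker")
        else:
            steps.append("Who to talk to: intake worker")
        return steps
    if intent == "school":
        return [
            "Where to go: local school board office",
            "What to bring: proof of the child's age",
            "Who to talk to: enrollment coordinator",
        ]
    return []


if __name__ == "__main__":
    intent = detect_intent("Where can my family find a shelter tonight?")
    if intent is not None:
        print("Questions to ask:", FOLLOW_UP_QUESTIONS[intent])
        for step in build_checklist(intent, {"has_children": True}):
            print("-", step)
```

[A real system would replace the keyword lookup with a language model and draw checklist content from vetted local resources. The sketch only shows how the three stages hand off to one another.]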

HUIZINGA: Yeah. Evelyne, tell us how you got into doing what you’re doing as a technical advisor. What’s the big idea behind what you do and how you got here?

EVELYNE VIEGAS: So as a technical advisor in Microsoft Research, I really look for ideas out there. So ideas can come from anywhere. And so think of it as scanning the horizon to look for some of those ideas out there and then figuring out, are there scientific hypotheses we should be looking at? And so the idea here is, once we have identified some of those ideas, the goal is really to help nurture a healthy pipeline for potential big bets. What I do is really about “subtle science and exact art” and we discover as we do and it involves a lot of discussions and conversations working with our researchers here, our scientists, but of course with the external research community. And how I got here … well first I will say that I am so excited to be alive in a moment where AI has made it to industry because I’ve looked and worked in AI for as long as I can remember with very different approaches. And actually as important, importantly for me is really natural languages which have enabled this big evolution. People sometimes also talk about revolution in AI, via the language models. Because when I started, so I was very fortunate growing up in an environment where my family, my extended family spoke different languages, but then it was interesting to see the different idioms in those natural languages. Just to give you an example, in English you say, it rains cats and dogs. Well, in France, in French it doesn’t mean anything, right? In French, actually, it rains ropes, right? Which probably doesn’t mean anything in English. [LAUGHTER] And so I was really curious about natural languages and communication. When I went to school, being good at math, I ended up doing math, realizing very quickly that I didn’t want to do a career in math. You know, proofs, all that. It’s good in high school, but doing a full career, it was not my thing, math. 
But there was that class I really, really enjoyed, which was mathematical logic. And so little by little, I started discovering people working in that field. And at the same time, I was still restless with natural languages. And so I also took some classes in linguistics at the humanities university in Toulouse in France. And I stumbled on those people who were actually working in … some in linguistics, some in computer science, and then there was this lab doing computational linguistics. And then that was it for me. I was like, that’s, you know, so that’s how I ended up doing my PhD in computational linguistics. And the last aspect I’ll talk about, because in my role today, the aspect of working with a network of people, with a global network, is still so important to me, and I think for science as a whole. At the time, there was this nascent field of computational lexical semantics. And for me, it was so important to bring people together because I realized that we all had different approaches, different theories, not even in France, but across the world, and actually, I worked with somebody else, and we co-edited the first book on computational lexical semantics, where we started exposing what it meant to do lexical semantics and the relationships between words within a larger context of conversations, discourse, and all those different approaches. And that’s an aspect which for me to this day is so important and that was also really important to keep as we develop what we’re going to talk about today, the Accelerating Foundation Models Research program.

HUIZINGA: Yeah, this is fascinating because I didn’t even know all of these stories. I just knew that there were stories here and this is the first time I’m hearing them. So it’s like this discovery process and the sort of pushing on a door and having it be, well, that’s not quite the door I want. [LAUGHTER] Let’s try door number two. Let’s try door number three. Well, let’s get onto the topic of Accelerating Foundation Models Research and unpack the big idea behind that. Evelyne, I want to stay with you on this for a minute because I’m curious as to how this initiative even came to exist and what it hopes to achieve. So, maybe start out with a breakdown of the title. It might be confusing for some people, Accelerating Foundation Models Research. What is it?

VIEGAS: Yeah, thank you for the question. So I think I’m going to skip quickly on accelerate research. I think people can understand it’s just like to bring …

HUIZINGA: Make it faster …

VIEGAS: … well, faster and deeper advances. I mean, there are some nuances there, but I think the terms like foundation models, maybe that’s where I’ll start here. So when we talk about foundation models, just think about any model which has been trained on broad data, and which actually enables you to really do any task. That’s, I think, the simplest way to talk about it. And indeed, actually people talk a lot about large language models or language models. And so think of language models as just one part, right, for those foundation models. The term was actually coined at Stanford when people started looking at GPTs, the generative pre-trained transformers, this new architecture. And so that term was coined like to go not just talk about language models, but foundation models, because actually it’s not just language models, but there are also vision models. And so there are other types of models and modalities really. And so when we started with Accelerating Foundation Models Research and from now on, I will say AFMR if that’s okay.

HUIZINGA: Yeah. Not to be confused with ASMR, which is that sort of tingly feeling you get in your head when you hear a good sound, but AFMR, yes.

VIEGAS: So with the AFMR, so actually I need to come a little bit before that and just remind us that actually that this is not just new. The point I was making earlier about it’s so important to engage with the external research community in academia. So Microsoft Research has been doing it for as long as I’ve been at Microsoft and I’ve been 25 years, I just did 25 in January.

HUIZINGA: Congrats!

VIEGAS: And so, I … thank you! … and so, it’s really important for Microsoft Research, for Microsoft. And so we had some programs even before the GPT, ChatGPT moment where we had engaged with the external research community on a program called the Microsoft Turing Academic Program where we provided access to the Turing model, which was a smaller model than the one then developed by OpenAI. But at that time, it was very clear that we needed to be responsible, to look at safety, to look at trustworthiness of those models. And so we cannot just drink our own Kool-Aid and so we really had to work with people externally. And so we were already doing that. But that was an effort which we couldn’t scale really because to scale an effort and having multiple people that can have access to the resources, you need more of a programmatic way to be able to do that and rely on some platform, like for instance, Azure, which has security and privacy, confidentiality which enables to scale those type of efforts. And so what happens as we’re developing this program on the Turing model with a small set of academic people, then there was this ChatGPT moment in November 2022, which was the moment like the “aha moment,” I think, as I mentioned, for me, it’s like, wow, AI now has made it to industry. And so for us, it became very clear that we could not with this moment and the amount of resources needed on the compute side, access to actually OpenAI that new that GPT, at the beginning of GPT-3 and then 4 and then … So how could we build a program? First, should we, and was there interest? And academia responded “Yes! Please! Of course!” right? [LAUGHTER] I mean, what are you waiting for? So AFMR is really a program which enabled us to provide access to foundation models, but it’s also a global network of researchers. And so for us, I think when we started that program, it was making sure that AI was made available to anyone and not just the few, right? 
And really important to hear from our academic colleagues, what they were discovering and covering and what were those questions that we were not even really thinking about, right? So that’s how we started with AFMR.

HUIZINGA: This is funny, again, on the podcast, you can’t see people shaking their heads, nodding in agreement, [LAUGHTER] but the two academic researchers are going, yep, that’s right. Well, Muhammed, let’s talk to you for a minute. I understand AFMR started a little more than a year ago with a pilot project that revolved around health applications, so this is a prime question for you. And since you’re in medicine, give us a little bit of a “how it started, how it’s going” from your perspective, and why it’s important for you at the Morehouse School of Medicine.

IDRIS: For sure. You know, it’s something as we mentioned that really, I remember vividly is when I saw my first GPT-3 demo, and I was absolutely blown away. This was a little bit before the ChatGPT moment that Evelyne was mentioning, but just the possibilities, oh my God, were so exciting! And again, if I tie that back to the work that we were doing, where we were trying to kind of mimic what ChatGPT is today, there were so many models that we had to build, very complex architectures, edge cases that we didn’t even realize. So you could imagine when I saw that, I said, wow, this is amazing. It’s going to unlock so many possibilities. But at the same time, this demo was coming out, I actually saw a tweet about the inherent biases that were baked into these models. And I’ll never forget this. I think it was at the time he was a grad student at Stanford, and they were able to show that if you asked the model to complete a very simple sentence, a sort of joke, “Two Muslims walk into a bar …” what is it going to finish? And it was scary.

HUIZINGA: Wow.

IDRIS: Two thirds, it was about 66% of the time, the responses referenced some sort of violence, right? And that really was an “aha moment” for me personally, of course, not being that I’m Muslim, but beyond that, that there are all of these possibilities. At the same time, there’s a lot that we don’t know about how these models might operate in the real world. And of course, the first thing that this made me do as a researcher was wonder how do these emerging technologies, how may they unintentionally lead to greater health disparities? Maybe they do. Maybe they don’t. The reality is that we don’t know.

HUIZINGA: Right.

IDRIS: Now I tie that back to something that I’ve been fleshing out for myself, given my time here at Morehouse School of Medicine. And kind of what I believe is that, you know, the likely outcome, and I would say this is the case for really any sort of emerging technology, but let’s specifically talk about AI, machine learning, large language models, is that if we’re not intentional in interrogating how they perform, then what’s likely going to happen is that despite overall improvements in health, we’re going to see greater health disparities, right? It’s almost kind of that trickle-down economics type model, right? And it’s really this addressing of health disparities, which is at the core of the mission of Morehouse School of Medicine. It is literally the reason why I came here a few years ago. Now, the overarching goal of our program, without getting too specific, is really around evaluating the capabilities of foundation models. And those, of course, as Evelyne mentioned, are large language models. And we’re specifically working on facilitating accessible and culturally congruent cancer-related health information. And specifically, we need to understand that communities that are disproportionately impacted have specific challenges around trust. And all of these are kind of obstacles to taking advantage of things like cancer screenings, which we know significantly reduce the likelihood of mortality. And it’s going very well. We have a pretty amazing interdisciplinary team. And I think we’ve been able to develop a pretty cool research agenda, a few papers and a few grants I’d be happy to share about a little bit later.

HUIZINGA: Yeah, that’s awesome. And I will ask you about those because your project is really interesting. But I want Cesar to weigh in here on sort of the goals that are the underpinning of AFMR, which is aligning AI with human values, improving AI-human interaction, and accelerating scientific discovery. Cesar, how do these goals, writ large, align with the work you’re doing at UT Arlington and how has this program helped?

TORRES: Yeah, I love this moment in time that everybody’s been talking about, that GPT or large language model exposure. Definitely when I experienced it, the first thing that came to my head was, I need to get this technology into the hands of my students because it is so nascent, there’s so many open research questions, there’s so many things that can go wrong, but there’s also so much potential, right? And so when I saw this research program by Microsoft I was actually surprised. I saw that, hey, they are actually acknowledging the human element. And so the fact that there was this call for research that was looking at that human dimension was really refreshing. So like what Muhammed was saying, one of the most exciting things about these large language models is you don’t have to be a computer scientist in order to use them. And it reminded me of this moment in time within the arts when digital media started getting produced. And we had this crisis. There was this idea that we would lose all the skills that we have learned from working traditionally with physical materials and having to move into a digital canvas.

HUIZINGA: Right.

TORRES: And it’s kind of this, the birth of a new medium. And we’re kind of at this unique position to guide how this medium is produced and to make sure that people develop that virtuosity in being able to use that medium but also understand its limitations, right? And so one of the fun projects that we’ve done here has been around working with our glass shop. Specifically, we have these amazing neon-bending artists here at UTA, Jeremy Scidmore and Justin Ginsberg. We’ve been doing some collaborations with them, and we’ve been essentially monitoring how they bend glass. I run an undergraduate research program here and I’ve had undergrads try to tackle this problem of how do you transfer that skill of neon bending? And the fact is that because of AFMR, here is just kind of a way to structure that undergraduate research process so that people feel comfortable to ask those dumb questions exactly where they are. But what I think is even more exciting is that they start to see that questions like skill acquisition are still something that our AI is not able to do. And so it’s refreshing to see; it’s like the research problems have not all been solved. It just means that new ones have opened and ones that we previously thought were unattainable now have this groundwork, this foundation in order to be researched, to be investigated. And so it’s really fertile ground. And I really thank AFMR … the AFMR program for letting us have access to those grounds.

HUIZINGA: Yeah. I’m really eager to get into both your projects because they’re both so cool. But Evelyne, I want you to just go on this “access” line of thought for a second because Microsoft has given grants in this program, AFMR, to several Minority Serving Institutions, or MSIs, as they’re called, including Historically Black Colleges and Universities and Hispanic Serving Institutions, so what do these grants involve? You’ve alluded to it already, but can you give us some more specifics on how Microsoft is uniquely positioned to give these and what they’re doing?

VIEGAS: Yes. So the grant program, per se, is really access to resources, actually compute and API access to frontier models. So think about Azure, OpenAI … but also now actually as the program evolves, it’s also providing access to even our research models, so Phi, I mean if you … like smaller models …

HUIZINGA: Yeah, P-H-I.

VIEGAS: Yes, Phi! [LAUGHTER] OK! So, so it’s really about access to those resources. It’s also access to people. I was talking about this global research network and the importance of it. And I’ll come back to that specifically with the Minority Serving Institutions, what we did. But actually when we started, I think we started a bit in a naive way, thinking … we did an open call for proposals, a global one, and we got a great response. But actually at the beginning, we really had no participation from MSIs. [LAUGHTER] And then we thought, why? It’s open … it’s … and I think what we missed there, at the beginning, is like we really focused on the technology and some people who were already a part of the kind of, this global network, started approaching us, but actually a lot of people didn’t even know, didn’t think they could apply, right? And so we ended up doing a more targeted call where we provided not only access to the compute resources, access to the APIs to be able to develop applications or validate or expand the work which is being done with foundation models, but also we acknowledged that it was important, with MSIs, to also enable the students of the researchers like Cesar, Muhammed, and other professors who are part of the program so that they could actually spend the time working on those projects because there are some communities where the teaching load is really high compared to other communities or other colleges. So we already had a good sense that one size doesn’t fit all. And I think what came also with the MSIs and others, it’s like also one culture doesn’t fit all, right? So it’s about access. It’s about access to people, access to the resources and really co-designing so that we can really, really make more advances together.

HUIZINGA: Yeah. Cesar let’s go over to you because big general terms don’t tell a story as well as specific projects with specific people. So your project is called, and I’m going to read this, AI-Enhanced Bricolage: Augmenting Creative Decision Making in Creative Practices. That falls under the big umbrella of Creativity and Design. So tell our audience, and as you do make sure to explain what bricolage is and why you work in a Hybrid Atelier, terms I’m sure are near and dear to Evelyne’s heart … the French language. Talk about that, Cesar.

TORRES: So at UTA, I run a lab called The Hybrid Atelier. And I chose that name because “lab” is almost too siloed into thinking about scientific methods in order to solve problems. And I wanted something that really spoke to the ethos of the different communities of practice that generate knowledge. And so The Hybrid Atelier is a space, it’s a makerspace, and it’s filled with the tools and knowledge that you might find in creative practices like ceramics, glass working, textiles, polymer fabrication, 3D printing. And so every year I throw something new in there. And this last year, what I threw in there was GPT and large language models. And it has been exciting to see how it has transformed. But speaking to this specific project, I think the best way I can describe bricolage is to ask you a question: what would you do if you had a paperclip, duct tape, and a chewing gum wrapper? What could you make with that, right? [LAUGHTER] And so some of us have these MacGyver-type mentalities, and that is what Claude Lévi-Strauss kind of terms as the “bricoleur,” a person who is able to improvise solutions with the materials that they have at hand. But all too often, when we think about bricolage, it’s about the physical world. But the reality is that we very much live in a hybrid reality where we are behind our screens. And that does not mean that we cannot engage in these bricoleur activities. And so this project that I was looking at, it’s both a vice and an opportunity of the human psyche, and it’s known as “functional fixation.” And that is to say, for example, if I were to give you a hammer, you would see everything as a nail. And while this helps kind of constrain creative thought and action to say, okay, if I have this tool, I’m going to use it in this particular way. 
At the same time, it limits the other potential solutions, the ways that you could use a hammer in unexpected ways, whether it’s to weigh something down or like jewelers to texturize a metal piece or, I don’t know, even to use it as a pendulum … But my point here is that this is where large language models can come in because they can, from a more unbiased perspective, not having the cognitive bias of functional fixation say, hey, here is some tool, here’s some material, here’s some machine. Here are all the ways that I know people have used it. Here are other ways that it could be extended. And so we have been exploring, you know, how can we alter the physical and virtual environment in such a way so that this information just percolates into the creative practitioner’s mind in that moment when they’re trying to have that creative thought? And we’ve had some fun with it. I did a workshop at an event known as OurCS here at DFW. It’s a research weekend where we bring a couple of undergrads and expose them to research. And we found that it’s actually the case that it’s not AI that does better, and it’s also not the case that the practitioner does better! [LAUGHTER] It’s when they hybridize that you really kind of lock into the full kind of creative thought that could emerge. And so we’ve been steadily moving this project forward, expanding from our data sets, essentially, to look at the corpus of video tutorials that people have published all around the web to find the weird and quirky ways that they have extended and shaped new techniques and materials to advance creative thought. So …

HUIZINGA: Wow.

TORRES: … it’s been an exciting project to say the least.

HUIZINGA: Okay, again, my face hurts because I’m grinning so hard for so long. I have to stop. No, I don’t because it’s amazing. You made me think of that movie Apollo 13 when they’re stuck up in space and this engineer comes in with a box of, we’ll call it bricolage, throws it down on the table and says, we need to make this fit into this using this, go. And they didn’t have AI models to help them figure it out, but they did a pretty good job. Okay, Cesar, that’s fabulous. I want Muhammed’s story now. I have to also calm down. It’s so much fun. [LAUGHTER]

IDRIS: No, no, I love it. I love it and actually to bring it back to what Evelyne was mentioning earlier about just getting different perspectives in a room, I think this is a perfect example of it. Actually, Cesar, I never thought of myself as being a creative person but as soon as you said a paperclip and was it the gum wrapper …

HUIZINGA: Duct tape.

IDRIS: … duct tape or gum wrapper, I thought to myself, my first internship I was able to figure out how to make two paper clips and a rubber band into a … this was of course before AirPods, right? But something that I could wrap my wires around and it was perfect! [LAUGHTER] I almost started thinking to myself, how could I even scale this, or maybe get a patent on it, but it was a paper clip … yeah. Uh, so, no, no, I mean, this is really exciting stuff, yeah.

HUIZINGA: Well, Muhammed, let me tee you up because I want to actually … I want to say your project out loud …

IDRIS: Please.

HUIZINGA: … because it’s called Advancing Culturally Congruent Cancer Communication with Foundation Models. You might just beat Cesar’s long title with yours. I don’t know. [LAUGHTER] You include alliteration, which as an English major, that makes my heart happy, but it’s positioned under the Cognition and Societal Benefits bucket, whereas Cesar’s was under Creativity and Design, but I see some crossover. Evelyne’s probably grinning too, because this is the whole thing about research is how do these things come together and help? Tell us, Muhammed, about this cultury … culturally … Tell us about your project! [LAUGHTER]

IDRIS: So, you know, I think again, whenever I talk about our work, especially the mission and the “why” of Morehouse School of Medicine, everything really centers around health disparities, right? And if you think about it, health disparities usually come from one of many, but let’s focus on kind of three potential areas. You might not know you need help, right? If you know you need help, you might not know where to go. And if you end up there, you might not get the help that you need. And if you think about it, a lot of like the kind of the through line through all of these, it really comes down to health communication at the end of the day. It’s not just what people are saying, it’s how people are saying it as well. And so our project focuses right now on language and text, right? But we are, as I’ll talk about in a second, really exploring the kind of multimodal nature of communication more broadly and so, you know, I think another thing that’s important in terms of just background context is that for us, these models are more than just tools, right? We really do feel that if we’re intentional about it that they can be important facilitators for public health more broadly. And that’s where this idea of our project fitting under the bucket of benefiting society as a whole comes in. Now, you know, the context is that over the past couple of decades, how we’ve talked about cancer, how we’ve shared health information has just changed dramatically. And a lot of this has to do with the rise, of course, of digital technologies more broadly, social media, and now there’s AI. People have more access to health information than ever before. And despite all of these advancements, of course, as I keep saying over and over again, not everyone’s benefiting equally, especially when it comes to cancer screening. Now, breast and cervical cancer, that’s what we’re focusing on specifically, are two of the leading causes of cancer-related deaths in women worldwide. 
And actually, black and Hispanic women in the US are at particular risk and disproportionately impacted by not just lower screening rates, but later diagnoses, and of course from that, higher mortality rates as well. Now again, an important part of the context here is COVID-19. I think there are, by some estimates, about 10 million cancer screenings that didn’t happen. And this is also happening within a context of just a massive amount of misinformation. It’s actually something that the WHO termed as an infodemic. And so our project is trying to kind of look for creative emerging technologies-based solutions for this. And I think we’re doing it in a few unique ways. Now the first way is that we’re looking at how foundation models like the GPTs but also open-source models and those that are, let’s say, specifically fine-tuned on medical texts, how do they perform in terms of their ability to generate health information? How accurate are they? How well is it written? And whether it’s actually useful for the communities that need it the most. We developed an evaluation framework, and we embedded within that some qualitative dimensions that are important to health communications. And we just wrapped up an analysis where we compared the general-purpose models, like a ChatGPT, with medical and more science-specific domain models and as you’d expect, the general-purpose models kind of produced information that was easier to understand, but that was of course at the risk of safety and more accurate responses that the medically tuned models were able to produce. Now a second aspect of our work, and I think this is really a unique part of not what I’ve called, but actually literally there’s a book called The Morehouse Model, is how is it that we could actually integrate communities into research? And specifically, my work is thinking about how do we integrate communities into the development and evaluation of language models? 
And that’s where we get the term “culturally congruent.” That these models are not just accurate, but they’re also aligned with the values, the beliefs, and even the communication styles of the communities that they’re meant to serve. One of the things that we’re thinking, you know, quite a bit about, right, is that these are not just tools to be published on and maybe put in a GitHub, you know, repo somewhere, right? That these are actually meant to drive the sort of interventions that we need within community. So of course, implementation is really key. And so for this, you know, not only do you need to understand the context within which these models will be deployed, the goal here really is to activate you and prepare you with information to be able to advocate for yourself once you actually see your doctor, right? So that again, I think is a good example of that. But you also have to keep in mind Gretchen that, you know, our goal here is, we don’t want to create greater disparities between those who have and those who don’t, right? And so for example, thinking about accessibility is a big thing and that’s been a part of our project as well. And so for example, we’re leveraging some of Azure API services for speech-to-text and we’re even going as far as trying to leverage some of the text-to-image models to develop visuals that address health literacy barriers and try to leverage these tools to truly, truly benefit health.

HUIZINGA: One of the most delightful and sometimes surprising benefits of programs like AFMR is that the technologies developed in conjunction with people in minority communities have a big impact for people in majority communities as well, often called the Curb Cut Effect. Evelyne, I wonder if you’ve seen any of this happen in the short time that AFMR has been going?

VIEGAS: Yeah, so, I’m going to focus a bit more maybe on education and examples there where we’ve seen, as Cesar was also talking about it, you know for scaling and all that. But we’ve seen a few examples of professors working with their students where English is not the first language.

HUIZINGA: Yeah …

VIEGAS: Another one I would mention is in the context of domains. So for domains, what I mean here is application domains, like not just in CS, but we’ve been working with professors who are, for instance, astronomers, or lawyers, or musicians working in universities. So they started looking actually at these LLMs as more of the “super advisor” helping them. And so it’s another way of looking at it. And actually they started focusing on, can we actually build small astronomy models, right? And I’m thinking, okay, that could … maybe also we learn something which could be potentially applied to some other domain. So these are some of the things we are seeing.

HUIZINGA: Yes.

VIEGAS: But I will finish with something which may, for me, kind of challenges this Curb Cut Effect to a certain extent, if I understand the concept correctly, is that I think, with this technology and the way AI and foundation models work compared to previous technologies, I feel it’s kind of potentially the opposite. It’s kind of like the tail catching up with the head. But here I feel that with the foundation models, I think it’s a different way to find information and gain some knowledge. I think that actually when we look at that, these are really broad tools that now actually can be used to help customize your own curb, as it were! So kind of the other way around.

HUIZINGA: Oh, interesting …

VIEGAS: So I think it’s maybe there are two dimensions. It’s not just I work on something small, and it applies to everyone. I feel there is also a dimension of, this is broad, this is any tasks, and it enables many more people. I think Cesar and Muhammed made that point earlier, is you don’t have to be a CS expert or rocket scientist to start using those tools and make progress in your field. So I think that maybe there is this dimension of it.

HUIZINGA: I love the way you guys are flipping my questions back on me. [LAUGHTER] So, and again, that is fascinating, you know, a custom curb, not a curb cut. Cesar, Muhammed, do you, either of you, have any examples of how perhaps this is being used in your work and you’re having accidental or serendipitous discoveries that sort of have a bigger impact than what you might’ve thought?

TORRES: Well, one thing comes to mind. It’s a project that two PhD students in my lab, Adam Emerson and Shreyosi Endow have been working on. It’s around this idea of communities of practice and that is to say, when we talk about how people develop skills as a group, it’s often through some sort of tiered structure. And I’m making a tree diagram with my hands here! [LAUGHTER] And so we often talk about what it’s like for an outsider to enter from outside of the community, and just how much effort it takes to get through that gate, to go through the different rungs, through the different rites of passage, to finally be a part of the inner circle, so to speak. And one of the projects that we’ve been doing, we started to examine these known communities of practice, where they exist. But in doing this analysis, we realized that there’s a couple of folks out there that exist on the periphery. And by really focusing on them, we could start to see where the field is starting to move. And these are folks that have said, I’m neither in this community or another, I’m going to kind of pave my own way. While we’re still seeing those effects of that research go through, I think being able to monitor the communities at the fringe is a really telling sign of how we’re advancing as a society. I think shining some light into these fringe areas, it’s exactly how research develops, how it’s really just about expanding at some bleeding edge. And I think sometimes we just have to recontextualize that that bleeding edge is sometimes the group of people that we haven’t been necessarily paying attention to.

HUIZINGA: Right. Love it. Muhammed, do you have a quick example … or, I mean, you don’t have to, but I just was curious.

IDRIS: Yeah, maybe I’ll just give one quick example that I think keeps me excited, actually has to do with the idea of kind of small language models, right? And so, you know, I gave the example of GPT-3 and how it’s trained on the entirety of the internet and with that is kind of baked in some unfortunate biases, right? And so we asked ourselves the flip side of that question. Well, how is it that we can go about actually baking in some of the good bias, right? The cultural context that’s important to train these models on. And the reality is that we started off by saying, let’s just have focus groups. Let’s talk to people. But of course that takes time, it takes money, it takes effort. And what we quickly realized actually is there are literally generations of people who have done these focus groups specifically on breast and cervical cancer screening. And so what we actually have since done is leverage that real world data in order to actually start developing synthetic data sets that are …

HUIZINGA: Ahhhh.

IDRIS: … small enough but are of higher quality enough that allow us to address the specific concerns around bias that might not exist. And so for me, that’s a really like awesome thing that we came across that I think in trying to solve a problem for our kind of specific use case, I think this could actually be a method for developing more representative, context-aware, culturally sensitive models and I think overall this contributes to the overall safety and reliability of these large language models and hopefully can create a method for people to be able to do it as well.

HUIZINGA: Yeah. Evelyne, I see why it’s so cool for you to be sitting at Microsoft Research and working with these guys … It’s about now that I pose the “what could possibly go wrong if you got everything right?” question on this podcast. And I’m really interested in how researchers are thinking about the potential downsides and consequences of their work. So, Evelyne, do you have any insights on things that you’ve discovered along the path that might make you take preemptive steps to mitigate?

VIEGAS: Yeah, I think it’s coming back to actually what Muhammed was just talking about, I think Cesar, too, around data, the importance of data and the cultural value and the local value. I think an important piece of continuing to be positive for me [LAUGHTER] is to make sure that we fully understand that at the end of the day, data, which is so important to build those foundation models is, especially language models in particular, are just proxies to human beings. And I feel that it’s uh … we need to remember that it’s a proxy to humans and that we all have some different beliefs, values, goals, preferences. And so how do we take all that into account? And I think that beyond the data safety, provenance, I think there’s an aspect of “data caring.” I don’t know how to say it differently, [LAUGHTER] but it’s kind of in the same way that we care for people, how do we care for the data as a proxy to humans? And I’m thinking of, you know, when we talk about like in, especially in cases where there is no economic value, right? [LAUGHTER] And so, but there is local value for those communities. And I think actually there is cultural value across countries. So just wanted to say that there is also an aspect, I think we need to do more research on, as data as proxies to humans. And as complex humans we are, right?

HUIZINGA: Right. Well, one of the other questions I like to ask on these Ideas episodes is about the idea of “blue sky” or “moonshot” research, kind of outrageous ideas. And sometimes they’re not so much outrageous as they are just living outside the box of traditional research, kind of the “what if” questions that make us excited. So just briefly, is there anything on your horizon, specifically Cesar and Muhammed, that you would say, in light of this program, AFMR, that you’ve had access to things that you think, boy, this now would enable me to ask those bigger questions or that bigger question. I don’t know what it is. Can you share anything on that line?

TORRES: I guess from my end, one of the things that the AFMR program has allowed me to see is this kind of ability to better visualize the terrain of creativity. And it’s a little bit of a double-edged sword because when we talk about disrupting creativity and we think about tools, it’s typically the case that the tool is making something easier for us. But at the same time, if something’s easier, then some other thing is harder. And then we run into this really strange case where if everything is easy, then we are faced with the “blank canvas syndrome,” right? Like what do you even do if everything is just equally weighted with ease? And so my big idea is to actually think about tools that are purposely making us slower …

HUIZINGA: Mmmmm …

TORRES: … that have friction, that have errors, that have failures and really design how those moments can change our attitudes towards how we move around in space. To say that maybe the easiest path is not the most advantageous, but the one that you can feel the most fulfillment or agency towards. And so I really do think that this is hidden in the latent space of the data that we collect. And so we just need to be immersed in that data. We need to traverse it and really it becomes an infrastructure problem. And so the more that we expose people to these foundational models, the more that we’re going to be able to see how we can enable these new ways of walking through and exploring our environment.

HUIZINGA: Yeah. I love this so much because I’ve actually been thinking some of the best experiences in our lives haven’t seemed like the best experiences when we went through them, right? The tough times are what make us grow. And this idea that AI makes everything accessible and easy and frictionless is what you’ve said. I’ve used that term too. I think of the people floating around in that movie WALL-E and all they have to do is pick whether I’m wearing red or blue today and which drink I want. I love this, Cesar. That’s something I hadn’t even expected you might say and boom, out of the park. Muhammed, do you have any sort of outrageous …? That was flipping it back!

IDRIS: I was going to say, yeah, no, I listen, I don’t know how I could top that. But no, I mean, so it’s funny, Cesar, as you were mentioning that I was thinking about grad school, how at the time, it was the most, you know, friction-filled life experience. But in hindsight, I wouldn’t trade it in for the world. For me, you know, one of the things I’m often thinking about in my job is that, you know, what if we lived in a world where everyone had all the information that they needed, access to all the care they need? What would happen then? Would we magically all be the healthiest version of ourselves? I’m a little bit skeptical. I’m not going to lie, right? [LAUGHTER] But that’s something that I’m often thinking about. Now, bringing that back down to our project, one of the things that I find a little bit amusing is that I tend to ping-pong between, this is amazing, the capabilities are just, the possibilities are endless; and then there will be kind of one or two small things where it’s pretty obvious that there’s still a lot of research that needs to be done, right? So my whole, my big “what if” actually, I want to bring that back down to a kind of a technical thing which is, what if AI can truly understand culture, not just language, right? And so right now, right, an AI model can translate a public health message. It’s pretty straightforward from English to Spanish, right? But it doesn’t inherently understand why some Spanish speaking countries may be more hesitant about certain medical interventions. It doesn’t inherently appreciate the historical context that shapes that hesitancy or what kinds of messaging would build trust rather than skepticism, right? So there’s literal like cultural nuances. That to me is what, when I say culturally congruent or cultural context, what it is that I mean. 
And I think for me, I think what programs like AFMR have enabled us to do is really start thinking outside the box as to how will these, or how can these, emerging technologies revolutionize public health? What truly would it take for an LLM to understand context? And really, I think for the first time, we can truly, truly achieve personalized, if you want to use that term, health communication. And so that’s what I would say for me is like, what would that world look like?

HUIZINGA: Yeah, the big animating “what if?” I love this. Go ahead, Evelyne, you had something. Please.

VIEGAS: Can I expand? I cannot talk. I’m going to do like Muhammed, I cannot talk! Like that friction and the cultural aspect, but can I expand? And as I was listening to Cesar on the education, I think I heard you talk about the educational rite of passage at some point, and Muhammed on those cultural nuances. So first, before talking about “what if?” I want to say that there is some work, again, when we talk about AFMR, is the technology is all the brain power of people thinking, having crazy ideas, very creative in the research being done. And there is some research where people are looking at what it means, actually, when you build those language models and how you can take into account different language and different culture or different languages within the same culture or between different cultures speaking the same language, or … So there is very interesting research. And so it made me think, expanding on what Muhammed and Cesar were talking about, so this educational rite of passage, I don’t know if you’re aware, so in Europe in the 17th, 18th century, there was this grand tour of Europe and that was reserved to just some people who had the funds to do that grand tour of Europe, [LAUGHTER] let’s be clear! But it was this educational rite of passage where actually they had to physically go to different countries to actually get familiar and experience, experiment, philosophy and different types of politics, and … So that was kind of this “passage obligé” we say in French. I don’t know if there is a translation in English, but kind of this rite of passage basically. And so I am like, wow, what if actually we could have, thanks to the AI looking at different nuances of cultures, of languages … not just language, but in a multimodal point of viewpoint, what if we could have this “citizen of the world” rite of passage, where we … before we are really citizens of the world, we need to understand other cultures, at least be exposed to them. 
So that would be my “what if?” How do we make AI do that? And so without … and for anyone, right, not just people who can afford it.

HUIZINGA: Well, I don’t even want to close, but we have to. And I’d like each of you to reflect a bit. I think I want to frame this in a way you can sort of pick what you’d like to talk about. But I often have a little bit of vision casting in this section. But there are some specific things I’d like you to talk about. What learnings can you share from your experience with AFMR? Or/and what’s something that strikes you as important now that may not have seemed that way when you started? And you can also, I’m anticipating you people are going to flip that and say, what wasn’t important that is now? And also, how do you see yourself moving forward in light of this experience that you’ve had? So Muhammed, let’s go first with you, then Cesar, and then Evelyne, you can close the show.

IDRIS: Awesome. One of the things that, that I’m often thinking about and one of the concepts I’m often reminded of, given the significance of the work that institutions like a Morehouse School of Medicine and UT Arlington and kind of Minority Serving Institutions, right, it almost feels like there is an onslaught of pushback to addressing some of these more systemic issues that we all struggle with, is what does it mean to strive for excellence, right? So in our tradition there’s a concept called Ihsan. Ihsan … you know there’s a lot of definitions of it but essentially to do more than just the bare minimum to truly strive for excellence and I think it was interesting, having spent time at Microsoft Research in Redmond as part of the AFMR program, meeting other folks who also participated in the program that, that I started to appreciate for myself the importance of this idea of the responsible design, development, and deployment of technologies if we truly are going to achieve the potential benefits. And I think this is one of the things that I could kind of throw out there as something to take away from this podcast, it’s really, don’t just think of what we’re developing as tools, but also think of them as how will they be applied in the real world? And when you’re thinking about the context within which something is going to be deployed, that brings up a lot of interesting constraints, opportunities, and just context that I think is important, again, to not just work on an interesting technology for the sake of an interesting technology, but to truly achieve that benefit for society.

HUIZINGA: Hmm. Cesar.

TORRES: I mean, echoing Muhammed, I think the community is really at the center of how we can move forward. I would say the one element that really struck a chord with me, and something that I very much undervalued, was the power of infrastructure and spending time laying down the proper scaffolds and steppingstones, not just for you to do what you’re trying to do, but to allow others to also find their own path. I was setting up Azure for one of my classes and it took time, it took effort, but the payoff has been incredible in … in so much the impact that I see now of students from my class sharing with their peers. And I think this culture of entrepreneurship really comes from taking ownership of where you’ve been and where you can go. But it really just, it all comes down to infrastructure. And so AFMR for me has been that infrastructure to kind of get my foot out the door and also have the ability to bring some folks along the journey with me, so …

HUIZINGA: Yeah. Evelyne, how blessed are you to be working with people like this? Again, my face hurts from grinning so hard. Bring us home. What are your thoughts on this?

VIEGAS: Yeah, so first of all, I mean, it’s so wonderful just here live, like listening to the feedback from Muhammed and Cesar of what AFMR brings and has the potential to bring. And first, let me acknowledge that to put a program like AFMR, it takes a village. So I’m here, the face here, or well, not the face, the voice rather! [LAUGHTER] But it’s so many people who have, at Microsoft on the engineering side, we’re just talking about infrastructure, Cesar was talking about, you know, the pain and gain of leveraging an industry-grade infrastructure like Azure and Azure AI services. So, also our policy teams, of course, our researchers. But above all, the external research community … so grateful to see. It’s, as you said, I feel super blessed and fortunate to be working on this program and really listening to what we need to do next. How can we together do better? There is one thing for me, I want to end on the community, right? Muhammed talked about this, Cesar too, the human aspect, right? The technology is super important but also understanding the human aspect. And I will say, actually, my “curb cut moment” for me [LAUGHTER] was really working with the MSIs and the cohort, including Muhammed and Cesar, when they came to Redmond, and really understanding some of the needs which were going beyond the infrastructure, beyond you know a small network, how we can put it bigger and deployments ideas too, coming from the community and that’s something which actually we also try to bring to the whole of AFMR moving forward. And I will finish on one note, which for me is really important moving forward. We heard from Muhammed talking about the real importance of interdisciplinarity, right, and let us not work in silos. And so, and I want to see AFMR go more international, internationality if the word exists … [LAUGHTER]

HUIZINGA: It does now!

VIEGAS: It does now! But it’s just making sure that when we have those collaborations, it’s really hard actually, time zones, you know, practically it’s a nightmare! But I think there is definitely an opportunity here for all of us.

HUIZINGA: Well, Cesar Torres, Muhammed Idris, Evelyne Viegas. This has been so fantastic. Thank you so much for coming on the show to share your insights on AFMR today.

[MUSIC PLAYS]

TORRES: It was a pleasure.

IDRIS: Thank you so much.

VIEGAS: Pleasure.
