
Transforming financial analysis with CreditAI on Amazon Bedrock: Octus’s journey with AWS

Investment professionals face the mounting challenge of processing vast amounts of data to make timely, informed decisions. The traditional approach of manually sifting through countless research documents, industry reports, and financial statements is not only time-consuming but can also lead to missed opportunities and incomplete analysis. This challenge is particularly acute in credit markets, where the complexity of information and the need for quick, accurate insights directly impacts investment outcomes. Financial institutions need a solution that can not only aggregate and process large volumes of data but also deliver actionable intelligence in a conversational, user-friendly format. The intersection of AI and financial analysis presents a compelling opportunity to transform how investment professionals access and use credit intelligence, leading to more efficient decision-making processes and better risk management outcomes.

Founded in 2013, Octus, formerly Reorg, is the essential credit intelligence and data provider for the world’s leading buy side firms, investment banks, law firms and advisory firms. By surrounding unparalleled human expertise with proven technology, data and AI tools, Octus unlocks powerful truths that fuel decisive action across financial markets. Visit octus.com to learn how we deliver rigorously verified intelligence at speed and create a complete picture for professionals across the entire credit lifecycle. Follow Octus on LinkedIn and X.

Using advanced GenAI, CreditAI by Octus is a flagship conversational chatbot that supports natural language queries and real-time data access with source attribution, significantly reducing analysis time and streamlining research workflows. It gives instant access to insights on over 10,000 companies from hundreds of thousands of proprietary intel articles, helping financial institutions make informed credit decisions while effectively managing risk. Key features include chat history management, being able to ask questions that are targeted to a specific company or more broadly to a sector, and getting suggestions on follow-up questions.


In this post, we demonstrate how Octus migrated its flagship product, CreditAI, to Amazon Bedrock, transforming how investment professionals access and analyze credit intelligence. We walk through the journey Octus took from managing multiple cloud providers and costly GPU instances to implementing a streamlined, cost-effective solution using AWS services including Amazon Bedrock, AWS Fargate, and Amazon OpenSearch Service. We share detailed insights into the architecture decisions, implementation strategies, security best practices, and key learnings that enabled Octus to maintain zero downtime while significantly improving the application’s performance and scalability.

Opportunities for innovation

CreditAI by Octus version 1.x uses Retrieval Augmented Generation (RAG). It was built using a combination of in-house and external cloud services on Microsoft Azure for large language models (LLMs), Pinecone for vectorized databases, and Amazon Elastic Compute Cloud (Amazon EC2) for embeddings. Based on our operational experience, and as we started scaling up, we realized that there were several operational inefficiencies and opportunities for improvement:


- Our in-house services for embeddings (deployed on EC2 instances) were not as scalable and reliable as needed. They also required more time on operational maintenance than our team could spare.

- The overall solution was incurring high operational costs, especially due to the use of on-demand GPU instances. The real-time nature of our application meant that Spot Instances were not an option. Additionally, our investigation of lower-cost CPU-based instances revealed that they couldn’t meet our latency requirements.

- The use of multiple external cloud providers complicated DevOps, support, and budgeting.

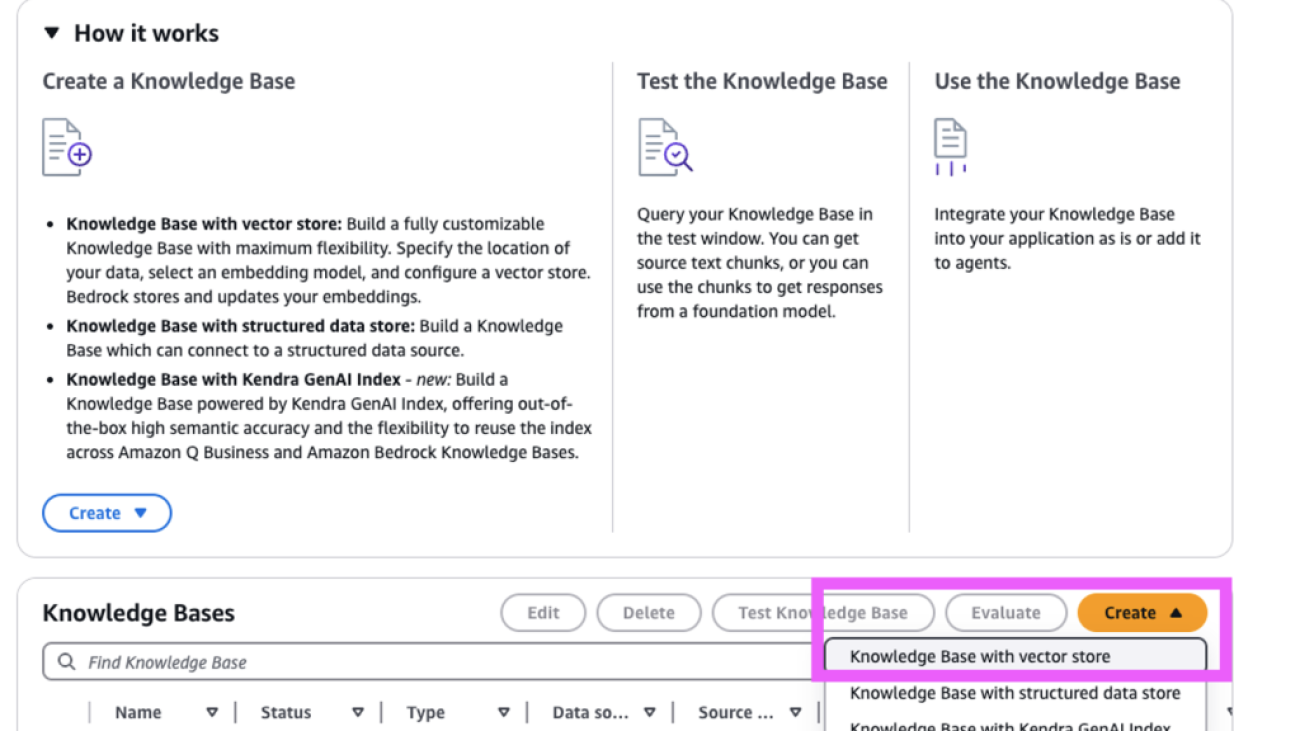

These operational inefficiencies meant that we had to revisit our solution architecture. It became apparent that a cost-effective solution for our generative AI needs was required. Enter Amazon Bedrock Knowledge Bases. With its support for knowledge bases that simplify RAG operations, vectorized search as part of its integration with OpenSearch Service, availability of multi-tenant embeddings, as well as Anthropic’s Claude suite of LLMs, it was a compelling choice for Octus to migrate its solution architecture. Along the way, it also simplified operations as Octus is an AWS shop more generally. However, we were still curious about how we would go about this migration, and whether there would be any downtime through the transition.

Strategic requirements

To help us move forward systematically, Octus identified the following key requirements to guide the migration to Amazon Bedrock:

- Scalability – A crucial requirement was the need to scale operations from handling hundreds of thousands of documents to millions of documents. A significant challenge in the previous system was the slow (and relatively unreliable) process of embedding new documents into vector databases, which created bottlenecks in scaling operations.

- Cost-efficiency and infrastructure optimization – CreditAI 1.x, though performant, was incurring high infrastructure costs due to the use of GPU-based, single-tenant services for embeddings and reranking. We needed multi-tenant alternatives that were much cheaper while enabling elasticity and scale.

- Response performance and latency – The success of generative AI-based applications depends on the response quality and speed. Given our user base, it’s important that our responses are accurate while valuing users’ time (low latency). This is a challenge when the data size and complexity grow. We want to balance spatial and temporal retrieval in order to give responses that have the best answer and context relevance, especially when we get large quantities of data updated every day.

- Zero downtime – CreditAI is in production and we could not afford any downtime during this migration.

- Technological agility and innovation – In the rapidly evolving AI landscape, Octus recognized the importance of maintaining technological competitiveness. We wanted to move away from in-house development and feature maintenance such as embeddings services, rerankers, guardrails, and RAG evaluators. This would allow Octus to focus on product innovation and faster feature deployment.

- Operational consolidation and reliability – Octus’s goal is to consolidate cloud providers, and to reduce support overheads and operational complexity.

Migration to Amazon Bedrock and addressing our requirements

Migrating to Amazon Bedrock addressed our aforementioned requirements in the following ways:

- Scalability – The architecture of Amazon Bedrock, combined with AWS Fargate for Amazon ECS, Amazon Textract, and AWS Lambda, provided the elastic and scalable infrastructure necessary for this expansion while maintaining performance, data integrity, compliance, and security standards. The solution’s efficient document processing and embedding capabilities addressed the previous system’s limitations, enabling faster and more efficient knowledge base updates.

- Cost-efficiency and infrastructure optimization – By migrating to Amazon Bedrock multi-tenant embedding, Octus achieved significant cost reduction while maintaining performance standards through Anthropic’s Claude Sonnet and improved embedding capabilities. This move alleviated the need for GPU-instance-based services in favor of more cost-effective and serverless Amazon ECS and Fargate solutions.

- Response performance and latency – Octus verified the quality and latency of responses from Anthropic’s Claude Sonnet to confirm that response accuracy and latency were maintained (or even improved) as part of this migration. With this LLM, CreditAI can now respond better to broader, industry-wide queries than before.

- Zero downtime – We were able to achieve zero downtime migration to Amazon Bedrock for our application using our in-house centralized infrastructure frameworks. Our frameworks comprise infrastructure as code (IaC) through Terraform, continuous integration and delivery (CI/CD), SOC2 security, monitoring, observability, and alerting for our infrastructure and applications.

- Technological agility and innovation – Amazon Bedrock emerged as an ideal partner, offering solutions specifically designed for AI application development. Amazon Bedrock built-in features, such as embeddings services, reranking, guardrails, and the upcoming RAG evaluator, alleviated the need for in-house development of these components, allowing Octus to focus on product innovation and faster feature deployment.

- Operational consolidation and reliability – The comprehensive suite of AWS services offers a streamlined framework that simplifies operations while providing high availability and reliability. This consolidation minimizes the complexity of managing multiple cloud providers and creates a more cohesive technological ecosystem. It also enables economies of scale with development velocity given that over 75 engineers at Octus already use AWS services for application development.

In addition, the Amazon Bedrock Knowledge Bases team worked closely with us to address several critical elements, including expanding embedding limits, managing the metadata limit (250 characters), testing different chunking methods, and syncing throughput to the knowledge base.

In the following sections, we explore our solution and how we addressed the details around the migration to Amazon Bedrock and Fargate.

Solution overview

The following figure illustrates our system architecture for CreditAI on AWS, with two key paths: the document ingestion and content extraction workflow, and the Q&A workflow for live user query response.

In the following sections, we dive into crucial details within key components in our solution. In each case, we connect them to the requirements discussed earlier for readability.

The document ingestion workflow (numbered in blue in the preceding diagram) processes content through five distinct stages:

- Documents uploaded to Amazon Simple Storage Service (Amazon S3) automatically invoke Lambda functions through S3 Event Notifications. This event-driven architecture provides immediate processing of new documents.

- Lambda functions process the event payload containing document location, perform format validation, and prepare content for extraction. This includes file type verification, size validation, and metadata extraction before routing to Amazon Textract.

- Amazon Textract processes the documents to extract both text and structural information. This service handles various formats, including PDFs, images, and forms, while preserving document layout and relationships between content elements.

- The extracted content is stored in a dedicated S3 prefix, separate from the source documents, maintaining clear data lineage. Each processed document maintains references to its source file, extraction timestamp, and processing metadata.

- The extracted content flows into Amazon Bedrock Knowledge Bases, where our semantic chunking strategy is implemented to divide content into optimal segments. The system then generates embeddings for each chunk and stores these vectors in OpenSearch Service for efficient retrieval. Throughout this process, the system maintains comprehensive metadata to support downstream filtering and source attribution requirements.
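The first two ingestion stages can be sketched in Python as follows. This is a minimal illustration, not the production handler: the allowed extensions, the size limit, and the function names are hypothetical. Only the event layout (`Records[].s3.bucket.name`, `Records[].s3.object.key`, `Records[].s3.object.size`) follows the S3 Event Notifications message format.

```python
import posixpath
from urllib.parse import unquote_plus

# Hypothetical limits for illustration; the post does not state the real ones.
ALLOWED_EXTENSIONS = {".pdf", ".png", ".jpg", ".jpeg", ".tiff"}
MAX_SIZE_BYTES = 50 * 1024 * 1024


def validate_s3_records(event):
    """Split the records of an S3 event notification into accepted and rejected documents."""
    accepted, rejected = [], []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        size = record["s3"]["object"].get("size", 0)
        extension = posixpath.splitext(key)[1].lower()
        doc = {"bucket": bucket, "key": key, "size": size}

        if extension not in ALLOWED_EXTENSIONS:
            rejected.append({**doc, "reason": "unsupported file type"})
        elif size > MAX_SIZE_BYTES:
            rejected.append({**doc, "reason": "file too large"})
        else:
            accepted.append(doc)
    return accepted, rejected


def lambda_handler(event, context):
    """Lambda entry point: validate the event, then report which documents may proceed."""
    accepted, rejected = validate_s3_records(event)
    # In a real pipeline the accepted documents would be routed to Amazon Textract here.
    return {"accepted": accepted, "rejected": rejected}
```

Keeping validation in a pure function separate from the handler makes the rules testable without any AWS resources.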

The Q&A workflow (numbered in yellow in the preceding diagram) processes user interactions through six integrated stages:

- The web application, hosted on AWS Fargate, handles user interactions and query inputs, managing initial request validation before routing queries to appropriate processing services.

- Amazon Managed Streaming for Apache Kafka (Amazon MSK) serves as the streaming service, providing reliable inter-service communication while maintaining message ordering and high-throughput processing for query handling.

- The Q&A handler, running on AWS Fargate, orchestrates the complete query response cycle by coordinating between services and processing responses through the LLM pipeline.

- The pipeline integrates with Amazon Bedrock foundation models through these components:

  - Cohere Embeddings model performs vector transformations of the input.

  - Amazon OpenSearch Service manages vector embeddings and performs similarity searches.

  - Amazon Bedrock Knowledge Bases provides efficient access to the document repository.

- Amazon Bedrock Guardrails implements content filtering and safety checks as part of the query processing pipeline.

- Anthropic Claude LLM performs the natural language processing, generating responses that are then returned to the web application.

This integrated workflow provides efficient query processing while maintaining response quality and system reliability.
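To make the retrieval, guardrail, and generation stages concrete, the sketch below builds the keyword arguments for the `retrieve_and_generate` operation of the `bedrock-agent-runtime` client in boto3. It is an illustration under stated assumptions rather than the CreditAI implementation: the helper name `build_rag_request` and every identifier passed to it are hypothetical placeholders, and the field names reflect the RetrieveAndGenerate API as commonly documented.

```python
def build_rag_request(question, knowledge_base_id, model_arn,
                      guardrail_id, guardrail_version, top_k=5):
    """Build keyword arguments for a Knowledge Bases retrieve-and-generate call."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
                # How many chunks to retrieve from the vector store.
                "retrievalConfiguration": {
                    "vectorSearchConfiguration": {"numberOfResults": top_k}
                },
                # Attach the guardrail to the generation step.
                "generationConfiguration": {
                    "guardrailConfiguration": {
                        "guardrailId": guardrail_id,
                        "guardrailVersion": guardrail_version,
                    }
                },
            },
        },
    }


# Placeholder identifiers; real values come from the AWS account in use.
request = build_rag_request(
    question="Which issuers in the sector have bonds maturing next year?",
    knowledge_base_id="KB_ID_PLACEHOLDER",
    model_arn="MODEL_ARN_PLACEHOLDER",
    guardrail_id="GUARDRAIL_ID_PLACEHOLDER",
    guardrail_version="1",
)
```

With credentials configured, the request would be sent with `boto3.client("bedrock-agent-runtime").retrieve_and_generate(**request)`; the generated answer is returned under `output.text` and the supporting sources under `citations`, which is what enables source attribution.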

For scalability: Using OpenSearch Service as our vector database

Amazon OpenSearch Serverless emerged as the optimal solution for CreditAI’s evolving requirements, offering advanced capabilities while maintaining seamless integration within the AWS ecosystem:

- Vector search capabilities – OpenSearch Serverless provides robust built-in vector search capabilities essential for our needs. The service supports hybrid search, allowing us to combine vector embeddings with raw text search without modifying our embedding model. This capability proved crucial for enabling broader question support in CreditAI 2.x, enhancing its overall usability and flexibility.

- Serverless architecture benefits – The serverless design alleviates the need to provision, configure, or tune infrastructure, significantly reducing operational complexities. This shift allows our team to focus more time and resources on feature development and application improvements rather than managing underlying infrastructure.

- AWS integration advantages – The tight integration with other AWS services, particularly Amazon S3 and Amazon Bedrock, streamlines our content ingestion process. This built-in compatibility provides a cohesive and scalable landscape for future enhancements while maintaining optimal performance.

OpenSearch Serverless enabled us to scale our vector search capabilities efficiently while minimizing operational overhead and maintaining high performance standards.
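The idea behind hybrid search can be illustrated with a toy score-fusion routine. This is a simplified stand-in, not a description of how OpenSearch Serverless computes hybrid scores internally: it min-max normalizes vector-similarity and keyword scores separately and then takes a weighted average. The function names, the weight, and the sample scores are assumptions made for illustration.

```python
def min_max(scores):
    """Rescale a {doc_id: score} mapping to the range [0, 1]."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_rank(vector_scores, keyword_scores, vector_weight=0.5):
    """Rank documents by a weighted mean of normalized vector and keyword scores."""
    v = min_max(vector_scores) if vector_scores else {}
    k = min_max(keyword_scores) if keyword_scores else {}
    combined = {
        doc: vector_weight * v.get(doc, 0.0) + (1 - vector_weight) * k.get(doc, 0.0)
        for doc in set(v) | set(k)
    }
    # Highest combined score first; ties broken by document id for determinism.
    return sorted(combined, key=lambda doc: (-combined[doc], doc))


ranking = hybrid_rank(
    vector_scores={"a": 0.9, "b": 0.5, "c": 0.1},    # e.g. cosine similarities
    keyword_scores={"b": 12.0, "c": 3.0, "d": 9.0},  # e.g. text-match scores
)
```

A document that scores moderately on both signals (here `b`) can outrank one that is strong on only a single signal, which is why hybrid retrieval tends to help with broad queries that mix exact terms and general concepts.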

For scalability and security: Splitting data across multiple vector databases with in-house support for intricate permissions

To enhance scalability and security, we implemented isolated knowledge bases (each corresponding to its own vector database) for each client’s data. Although this approach slightly increases costs, it delivers significant benefits. Primarily, it maintains complete isolation of client data, providing enhanced privacy and security. Thanks to Amazon Bedrock Knowledge Bases, this solution doesn’t compromise on performance. Amazon Bedrock Knowledge Bases enables concurrent embedding and synchronization across multiple knowledge bases, allowing us to maintain real-time updates without delays, something that was unattainable with our earlier GPU-based architecture.

Additionally, we introduced two in-house services within Octus to strengthen this system:

- AuthZ access management service – This service enforces granular access control, making sure users and applications can only interact with the data they are authorized to access. We had to migrate our AuthZ backend from Airbyte to native SQL replication so that it can support access management in near real time at scale.

- Global identifiers service – This service provides a unified framework to link identifiers across multiple domains, enabling seamless integration and cross-referencing of identifiers across multiple datasets.

Together, these enhancements create a robust, secure, and highly efficient environment for managing and accessing client data.
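The combination of per-client knowledge bases and an authorization check can be sketched as a small routing function. Everything here is hypothetical: the mappings, identifiers, and names such as `resolve_knowledge_base` are illustrative and do not describe the internal AuthZ service.

```python
class AccessDenied(Exception):
    """Raised when a user asks for data they are not entitled to."""


# Hypothetical mappings; a real system would load these from the AuthZ backend.
CLIENT_KNOWLEDGE_BASES = {
    "client-a": "KB_A_PLACEHOLDER",
    "client-b": "KB_B_PLACEHOLDER",
}
USER_ENTITLEMENTS = {
    "alice": {"client-a"},
    "bob": {"client-a", "client-b"},
}


def resolve_knowledge_base(user_id, client_id):
    """Return the isolated knowledge base for a client, but only for entitled users."""
    if client_id not in USER_ENTITLEMENTS.get(user_id, set()):
        raise AccessDenied(f"{user_id} may not query data for {client_id}")
    if client_id not in CLIENT_KNOWLEDGE_BASES:
        raise KeyError(f"no knowledge base registered for {client_id}")
    return CLIENT_KNOWLEDGE_BASES[client_id]
```

Because the check runs before any knowledge base identifier is returned, a query can never reach a vector store that the caller is not authorized to read.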

For cost efficiency: Adopting a multi-tenant embedding service

In our migration to Amazon Bedrock Knowledge Bases, Octus made a strategic shift from using an open-source embedding service on EC2 instances to using the managed embedding capabilities of Amazon Bedrock through Cohere’s multilingual model. This transition was carefully evaluated based on several key factors.

Our selection of Cohere’s multilingual model was driven by two primary advantages. First, it demonstrated superior retrieval performance in our comparative testing. Second, it offered robust multilingual support capabilities that were essential for our global operations.

The technical benefits of this migration manifested in two distinct areas: document embedding and message embedding. In document embedding, we transitioned from a CPU-based system to Amazon Bedrock Knowledge Bases, which enabled faster and higher throughput document processing through its multi-tenant architecture. For message embedding, we alleviated our dependency on dedicated GPU instances while maintaining optimal performance with 20–30 millisecond embedding times. The Amazon Bedrock Knowledge Bases API also simplified our operations by combining embedding and retrieval functionality into a single API call.

The migration to Amazon Bedrock Knowledge Bases managed embedding delivered two significant advantages: it eliminated the operational overhead of maintaining our own open-source solution while providing access to industry-leading embedding capabilities through Cohere’s model. This helped us achieve both our cost-efficiency and performance objectives without compromises.

For cost-efficiency and response performance: Choice of chunking strategy

Our primary goal was to improve three critical aspects of CreditAI’s responses: quality (accuracy of information), groundedness (ability to trace responses back to source documents), and relevance (providing information that directly answers user queries). To achieve this, we tested three different approaches to breaking down documents into smaller pieces (chunks):

- Fixed chunking – Breaking text into fixed-length pieces

- Semantic chunking – Breaking text based on natural semantic boundaries like paragraphs, sections, or complete thoughts

- Hierarchical chunking – Creating a two-level structure with smaller child chunks for precise matching and larger parent chunks for contextual understanding

Our testing showed that both semantic and hierarchical chunking performed significantly better than fixed chunking in retrieving relevant information. However, each approach came with its own technical considerations.

Hierarchical chunking requires a larger chunk size to maintain comprehensive context during retrieval. This approach creates a two-level structure: smaller child chunks for precise matching and larger parent chunks for contextual understanding. During retrieval, the system first identifies relevant child chunks and then automatically includes their parent chunks to provide broader context. Although this method optimizes both search precision and context preservation, we couldn’t implement it with our preferred Cohere embeddings because they only support chunks up to 512 tokens, which is insufficient for the parent chunks needed to maintain effective hierarchical relationships.

Semantic chunking uses LLMs to intelligently divide text by analyzing both semantic similarity and natural language structures. Instead of arbitrary splits, the system identifies logical break points by calculating embedding-based similarity scores between sentences and paragraphs, making sure semantically related content stays together. The resulting chunks maintain context integrity by considering both linguistic features (like sentence and paragraph boundaries) and semantic coherence, though this precision comes at the cost of additional computational resources for LLM analysis and embedding calculations.
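The similarity-based break-point idea can be shown with a toy example. This sketch is not the algorithm used by Amazon Bedrock Knowledge Bases: it swaps the embedding model for a simple bag-of-words vector and starts a new chunk whenever the cosine similarity between adjacent sentences falls below a threshold. The function names and the threshold value are assumptions.

```python
import math
import re
from collections import Counter


def embed(sentence):
    """Stand-in embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", sentence.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(sentences, threshold=0.2):
    """Group consecutive sentences, breaking where adjacent similarity drops below threshold."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for previous, current in zip(sentences, sentences[1:]):
        if cosine(embed(previous), embed(current)) < threshold:
            chunks.append([current])    # topic shift: start a new chunk
        else:
            chunks[-1].append(current)  # same topic: extend the current chunk
    return [" ".join(chunk) for chunk in chunks]


chunks = semantic_chunks([
    "The bond matures in 2030.",
    "The bond pays a 5 percent coupon.",
    "Lenders met on Tuesday.",
    "Lenders agreed to new terms on Tuesday.",
])
```

The two bond sentences share vocabulary and stay together, while the shift to the lender meeting shares no words with the preceding sentence and triggers a break. A production system would use real embeddings, so related sentences with different wording would also stay together.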

After evaluating our options, we chose semantic chunking despite two trade-offs:

- It requires additional processing by our LLMs, which increases costs

- It has a limit of 1,000,000 tokens per document processing batch

We made this choice because semantic chunking offered the best balance between implementation simplicity and retrieval performance. Although hierarchical chunking showed promise, it would have been more complex to implement and harder to scale. This decision helped us maintain high-quality, grounded, and relevant responses while keeping our system manageable and efficient.
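The three strategies map onto the chunking configuration of a knowledge base data source. The sketch below builds such a configuration and checks it against the 512-token embedding limit mentioned earlier. The field names follow the `chunkingConfiguration` structure of the Bedrock `CreateDataSource` API as commonly documented; the helper names and all numeric values (300, 1,500, 20, 60, 95) are illustrative assumptions, not the settings used for CreditAI.

```python
EMBEDDING_TOKEN_LIMIT = 512  # limit cited above for the Cohere embeddings


def chunking_configuration(strategy, max_tokens=300, parent_max_tokens=1500):
    """Build a chunkingConfiguration dict for one of the three strategies."""
    if strategy == "FIXED_SIZE":
        return {
            "chunkingStrategy": "FIXED_SIZE",
            "fixedSizeChunkingConfiguration": {
                "maxTokens": max_tokens,
                "overlapPercentage": 20,
            },
        }
    if strategy == "SEMANTIC":
        return {
            "chunkingStrategy": "SEMANTIC",
            "semanticChunkingConfiguration": {
                "maxTokens": max_tokens,
                "bufferSize": 0,
                "breakpointPercentileThreshold": 95,
            },
        }
    if strategy == "HIERARCHICAL":
        return {
            "chunkingStrategy": "HIERARCHICAL",
            "hierarchicalChunkingConfiguration": {
                # Parent level first, then child level.
                "levelConfigurations": [
                    {"maxTokens": parent_max_tokens},
                    {"maxTokens": max_tokens},
                ],
                "overlapTokens": 60,
            },
        }
    raise ValueError(f"unknown chunking strategy: {strategy}")


def largest_chunk_tokens(config):
    """Return the largest chunk size, in tokens, that a configuration can produce."""
    if config["chunkingStrategy"] == "HIERARCHICAL":
        levels = config["hierarchicalChunkingConfiguration"]["levelConfigurations"]
        return max(level["maxTokens"] for level in levels)
    key = {
        "FIXED_SIZE": "fixedSizeChunkingConfiguration",
        "SEMANTIC": "semanticChunkingConfiguration",
    }[config["chunkingStrategy"]]
    return config[key]["maxTokens"]


def fits_embedding_limit(config, limit=EMBEDDING_TOKEN_LIMIT):
    """True if every chunk the configuration can produce fits the embedding model."""
    return largest_chunk_tokens(config) <= limit
```

With these illustrative values, the semantic and fixed-size configurations fit within 512 tokens, whereas a hierarchical configuration with 1,500-token parent chunks does not, which mirrors the constraint that ruled out hierarchical chunking with the Cohere embeddings.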

For response performance and technical agility: Adopting Amazon Bedrock Guardrails with Amazon Bedrock Knowledge Bases

Our implementation of Amazon Bedrock Guardrails focused on three key objectives: enhancing response security, optimizing performance, and simplifying guardrail management. This service plays a crucial role in making sure our responses are both safe and efficient.

Amazon Bedrock Guardrails provides a comprehensive framework for content filtering and response moderation. The system works by evaluating content against predefined rules before the LLM processes it, helping prevent inappropriate content and maintaining response quality. Through the Amazon Bedrock Guardrails integration with Amazon Bedrock Knowledge Bases, we can configure, test, and iterate on our guardrails without writing complex code.

We achieved significant technical improvements in three areas:

- Simplified moderation framework – Instead of managing multiple separate denied topics, we consolidated our content filtering into a unified guardrail service. This approach allows us to maintain a single source of truth for content moderation rules, with support for customizable sample phrases that help fine-tune our filtering accuracy.

- Performance optimization – We improved system performance by integrating guardrail checks directly into our main prompts, rather than running them as separate operations. This optimization reduced our token usage and minimized unnecessary API calls, resulting in lower latency for each query.

- Enhanced content control – The service provides configurable thresholds for filtering potentially harmful content and includes built-in capabilities for detecting hallucinations and assessing response relevance. This alleviated our dependency on external services like TruLens while maintaining robust content quality controls.

These improvements have helped us maintain high response quality while reducing both operational complexity and processing overhead. The integration with Amazon Bedrock has given us a more streamlined and efficient approach to content moderation.

To achieve zero downtime: Infrastructure migration

Our migration to Amazon Bedrock required careful planning to provide uninterrupted service for CreditAI while significantly reducing infrastructure costs. We achieved this through our comprehensive infrastructure framework that addresses deployment, security, and monitoring needs:

- IaC implementation – We used reusable Terraform modules to manage our infrastructure consistently across environments. These modules enabled us to share configurations efficiently between services and projects. Our approach supports multi-Region deployments with minimal configuration changes while maintaining infrastructure version control alongside application code.

- Automated deployment strategy – Our GitOps-embedded framework streamlines the deployment process by implementing a clear branching strategy for different environments. This automation handles CreditAI component deployments through CI/CD pipelines, reducing human error through automated validation and testing. The system also enables rapid rollback capabilities if needed.

- Security and compliance – To maintain SOC2 compliance and robust security, our framework incorporates comprehensive access management controls and data encryption at rest and in transit. We follow network security best practices, conduct regular security audits and monitoring, and run automated compliance checks in the deployment pipeline.

We maintained zero downtime during the entire migration process while reducing infrastructure costs by 70% by eliminating GPU instances. The successful transition from Amazon ECS on Amazon EC2 to Amazon ECS with Fargate has simplified our infrastructure management and monitoring.

Achieving excellence

CreditAI’s migration to Amazon Bedrock has yielded remarkable results for Octus:

- Scalability – We have almost doubled the number of documents available for Q&A across three environments in days instead of weeks. Our use of Amazon ECS with Fargate with auto scaling rules and controls gives us elastic scalability for our services during peak usage hours.

- Cost-efficiency and infrastructure optimization – By moving away from GPU-based clusters to Fargate, our monthly infrastructure costs are now 78.47% lower, and our per-question costs have reduced by 87.6%.

- Response performance and latency – Latency has not degraded, and we have seen a 27% increase in questions answered successfully. We have also seen a 250% boost in user engagement. Users especially love our support for broad, industry-wide questions enabled by Anthropic’s Claude Sonnet.

- Zero downtime – We experienced zero downtime during migration and 99% uptime overall for the whole application.

- Technological agility and innovation – We have been able to add new document sources in a quarter of the time it took pre-migration. In addition, we adopted enhanced guardrails support for free and no longer have to retrieve documents from the knowledge base and pass the chunks to Anthropic’s Claude Sonnet to trigger a guardrail.

- Operational consolidation and reliability – Post-migration, our DevOps and SRE teams see 20% less maintenance burden and overheads. Supporting SOC2 compliance is also straightforward now that we’re using only one cloud provider.

Operational monitoring

We use Datadog to monitor both LLM latency and our document ingestion pipeline, providing real-time visibility into system performance. The following screenshot showcases how we use custom Datadog dashboards to provide a live view of the document ingestion pipeline. This visualization offers both a high-level overview and detailed insights into the ingestion process, helping us understand the volume, format, and status of the documents processed. The bottom half of the dashboard presents a time-series view of document processing volumes. The timeline tracks fluctuations in processing rates, identifies peak activity periods, and provides actionable insights to optimize throughput. This detailed monitoring system enables us to maintain efficiency, minimize failures, and provide scalability.

Roadmap

Looking ahead, Octus plans to continue enhancing CreditAI by taking advantage of new capabilities released by Amazon Bedrock that continue to meet and exceed our requirements. Future developments will include:

- Enhance retrieval by testing and integrating with reranking techniques, allowing the system to prioritize the most relevant search results for better user experience and accuracy.

- Explore the Amazon Bedrock RAG evaluator to capture detailed metrics on CreditAI’s performance. This will add to the existing mechanisms at Octus to track performance that include tracking unanswered questions.

- Expand to ingest large-scale structured data, making it capable of handling complex financial datasets. The integration of text-to-SQL will enable users to query structured databases using natural language, simplifying data access.

- Explore replacing our in-house content extraction service (ADE) with the Amazon Bedrock advanced parsing solution to potentially further reduce document ingestion costs.

- Improve CreditAI’s disaster recovery and redundancy mechanisms, making sure that our services and infrastructure are more fault tolerant and can recover from outages faster.

These upgrades aim to boost the precision, reliability, and scalability of CreditAI.

Vishal Saxena, CTO at Octus, shares: “CreditAI is a first-of-its-kind generative AI application that focuses on the entire credit lifecycle. It is truly ’AI embedded’ software that combines cutting-edge AI technologies with an enterprise data architecture and a unified cloud strategy.”

Conclusion

CreditAI by Octus is the company’s flagship conversational chatbot that supports natural language queries and gives instant access to insights on over 10,000 companies from hundreds of thousands of proprietary intel articles. In this post, we described in detail our motivation, process, and results on Octus’s migration to Amazon Bedrock. Through this migration, Octus achieved remarkable results that included an over 75% reduction in operating costs as well as a 250% boost in engagement. Future steps include adopting new features such as reranking, RAG evaluator, and advanced parsing to further reduce costs and improve performance. We believe that the collaboration between Octus and AWS will continue to revolutionize financial analysis and research workflows.

To learn more about Amazon Bedrock, refer to the Amazon Bedrock User Guide.

About the Authors

Vaibhav Sabharwal is a Senior Solutions Architect with Amazon Web Services based out of New York. He is passionate about learning new cloud technologies and assisting customers in building cloud adoption strategies, designing innovative solutions, and driving operational excellence. As a member of the Financial Services Technical Field Community at AWS, he actively contributes to the collaborative efforts within the industry.


Yihnew Eshetu is a Senior Director of AI Engineering at Octus, leading the development of AI solutions at scale to address complex business problems. With seven years of experience in AI/ML, his expertise spans GenAI and NLP, specializing in designing and deploying agentic AI systems. He has played a key role in Octus’s AI initiatives, including leading AI Engineering for its flagship GenAI chatbot, CreditAI.

Harmandeep Sethi is a Senior Director of SRE Engineering and Infrastructure Frameworks at Octus, with nearly 10 years of experience leading high-performing teams in the design, implementation, and optimization of large-scale, highly available, and reliable systems. He has played a pivotal role in transforming and modernizing CreditAI infrastructure and services by driving best practices in observability, resilience engineering, and the automation of operational processes through Infrastructure Frameworks.

Rohan Acharya is an AI Engineer at Octus, specializing in building and optimizing AI-driven solutions at scale. With expertise in GenAI and NLP, he focuses on designing and deploying intelligent systems that enhance automation and decision-making. His work involves developing robust AI architectures and advancing Octus’s AI initiatives, including the evolution of CreditAI.

Hasan Hasibul is a Principal Architect at Octus leading the DevOps team, with nearly 12 years of experience in building scalable, complex architectures while following software development best practices. A true advocate of clean code, he thrives on solving complex problems and automating infrastructure. Passionate about DevOps, infrastructure automation, and the latest advancements in AI, he architected Octus’s initial CreditAI, pushing the boundaries of innovation.

Philipe Gutemberg is a Principal Software Engineer and AI Application Development Team Lead at Octus, passionate about leveraging technology for impactful solutions. An AWS Certified Solutions Architect – Associate (SAA), he has expertise in software architecture, cloud computing, and leadership. Philipe led both backend and frontend application development for CreditAI, ensuring a scalable system that integrates AI-driven insights into financial applications. A problem-solver at heart, he thrives in fast-paced environments, delivering innovative solutions for financial institutions while fostering mentorship, team development, and continuous learning.

Kishore Iyer is the VP of AI Application Development and Engineering at Octus. He leads teams that build, maintain and support Octus’s customer-facing GenAI applications, including CreditAI, our flagship AI offering. Prior to Octus, Kishore has 15+ years of experience in engineering leadership roles across large corporations, startups, research labs, and academia. He holds a Ph.D. in computer engineering from Rutgers University.

Kshitiz Agarwal is an Engineering Leader at Amazon Web Services (AWS), where he leads the development of Amazon Bedrock Knowledge Bases. With a decade of experience at Amazon, having joined in 2012, Kshitiz has gained deep insights into the cloud computing landscape. His passion lies in engaging with customers and understanding the innovative ways they leverage AWS to drive their business success. Through his work, Kshitiz aims to contribute to the continuous improvement of AWS services, enabling customers to unlock the full potential of the cloud.

Sandeep Singh is a Senior Generative AI Data Scientist at Amazon Web Services, helping businesses innovate with generative AI. He specializes in generative AI, machine learning, and system design. He has successfully delivered state-of-the-art AI/ML-powered solutions to solve complex business problems for diverse industries, optimizing efficiency and scalability.

Tim Ramos is a Senior Account Manager at AWS. He has 12 years of sales experience and 10 years of experience in cloud services, IT infrastructure, and SaaS. Tim is dedicated to helping customers develop and implement digital innovation strategies. His focus areas include business transformation, financial and operational optimization, and security. Tim holds a BA from Gonzaga University and is based in New York City.

Optimize reasoning models like DeepSeek with prompt optimization on Amazon Bedrock

DeepSeek-R1 models, now available on Amazon Bedrock Marketplace and Amazon SageMaker JumpStart, as well as a serverless model on Amazon Bedrock, were recently popularized by their long and elaborate thinking style, which, according to DeepSeek’s published results, leads to impressive performance on highly challenging math benchmarks like AIME-2024 and MATH-500, as well as competitive performance compared to then-state-of-the-art models like Anthropic’s Claude 3.5 Sonnet, GPT-4o, and OpenAI o1 (more details in this paper).

During training, researchers showed how DeepSeek-R1-Zero naturally learns to solve tasks with more thinking time, which leads to a boost in performance. However, what often gets ignored is the number of thinking tokens required at inference time, and the time and cost of generating these tokens before answering the original question.

In this post, we demonstrate how to optimize reasoning models like DeepSeek-R1 using prompt optimization on Amazon Bedrock.

Long reasoning chains and challenges with maximum token limits

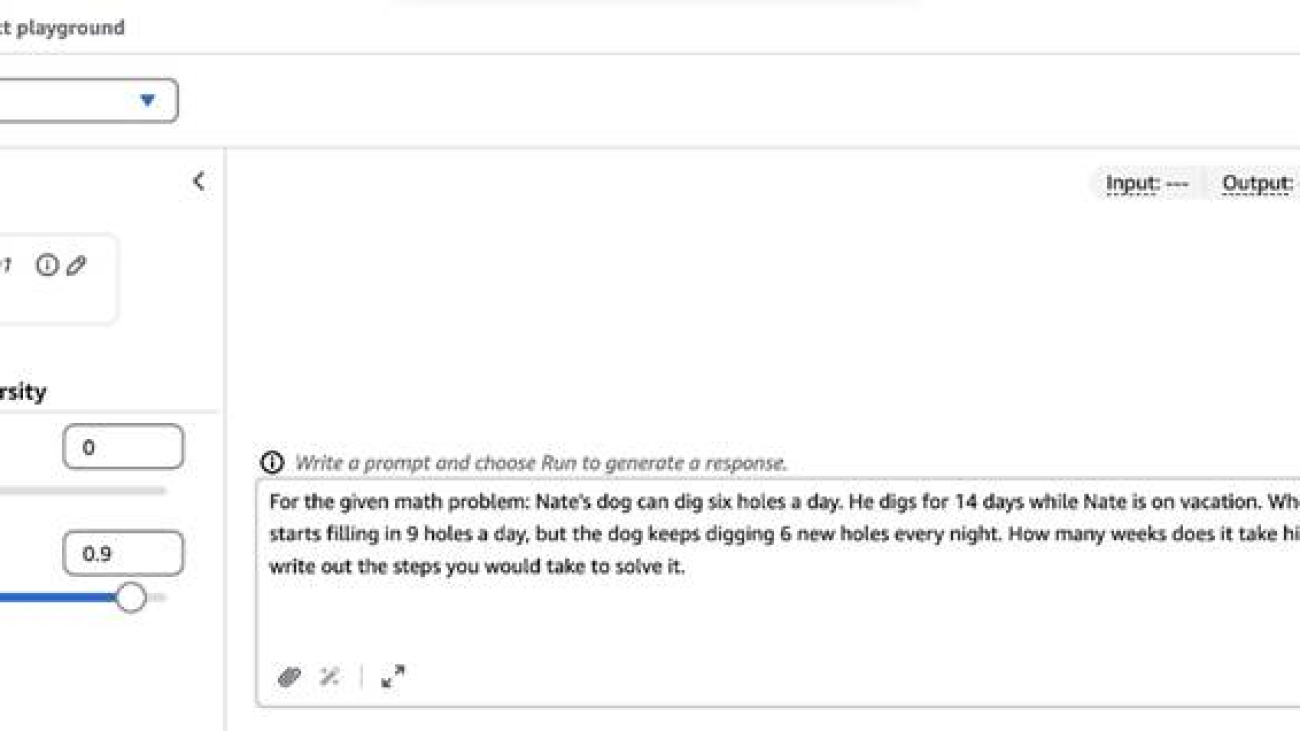

Let’s try out a straightforward question on DeepSeek-R1:

For the given math problem: Nate’s dog can dig six holes a day. He digs for 14 days while Nate is on vacation. When Nate gets home, he starts filling in 9 holes a day, but the dog keeps digging 6 new holes every night. How many weeks does it take him to fill in all the holes?, write out the steps you would take to solve it.

On the Amazon Bedrock Chat/Text Playground, you can follow along by choosing the new DeepSeek-R1 model, as shown in the following screenshot.

You might see that sometimes, based on the question, reasoning models don’t finish thinking within the overall maximum token budget.

Increasing the output token budget allows the model to think for longer. With the maximum tokens increased from 2,048 to 4,096, you should see the model reasoning for a while before printing the final answer.
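The same budget experiment can be sketched in code. The following is a minimal sketch, assuming the Amazon Bedrock Converse API called through boto3. The model ID `us.deepseek.r1-v1:0`, the helper name `ask_with_budgets`, and the default budgets are illustrative assumptions and not part of the original walkthrough. The helper retries with a larger `maxTokens` value whenever the response stops because the budget ran out.

```python
from typing import Any, Iterable

# Assumed inference profile ID for DeepSeek-R1; confirm the ID available in your Region.
MODEL_ID = "us.deepseek.r1-v1:0"


def ask_with_budgets(client: Any, prompt: str,
                     budgets: Iterable[int] = (2048, 4096)) -> dict:
    """Call Converse with increasing maxTokens until the model stops on its own."""
    result: dict = {}
    for max_tokens in budgets:
        response = client.converse(
            modelId=MODEL_ID,
            messages=[{"role": "user", "content": [{"text": prompt}]}],
            inferenceConfig={"maxTokens": max_tokens},
        )
        blocks = response["output"]["message"]["content"]
        # Keep only final-answer text blocks; reasoning arrives in separate blocks.
        answer = "".join(block["text"] for block in blocks if "text" in block)
        result = {
            "max_tokens": max_tokens,
            "stop_reason": response["stopReason"],
            "output_tokens": response["usage"]["outputTokens"],
            "answer": answer,
        }
        if response["stopReason"] != "max_tokens":
            break  # the model finished within this budget
    return result


# Usage (requires boto3, AWS credentials, and access to the model):
#   import boto3
#   runtime = boto3.client("bedrock-runtime")
#   print(ask_with_budgets(runtime, "How many weeks does it take Nate to fill the holes?"))
```

Note that every truncated attempt still consumes output tokens, so in practice it is usually cheaper to pick a realistic budget up front than to retry.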

The appendix at the end of this post provides the complete response. You can also collapse the reasoning steps to view just the final answer.

As we can see in the case with the 2,048-token budget, the thinking process didn’t end. This not only cost us 2,048 tokens’ worth of time and money, but we also didn’t get the final answer! This observation of high token counts for thinking usually leads to a few follow-up questions, such as:

- Is it possible to reduce the thinking tokens and still get a correct answer?

- Can the thinking be restricted to a maximum number of thinking tokens, or a thinking budget?

- At a high level, should thinking-intensive models like DeepSeek be used in real-time applications at all?

In this post, we show you how you can optimize thinking models like DeepSeek-R1 using prompt optimization on Amazon Bedrock, resulting in more succinct thinking traces without sacrificing accuracy.

Optimize DeepSeek-R1 prompts

To get started with prompt optimization, select DeepSeek-R1 on the model playground on Amazon Bedrock, enter your prompt, and choose the magic wand icon, or use the Amazon Bedrock optimize_prompt() API. You can also use prompt optimization on the console: add variables if required, set your model to DeepSeek-R1, set the model parameters, and choose Optimize.
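For the API route, the following is a minimal sketch, assuming the `optimize_prompt` operation of the boto3 `bedrock-agent-runtime` client and its streamed response. The helper name `optimize`, the target model ID, and the fallback to the original prompt are our assumptions; check the response shape against the current API reference before relying on it.

```python
from typing import Any

# Assumed model ID for DeepSeek-R1; confirm which target models are supported.
TARGET_MODEL_ID = "us.deepseek.r1-v1:0"


def optimize(client: Any, prompt: str, target_model_id: str = TARGET_MODEL_ID) -> str:
    """Return the optimized prompt text, or the original prompt if none is streamed."""
    response = client.optimize_prompt(
        input={"textPrompt": {"text": prompt}},
        targetModelId=target_model_id,
    )
    optimized = prompt
    # The response is an event stream; analysis events arrive alongside the rewritten prompt.
    for event in response["optimizedPrompt"]:
        if "optimizedPromptEvent" in event:
            payload = event["optimizedPromptEvent"]["optimizedPrompt"]
            optimized = payload["textPrompt"]["text"]
    return optimized


# Usage (requires boto3, AWS credentials, and access to the model):
#   import boto3
#   client = boto3.client("bedrock-agent-runtime")
#   print(optimize(client, "Write out the steps you would take to solve this problem."))
```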

To demonstrate how prompt optimization on Amazon Bedrock can help with reasoning models, we first need a challenging dataset. Humanity’s Last Exam (HLE), a benchmark of extremely challenging questions from dozens of subject areas, is designed to be the “final” closed-ended benchmark of broad academic capabilities. HLE is multi-modal, featuring questions that are either text-only or accompanied by an image reference, and includes both multiple-choice and exact-match questions for automated answer verification. The questions require deep domain knowledge in various verticals; they are unambiguous and resistant to simple internet lookups or database retrieval. For context, several state-of-the-art models (including thinking models) perform poorly on the benchmark (see the results table in this full paper).

Let’s look at an example question from this dataset:

In an alternate universe where the mass of the electron was 1% heavier and the charges of the electron and proton were both 1% smaller, but all other fundamental constants stayed the same, approximately how would the speed of sound in diamond change? Answer Choices: A. Decrease by 2% B. Decrease by 1.5% C. Decrease by 1% D. Decrease by 0.5% E. Stay approximately the same F. Increase by 0.5% G. Increase by 1% H. Increase by 1.5% I. Increase by 2%

The question requires a deep understanding of physics, which most large language models (LLMs) today will fail at. Our goal with prompt optimization on Amazon Bedrock for reasoning models is to reduce the number of thinking tokens but not sacrifice accuracy. After using prompt optimization, the optimized prompt is as follows:

## Question <extracted_question_1>In an alternate universe where the mass of the electron was 1% heavier and the charges of the electron and proton were both 1% smaller, but all other fundamental constants stayed the same, approximately how would the speed of sound in diamond change? Answer Choices: A. Decrease by 2% B. Decrease by 1.5% C. Decrease by 1% D. Decrease by 0.5% E. Stay approximately the same F. Increase by 0.5% G. Increase by 1% H. Increase by 1.5% I. Increase by 2%</extracted_question_1> ## Instruction Read the question above carefully and provide the most accurate answer possible. If multiple choice options are provided within the question, respond with the entire text of the correct answer option, not just the letter or number. Do not include any additional explanations or preamble in your response. Remember, your goal is to answer as precisely and accurately as possible!

The following figure shows how, for this specific case, the number of thinking tokens reduced by 35%, while still getting the final answer correct (B. Decrease by 1.5%). Here, the number of thinking tokens reduced from 5,000 to 3,300. We also notice that in this and other examples with the original prompts, part of the reasoning is summarized or repeated before the final answer. As we can see in this example, the optimized prompt gives clear instructions, separates different prompt sections, and provides additional guidance based on the type of question and how to answer. This leads to both shorter, clearer reasoning traces and a directly extractable final answer.

Optimized prompts can also lead to correct answers as opposed to wrong ones after long-form thinking, because thinking doesn’t guarantee a correct final answer. In this case, we see that the number of thinking tokens reduced from 5,000 to 1,555, and the answer is obtained directly, rather than after another long, post-thinking explanation. The following figure shows an example.

The preceding two examples demonstrate ways in which prompt optimization can improve results while shortening output tokens for models like DeepSeek R1. Prompt optimization was also applied to 400 questions from HLE. The following table summarizes the results.

| Experiment | Overall Accuracy (%) | Average Number of Prompt Tokens | Average Number of Completion Tokens (Thinking + Response) | Average Number of Tokens (Response Only) | Average Number of Tokens (Thinking Only) | Percentage of Thinking Completed (6,000 Maximum Output Tokens) |
|---|---|---|---|---|---|---|
| Baseline DeepSeek | 8.75 | 288 | 3334 | 271 | 3063 | 80.0% |
| Prompt Optimized DeepSeek | 11 | 326 | 1925 | 27 | 1898 | 90.3% |

As we can see, the overall accuracy jumps to 11% on this subset of the HLE dataset, the number of thinking and output tokens are reduced (therefore reducing the time to last token and cost), and the rate of completing thinking increased to 90% overall. From our experiments, we see that although there is no explicit reference to reducing the thinking tokens, the clearer, more detailed instructions about the task at hand after prompt optimization might reduce the additional effort involved for models like DeepSeek-R1 to do self-clarification or deeper problem understanding. Prompt optimization for reasoning models makes sure that the quality of thinking and overall flow, which is self-adaptive and dependent on the question, is largely unaffected, leading to better final answers.
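To make the size of these changes concrete, the following short calculation derives relative reductions from the averages reported in the table. It is only arithmetic on the published numbers. Converting accuracy to a question count assumes accuracy was computed over all 400 questions, which the post does not state explicitly.

```python
# Averages copied from the results table above.
baseline = {"accuracy_pct": 8.75, "completion": 3334, "response": 271, "thinking": 3063}
optimized = {"accuracy_pct": 11.0, "completion": 1925, "response": 27, "thinking": 1898}
QUESTIONS = 400  # size of the HLE subset used in the experiment


def pct_reduction(before: float, after: float) -> float:
    """Relative reduction from before to after, in percent, rounded to one decimal."""
    return round(100 * (before - after) / before, 1)


reductions = {
    key: pct_reduction(baseline[key], optimized[key])
    for key in ("completion", "response", "thinking")
}
# Assumption: accuracy is the share of all 400 questions answered correctly.
correct = {
    "baseline": round(baseline["accuracy_pct"] / 100 * QUESTIONS),
    "optimized": round(optimized["accuracy_pct"] / 100 * QUESTIONS),
}

print(reductions)  # {'completion': 42.3, 'response': 90.0, 'thinking': 38.0}
print(correct)     # {'baseline': 35, 'optimized': 44}
```

On these averages, thinking tokens drop by about 38% and total completion tokens by about 42%, while the response after thinking shrinks by about 90%.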

Conclusion

In this post, we demonstrated how prompt optimization on Amazon Bedrock can effectively enhance the performance of thinking-intensive models like DeepSeek-R1. Through our experiments with the HLE dataset, we showed that optimized prompts not only reduced the number of thinking tokens by a significant margin, but also improved overall accuracy from 8.75% to 11%. The optimization resulted in more efficient reasoning paths without sacrificing the quality of answers, leading to faster response times and lower costs. This improvement in both efficiency and effectiveness suggests that prompt optimization can be a valuable tool for deploying reasoning-heavy models in production environments where both accuracy and computational resources need to be carefully balanced. As the field of AI continues to evolve with more sophisticated thinking models, techniques like prompt optimization will become increasingly important for practical applications.

To get started with prompt optimization on Amazon Bedrock, refer to Optimize a prompt and Improve the performance of your Generative AI applications with Prompt Optimization on Amazon Bedrock.

Appendix

The following is the full response for the question about Nate’s dog:

About the authors

Shreyas Subramanian is a Principal Data Scientist and helps customers by using generative AI and deep learning to solve their business challenges using AWS services. Shreyas has a background in large-scale optimization and ML and in the use of ML and reinforcement learning for accelerating optimization tasks.

Zhengyuan Shen is an Applied Scientist at Amazon Bedrock, specializing in foundational models and ML modeling for complex tasks including natural language and structured data understanding. He is passionate about leveraging innovative ML solutions to enhance products or services, thereby simplifying the lives of customers through a seamless blend of science and engineering. Outside work, he enjoys sports and cooking.

Xuan Qi is an Applied Scientist at Amazon Bedrock, where she applies her background in physics to tackle complex challenges in machine learning and artificial intelligence. Xuan is passionate about translating scientific concepts into practical applications that drive tangible improvements in technology. Her work focuses on creating more intuitive and efficient AI systems that can better understand and interact with the world. Outside of her professional pursuits, Xuan finds balance and creativity through her love for dancing and playing the violin, bringing the precision and harmony of these arts into her scientific endeavors.

Shuai Wang is a Senior Applied Scientist and Manager at Amazon Bedrock, specializing in natural language processing, machine learning, large language modeling, and other related AI areas.

Amazon Bedrock announces general availability of multi-agent collaboration

Today, we’re announcing the general availability (GA) of multi-agent collaboration on Amazon Bedrock. This capability allows developers to build, deploy, and manage networks of AI agents that work together to execute complex, multi-step workflows efficiently.

Since its preview launch at re:Invent 2024, organizations across industries—including financial services, healthcare, supply chain and logistics, manufacturing, and customer support—have used multi-agent collaboration to orchestrate specialized agents, driving efficiency, accuracy, and automation. With this GA release, we’ve introduced enhancements based on customer feedback, further improving scalability, observability, and flexibility—making AI-driven workflows easier to manage and optimize.

What is multi-agent collaboration?

Generative AI is no longer just about models generating responses; it’s about automation. The next wave of innovation is driven by agents that can reason, plan, and act autonomously across company systems. These applications take action, solve problems, and execute complex workflows in addition to generating content. The shift is clear: businesses need AI that doesn’t just respond to prompts but orchestrates entire workflows, automating processes end to end.

Agents enable generative AI applications to perform tasks across company systems and data sources, and Amazon Bedrock already simplifies building them. With Amazon Bedrock, customers can quickly create agents that handle sales orders, compile financial reports, analyze customer retention, and much more. However, as applications become more capable, the tasks customers want them to perform can exceed what a single agent can manage, because the tasks require specialized expertise, involve multiple steps, or demand continuous execution over time.

Coordinating potentially hundreds of agents at scale is also challenging, because managing dependencies, ensuring efficient task distribution, and maintaining performance across a large network of specialized agents requires sophisticated orchestration. Without the right tools, businesses can face inefficiencies, increased latency, and difficulties in monitoring and optimizing performance. For customers looking to advance their agents and tackle more intricate, multi-step workflows, Amazon Bedrock supports multi-agent collaboration, enabling developers to easily build, deploy, and manage multiple specialized agents working together seamlessly.

Multi-agent collaboration enables developers to create networks of specialized agents that communicate and coordinate under the guidance of a supervisor agent. Each agent contributes its expertise to the larger workflow by focusing on a specific task. This approach breaks down complex processes into manageable sub-tasks processed in parallel. By facilitating seamless interaction among agents, Amazon Bedrock enhances operational efficiency and accuracy, ensuring workflows run more effectively at scale. Because each agent only accesses the data required for its role, this approach minimizes exposure of sensitive information while reinforcing security and governance. This allows businesses to scale their AI-driven workflows without the need for manual intervention in coordinating agents. As more agents are added, the supervisor ensures smooth collaboration between them all.

By using multi-agent collaboration on Amazon Bedrock, organizations can:

- Streamline AI-driven workflows by distributing workloads across specialized agents.

- Improve execution efficiency by parallelizing tasks where possible.

- Enhance security and governance by restricting agent access to only necessary data.

- Reduce operational complexity by eliminating manual intervention in agent coordination.

A key challenge in building effective multi-agent collaboration systems is managing the complexity and overhead of coordinating multiple specialized agents at scale. Amazon Bedrock simplifies the process of building, deploying, and orchestrating effective multi-agent collaboration systems while addressing efficiency challenges through several key features and optimizations:

- Quick setup – Create, deploy, and manage AI agents working together in minutes without the need for complex coding.

- Composability – Integrate your existing agents as subagents within a larger agent system, allowing them to seamlessly work together to tackle complex workflows.

- Efficient inter-agent communication – The supervisor agent can interact with subagents using a consistent interface, supporting parallel communication for more efficient task completion.

- Optimized collaboration modes – Choose between supervisor mode and supervisor with routing mode. With routing mode, the supervisor agent will route simple requests directly to specialized subagents, bypassing full orchestration. For complex queries or when no clear intention is detected, it automatically falls back to the full supervisor mode, where the supervisor agent analyzes, breaks down problems, and coordinates multiple subagents as needed.

- Integrated trace and debug console – Visualize and analyze multi-agent interactions behind the scenes using the integrated trace and debug console.
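As a rough illustration of how the collaboration mode and subagents might be configured programmatically, the following minimal sketch builds the keyword arguments for the `create_agent` and `associate_agent_collaborator` operations of the boto3 `bedrock-agent` client. The helper names and all example values are hypothetical, and the parameter names reflect our understanding of that client, so verify them against the current API reference.

```python
def supervisor_agent_args(name: str, role_arn: str, model_id: str,
                          instruction: str, routing: bool = False) -> dict:
    """Keyword arguments for create_agent on a supervisor agent."""
    return {
        "agentName": name,
        "agentResourceRoleArn": role_arn,
        "foundationModel": model_id,
        "instruction": instruction,
        # SUPERVISOR_ROUTER corresponds to supervisor with routing mode.
        "agentCollaboration": "SUPERVISOR_ROUTER" if routing else "SUPERVISOR",
    }


def collaborator_args(supervisor_id: str, alias_arn: str, name: str,
                      instruction: str, share_history: bool = True) -> dict:
    """Keyword arguments for associate_agent_collaborator (attaches one subagent)."""
    return {
        "agentId": supervisor_id,
        "agentVersion": "DRAFT",
        "agentDescriptor": {"aliasArn": alias_arn},
        "collaboratorName": name,
        "collaborationInstruction": instruction,
        "relayConversationHistory": "TO_COLLABORATOR" if share_history else "DISABLED",
    }


# Usage (requires boto3 and AWS credentials; identifiers are placeholders):
#   import boto3
#   client = boto3.client("bedrock-agent")
#   client.create_agent(**supervisor_agent_args(...))
#   client.associate_agent_collaborator(**collaborator_args(...))
```

Keeping the arguments in plain dictionaries makes the configuration easy to review and test before any AWS call is made.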

What’s new in general availability?

The GA release introduces several key enhancements based on customer feedback, making multi-agent collaboration more scalable, flexible, and efficient:

- Inline agent support – Enables the creation of supervisor agents dynamically at runtime, allowing for more flexible agent management without predefined structures.

- AWS CloudFormation and AWS Cloud Development Kit (AWS CDK) support – Lets customers deploy agent networks as code, with scalable, reusable agent templates across AWS accounts.

- Enhanced traceability and debugging – Provides structured execution logs, sub-step tracking, and Amazon CloudWatch integration to improve monitoring and troubleshooting.

- Increased collaborator and step count limits – Expands self-service limits for agent collaborators and execution steps, supporting larger-scale workflows.

- Payload referencing – Reduces latency and costs by allowing the supervisor agent to reference external data sources without embedding them in the agent request.

- Improved citation handling – Enhances accuracy and attribution when agents pull external data sources into their responses.

These features collectively improve coordination capabilities, communication speed, and overall effectiveness of the multi-agent collaboration framework in tackling complex, real-world problems.
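To inspect what a supervisor and its collaborators did for a given request, traces can also be collected in code. The following is a minimal sketch, assuming the `invoke_agent` operation of the boto3 `bedrock-agent-runtime` client with `enableTrace=True`. The helper name `invoke_with_trace` and the identifiers in the usage comment are hypothetical, and the contents of each trace event are not interpreted here.

```python
from typing import Any, List, Tuple


def invoke_with_trace(client: Any, agent_id: str, alias_id: str,
                      session_id: str, text: str) -> Tuple[str, List[dict]]:
    """Invoke an agent and return (final answer, list of raw trace events)."""
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=alias_id,
        sessionId=session_id,
        inputText=text,
        enableTrace=True,
    )
    answer_parts: List[str] = []
    traces: List[dict] = []
    # The completion is an event stream mixing answer chunks and trace events.
    for event in response["completion"]:
        if "chunk" in event:
            answer_parts.append(event["chunk"]["bytes"].decode("utf-8"))
        elif "trace" in event:
            traces.append(event["trace"])
    return "".join(answer_parts), traces


# Usage (requires boto3 and AWS credentials; identifiers are placeholders):
#   import boto3
#   client = boto3.client("bedrock-agent-runtime")
#   answer, traces = invoke_with_trace(client, "AGENT_ID", "ALIAS_ID", "session-1", "Hello")
```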

Multi-agent collaboration across industries

Multi-agent collaboration is already transforming AI automation across sectors:

- Investment advisory – A financial firm uses multiple agents to analyze market trends, risk factors, and investment opportunities to deliver personalized client recommendations.

- Retail operations – A retailer deploys agents for demand forecasting, inventory tracking, pricing optimization, and order fulfillment to increase operational efficiency.

- Fraud detection – A banking institution assigns agents to monitor transactions, detect anomalies, validate customer behaviors, and flag potential fraud risks in real time.

- Customer support – An enterprise customer service platform uses agents for sentiment analysis, ticket classification, knowledge base retrieval, and automated responses to enhance resolution times.

- Healthcare diagnosis – A hospital system integrates agents for patient record analysis, symptom recognition, medical imaging review, and treatment plan recommendations to assist clinicians.

Deep dive: Syngenta’s use of multi-agent collaboration

Syngenta, a global leader in agricultural innovation, has integrated cutting-edge generative AI into its Cropwise service, resulting in the development of Cropwise AI. This advanced system is designed to enhance the efficiency of agronomic advisors and growers by providing tailored recommendations for crop management practices.

Business challenge

The agricultural sector faces the complex task of optimizing crop yields while ensuring sustainability and profitability. Farmers and agronomic advisors must consider a multitude of factors, including weather patterns, soil conditions, crop growth stages, and potential pest and disease threats. In the past, analyzing these variables required extensive manual effort and expertise. Syngenta recognized the need for a more efficient, data-driven approach to support decision-making in crop management.

Solution: Cropwise AI

To address these challenges, Syngenta collaborated with AWS to develop Cropwise AI, using Amazon Bedrock Agents to create a multi-agent system that integrates various data sources and AI capabilities. This system offers several key features:

- Advanced seed recommendation and placement – Uses predictive machine learning algorithms to deliver personalized seed recommendations tailored to each grower’s unique environment.

- Sophisticated predictive modeling – Employs state-of-the-art machine learning algorithms to forecast crop growth patterns, yield potential, and potential risk factors by integrating real-time data with comprehensive historical information.

- Precision agriculture optimization – Provides hyper-localized, site-specific recommendations for input application, minimizing waste and maximizing resource efficiency.

Agent architecture

Cropwise AI is built on AWS architecture and designed for scalability, maintainability, and security. The system uses Amazon Bedrock Agents to orchestrate multiple AI agents, each specializing in distinct tasks:

- Data aggregation agent – Collects and integrates extensive datasets, including over 20 years of weather history, soil conditions, and more than 80,000 observations on crop growth stages.

- Recommendation agent – Analyzes the aggregated data to provide tailored recommendations for precise input applications, product placement, and strategies for pest and disease control.

- Conversational AI agent – Uses a multilingual conversational large language model (LLM) to interact with users in natural language, delivering insights in a clear format.

This multi-agent collaboration enables Cropwise AI to process complex agricultural data efficiently, offering actionable insights and personalized recommendations to enhance crop yields, sustainability, and profitability.

Results

By implementing Cropwise AI, Syngenta has achieved significant improvements in agricultural practices:

- Enhanced decision-making: Agronomic advisors and growers receive data-driven recommendations, leading to optimized crop management strategies.

- Increased yields: Utilizing Syngenta’s seed recommendation models, Cropwise AI helps growers increase yields by up to 5%.

- Sustainable practices: The system promotes precision agriculture, reducing waste and minimizing environmental impact through optimized input applications.

Highlighting the significance of this advancement, Feroz Sheikh, Chief Information and Digital Officer at Syngenta Group, stated:

“Agricultural innovation leader Syngenta is using Amazon Bedrock Agents as part of its Cropwise AI solution, which gives growers deep insights to help them optimize crop yields, improve sustainability, and drive profitability. With multi-agent collaboration, Syngenta will be able to use multiple agents to further improve their recommendations to growers, transforming how their end-users make decisions and delivering even greater value to the farming community.”

This collaboration between Syngenta and AWS exemplifies the transformative potential of generative AI and multi-agent systems in agriculture, driving innovation and supporting sustainable farming practices.

How multi-agent collaboration works

Amazon Bedrock automates agent collaboration, including task delegation, execution tracking, and data orchestration. Developers can configure their system in one of two collaboration modes:

- Supervisor mode

  - The supervisor agent receives an input, breaks down complex requests, and assigns tasks to specialized sub-agents.

  - Sub-agents execute tasks in parallel or sequentially, returning responses to the supervisor, which consolidates the results.

- Supervisor with routing mode

  - Simple queries are routed directly to a relevant sub-agent.

  - Complex or ambiguous requests trigger the supervisor to coordinate multiple agents to complete the task.
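The two modes can be illustrated with a minimal, library-free sketch. It does not call Amazon Bedrock. The sub-agents, the keyword-based matcher, and every function name below are hypothetical stand-ins chosen for illustration; a real deployment would rely on the service's own delegation and routing rather than on string matching.

```python
from typing import Callable, Dict, List

# Hypothetical sub-agents: plain functions standing in for specialized agents.
SubAgent = Callable[[str], str]


def weather_agent(request: str) -> str:
    return "weather: 20-year history summarized"


def soil_agent(request: str) -> str:
    return "soil: conditions summarized"


SUB_AGENTS: Dict[str, SubAgent] = {"weather": weather_agent, "soil": soil_agent}


def matching_agents(request: str) -> List[str]:
    """Toy intent matcher (assumption): an agent is relevant if its name appears in the request."""
    text = request.lower()
    return [name for name in SUB_AGENTS if name in text]


def supervisor(request: str, agent_names: List[str]) -> str:
    """Supervisor mode: delegate to each sub-agent in turn, then consolidate the results."""
    results = [SUB_AGENTS[name](request) for name in agent_names]
    return " | ".join(results)


def supervisor_with_routing(request: str) -> str:
    """Route a simple request directly; otherwise let the supervisor coordinate."""
    names = matching_agents(request)
    if len(names) == 1:
        # Simple query: exactly one relevant sub-agent, so skip the supervisor.
        return SUB_AGENTS[names[0]](request)
    # Ambiguous (no match) or complex (several matches): coordinate multiple agents.
    return supervisor(request, names or list(SUB_AGENTS))
```

In this sketch, a request that mentions only one agent's domain takes the direct route, while a request that mentions several domains or none falls back to the supervisor, which calls the sub-agents sequentially and joins their answers.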

Watch the Amazon Bedrock multi-agent collaboration video to learn how to get started.

Conclusion

By enabling seamless multi-agent collaboration, Amazon Bedrock empowers businesses to scale their generative AI applications with greater efficiency, accuracy, and flexibility. As organizations continue to push the boundaries of AI-driven automation, having the right tools to orchestrate complex workflows will be essential. With Amazon Bedrock, companies can confidently build AI systems that don’t just generate responses but drive real impact—automating processes, solving problems, and unlocking new possibilities across industries.

Amazon Bedrock multi-agent collaboration is now generally available.

- Learn more: Automate tasks in your application using AI agents

- Code samples: Amazon Bedrock agent samples on GitHub

- Try it out today in the AWS Management Console for Amazon Bedrock.

Multi-agent collaboration opens new possibilities for AI-driven automation. Whether in finance, healthcare, retail, or agriculture, Amazon Bedrock helps organizations scale AI workflows with efficiency and precision.

Start building today—and let us know what you create!

About the authors

Sri Koneru has spent the last 13.5 years honing her skills in both cutting-edge product development and large-scale infrastructure. At Salesforce for 7.5 years, she had the incredible opportunity to build and launch brand new products from the ground up, reaching over 100,000 external customers. This experience was instrumental in her professional growth. Then, at Google for 6 years, she transitioned to managing critical infrastructure, overseeing capacity, efficiency, fungibility, job scheduling, data platforms, and spatial flexibility for all of Alphabet. Most recently, Sri joined Amazon Web Services, leveraging her diverse skillset to make a significant impact on AI/ML services and infrastructure at AWS. Personally, Sri and her husband recently became empty nesters, relocating to Seattle from the Bay Area. They’re a basketball-loving family who even catch pre-season Warriors games but are looking forward to cheering on the Seattle Storm this year. Beyond basketball, Sri enjoys cooking, recipe creation, reading, and her newfound hobby of hiking. While she’s a sun-seeker at heart, she is looking forward to experiencing the unique character of Seattle weather.


Utah to Advance AI Education, Training

A new AI education initiative in the State of Utah, developed in collaboration with NVIDIA, is set to advance the state’s commitment to workforce training and economic growth.

The public-private partnership aims to equip universities, community colleges and adult education programs across Utah with the resources to develop skills in generative AI.

“AI will continue to grow in importance, affecting every sector of Utah’s economy,” said Spencer Cox, governor of Utah. “We need to prepare our students and faculty for this revolution. Working with NVIDIA is an ideal path to help ensure that Utah is positioned for AI growth in the near and long term.”

As part of the new initiative, Utah’s educators can gain certification through the NVIDIA Deep Learning Institute University Ambassador Program. The program offers high-quality teaching kits, extensive workshop content and access to NVIDIA GPU-accelerated workstations in the cloud.

By empowering educators with the latest AI skills and technologies, the initiative seeks to create a competitive advantage for Utah’s entire higher education system.

“We believe that AI education is more than a pathway to innovation — it’s a foundation for solving some of the world’s most pressing challenges,” said Manish Parashar, director of the University of Utah Scientific Computing and Imaging (SCI) Institute, which leads the One-U Responsible AI Initiative. “By equipping students and researchers with the tools to explore, understand and create with AI, we empower them to be able to drive advancements in medicine, engineering and beyond.”

The initiative will begin with the Utah System of Higher Education (USHE) and several other universities in the state, including the University of Utah, Utah State University, Utah Valley University, Weber State University, Utah Tech University, Southern Utah University, Snow College and Salt Lake Community College.

Setting Up Students and Professionals for Success

The Utah AI education initiative will benefit students entering the job market and working professionals by helping them expand their skill sets beyond community college or adult education courses.

Utah state agencies are exploring how internship and apprenticeship programs can offer students hands-on experience with AI skills, helping bridge the gap between education and industry needs. This initiative aligns with Utah’s broader goals of fostering a tech-savvy workforce and positioning the state as a leader in AI innovation and application.

As AI continues to evolve and gain prevalence across industries, Utah’s proactive approach to equipping educators and students with resources and training will help prepare its workforce for the future of technology, sharpening its competitive edge.

Semantic Telemetry: Understanding how users interact with AI systems

AI tools are proving useful across a range of applications, from helping to drive the new era of business transformation to helping artists craft songs. But which applications are providing the most value to users? We’ll dig into that question in a series of blog posts that introduce the Semantic Telemetry project at Microsoft Research. In this initial post, we will introduce a new data science approach that we will use to analyze topics and task complexity of Copilot in Bing usage.

Human-AI interactions can be iterative and complex, requiring a new data science approach to understand user behavior to build and support increasingly high value use cases. Imagine the following chat:

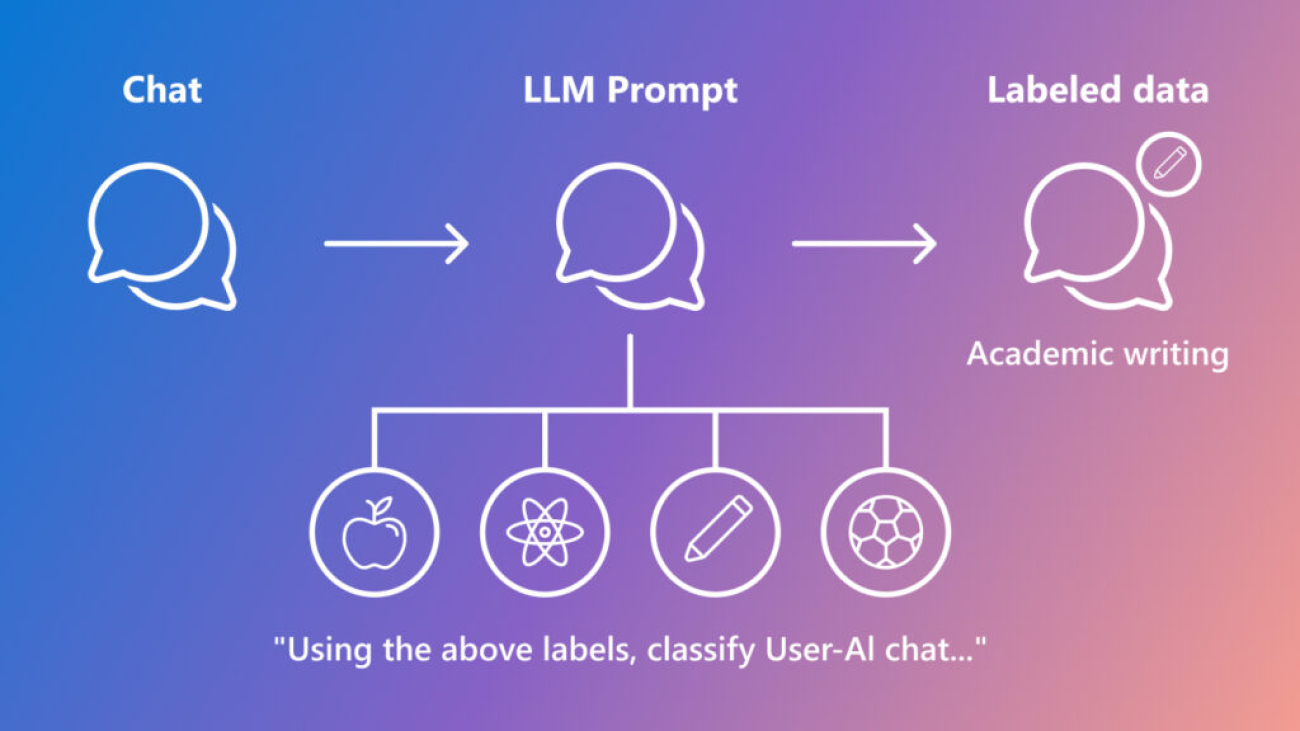

Here we see that chats can be complex and span multiple topics, such as event planning, team building, and logistics. Generative AI has ushered in a two-fold paradigm shift. First, LLMs give us a new thing to measure: how people interact with AI systems. Second, they give us a new way to measure those interactions: the capability to understand and make inferences about them at scale. The Semantic Telemetry project has created new measures to classify human-AI interactions and understand user behavior, contributing to efforts in developing new approaches for measuring generative AI across various use cases.

Semantic Telemetry rethinks traditional telemetry (in which data is collected to understand systems) for the analysis of chat-based AI. We employ a data science methodology that uses a large language model (LLM) to generate meaningful categorical labels, enabling us to gain insights into chat log data.

This process begins with developing a set of classifications and definitions. We create these classifications by instructing an LLM to generate a short summary of the conversation, and then iteratively prompting the LLM to generate, update, and review classification labels on a batched set of summaries. This process is outlined in the paper: TnT-LLM: Text Mining at Scale with Large Language Models. We then prompt an LLM with these generated classifiers to label new unstructured (and unlabeled) chat log data.
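The three steps described above (summarize, build labels iteratively over batches of summaries, then label new chats) can be sketched as follows. This is a simplified illustration, not the TnT-LLM implementation: the prompts, the function names, the batch size, and the `fake_llm` stand-in are all assumptions made so the example runs offline, and the separate review pass is folded into the update step.

```python
from typing import Callable, List

# Hypothetical model interface: takes a prompt string, returns generated text.
LLM = Callable[[str], str]


def summarize(chat: str, llm: LLM) -> str:
    """Step 1: ask the model for a short summary of one conversation."""
    return llm(f"Summarize this conversation in one sentence:\n{chat}")


def build_taxonomy(summaries: List[str], llm: LLM, batch_size: int = 2) -> List[str]:
    """Step 2: iteratively update the label set, one batch of summaries at a time."""
    labels: List[str] = []
    for start in range(0, len(summaries), batch_size):
        batch = summaries[start:start + batch_size]
        prompt = (
            "Current labels: " + ", ".join(labels) + "\n"
            "Update the label set so it covers these summaries. "
            "Return a comma-separated list.\n" + "\n".join(batch)
        )
        labels = [label.strip() for label in llm(prompt).split(",") if label.strip()]
    return labels


def classify(chat: str, labels: List[str], llm: LLM) -> str:
    """Step 3: label a new chat, falling back to 'other' for off-list answers."""
    answer = llm(f"Pick one label from {labels} for this chat:\n{chat}").strip()
    return answer if answer in labels else "other"


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (assumption) so the sketch runs offline."""
    if prompt.startswith("Summarize"):
        return prompt.splitlines()[-1][:60]
    if prompt.startswith("Current labels"):
        return "technology, entertainment"
    return "technology"
```

Swapping `fake_llm` for a function that calls an actual model leaves the rest of the pipeline unchanged, which is the main reason for passing the model in as a plain callable.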

With this approach, we analyzed how people interact with Copilot in Bing. In this post, we examine how people use Copilot in Bing and how that use differs from traditional search engines. Note that all analyses were conducted on anonymous Copilot interactions containing no personal information.

Topics

To get a clear picture of how people are using Copilot in Bing, we first need to classify sessions into topical categories. To do this, we developed a topic classifier. We used the LLM classification approach described above to label the primary topic (domain) for the entire content of the chat. Although a single chat can cover multiple topics, for this analysis we generated a single label for the primary topic of the conversation. We sampled five million anonymized Copilot in Bing chats during August and September 2024 and found that, globally, 21% of all chats were about technology, with a high concentration of these chats in two subcategories: "programming and scripting" and "computers and electronics".

Diving into the technology category, we find a lot of professional tasks in programming and scripting, where users request problem-specific assistance such as fixing a SQL query syntax error. In computers and electronics, we observe users getting help with tasks like adjusting screen brightness and troubleshooting internet connectivity issues. We can compare this with our second most common topic, entertainment, in which we see users seeking information related to personal activities like hiking and game nights.

We also note that top topics differ by platform. The figure below depicts topic popularity based on mobile and desktop usage. Mobile users tend to use the chat for more personal tasks, such as getting help planting a garden or understanding medical symptoms, whereas desktop users conduct more professional tasks, like revising an email.


Search versus Copilot

Beyond analyzing topics, we compared Copilot in Bing usage to that of traditional search. Chat extends beyond traditional online search by enabling users to summarize, generate, compare, and analyze information. Human-AI interactions are conversational and more complex than traditional search (Figure 6).

A major differentiation between search and chat is the ability to ask more complex questions, but how can we measure this? We think of complexity as a scale ranging from simply asking chat to look up information to evaluating several ideas. We aim to understand the difficulty of a task if performed by a human without the assistance of AI. To achieve this, we developed the task complexity classifier, which assesses task difficulty using Anderson and Krathwohl’s Taxonomy of Learning Objectives. For our analysis, we have grouped the learning objectives into two categories: low complexity and high complexity. Any task more complicated than information lookup is classified as high complexity. Note that this would be very challenging to classify using traditional data science techniques.
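The two-way grouping can be written down in a few lines. The sketch below uses the six levels of Anderson and Krathwohl's revised taxonomy and assumes that "information lookup" corresponds to the lowest level, Remember; that mapping, along with the function names, is an illustrative assumption rather than the project's published classifier.

```python
from collections import Counter
from typing import Iterable

# Levels of Anderson and Krathwohl's revised taxonomy, lowest to highest.
LEVELS = ["remember", "understand", "apply", "analyze", "evaluate", "create"]

# Assumption: "information lookup" corresponds to the lowest level only.
LOW_COMPLEXITY = {"remember"}


def complexity(level: str) -> str:
    """Collapse a taxonomy level into the low/high grouping."""
    level = level.lower()
    if level not in LEVELS:
        raise ValueError(f"unknown level: {level}")
    return "low" if level in LOW_COMPLEXITY else "high"


def high_complexity_share(levels: Iterable[str]) -> float:
    """Fraction of labeled chats that fall in the high-complexity group."""
    counts = Counter(complexity(level) for level in levels)
    total = sum(counts.values())
    return counts["high"] / total if total else 0.0
```

Given one taxonomy level per chat (for example, as produced by an LLM labeler), `high_complexity_share` yields the kind of aggregate percentage used to compare low and high complexity usage.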

Comparing low versus high complexity tasks, most chat interactions were categorized as high complexity (78.9%), meaning that they were more complex than looking up information. Programming and scripting, marketing and sales, and creative and professional writing are topics in which users engage in higher complexity tasks (Figure 7) such as learning a skill, troubleshooting a problem, or writing an article.

Travel and tourism and history and culture scored lowest in complexity, with users looking up information such as flight times and the latest news updates.