Learn more about Google Research’s FireSat project, built to detect small wildfires.

New AI Collaboratives to take action on wildfires and food insecurity

Learn how our AI Collaboratives for wildfires and food security are taking a new funding approach to help people around the world.

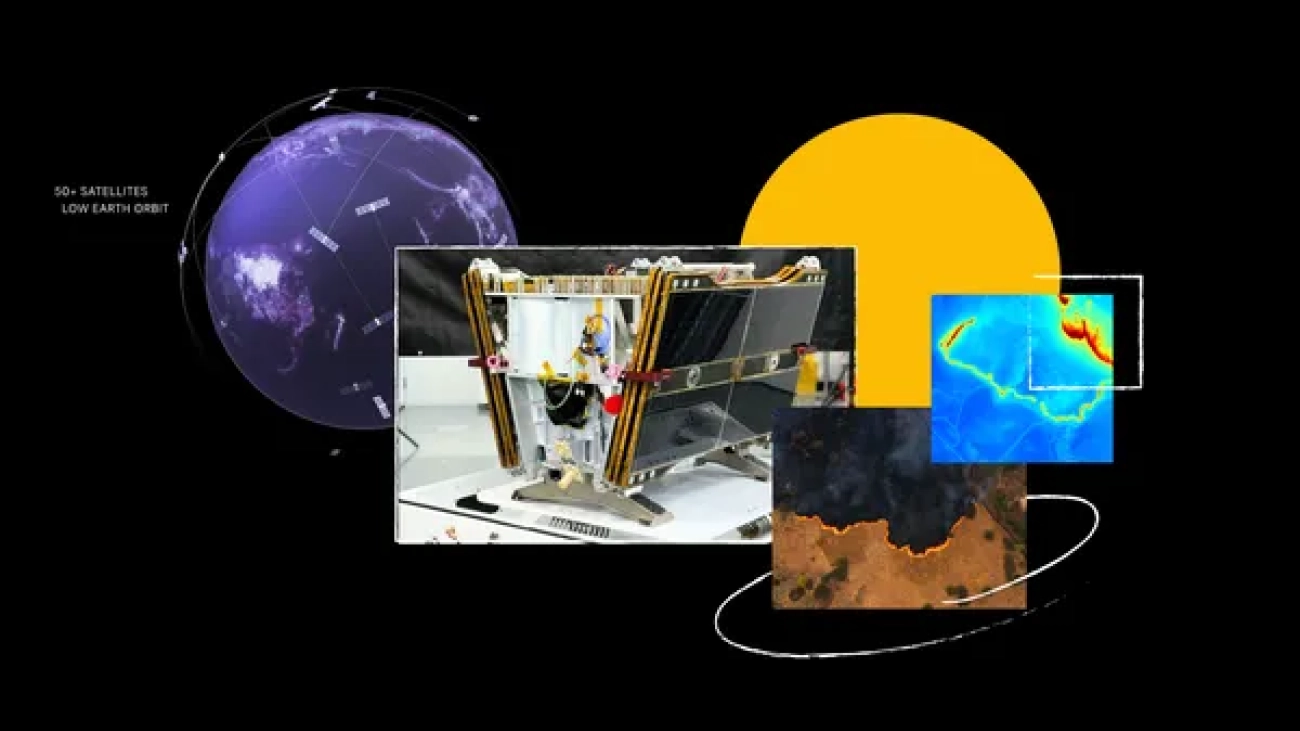

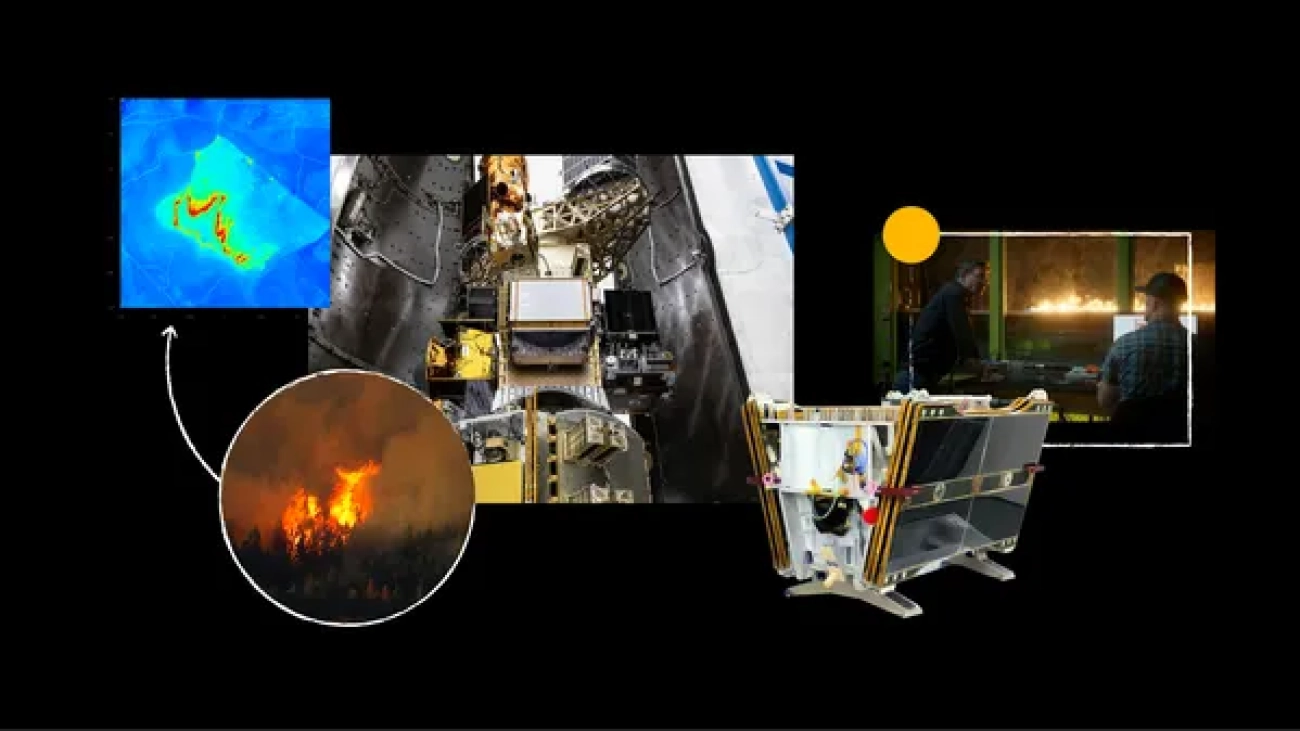

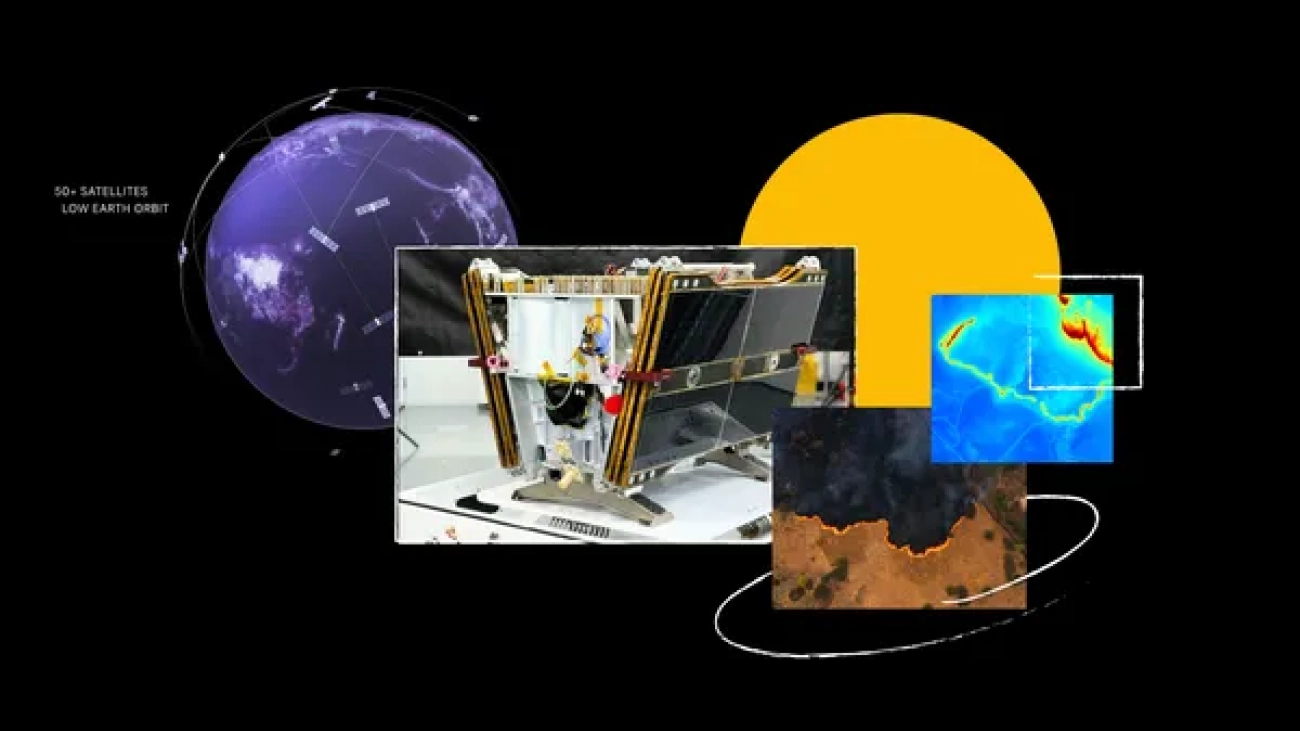

The first FireSat satellite has launched to help detect smaller wildfires earlier.

The first satellite for the FireSat constellation officially made contact with Earth. This satellite is the first of more than 50 in a first-of-its-kind constellation de…

Inside the launch of FireSat, a system to find wildfires earlier

Learn more about Google Research’s FireSat project, built to detect small wildfires.

PyTorch at GTC 2025

GTC is coming back to San Jose on March 17–21, 2025. Join PyTorch Foundation members Arm, AWS, Google Cloud, IBM, Lightning AI, Meta, Microsoft Azure, Snowflake, and thousands of developers as we celebrate PyTorch. Together learn how AI & accelerated computing are helping humanity solve our most complex challenges.

Join in person with discounted GTC registration for PyTorch Foundation or watch online with free registration.

Scaling Open Source AI: From Foundation Models to Ecosystem Success

Hear from PyTorch Foundation’s Executive Director Matt White and panelists from UC Berkeley, Meta, NVIDIA, and Sequoia Capital about how open source is transforming AI development. The panel brings together experts from industry, academia, and venture capital to discuss the technical and business aspects of collaborative open source AI development. They’ll examine how open source projects like PyTorch, vLLM, Ray, and NVIDIA’s NeMo are accelerating AI innovation while creating new opportunities for businesses and researchers. They’ll share real-world experiences from PyTorch’s development, Berkeley’s research initiatives, and successful AI startups. – Monday, Mar 17 10:00 AM – 11:00 AM PDT

PyTorch @ GTC

The Performance of CUDA with the Flexibility of PyTorch

Mark Saroufim, Software Engineer, Meta Platforms

This talk explores how PyTorch users are also becoming CUDA developers. We’ll start with motivating examples from eager, the launch of torch.compile and the more recent trend of kernel zoos. We will share details on how we went about integrating low bit matmuls in torchao and the torch.compile CUTLASS backend. We’ll also discuss details on how you can define, build and package your own custom ops in PyTorch so you get the raw performance of CUDA while maintaining the flexibility of PyTorch.

Make My PyTorch Model Fast, and Show Me How You Did It

Thomas Viehmann, Principal Research Engineer, Lightning AI

Luca Antiga, CTO, Lightning AI

PyTorch is popular in deep learning and LLMs for its richness and ease of expression. To make the most of compute resources, PyTorch models benefit from nontrivial optimizations, but this means losing some of their ease and understandability. Learn how with Thunder, a PyTorch-to-Python compiler focused on usability, understandability, and extensibility, you can optimize and transform (i.e., distribute across many machines) models while:

- leaving the PyTorch code unchanged

- targeting a variety of models without needing to adapt to each of them

- understanding each transformation step because the results are presented as simple Python code

- accessing powerful extension code for your own optimizations with just one or a few lines of code

We’ll show how the combination of Thunder transforms and the NVIDIA stack (NVFuser, cuDNN, Apex) delivers optimized performance in training and inference on a variety of models.

FlexAttention: The Flexibility of PyTorch With the Performance of FlashAttention

Driss Guessous, Machine Learning Engineer, Meta Platforms

Introducing FlexAttention: a novel PyTorch API that enables custom, user-defined attention mechanisms with performance comparable to state-of-the-art solutions. By leveraging the PyTorch compiler stack, FlexAttention supports dynamic modifications to attention scores within SDPA, achieving both runtime and memory efficiency through kernel fusion with the FlashAttention algorithm. Our benchmarks on A100 GPUs show FlexAttention achieves 90% of FlashAttention2’s performance in forward passes and 85% in backward passes. On H100 GPUs, FlexAttention’s forward performance averages 85% of FlashAttention3 and is ~25% faster than FlashAttention2, while backward performance averages 76% of FlashAttention3 and is ~3% faster than FlashAttention2. Explore how FlexAttention balances near-state-of-the-art performance with unparalleled flexibility, empowering researchers to rapidly iterate on attention mechanisms without sacrificing efficiency.

Keep Your GPUs Going Brrr : Crushing Whitespace in Model Training

Syed Ahmed, Senior Software Engineer, NVIDIA

Alban Desmaison, Research Engineer, Meta

Aidyn Aitzhan, Senior Software Engineer, NVIDIA

Substantial progress has recently been made on the compute-intensive portions of model training, such as high-performing attention variants. While invaluable, this progress exposes previously hidden bottlenecks in model training, such as redundant copies during collectives and data loading time. We’ll present recent improvements in PyTorch achieved through Meta/NVIDIA collaboration to tackle these newly exposed bottlenecks and how practitioners can leverage them.

Accelerated Python: The Community and Ecosystem

Andy Terrel, CUDA Python Product Lead, NVIDIA

Jeremy Tanner, Open Source Programs, NVIDIA

Anshuman Bhat, CUDA Product Management, NVIDIA

Python is everywhere. Simulation, data science, and Gen AI all depend on it. Unfortunately, the dizzying array of tools leaves a newcomer baffled at where to start. We’ll take you on a guided tour of the vibrant community and ecosystem surrounding accelerated Python programming. Explore a variety of tools, libraries, and frameworks that enable efficient computation and performance optimization in Python, including CUDA Python, RAPIDS, Warp, and Legate. We’ll also discuss integration points with PyData, PyTorch, and JAX communities. Learn about collaborative efforts within the community, including open source projects and contributions that drive innovation in accelerated computing. We’ll discuss best practices for leveraging these frameworks to enhance productivity in developing AI-driven applications and conducting large-scale data analyses.

Supercharge large scale AI with Google Cloud AI hypercomputer (Presented by Google Cloud)

Deepak Patil, Product Manager, Google Cloud

Rajesh Anantharaman, Product Management Lead, ML Software, Google Cloud

Unlock the potential of your large-scale AI workloads with Google Cloud AI Hypercomputer – a supercomputing architecture designed for maximum performance and efficiency. In this session, we will deep dive into PyTorch and JAX stacks on Google Cloud on NVIDIA GPUs, and showcase capabilities for high performance foundation model building on Google Cloud.

Peering Into the Future: What AI and Graph Networks Can Mean for the Future of Financial Analysis

Siddharth Samsi, Sr. Solutions Architect, NVIDIA

Sudeep Kesh, Chief Innovation Officer, S&P Global

Artificial Intelligence, agentic systems, and graph neural networks (GNNs) are providing the new frontier to assess, monitor, and estimate opportunities and risks across work portfolios within financial services. Although many of these technologies are still developing, organizations are eager to understand their potential. See how S&P Global and NVIDIA are working together to find practical ways to learn and integrate such capabilities, ranging from forecasting corporate debt issuance to understanding capital markets at a deeper level. We’ll show a graph representation of market data using the PyTorch-Geometric library and a dataset of issuances spanning three decades and across financial and non-financial industries. Technical developments include generation of a bipartite graph and link-prediction GNN forecasting. We’ll address data preprocessing, pipelines, model training, and how these technologies can broaden capabilities in an increasingly complex world.

Unlock Deep Learning Performance on Blackwell With cuDNN

Yang Xu (Enterprise Products), DL Software Engineering Manager, NVIDIA

Since its launch, cuDNN, a library for GPU-accelerating deep learning (DL) primitives, has been powering many AI applications in domains such as conversational AI, recommender systems, and speech recognition, among others. cuDNN remains a core library for DL primitives in popular frameworks such as PyTorch, JAX, TensorFlow, and many more while covering training, fine-tuning, and inference use cases. Even in the rapidly evolving space of Gen AI (be it Llama, Gemma, or mixture-of-experts variants requiring complex DL primitives such as flash attention variants), cuDNN is powering them all. Learn about new and updated cuDNN APIs pertaining to Blackwell’s microscaling format, and how to program against those APIs. We’ll deep dive into leveraging its graph APIs to build some fusion patterns, such as matmul fusion patterns and fused flash attention from state-of-the-art models. Understand how new CUDA graph support in cuDNN, not to be confused with the cuDNN graph API, could be exploited to avoid rebuilding CUDA graphs, offering an alternative to CUDA graph capture with real-world framework usage.

Train and Serve AI Systems Fast With the Lightning AI Open-Source Stack (Presented by Lightning AI)

Luca Antiga, CTO, Lightning AI

See how the Lightning stack can cover the full life cycle, from data preparation to deployment, with practical examples and particular focus on distributed training and high-performance inference. We’ll show examples that focus on new features like support for multi-dimensional parallelism through DTensors, as well as quantization through torchao.

Connect With Experts (Interactive Sessions)

Meet the Experts From Deep Learning Framework Teams

Eddie Yan, Technical Lead of PyTorch, NVIDIA

Masaki Kozuki, Senior Software Engineer in PyTorch, NVIDIA

Patrick Wang (Enterprise Products), Software Engineer in PyTorch, NVIDIA

Mike Ruberry, Distinguished Engineer in Deep Learning Frameworks, NVIDIA

Rishi Puri, Sr. Deep Learning Engineer and Lead for PyTorch Geometric, NVIDIA

Training Labs

Kernel Optimization for AI and Beyond: Unlocking the Power of Nsight Compute

Felix Schmitt, Sr. System Software Engineer, NVIDIA

Peter Labus, Senior System Software Engineer, NVIDIA

Learn how to unlock the full potential of NVIDIA GPUs with the powerful profiling and analysis capabilities of Nsight Compute. AI workloads are rapidly increasing the demand for GPU computing, and ensuring that they efficiently utilize all available GPU resources is essential. Nsight Compute is the most powerful tool for understanding kernel execution behavior and performance. Learn how to configure and launch profiles customized for your needs, including advice on profiling accelerated Python applications, AI frameworks like PyTorch, and optimizing Tensor Core utilization essential to modern AI performance. Learn how to debug your kernel and use the expert system built into Nsight Compute, known as “Guided Analysis,” that automatically detects common issues and directs you to the most relevant performance data all the way down to the source code level.

Make Retrieval Better: Fine-Tuning an Embedding Model for Domain-Specific RAG

Gabriel Moreira, Sr. Research Scientist, NVIDIA

Ronay Ak, Sr. Data Scientist, NVIDIA

LLMs power AI applications like conversational chatbots and content generators, but are constrained by their training data. This can lead to hallucinations when the generated content requires up-to-date or domain-specific information. Retrieval augmented generation (RAG) addresses this issue by enabling LLMs to access external context without modifying model parameters. Embedding or dense retrieval models are a key component of a RAG pipeline, retrieving relevant context for the LLM. However, an embedding model’s effectiveness at capturing the unique characteristics of custom data hinges on the quality and domain relevance of its training data. Fine-tuning embedding models is gaining interest as a way to provide more accurate and relevant responses tailored to users’ specific domains.

In this lab, you’ll learn to generate a synthetic dataset with question-context pairs from a domain-specific corpus, and process the data for fine-tuning. Then, fine-tune a text embedding model using synthetic data and evaluate it.

Poster Presentations

Single-View X-Ray 3D Reconstruction Using Neural Back Projection and Frustum Resampling

Tran Minh Quan, Developer Technologist, NVIDIA

Enable Novel Applications in the New AI Area in Medicine: Accelerated Feature Computation for Pathology Slides

Nils Bruenggel, Principal Software Engineer, Roche Diagnostics Int. AG

Getting started with computer use in Amazon Bedrock Agents

Computer use is a breakthrough capability from Anthropic that allows foundation models (FMs) to visually perceive and interpret digital interfaces. This capability enables Anthropic’s Claude models to identify what’s on a screen, understand the context of UI elements, and recognize actions that should be performed such as clicking buttons, typing text, scrolling, and navigating between applications. However, the model itself doesn’t execute these actions—it requires an orchestration layer to safely implement the supported actions.

Today, we’re announcing computer use support within Amazon Bedrock Agents using Anthropic’s Claude 3.5 Sonnet V2 and Anthropic’s Claude 3.7 Sonnet models on Amazon Bedrock. This integration brings Anthropic’s visual perception capabilities as a managed tool within Amazon Bedrock Agents, providing you with a secure, traceable, and managed way to implement computer use automation in your workflows.

Organizations across industries struggle with automating repetitive tasks that span multiple applications and systems of record. Whether processing invoices, updating customer records, or managing human resource (HR) documents, these workflows often require employees to manually transfer information between different systems – a process that’s time-consuming, error-prone, and difficult to scale.

Traditional automation approaches require custom API integrations for each application, creating significant development overhead. Computer use capabilities change this paradigm by allowing machines to perceive existing interfaces just as humans do.

In this post, we create a computer use agent demo that provides the critical orchestration layer that transforms computer use from a perception capability into actionable automation. Without this orchestration layer, computer use would only identify potential actions without executing them. The computer use agent demo powered by Amazon Bedrock Agents provides the following benefits:

- Secure execution environment – Execution of computer use tools in a sandbox environment with limited access to the AWS ecosystem and the web. Note that Amazon Bedrock Agents does not currently provide a sandbox environment; the demo supplies its own.

- Comprehensive logging – Ability to track each action and interaction for auditing and debugging

- Detailed tracing capabilities – Visibility into each step of the automated workflow

- Simplified testing and experimentation – Reduced risk when working with this experimental capability through managed controls

- Seamless orchestration – Coordination of complex workflows across multiple systems without custom code

This integration combines Anthropic’s perceptual understanding of digital interfaces with the orchestration capabilities of Amazon Bedrock Agents, creating a powerful agent for automating complex workflows across applications. Rather than build custom integrations for each system, developers can now create agents that perceive and interact with existing interfaces in a managed, secure way.

With computer use, Amazon Bedrock Agents can automate tasks through basic GUI actions and built-in Linux commands. For example, your agent could take screenshots, create and edit text files, and run built-in Linux commands. Using Amazon Bedrock Agents and compatible Anthropic Claude models, you can use the following action groups:

- Computer tool – Enables interactions with user interfaces (clicking, typing, scrolling)

- Text editor tool – Provides capabilities to edit and manipulate files

- Bash – Allows execution of built-in Linux commands

Solution overview

An example computer use workflow consists of the following steps:

- Create an Amazon Bedrock agent and use natural language to describe what the agent should do and how it should interact with users, for example: “You are computer use agent capable of using Firefox web browser for web search.”

- Add the computer use action groups supported by Amazon Bedrock Agents to your agent using the CreateAgentActionGroup API.

- Invoke the agent with a user query that requires computer use tools, for example, “What is Amazon Bedrock, can you search the web?”

- The Amazon Bedrock agent uses the tool definitions at its disposal and decides to use the computer action group to take a screenshot of the environment. Using the return control capability of Amazon Bedrock Agents, the agent then responds with the tool or tools that it wants to execute. The return control capability is required for using computer use with Amazon Bedrock Agents.

- The workflow parses the agent response and executes the tool returned in a sandbox environment. The output is given back to the Amazon Bedrock agent for further processing.

- The Amazon Bedrock agent continues to respond with tools at its disposal until the task is complete.
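The steps above amount to a loop: invoke the agent, execute whatever tools it returns, feed the outputs back, and repeat until no more tools are requested. The following is a minimal sketch of that control flow only. The callables `invoke_turn` and `execute_tool`, and the simplified reply shape with `tool_requests` and `final_text` keys, are hypothetical stand-ins for the agent invocation and the sandbox tool execution; they are not the actual Amazon Bedrock Agents response format.

```python
from typing import Any, Callable, Optional


def run_return_control_loop(
    invoke_turn: Callable[[Optional[str], Optional[list]], dict],
    execute_tool: Callable[[str, dict], Any],
    user_query: str,
    max_turns: int = 20,
) -> str:
    """Drive an agent until it stops asking for tools.

    invoke_turn(query, tool_results) is a hypothetical wrapper around the
    agent call; it returns {"tool_requests": [...]} or {"final_text": "..."}.
    execute_tool(name, tool_input) is a hypothetical wrapper that runs one
    tool in the sandbox and returns its output.
    """
    tool_results = None
    for _ in range(max_turns):
        # The first turn sends the user query; later turns send tool outputs.
        query = user_query if tool_results is None else None
        reply = invoke_turn(query, tool_results)

        requests = reply.get("tool_requests", [])
        if not requests:
            # No tools requested: the agent considers the task complete.
            return reply.get("final_text", "")

        # Execute every requested tool and collect outputs for the next turn.
        tool_results = [
            {"id": req["id"], "output": execute_tool(req["name"], req["input"])}
            for req in requests
        ]
    raise RuntimeError("agent did not finish within max_turns")
```

Capping the loop with `max_turns` is a design choice for this sketch: it keeps a misbehaving agent from requesting tools indefinitely.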

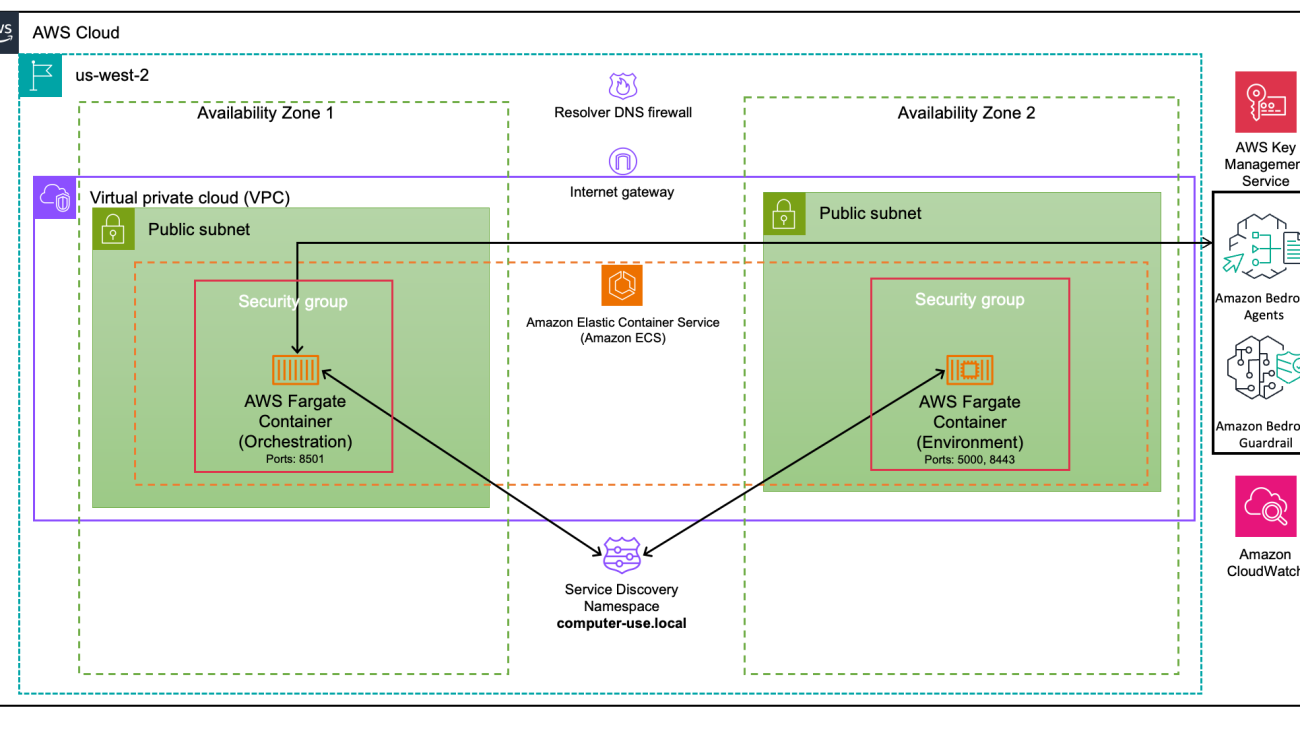

You can recreate this example in the us-west-2 AWS Region with the AWS Cloud Development Kit (AWS CDK) by following the instructions in the GitHub repository. This demo deploys a containerized application using AWS Fargate across two Availability Zones in the us-west-2 Region. The infrastructure operates within a virtual private cloud (VPC) containing public subnets in each Availability Zone, with an internet gateway providing external connectivity. The architecture is complemented by essential supporting services, including AWS Key Management Service (AWS KMS) for security and Amazon CloudWatch for monitoring, creating a resilient, serverless container environment that alleviates the need to manage underlying infrastructure while maintaining robust security and high availability.

The following diagram illustrates the solution architecture.

At the core of our solution are two Fargate containers managed through Amazon Elastic Container Service (Amazon ECS), each protected by its own security group. The first is our orchestration container, which not only handles the communication between Amazon Bedrock Agents and end users, but also orchestrates the workflow that enables tool execution. The second is our environment container, which serves as a secure sandbox where the Amazon Bedrock agent can safely run its computer use tools. The environment container has limited access to the rest of the ecosystem and the internet. We utilize service discovery to connect Amazon ECS services with DNS names.

The orchestration container includes the following components:

- Streamlit UI – The Streamlit UI that facilitates interaction between the end user and computer use agent

- Return control loop – The workflow responsible for parsing the tools that the agent wants to execute and returning the output of these tools

The environment container includes the following components:

- UI and pre-installed applications – A lightweight UI and pre-installed Linux applications like Firefox that can be used to complete the user’s tasks

- Tool implementation – Code that executes computer use tools, such as “screenshot” or “double-click”, in the environment

- Quart (RESTful) JSON API – A Quart-based JSON API that the orchestration container calls to execute tools in the sandbox environment

The following diagram illustrates these components.

Prerequisites

- Install the AWS Command Line Interface (AWS CLI) and set up credentials.

- Python 3.11 or later.

- Node.js 14.15.0 or later.

- Install the AWS CDK CLI.

- Enable model access for Anthropic’s Claude 3.5 Sonnet V2 and for Anthropic’s Claude 3.7 Sonnet.

- Boto3 version 1.37.10 or later.

Create an Amazon Bedrock agent with computer use

You can use the following code sample to create a simple Amazon Bedrock agent with computer, bash, and text editor action groups. It is crucial to provide a compatible action group signature when using Anthropic’s Claude 3.5 Sonnet V2 and Anthropic’s Claude 3.7 Sonnet as highlighted here.

| Model | Action group signatures |
| --- | --- |
| Anthropic’s Claude 3.5 Sonnet V2 | computer_20241022, text_editor_20241022, bash_20241022 |
| Anthropic’s Claude 3.7 Sonnet | computer_20250124, text_editor_20250124, bash_20250124 |
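As a rough sketch of how the signatures in the table might be wired into the CreateAgentActionGroup API, the following builds one request per tool and sends each through a client passed in by the caller (for example, one created with `boto3.client("bedrock-agent")`). The parameter names `parentActionGroupSignature` and `parentActionGroupSignatureParams`, the `ANTHROPIC.*` signature values, the display settings, and the action group names are assumptions made for illustration; check them against the current Boto3 reference before use.

```python
# Tool type strings per model, taken from the table above.
# The dictionary keys are hypothetical labels used only in this sketch.
SIGNATURE_TYPES = {
    "claude-3-5-sonnet-v2": {
        "computer": "computer_20241022",
        "text_editor": "text_editor_20241022",
        "bash": "bash_20241022",
    },
    "claude-3-7-sonnet": {
        "computer": "computer_20250124",
        "text_editor": "text_editor_20250124",
        "bash": "bash_20250124",
    },
}

# Assumed parent signature names; verify against the API reference.
PARENT_SIGNATURES = {
    "computer": "ANTHROPIC.Computer",
    "text_editor": "ANTHROPIC.TextEditor",
    "bash": "ANTHROPIC.Bash",
}


def build_action_group_requests(agent_id, model_key, width_px=1024, height_px=768):
    """Return one CreateAgentActionGroup request dict per computer use tool."""
    requests = []
    for tool, tool_type in SIGNATURE_TYPES[model_key].items():
        params = {"type": tool_type}
        if tool == "computer":
            # Assumed display parameters for the sandbox screen.
            params.update({
                "display_width_px": str(width_px),
                "display_height_px": str(height_px),
                "display_number": "1",
            })
        requests.append({
            "agentId": agent_id,
            "agentVersion": "DRAFT",
            "actionGroupName": f"{tool}_action_group",
            "actionGroupState": "ENABLED",
            "parentActionGroupSignature": PARENT_SIGNATURES[tool],
            "parentActionGroupSignatureParams": params,
        })
    return requests


def add_computer_use_action_groups(client, agent_id, model_key):
    """Send each request through a bedrock-agent client supplied by the caller."""
    return [
        client.create_agent_action_group(**request)
        for request in build_action_group_requests(agent_id, model_key)
    ]
```

Keeping the request construction separate from the API call makes it easy to check that the signature type matches the chosen model before anything is sent to AWS.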

Example use case

In this post, we demonstrate an example where we use Amazon Bedrock Agents with the computer use capability to complete a web form. In the example, the computer use agent can also switch Firefox tabs to interact with a customer relationship management (CRM) agent to get the required information to complete the form. Although this example uses a sample CRM application as the system of record, the same approach works with Salesforce, SAP, Workday, or other systems of record with the appropriate authentication frameworks in place.

In the demonstrated use case, you can observe how well the Amazon Bedrock agent performed with computer use tools. Our implementation completed the customer ID, customer name, and email by visually examining the Excel data. However, for the overview, it decided to select the cell and copy the data, because the information wasn’t completely visible on the screen. Finally, the CRM agent was used to get additional information on the customer.

Best practices

The following are some ways you can improve the performance for your use case:

- Implement Security Groups, Network Access Control Lists (NACLs), and Amazon Route 53 Resolver DNS Firewall domain lists to control access to the sandbox environment.

- Apply AWS Identity and Access Management (IAM) and the principle of least privilege to assign limited permissions to the sandbox environment.

- When providing the Amazon Bedrock agent with instructions, be concise and direct. Specify simple, well-defined tasks and provide explicit instructions for each step.

- Understand computer use limitations as highlighted by Anthropic here.

- Complement return of control with user confirmation to help safeguard your application from malicious prompt injections by requesting confirmation from your users before invoking a computer use tool.

- Use multi-agent collaboration and computer use with Amazon Bedrock Agents to automate complex workflows.

- Implement safeguards by filtering harmful multimodal content based on your responsible AI policies for your application by associating Amazon Bedrock Guardrails with your agent.

Considerations

The computer use feature is made available to you as a beta service as defined in the AWS Service Terms. It is subject to your agreement with AWS and the AWS Service Terms, and the applicable model EULA. Computer use poses unique risks that are distinct from standard API features or chat interfaces. These risks are heightened when using the computer use feature to interact with the internet. To minimize risks, consider taking precautions such as:

- Operate computer use functionality in a dedicated virtual machine or container with minimal privileges to minimize direct system exploits or accidents

- To help prevent information theft, avoid giving the computer use API access to sensitive accounts or data

- Limit the computer use API’s internet access to required domains to reduce exposure to malicious content

- To enforce proper oversight, keep a human in the loop for sensitive tasks (such as making decisions that could have meaningful real-world consequences) and for anything requiring affirmative consent (such as accepting cookies, executing financial transactions, or agreeing to terms of service)
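Domain restrictions are best enforced at the network layer, but an application-level check in the orchestration code can act as an additional guard. The following is a minimal sketch of such a check, assuming a hypothetical allow-list and a hypothetical `is_allowed` helper that would be called before a tool navigates to a URL; it is not part of the demo repository.

```python
from urllib.parse import urlsplit

# Hypothetical allow-list for illustration; use the domains your workflow needs.
ALLOWED_DOMAINS = {"aws.amazon.com"}


def is_allowed(url: str, allowed_domains: set[str] = ALLOWED_DOMAINS) -> bool:
    """Return True only for http(s) URLs whose host is an allowed domain
    or a subdomain of one."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        return False
    host = (parts.hostname or "").lower().rstrip(".")
    # Match on a label boundary so "evil-aws.amazon.com.example" is rejected.
    return any(host == d or host.endswith("." + d) for d in allowed_domains)
```

Matching on the exact domain or a dot-prefixed suffix avoids accepting look-alike hosts that merely contain an allowed name.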

Any content that you enable Anthropic’s Claude to see or access can potentially override instructions or cause the model to make mistakes or perform unintended actions. Taking proper precautions, such as isolating Anthropic’s Claude from sensitive surfaces, is essential – including to avoid risks related to prompt injection. Before enabling or requesting permissions necessary to enable computer use features in your own products, inform end users of any relevant risks, and obtain their consent as appropriate.

Clean up

When you are done using this solution, make sure to clean up all the resources. Follow the instructions in the provided GitHub repository.

Conclusion

Organizations across industries face significant challenges with cross-application workflows that traditionally require manual data entry or complex custom integrations. The integration of Anthropic’s computer use capability with Amazon Bedrock Agents represents a transformative approach to these challenges.

By using Amazon Bedrock Agents as the orchestration layer, organizations can alleviate the need for custom API development for each application, benefit from comprehensive logging and tracing capabilities essential for enterprise deployment, and implement automation solutions quickly.

As you begin exploring computer use with Amazon Bedrock Agents, consider workflows in your organization that could benefit from this approach. From invoice processing to customer onboarding, HR documentation to compliance reporting, the potential applications are vast and transformative.

We’re excited to see how you will use Amazon Bedrock Agents with the computer use capability to securely streamline operations and reimagine business processes through AI-driven automation.

Resources

To learn more, refer to the following resources:

- Computer use with Amazon Bedrock Agents guide

- Computer use with Amazon Bedrock Agents implementation

- Computer use with Anthropic’s Claude implementation

- Computer use with Anthropic guide

- Amazon Bedrock Agent Samples

About the Authors

Eashan Kaushik is a Specialist Solutions Architect AI/ML at Amazon Web Services. He is driven by creating cutting-edge generative AI solutions while prioritizing a customer-centric approach to his work. Before this role, he obtained an MS in Computer Science from NYU Tandon School of Engineering. Outside of work, he enjoys sports, lifting, and running marathons.

Maira Ladeira Tanke is a Tech Lead for Agentic workloads in Amazon Bedrock at AWS, where she enables customers on their journey to develop autonomous AI systems. She has over 10 years of experience in AI/ML. At AWS, Maira partners with enterprise customers to accelerate the adoption of agentic applications using Amazon Bedrock, helping organizations harness the power of foundation models to drive innovation and business transformation. In her free time, Maira enjoys traveling, playing with her cat, and spending time with her family someplace warm.

Raj Pathak is a Principal Solutions Architect and Technical advisor to Fortune 50 and Mid-Sized FSI (Banking, Insurance, Capital Markets) customers across Canada and the United States. Raj specializes in Machine Learning with applications in Generative AI, Natural Language Processing, Intelligent Document Processing, and MLOps.

Adarsh Srikanth is a Software Development Engineer at Amazon Bedrock, where he develops AI agent services. He holds a master’s degree in computer science from USC and brings three years of industry experience to his role. He spends his free time exploring national parks, discovering new hiking trails, and playing various racquet sports.

Abishek Kumar is a Senior Software Engineer at Amazon, bringing over 6 years of valuable experience across both retail and AWS organizations. He has demonstrated expertise in developing generative AI and machine learning solutions, specifically contributing to key AWS services including SageMaker Autopilot, SageMaker Canvas, and AWS Bedrock Agents. Throughout his career, Abishek has shown passion for solving complex problems and architecting large-scale systems that serve millions of customers worldwide. When not immersed in technology, he enjoys exploring nature through hiking and traveling adventures with his wife.

Krishna Gourishetti is a Senior Software Engineer for the Bedrock Agents team in AWS. He is passionate about building scalable software solutions that solve customer problems. In his free time, Krishna loves to go on hikes.

Evaluating RAG applications with Amazon Bedrock knowledge base evaluation

Organizations building and deploying AI applications, particularly those using large language models (LLMs) with Retrieval Augmented Generation (RAG) systems, face a significant challenge: how to evaluate AI outputs effectively throughout the application lifecycle. As these AI technologies become more sophisticated and widely adopted, maintaining consistent quality and performance becomes increasingly complex.

Traditional AI evaluation approaches have significant limitations. Human evaluation, although thorough, is time-consuming and expensive at scale. Automated metrics are fast and cost-effective, but they can only evaluate the correctness of an AI response, without capturing other evaluation dimensions or explaining why an answer is problematic. Furthermore, traditional automated evaluation metrics typically require ground truth data, which is difficult to obtain for many AI applications. For applications involving open-ended generation or retrieval augmented systems in particular, defining a single “correct” answer is practically impossible. Finally, metrics such as ROUGE and F1 can be fooled by shallow linguistic similarities (word overlap) between the ground truth and the LLM response, even when the actual meaning is very different. These challenges make it difficult for organizations to maintain consistent quality standards across their AI applications, particularly for generative AI outputs.
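To make the word-overlap problem concrete, the following is a minimal sketch of a token-level F1 score. It is an illustration only, not the metric implementation used by any Amazon Bedrock feature, and the example sentences are hypothetical.

```python
from collections import Counter


def token_f1(reference: str, candidate: str) -> float:
    """Token-level F1 between two strings (lowercased, whitespace tokens)."""
    ref_tokens = reference.lower().split()
    cand_tokens = candidate.lower().split()
    # Multiset intersection counts each shared token at most as often as it appears in both.
    overlap = sum((Counter(ref_tokens) & Counter(cand_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


# Hypothetical example sentences.
reference = "the drug is safe for children"
contradiction = "the drug is not safe for children"    # opposite meaning, high overlap
paraphrase = "kids can take this medicine without risk"  # same meaning, no overlap

print(round(token_f1(reference, contradiction), 3))  # 0.923
print(token_f1(reference, paraphrase))               # 0.0
```

The contradicting answer scores far higher than the faithful paraphrase, which is the failure mode that an LLM-based judge is meant to avoid.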

Amazon Bedrock has recently launched two new capabilities to address these evaluation challenges: LLM-as-a-judge (LLMaaJ) under Amazon Bedrock Evaluations and a brand new RAG evaluation tool for Amazon Bedrock Knowledge Bases. Both features rely on the same LLM-as-a-judge technology under the hood, with slight differences depending on whether a model or a RAG application built with Amazon Bedrock Knowledge Bases is being evaluated. These evaluation features combine the speed of automated methods with human-like nuanced understanding, enabling organizations to:

- Assess AI model outputs across various tasks and contexts

- Evaluate multiple dimensions of AI performance simultaneously

- Systematically assess both retrieval and generation quality in RAG systems

- Scale evaluations across thousands of responses while maintaining quality standards

These capabilities integrate seamlessly into the AI development lifecycle, empowering organizations to improve model and application quality, promote responsible AI practices, and make data-driven decisions about model selection and application deployment.

This post focuses on RAG evaluation with Amazon Bedrock Knowledge Bases, provides a guide to set up the feature, discusses nuances to consider as you evaluate your prompts and responses, and finally discusses best practices. By the end of this post, you will understand how the latest Amazon Bedrock evaluation features can streamline your approach to AI quality assurance, enabling more efficient and confident development of RAG applications.

Key features

Before diving into the implementation details, we examine the key features that make RAG evaluation on Amazon Bedrock Knowledge Bases particularly powerful:

- Amazon Bedrock Evaluations

  - Evaluate Amazon Bedrock Knowledge Bases directly within the service

  - Systematically evaluate both retrieval and generation quality in RAG systems to change knowledge base build-time parameters or runtime parameters

- Comprehensive, understandable, and actionable evaluation metrics

  - Retrieval metrics: Assess context relevance and coverage using an LLM as a judge

  - Generation quality metrics: Measure correctness, faithfulness (to detect hallucinations), completeness, and more

  - Provide natural language explanations for each score in the output and on the console

  - Compare results across multiple evaluation jobs for both retrieval and generation

  - Metric scores are normalized to a range of 0 to 1

- Scalable and efficient assessment

  - Scale evaluation across thousands of responses

  - Reduce costs compared to manual evaluation while maintaining high quality standards

- Flexible evaluation framework

  - Support both ground truth and reference-free evaluations

  - Equip users to select from a variety of metrics for evaluation

  - Support evaluating fine-tuned or distilled models on Amazon Bedrock

  - Provide a choice of evaluator models

- Model selection and comparison

  - Compare evaluation jobs across different generating models

  - Facilitate data-driven optimization of model performance

- Responsible AI integration

  - Incorporate built-in responsible AI metrics such as harmfulness, answer refusal, and stereotyping

  - Seamlessly integrate with Amazon Bedrock Guardrails

These features enable organizations to comprehensively assess AI performance, promote responsible AI development, and make informed decisions about model selection and optimization throughout the AI application lifecycle. Now that we’ve explained the key features, we examine how these capabilities come together in a practical implementation.

Feature overview

The Amazon Bedrock Knowledge Bases RAG evaluation feature provides a comprehensive, end-to-end solution for assessing and optimizing RAG applications. This automated process uses the power of LLMs to evaluate both retrieval and generation quality, offering insights that can significantly improve your AI applications.

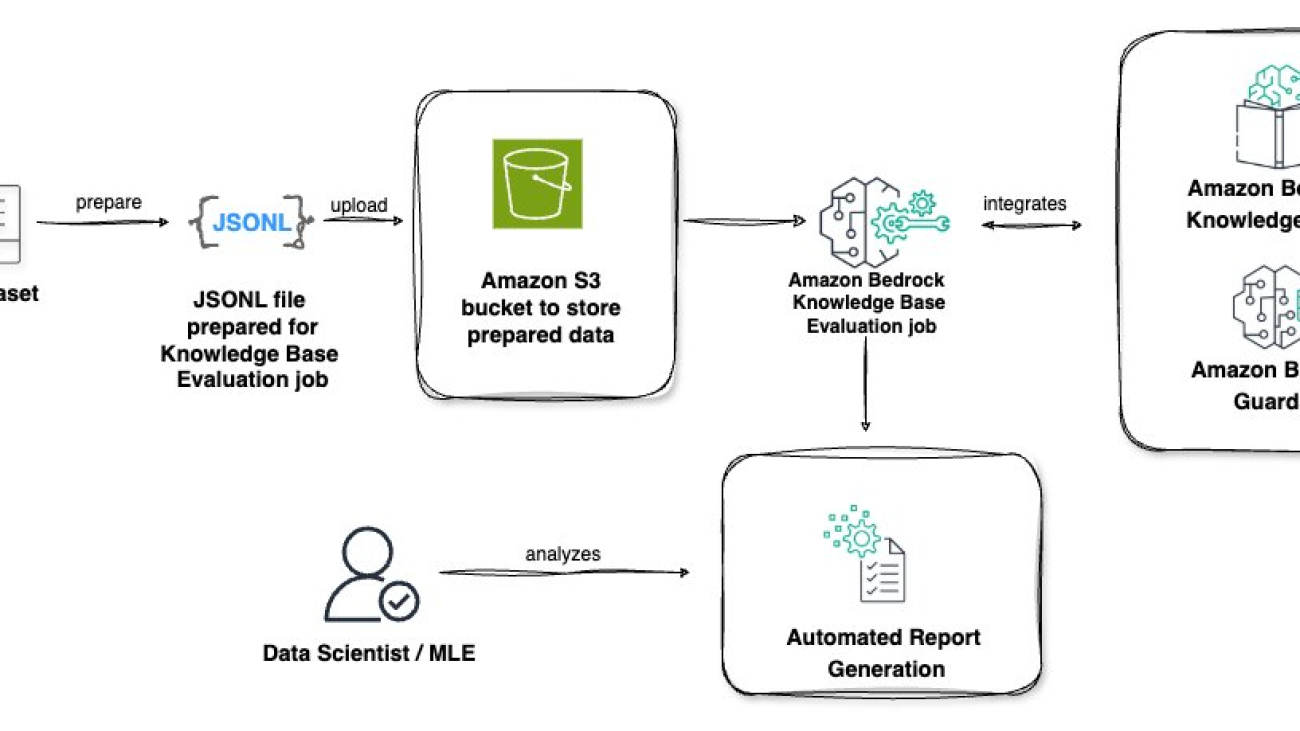

The workflow is as follows, as shown moving from left to right in the following architecture diagram:

- Prompt dataset – Prepared set of prompts, optionally including ground truth responses

- JSONL file – Prompt dataset converted to JSONL format for the evaluation job

- Amazon Simple Storage Service (Amazon S3) bucket – Storage for the prepared JSONL file

- Amazon Bedrock Knowledge Bases RAG evaluation job – Core component that processes the data, integrating with Amazon Bedrock Guardrails and Amazon Bedrock Knowledge Bases.

- Automated report generation – Produces a comprehensive report with detailed metrics and insights at individual prompt or conversation level

- Report analysis – Review of the report to derive actionable insights for RAG system optimization

Designing holistic RAG evaluations: Balancing cost, quality, and speed

RAG system evaluation requires a balanced approach that considers three key aspects: cost, speed, and quality. Although Amazon Bedrock Evaluations primarily focuses on quality metrics, understanding all three components helps create a comprehensive evaluation strategy. The following diagram shows how these components interact and feed into a comprehensive evaluation strategy, and the next sections examine each component in detail.

Cost and speed considerations

The efficiency of RAG systems depends on model selection and usage patterns. Costs are primarily driven by data retrieval and token consumption during retrieval and generation, and speed depends on model size and complexity as well as prompt and context size. For applications requiring high-performance content generation with lower latency and costs, model distillation can be an effective way to create a generator model: you can create smaller, faster models that maintain the quality of larger models for specific use cases.

Quality assessment framework

Amazon Bedrock knowledge base evaluation provides comprehensive insights through various quality dimensions:

- Technical quality through metrics such as context relevance and faithfulness

- Business alignment through correctness and completeness scores

- User experience through helpfulness and logical coherence measurements

- Responsible AI through built-in metrics such as harmfulness, stereotyping, and answer refusal

Establishing baseline understanding

Begin your evaluation process by choosing default configurations in your knowledge base (vector or graph database), such as default chunking strategies, embedding models, and prompt templates. These are just some of the possible options. This approach establishes a baseline performance, helping you understand your RAG system’s current effectiveness across available evaluation metrics before optimization. Next, create a diverse evaluation dataset. Make sure this dataset contains a diverse set of queries and knowledge sources that accurately reflect your use case. The diversity of this dataset will provide a comprehensive view of your RAG application performance in production.

Iterative improvement process

Understanding how different components affect these metrics enables informed decisions about:

- Knowledge base configuration (chunking strategy or embedding size or model) and inference parameter refinement

- Retrieval strategy modifications (semantic or hybrid search)

- Prompt engineering refinements

- Model selection and inference parameter configuration

- Choice between different vector stores including graph databases

Continuous evaluation and improvement

Implement a systematic approach to ongoing evaluation:

- Schedule regular offline evaluation cycles aligned with knowledge base updates

- Track metric trends over time to identify areas for improvement

- Use insights to guide knowledge base refinements and generator model customization and selection

Prerequisites

To use the knowledge base evaluation feature, make sure that you have satisfied the following requirements:

- An active AWS account.

- Selected evaluator and generator models enabled in Amazon Bedrock. You can confirm that the models are enabled for your account on the Model access page of the Amazon Bedrock console.

  - Confirm the AWS Regions where the model is available and quotas.

- Complete the knowledge base evaluation prerequisites related to AWS Identity and Access Management (IAM) creation and add permissions for an S3 bucket to access and write output data.

  - You also need to set up and enable CORS on your S3 bucket.

- Have an Amazon Bedrock knowledge base created and sync your data such that it’s ready to be used by a knowledge base evaluation job.

- If you’re using a custom model instead of an on-demand model for your generator model, make sure you have sufficient quota for running a Provisioned Throughput during inference. Go to the Service Quotas console and check the following quotas:

  - Model units no-commitment Provisioned Throughputs across custom models

  - Model units per provisioned model for [your custom model name]

  - Both fields need to have enough quota to support your Provisioned Throughput model unit. Request a quota increase if necessary to accommodate your expected inference workload.

Prepare input dataset

To prepare your dataset for a knowledge base evaluation job, you need to follow two important steps:

- Dataset requirements:

  - Maximum 1,000 conversations per evaluation job (1 conversation is contained in the conversationTurns key in the dataset format)

  - Maximum 5 turns (prompts) per conversation

  - File must use JSONL format (.jsonl extension)

  - Each line must be a valid JSON object and complete prompt

  - Stored in an S3 bucket with CORS enabled

- Use the following format:

  - Retrieve only evaluation jobs.

    Special note: On March 20, 2025, the referenceContexts key will change to referenceResponses. The content of referenceResponses should be the expected ground truth answer that an end-to-end RAG system would have generated given the prompt, not the expected passages/chunks retrieved from the Knowledge Base.

  - Retrieve and generate evaluation jobs
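The dataset requirements above can be checked before uploading the file to Amazon S3. The following is a minimal sketch under stated assumptions: the conversationTurns key and the referenceResponses key come from this post, but the nested content/text layout inside each turn and the helper names (make_turn, to_jsonl, validate_jsonl) are our own illustration and should be verified against the current dataset format documentation.

```python
import json

MAX_CONVERSATIONS = 1000  # per evaluation job, from the requirements above
MAX_TURNS = 5             # prompts per conversation, from the requirements above


def make_turn(prompt, reference=None, reference_key="referenceResponses"):
    """Build one conversation turn. The nested content/text layout is an assumption."""
    turn = {"prompt": {"content": [{"text": prompt}]}}
    if reference is not None:
        turn[reference_key] = [{"content": [{"text": reference}]}]
    return turn


def to_jsonl(conversations):
    """Serialize conversations (each a list of turns) to JSONL, one conversation per line."""
    return "\n".join(json.dumps({"conversationTurns": turns}) for turns in conversations)


def validate_jsonl(text):
    """Return a list of problems; an empty list means the stated limits are met."""
    problems = []
    lines = [line for line in text.splitlines() if line.strip()]
    if len(lines) > MAX_CONVERSATIONS:
        problems.append(f"{len(lines)} conversations exceeds {MAX_CONVERSATIONS}")
    for number, line in enumerate(lines, start=1):
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            problems.append(f"line {number}: not valid JSON")
            continue
        turns = record.get("conversationTurns") if isinstance(record, dict) else None
        if not isinstance(turns, list) or not turns:
            problems.append(f"line {number}: missing conversationTurns")
        elif len(turns) > MAX_TURNS:
            problems.append(f"line {number}: {len(turns)} turns exceeds {MAX_TURNS}")
    return problems


# Hypothetical single-turn conversation with a placeholder ground truth answer.
dataset = to_jsonl([[make_turn("What are some risks associated with Amazon's expansion?",
                               "placeholder ground truth answer")]])
print(validate_jsonl(dataset))  # []
```

Ground truth is optional, so make_turn omits the reference key when no reference is supplied.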

Start a knowledge base RAG evaluation job using the console

Amazon Bedrock Evaluations provides you with an option to run an evaluation job through a guided user interface on the console. To start an evaluation job through the console, follow these steps:

- On the Amazon Bedrock console, under Inference and Assessment in the navigation pane, choose Evaluations and then choose Knowledge Bases.

- Choose Create, as shown in the following screenshot.

- Give an Evaluation name, a Description, and choose an Evaluator model, as shown in the following screenshot. This model will be used as a judge to evaluate the response of the RAG application.

- Choose the knowledge base and the evaluation type, as shown in the following screenshot. Choose Retrieval only if you want to evaluate only the retrieval component and Retrieval and response generation if you want to evaluate the end-to-end retrieval and response generation. Select a model, which will be used for generating responses in this evaluation job.

- (Optional) To change inference parameters, choose configurations. You can update or experiment with different values of temperature, top-P, update knowledge base prompt templates, associate guardrails, update search strategy, and configure numbers of chunks retrieved.

The following screenshot shows the Configurations screen.

- Choose the Metrics you would like to use to evaluate the RAG application, as shown in the following screenshot.

- Provide the S3 URI, as shown in step 3 for evaluation data and for evaluation results. You can use the Browse S3 option.

- Select a service (IAM) role with the proper permissions. This includes service access to Amazon Bedrock, the S3 buckets in the evaluation job, the knowledge base in the job, and the models being used in the job. You can also create a new IAM role in the evaluation setup and the service will automatically give the role the proper permissions for the job.

- Choose Create.

- You can check that the evaluation job has the In Progress status on the Knowledge Base evaluations screen, as shown in the following screenshot.

- Wait for the job to be complete. This could be 10–15 minutes for a small job or a few hours for a large job with hundreds of long prompts and all metrics selected. When the evaluation job has been completed, the status will show as Completed, as shown in the following screenshot.

- When it’s complete, select the job, and you’ll be able to observe the details of the job. The following screenshot is the Metric summary.

- You should also observe a directory with the evaluation job name in the Amazon S3 path. You can find the output S3 path from your job results page in the evaluation summary section.

- You can compare two evaluation jobs to gain insights about how different configurations or selections are performing. You can view a radar chart comparing performance metrics between two RAG evaluation jobs, making it simple to visualize relative strengths and weaknesses across different dimensions, as shown in the following screenshot.

On the Evaluation details tab, examine score distributions through histograms for each evaluation metric, showing average scores and percentage differences. Hover over the histogram bars to check the number of conversations in each score range, helping identify patterns in performance, as shown in the following screenshots.
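The same kind of comparison can be approximated offline once you have the average score per metric for two jobs. The following is a minimal sketch; the metric names and scores are hypothetical, and the function is our own illustration rather than part of any AWS SDK.

```python
def compare_jobs(baseline, candidate):
    """Per-metric difference between two jobs, for metrics present in both."""
    comparison = {}
    for metric in sorted(baseline.keys() & candidate.keys()):
        base, cand = baseline[metric], candidate[metric]
        # Percentage change is undefined when the baseline score is 0.
        pct_change = (cand - base) / base * 100 if base else None
        comparison[metric] = {
            "baseline": base,
            "candidate": cand,
            "delta": cand - base,
            "pct_change": pct_change,
        }
    return comparison


# Hypothetical average scores (0 to 1) from two evaluation jobs.
job_a = {"correctness": 0.80, "completeness": 0.90, "faithfulness": 0.85}
job_b = {"correctness": 0.88, "completeness": 0.87, "faithfulness": 0.85}

for metric, row in compare_jobs(job_a, job_b).items():
    print(f"{metric}: {row['baseline']:.2f} -> {row['candidate']:.2f} ({row['pct_change']:+.1f}%)")
```

Only metrics present in both jobs are compared, so jobs evaluated with different metric selections still produce a usable result.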

Start a knowledge base evaluation job using Python SDK and APIs

To use the Python SDK for creating a knowledge base evaluation job, follow these steps. First, set up the required configurations:

For retrieval-only evaluation, create a job that focuses on assessing the quality of retrieved contexts:

For a complete evaluation of both retrieval and generation, use this configuration:
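The two job types described above differ mainly in the knowledge base section of the request and in the metrics selected. The following is a minimal sketch that builds the request as a plain dictionary, so it can be inspected without calling AWS. The field names reflect our reading of the boto3 create_evaluation_job request shape and the built-in metric names; treat them, the default task type, and every ARN and URI shown as assumptions to verify against the current API reference.

```python
def build_rag_evaluation_request(job_name, role_arn, knowledge_base_id, evaluator_model,
                                 input_s3_uri, output_s3_uri, generator_model_arn=None,
                                 number_of_results=10, search_type="HYBRID",
                                 task_type="Custom"):
    """Build a request dict for a retrieval-only or retrieve-and-generate job.

    Passing generator_model_arn switches to retrieve-and-generate evaluation.
    Field names and metric names are assumptions; check the API reference.
    """
    retrieval = {"vectorSearchConfiguration": {"numberOfResults": number_of_results,
                                               "overrideSearchType": search_type}}
    if generator_model_arn is None:
        kb_config = {"retrieveConfig": {
            "knowledgeBaseId": knowledge_base_id,
            "knowledgeBaseRetrievalConfiguration": retrieval}}
        metrics = ["Builtin.ContextRelevance", "Builtin.ContextCoverage"]
    else:
        kb_config = {"retrieveAndGenerateConfig": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": generator_model_arn,
                "retrievalConfiguration": retrieval}}}
        metrics = ["Builtin.Correctness", "Builtin.Completeness", "Builtin.Faithfulness"]
    return {
        "jobName": job_name,
        "roleArn": role_arn,
        "applicationType": "RagEvaluation",
        "inferenceConfig": {"ragConfigs": [{"knowledgeBaseConfig": kb_config}]},
        "outputDataConfig": {"s3Uri": output_s3_uri},
        "evaluationConfig": {"automated": {
            "datasetMetricConfigs": [{
                "taskType": task_type,
                "dataset": {"name": "RagDataset",
                            "datasetLocation": {"s3Uri": input_s3_uri}},
                "metricNames": metrics}],
            "evaluatorModelConfig": {
                "bedrockEvaluatorModels": [{"modelIdentifier": evaluator_model}]}}},
    }


# Placeholder identifiers; replace with your own resources.
request = build_rag_evaluation_request(
    job_name="rag-eval-retrieval-only",
    role_arn="arn:aws:iam::111122223333:role/example-eval-role",
    knowledge_base_id="EXAMPLEKBID",
    evaluator_model="example-evaluator-model-id",
    input_s3_uri="s3://example-bucket/input/dataset.jsonl",
    output_s3_uri="s3://example-bucket/output/",
)
# With boto3 installed and credentials configured, the job would be started with:
#   boto3.client("bedrock").create_evaluation_job(**request)
print(sorted(request))
```

Keeping the request construction separate from the API call makes it simple to log or diff the configuration of each job, which helps when comparing jobs later.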

To monitor the progress of your evaluation job, use this configuration:
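One way to monitor a job is to poll its status until it reaches a terminal state. The following is a minimal sketch that takes the status lookup as a callable, so the loop can be exercised without AWS access. The set of terminal statuses and the polling interval are assumptions; with boto3, the callable would typically wrap get_evaluation_job with the job ARN as jobIdentifier and read the status field of the response.

```python
import time

# Assumed terminal statuses for an evaluation job.
TERMINAL_STATUSES = {"Completed", "Failed", "Stopped"}


def wait_for_job(get_status, poll_seconds=60, max_polls=240, sleep=time.sleep):
    """Poll get_status() until a terminal status is returned, then return it."""
    for _ in range(max_polls):
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        sleep(poll_seconds)
    raise TimeoutError("evaluation job did not reach a terminal status in time")


# With boto3 (not executed here), the lookup could be:
#   client = boto3.client("bedrock")
#   get_status = lambda: client.get_evaluation_job(jobIdentifier=job_arn)["status"]

# Simulated status sequence so the sketch runs without AWS access.
simulated = iter(["InProgress", "InProgress", "Completed"])
print(wait_for_job(lambda: next(simulated), sleep=lambda seconds: None))  # Completed
```

Because a small job can take 10–15 minutes and a large one a few hours, a polling interval of a minute or more is usually sufficient.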

Interpreting results

After your evaluation jobs are completed, Amazon Bedrock RAG evaluation provides a detailed comparative dashboard across the evaluation dimensions.

The evaluation dashboard includes comprehensive metrics, but we focus on one example, the completeness histogram shown below. This visualization represents how well responses cover all aspects of the questions asked. In our example, the distribution is concentrated at the high end of the scale, with an average score of 0.921. The majority of responses (15) scored above 0.9, while a small number fell in the 0.5-0.8 range. This type of distribution helps quickly identify whether your RAG system has consistent performance or whether there are specific cases needing attention.
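If you export per-conversation scores from the job output, a similar histogram can be reproduced offline. The following is a minimal sketch; the scores are hypothetical and the bin layout (ten equal-width bins over 0 to 1) is our assumption, not necessarily what the console uses.

```python
from statistics import mean


def score_histogram(scores, bins=10):
    """Count scores in equal-width bins over [0, 1]; a score of 1.0 lands in the last bin."""
    counts = [0] * bins
    for score in scores:
        index = min(int(score * bins), bins - 1)
        counts[index] += 1
    return counts


def needs_attention(scores, threshold=0.8):
    """Indexes of conversations scoring below the threshold."""
    return [i for i, score in enumerate(scores) if score < threshold]


# Hypothetical completeness scores for six conversations.
scores = [0.95, 1.0, 0.92, 0.75, 0.5, 1.0]

print(score_histogram(scores))   # [0, 0, 0, 0, 0, 1, 0, 1, 0, 4]
print(round(mean(scores), 3))    # 0.853
print(needs_attention(scores))   # [3, 4]
```

The list of low-scoring indexes is a starting point for reading the evaluator explanations for those specific conversations.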

Selecting specific score ranges in the histogram reveals detailed conversation analyses. For each conversation, you can examine the input prompt, generated response, number of retrieved chunks, ground truth comparison, and most importantly, the detailed score explanation from the evaluator model.

Consider this example response that scored 0.75 for the question, “What are some risks associated with Amazon’s expansion?” Although the generated response provided a structured analysis of operational, competitive, and financial risks, the evaluator model identified missing elements around IP infringement and foreign exchange risks compared to the ground truth. This detailed explanation helps in understanding not just what’s missing, but why the response received its specific score.

This granular analysis is crucial for systematic improvement of your RAG pipeline. By understanding patterns in lower-performing responses and specific areas where context retrieval or generation needs improvement, you can make targeted optimizations to your system—whether that’s adjusting retrieval parameters, refining prompts, or modifying knowledge base configurations.

Best practices for implementation

These best practices help build a solid foundation for your RAG evaluation strategy:

- Design your evaluation strategy carefully, using representative test datasets that reflect your production scenarios and user patterns. If you have large workloads greater than 1,000 prompts per batch, optimize your workload by employing techniques such as stratified sampling to promote diversity and representativeness within your constraints such as time to completion and costs associated with evaluation.

- Schedule periodic batch evaluations aligned with your knowledge base updates and content refreshes because this feature supports batch analysis rather than real-time monitoring.

- Balance metrics with business objectives by selecting evaluation dimensions that directly impact your application’s success criteria.

- Use evaluation insights to systematically improve your knowledge base content and retrieval settings through iterative refinement.

- Maintain clear documentation of evaluation jobs, including the metrics selected and improvements implemented based on results. The job creation configuration settings in your results pages can help keep a historical record here.

- Optimize your evaluation batch size and frequency based on application needs and resource constraints to promote cost-effective quality assurance.

- Structure your evaluation framework to accommodate growing knowledge bases, incorporating both technical metrics and business KPIs in your assessment criteria.
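For the first practice above, stratified sampling can be sketched as follows. This is a minimal illustration under stated assumptions: prompts are dictionaries with a hypothetical topic field used as the stratum, allocation is proportional with rounding down, and each stratum keeps at least one prompt.

```python
import random
from collections import Counter, defaultdict


def stratified_sample(prompts, key, limit=1000, seed=0):
    """Sample up to `limit` prompts, proportionally to the size of each stratum."""
    if len(prompts) <= limit:
        return list(prompts)
    groups = defaultdict(list)
    for prompt in prompts:
        groups[key(prompt)].append(prompt)
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    sample = []
    for name in sorted(groups):
        members = groups[name]
        # Proportional share, rounded down, but never fewer than one prompt.
        quota = max(1, len(members) * limit // len(prompts))
        sample.extend(rng.sample(members, min(quota, len(members))))
    # Rounding down can leave a few slots unused; the slice guards against the
    # opposite case, where many tiny strata push the total over the limit.
    return sample[:limit]


# Hypothetical workload of 3,000 prompts across three topics.
prompts = ([{"id": i, "topic": "billing"} for i in range(2000)]
           + [{"id": i, "topic": "shipping"} for i in range(2000, 2900)]
           + [{"id": i, "topic": "returns"} for i in range(2900, 3000)])

sample = stratified_sample(prompts, key=lambda p: p["topic"], limit=1000)
print(len(sample), Counter(p["topic"] for p in sample))
```

Choosing the stratum (topic, user segment, document source, and so on) is the main design decision; it should reflect the dimensions along which you expect performance to differ.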

To help you dive deeper into the scientific validation of these practices, we’ll be publishing a technical deep-dive post that explores detailed case studies using public datasets and internal AWS validation studies. This upcoming post will examine how our evaluation framework performs across different scenarios and demonstrate its correlation with human judgments across various evaluation dimensions. Stay tuned as we explore the research and validation that powers Amazon Bedrock Evaluations.

Conclusion

Amazon Bedrock knowledge base RAG evaluation enables organizations to confidently deploy and maintain high-quality RAG applications by providing comprehensive, automated assessment of both retrieval and generation components. By combining the benefits of managed evaluation with the nuanced understanding of human assessment, this feature allows organizations to scale their AI quality assurance efficiently while maintaining high standards. Organizations can make data-driven decisions about their RAG implementations, optimize their knowledge bases, and follow responsible AI practices through seamless integration with Amazon Bedrock Guardrails.

Whether you’re building customer service solutions, technical documentation systems, or enterprise knowledge base RAG, Amazon Bedrock Evaluations provides the tools needed to deliver reliable, accurate, and trustworthy AI applications. To help you get started, we’ve prepared a Jupyter notebook with practical examples and code snippets. You can find it on our GitHub repository.

We encourage you to explore these capabilities in the Amazon Bedrock console and discover how systematic evaluation can enhance your RAG applications.

About the Authors

Ishan Singh is a Generative AI Data Scientist at Amazon Web Services, where he helps customers build innovative and responsible generative AI solutions and products. With a strong background in AI/ML, Ishan specializes in building Generative AI solutions that drive business value. Outside of work, he enjoys playing volleyball, exploring local bike trails, and spending time with his wife and dog, Beau.

Ayan Ray is a Senior Generative AI Partner Solutions Architect at AWS, where he collaborates with ISV partners to develop integrated Generative AI solutions that combine AWS services with AWS partner products. With over a decade of experience in Artificial Intelligence and Machine Learning, Ayan has previously held technology leadership roles at AI startups before joining AWS. Based in the San Francisco Bay Area, he enjoys playing tennis and gardening in his free time.

Adewale Akinfaderin is a Sr. Data Scientist–Generative AI, Amazon Bedrock, where he contributes to cutting edge innovations in foundational models and generative AI applications at AWS. His expertise is in reproducible and end-to-end AI/ML methods, practical implementations, and helping global customers formulate and develop scalable solutions to interdisciplinary problems. He has two graduate degrees in physics and a doctorate in engineering.

Evangelia Spiliopoulou is an Applied Scientist in the AWS Bedrock Evaluation group, where the goal is to develop novel methodologies and tools to assist automatic evaluation of LLMs. Her overall work focuses on Natural Language Processing (NLP) research and developing NLP applications for AWS customers, including LLM Evaluations, RAG, and improving reasoning for LLMs. Prior to Amazon, Evangelia completed her Ph.D. at Language Technologies Institute, Carnegie Mellon University.

Jesse Manders is a Senior Product Manager on Amazon Bedrock, the AWS Generative AI developer service. He works at the intersection of AI and human interaction with the goal of creating and improving generative AI products and services to meet our needs. Previously, Jesse held engineering team leadership roles at Apple and Lumileds, and was a senior scientist in a Silicon Valley startup. He has an M.S. and Ph.D. from the University of Florida, and an MBA from the University of California, Berkeley, Haas School of Business.

Exploring Prediction Targets in Masked Pre-Training for Speech Foundation Models

Speech foundation models, such as HuBERT and its variants, are pre-trained on large amounts of unlabeled speech data and then used for a range of downstream tasks. These models use a masked prediction objective, where the model learns to predict information about masked input segments from the unmasked context. The choice of prediction targets in this framework impacts their performance on downstream tasks. For instance, models pre-trained with targets that capture prosody learn representations suited for speaker-related tasks, while those pre-trained with targets that capture phonetics learn…Apple Machine Learning Research

Google’s comments on the U.S. AI Action Plan

Google shares policy recommendations in response to OSTP’s request for information for the U.S. AI Action Plan.

How GoDaddy built a category generation system at scale with batch inference for Amazon Bedrock

This post was co-written with Vishal Singh, Data Engineering Leader on the Data & Analytics team at GoDaddy.

Generative AI solutions have the potential to transform businesses by boosting productivity and improving customer experiences, and using large language models (LLMs) in these solutions has become increasingly popular. However, inference of LLMs as single model invocations or API calls doesn’t scale well with many applications in production.

With batch inference, you can run multiple inference requests asynchronously to process a large number of requests efficiently. You can also use batch inference to improve the performance of model inference on large datasets.

This post provides an overview of a custom solution developed by the Generative AI Innovation Center for GoDaddy, a domain registrar, registry, web hosting, and ecommerce company. GoDaddy seeks to make entrepreneurship more accessible by using generative AI to provide personalized business insights to over 21 million customers, insights that were previously only available to large corporations. In this collaboration, the Generative AI Innovation Center team created an accurate and cost-efficient generative AI–based solution using batch inference in Amazon Bedrock, helping GoDaddy improve their existing product categorization system.

Solution overview

GoDaddy wanted to enhance their product categorization system that assigns categories to products based on their names. For example:

GoDaddy used an out-of-the-box Meta Llama 2 model to generate the product categories for six million products, where a product is identified by an SKU. The generated categories were often incomplete or mislabeled. Moreover, employing an LLM for individual product categorization proved to be costly. GoDaddy therefore sought a more accurate and cost-efficient approach to product categorization to improve their customer experience.

This solution uses the following components to categorize products more accurately and efficiently:

- Batch processing in Amazon Bedrock using the Meta Llama 2 and Anthropic’s Claude models

- Amazon Simple Storage Service (Amazon S3) to store product and output data

- AWS Lambda to orchestrate the Amazon Bedrock models

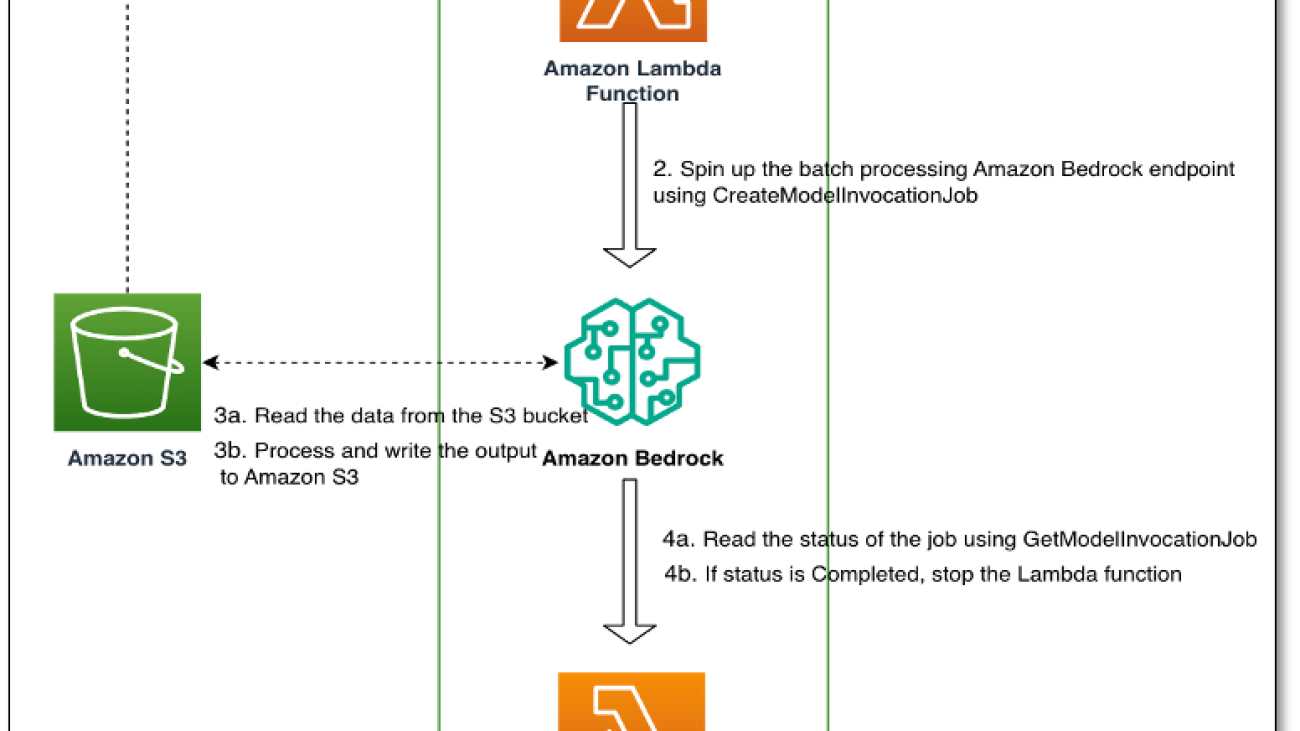

The key steps are illustrated in the following figure:

- A JSONL file containing product data is uploaded to an S3 bucket, triggering the first Lambda function. Amazon Bedrock batch processes this single JSONL file, where each row contains input parameters and prompts. It generates an output JSONL file with a new model_output value appended to each row, corresponding to the input data.

- The Lambda function spins up an Amazon Bedrock batch processing endpoint and passes the S3 file location.

- The Amazon Bedrock endpoint performs the following tasks:

  - It reads the product name data and generates a categorized output, including category, subcategory, season, price range, material, color, product line, gender, and year of first sale.

  - It writes the output to another S3 location.

- The second Lambda function performs the following tasks:

  - It monitors the batch processing job on Amazon Bedrock.

  - It shuts down the endpoint when processing is complete.

The security measures are inherently integrated into the AWS services employed in this architecture. For detailed information, refer to the Security Best Practices section of this post.

We used a dataset that consisted of 30 labeled data points and 100,000 unlabeled test data points. The labeled data points were generated by llama2-7b and verified by a human subject matter expert (SME). As shown in the following screenshot of the sample ground truth, some fields have N/A or missing values, which isn’t ideal because GoDaddy wants a solution with high coverage for downstream predictive modeling. Higher coverage for each possible field can provide more business insights to their customers.

The distribution of the number of words or tokens per SKU shows only mild outliers, which makes the data suitable for bundling many products into a single prompt and can make model responses more efficient.
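Bundling several products into one prompt can be sketched as follows. This is a minimal illustration only: the helper names, the prompt wording, and the product names are hypothetical and are not the prompt template used in the GoDaddy solution.

```python
def pack_products(product_names, n):
    """Split product names into consecutive groups of at most n items."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return [product_names[i:i + n] for i in range(0, len(product_names), n)]


def build_prompt(batch):
    """Build one prompt covering every product in the batch (hypothetical wording)."""
    numbered = "\n".join(f"{i}. {name}" for i, name in enumerate(batch, start=1))
    return ("Categorize each product below and return a JSON list with one "
            "object per product, in the same order.\n" + numbered)


# Hypothetical product names.
products = ["Blue cotton tee", "Leather office chair", "Steel water bottle",
            "Wool winter scarf", "Ceramic coffee mug"]

batches = pack_products(products, n=2)
print(len(batches))  # 3
print(build_prompt(batches[0]))
```

Larger values of n reduce the number of requests but lengthen each prompt and response, so the packing size is a trade-off between cost and output reliability.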

The solution delivers a comprehensive framework for generating insights within GoDaddy’s product categorization system. It’s designed to be compatible with a range of LLMs on Amazon Bedrock, features customizable prompt templates, and supports batch and real-time (online) inferences. Additionally, the framework includes evaluation metrics that can be extended to accommodate changes in accuracy requirements.

In the following sections, we look at the key components of the solution in more detail.

Batch inference

We used Amazon Bedrock for batch inference processing. Amazon Bedrock provides the CreateModelInvocationJob API to create a batch job with a unique job name. This API returns a response containing jobArn. Refer to the following code:
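As an illustration of the call described above, the following minimal sketch builds the request for CreateModelInvocationJob as a plain dictionary. The field names follow our understanding of the boto3 create_model_invocation_job parameters; the job name, role ARN, model ID, and S3 URIs are placeholders, and the actual code used in the solution may differ.

```python
def build_batch_job_request(job_name, role_arn, model_id, input_s3_uri, output_s3_uri):
    """Build the request dict for a batch inference job (field names assumed from boto3)."""
    return {
        "jobName": job_name,  # must be unique
        "roleArn": role_arn,
        "modelId": model_id,
        "inputDataConfig": {"s3InputDataConfig": {"s3Uri": input_s3_uri}},
        "outputDataConfig": {"s3OutputDataConfig": {"s3Uri": output_s3_uri}},
    }


# Placeholder values; replace with your own resources.
batch_request = build_batch_job_request(
    job_name="product-categorization-batch-001",
    role_arn="arn:aws:iam::111122223333:role/example-batch-role",
    model_id="example-model-id",
    input_s3_uri="s3://example-bucket/input/products.jsonl",
    output_s3_uri="s3://example-bucket/output/",
)
# With boto3 installed and credentials configured, the job would be created with:
#   response = boto3.client("bedrock").create_model_invocation_job(**batch_request)
#   job_arn = response["jobArn"]
print(batch_request["jobName"])
```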

We can monitor the job status using GetModelInvocationJob with the jobArn returned on job creation. The following are valid statuses during the lifecycle of a job:

- Submitted – The job is marked Submitted when the JSON file is ready to be processed by Amazon Bedrock for inference.

- InProgress – The job is marked InProgress when Amazon Bedrock starts processing the JSON file.

- Failed – The job is marked Failed if there was an error while processing. The error can be written into the JSON file as a part of modelOutput. If it was a 4xx error, it’s written in the metadata of the Job.

- Completed – The job is marked Completed when the output JSON file is generated for the input JSON file and has been uploaded to the S3 output path submitted as a part of the CreateModelInvocationJob in outputDataConfig.

- Stopped – The job is marked Stopped when a StopModelInvocationJob API is called on a job that is InProgress. A terminal state job (Completed or Failed) can’t be stopped using StopModelInvocationJob.

The following is example code for the GetModelInvocationJob API:
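A minimal polling sketch, assuming a boto3 `bedrock` client is passed in (again, not the authors' original listing): it calls `get_model_invocation_job` with the `jobArn` until the job reaches one of the terminal statuses described above. The function name, polling interval, and retry cap are illustrative choices.

```python
import time

# Terminal statuses taken from the lifecycle list above.
TERMINAL_STATUSES = {"Completed", "Failed", "Stopped"}


def wait_for_batch_job(bedrock_client, job_arn, poll_seconds=60, max_polls=120,
                       sleep=time.sleep):
    """Poll GetModelInvocationJob until the job reaches a terminal status."""
    for _ in range(max_polls):
        response = bedrock_client.get_model_invocation_job(jobIdentifier=job_arn)
        status = response["status"]
        if status in TERMINAL_STATUSES:
            return status
        sleep(poll_seconds)  # still Submitted or InProgress, so wait and retry
    raise TimeoutError(f"Job {job_arn} did not finish after {max_polls} polls")
```

The `sleep` parameter is injectable so the loop can be exercised without real delays.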

When the job is complete, the S3 path specified in s3OutputDataConfig will contain a new folder with an alphanumeric name. The folder contains two files:

- json.out – The following code shows an example of the format:

- <file_name>.jsonl.out – The following screenshot shows an example of the code, containing the successfully processed records. The modelOutput contains a list of categories for a given product name in JSON format.

We then process the jsonl.out file in Amazon S3. This file is parsed using LangChain’s PydanticOutputParser to generate a .csv file. The PydanticOutputParser requires a schema to be able to parse the JSON generated by the LLM. We created a CCData class that contains the list of categories to be generated for each product as shown in the following code example. Because we enable n-packing, we wrap the schema with a List, as defined in List_of_CCData.

We also use OutputFixingParser to handle situations where the initial parsing attempt fails. The following screenshot shows a sample generated .csv file.
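To illustrate the parsing step without reproducing the authors' LangChain code, here is a standard-library stand-in that turns `jsonl.out` records into CSV text. It is a sketch under assumptions: each line is taken to be a JSON record with a `modelOutput` object, the generated text is assumed to sit under a `completion` key (this varies by model), and the field names and the `list_of_dict` wrapper follow the output schema shown later in this post. Records that fail to parse are skipped rather than repaired, which is the gap that OutputFixingParser addresses in the actual solution.

```python
import csv
import io
import json

# Field names taken from the CCData output schema in this post.
FIELDS = ["product_name", "brand", "color", "material", "price", "category",
          "sub_category", "product_line", "gender", "year_of_first_sale", "season"]


def extract_json_object(text):
    """Parse the span from the first '{' to the last '}' in text, or return None."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        return json.loads(text[start:end + 1])
    except json.JSONDecodeError:
        return None


def records_to_rows(jsonl_text, completion_key="completion"):
    """Flatten packed model outputs into one row per product."""
    rows = []
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        completion = str((record.get("modelOutput") or {}).get(completion_key, ""))
        parsed = extract_json_object(completion)
        if not isinstance(parsed, dict):
            continue  # unparseable output: skipped in this sketch
        for item in parsed.get("list_of_dict", []):
            if isinstance(item, dict):
                rows.append({field: str(item.get(field, "")) for field in FIELDS})
    return rows


def rows_to_csv(rows):
    """Serialize rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```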

Prompt engineering

Prompt engineering involves the skillful crafting and refining of input prompts. This process entails choosing the right words, phrases, sentences, punctuation, and separator characters to efficiently use LLMs for diverse applications. Essentially, prompt engineering is about effectively interacting with an LLM. The most effective prompt engineering strategy varies with the specific task and data, which in this case are data card generation and GoDaddy SKUs.

Prompts consist of particular inputs from the user that direct LLMs to produce a suitable response or output based on a specified task or instruction. These prompts include several elements, such as the task or instruction itself, the surrounding context, full examples, and the input text that guides LLMs in crafting their responses. The composition of the prompt will vary based on factors like the specific use case, data availability, and the nature of the task at hand. For example, in a Retrieval Augmented Generation (RAG) use case, we provide additional context and add a user-supplied query in the prompt that asks the LLM to focus on contexts that can answer the query. In a metadata generation use case, we can provide the image and ask the LLM to generate a description and keywords describing the image in a specific format.

In this post, we divide the prompt engineering solution into two steps: output generation and format parsing.

Output generation

The following are best practices and considerations for output generation:

- Provide simple, clear and complete instructions – This is the general guideline for prompt engineering work.

- Use separator characters consistently – In this use case, we use the newline character \n.

- Deal with default output values such as missing – For this use case, we don’t want special values such as N/A or missing, so we put multiple instructions in line, aiming to exclude the default or missing values.

- Use few-shot prompting – Also termed in-context learning, few-shot prompting involves providing a handful of examples, which can be beneficial in helping LLMs understand the output requirements more effectively. In this use case, 0–10 in-context examples were tested for both Llama 2 and Anthropic’s Claude models.

- Use packing techniques – We combined multiple SKU and product names into one LLM query, so that some prompt instructions can be shared across different SKUs for cost and latency optimization. In this use case, 1–10 packing numbers were tested for both Llama 2 and Anthropic’s Claude models.

- Test for good generalization – You should keep a hold-out test set and correct responses to check if your prompt modifications generalize.

- Use additional techniques for Anthropic’s Claude model families – We incorporated the following techniques:

- Enclosing examples in XML tags:

- Using the Human and Assistant annotations:

- Guiding the assistant prompt:

- Use additional techniques for Llama model families – For Llama 2 model families, you can enclose examples in [INST] tags:
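To make the packing, few-shot, and model-specific wrapping ideas above concrete, here is a minimal sketch. It is not the prompt template used in this solution: the function names, the `<example>` tag, and the "Product names:" label are hypothetical, and the wrappers use the commonly documented `Human:`/`Assistant:` turn format for Anthropic's Claude text completions and the `[INST]` tags for Llama 2 chat models.

```python
def chunk(items, size):
    """Split items into consecutive groups of at most `size` elements."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def build_packed_prompts(product_names, pack_size, instruction, examples=()):
    """Build one prompt per group so the instruction is shared across products."""
    shots = "\n".join(f"<example>\n{example}\n</example>" for example in examples)
    prompts = []
    for group in chunk(list(product_names), pack_size):
        parts = [instruction]
        if shots:
            parts.append(shots)  # few-shot (in-context) examples
        # Newline is used consistently as the separator between product names.
        parts.append("Product names:\n" + "\n".join(group))
        prompts.append("\n\n".join(parts))
    return prompts


def wrap_for_claude(prompt):
    """Wrap a prompt in the Human/Assistant turn format."""
    return f"\n\nHuman: {prompt}\n\nAssistant:"


def wrap_for_llama2(prompt):
    """Wrap a prompt in Llama 2 [INST] tags."""
    return f"[INST] {prompt} [/INST]"
```

With a packing number of 5, twelve product names produce three prompts (5, 5, and 2 products), so the instruction and examples are sent three times instead of twelve.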

Format parsing

The following are best practices and considerations for format parsing:

- Refine the prompt with modifiers – Refinement of task instructions typically involves altering the instruction, task, or question part of the prompt. The effectiveness of these techniques varies based on the task and data. Some beneficial strategies in this use case include:

- Role assumption – Ask the model to assume it’s playing a role. For example:

You are a Product Information Manager, Taxonomist, and Categorization Expert who follows instruction well.

- Prompt specificity – Being very specific and providing detailed instructions to the model can help generate better responses for the required task. For example:

EVERY category information needs to be filled based on BOTH product name AND your best guess. If you forget to generate any category information, leave it as missing or N/A, then an innocent people will die.

- Output format description – We provided the JSON format instructions through a JSON string directly, as well as through the few-shot examples indirectly.

- Pay attention to few-shot example formatting – The LLMs (Anthropic’s Claude and Llama) are sensitive to subtle formatting differences. Parsing time improved significantly after several iterations on few-shot example formatting. The final solution is as follows:

- Use additional techniques for Anthropic’s Claude model families – For Anthropic’s Claude models, we instructed the model to format the output in JSON format:

- Use additional techniques for Llama 2 model families – For the Llama 2 model, we instructed it to format the output in JSON format as follows:

Format your output in the JSON format (ensure to escape special character):

The output should be formatted as a JSON instance that conforms to the JSON schema below.

As an example, for the schema {"properties": {"foo": {"title": "Foo", "description": "a list of strings", "type": "array", "items": {"type": "string"}}}, "required": ["foo"]}

the object {"foo": ["bar", "baz"]} is a well-formatted instance of the schema. The object {"properties": {"foo": ["bar", "baz"]}} is not well-formatted.

Here is the output schema:

{"properties": {"list_of_dict": {"title": "List Of Dict", "type": "array", "items": {"$ref": "#/definitions/CCData"}}}, "required": ["list_of_dict"], "definitions": {"CCData": {"title": "CCData", "type": "object", "properties": {"product_name": {"title": "Product Name", "description": "product name, which will be given as input", "type": "string"}, "brand": {"title": "Brand", "description": "Brand of the product inferred from the product name", "type": "string"}, "color": {"title": "Color", "description": "Color of the product inferred from the product name", "type": "string"}, "material": {"title": "Material", "description": "Material of the product inferred from the product name", "type": "string"}, "price": {"title": "Price", "description": "Price of the product inferred from the product name", "type": "string"}, "category": {"title": "Category", "description": "Category of the product inferred from the product name", "type": "string"}, "sub_category": {"title": "Sub Category", "description": "Sub-category of the product inferred from the product name", "type": "string"}, "product_line": {"title": "Product Line", "description": "Product Line of the product inferred from the product name", "type": "string"}, "gender": {"title": "Gender", "description": "Gender of the product inferred from the product name", "type": "string"}, "year_of_first_sale": {"title": "Year Of First Sale", "description": "Year of first sale of the product inferred from the product name", "type": "string"}, "season": {"title": "Season", "description": "Season of the product inferred from the product name", "type": "string"}}}}}

Models and parameters

We used the following prompting parameters:

- Number of packings – 1, 5, 10

- Number of in-context examples – 0, 2, 5, 10

- Format instruction – JSON format pseudo example (shorter length), JSON format full example (longer length)

For Llama 2, the model choices were meta.llama2-13b-chat-v1 or meta.llama2-70b-chat-v1. We used the following LLM parameters:

For Anthropic’s Claude, the model choices were anthropic.claude-instant-v1 and anthropic.claude-v2. We used the following LLM parameters:

The solution is straightforward to extend to other LLMs hosted on Amazon Bedrock, such as Amazon Titan (switch the model ID to amazon.titan-tg1-large, for example), Jurassic (model ID ai21.j2-ultra), and more.

Evaluations

The framework includes evaluation metrics that can be extended further to accommodate changes in accuracy requirements. Currently, it involves five different metrics:

- Content coverage – Measures portions of missing values in the output generation step.

- Parsing coverage – Measures portions of missing samples in the format parsing step:

- Parsing recall on product name – An exact match serves as a lower bound for parsing completeness (parsing coverage is the upper bound for parsing completeness) because in some cases, two virtually identical product names need to be normalized and transformed to be an exact match (for example, “Nike Air Jordan” and “nike. air Jordon”).

- Parsing precision on product name – For an exact match, we use a similar metric to parsing recall, but use precision instead of recall.

- Final coverage – Measures portions of missing values in both output generation and format parsing steps.

- Human evaluation – Focuses on holistic quality evaluation such as accuracy, relevance, and comprehensiveness (richness) of the text generation.
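The programmatic metrics above could be computed along the following lines. This is a sketch under assumptions, not the framework's actual implementation: the set of strings treated as missing, the function names, and the exact denominators (in particular for final coverage, taken here as filled values over all expected values for all input products) are illustrative interpretations of the descriptions above.

```python
# Strings treated as "missing" in this sketch (an assumption).
MISSING = {"", "n/a", "na", "none", "missing"}


def is_filled(value):
    """True when a field holds something other than a missing marker."""
    return str(value).strip().lower() not in MISSING


def content_coverage(rows, fields):
    """Share of non-missing field values among the parsed rows."""
    total = len(rows) * len(fields)
    filled = sum(is_filled(row.get(f, "")) for row in rows for f in fields)
    return filled / total if total else 0.0


def parsing_coverage(rows, input_names):
    """Share of input products for which a row was parsed at all."""
    return len(rows) / len(input_names) if input_names else 0.0


def parsing_recall(rows, input_names):
    """Share of input product names reproduced exactly in the parsed rows."""
    parsed = {row.get("product_name") for row in rows}
    hits = sum(name in parsed for name in input_names)
    return hits / len(input_names) if input_names else 0.0


def parsing_precision(rows, input_names):
    """Share of parsed rows whose product name exactly matches an input name."""
    expected = set(input_names)
    hits = sum(row.get("product_name") in expected for row in rows)
    return hits / len(rows) if rows else 0.0


def final_coverage(rows, fields, input_names):
    """Share of filled values over all values expected for all inputs."""
    total = len(input_names) * len(fields)
    filled = sum(is_filled(row.get(f, "")) for row in rows for f in fields)
    return filled / total if total else 0.0
```

Because the name comparison is an exact match, near-duplicates such as differently cased or punctuated product names count as misses, which is why recall acts as a lower bound on parsing completeness.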

Results

The following are the approximate sample input and output lengths under some best performing settings:

- Input length for Llama 2 model family – 2,068 tokens for 10-shot, 1,585 tokens for 5-shot, 1,319 tokens for 2-shot

- Input length for Anthropic’s Claude model family – 1,314 tokens for 10-shot, 831 tokens for 5-shot, 566 tokens for 2-shot, 359 tokens for zero-shot

- Output length with 5-packing – Approximately 500 tokens

Quantitative results

The following table summarizes our consolidated quantitative results.

- To be concise, the table contains only some of our final recommendations for each model type.

- The metrics used are latency and accuracy.

- The best model and results are highlighted in green color and in bold font.

| Batch process service | Model | Prompt | Batch process latency, 5 packing (test set = 20) | Batch process latency, 5 packing (test set = 5k) | GoDaddy rqmt @ 5k | Near-real-time process latency (1 packing) | Coverage: recall on parsing exact match | Coverage: final content coverage |
|---|---|---|---|---|---|---|---|---|
| Amazon Bedrock batch inference | Llama2-13b | zero-shot | n/a | n/a | 3600s | n/a | n/a | n/a |
| Amazon Bedrock batch inference | Llama2-13b | 5-shot (template12) | 65.4s | 1704s | 3600s | 72/20=3.6s | 92.60% | 53.90% |
| Amazon Bedrock batch inference | Llama2-70b | zero-shot | n/a | n/a | 3600s | n/a | n/a | n/a |
| Amazon Bedrock batch inference | Llama2-70b | 5-shot (template13) | 139.6s | 5299s | 3600s | 156/20=7.8s | 98.30% | 61.50% |
| Amazon Bedrock batch inference | Claude-v1 (instant) | zero-shot (template6) | 29s | 723s | 3600s | 44.8/20=2.24s | 98.50% | 96.80% |
| Amazon Bedrock batch inference | Claude-v1 (instant) | 5-shot (template12) | 30.3s | 644s | 3600s | 51/20=2.6s | 99% | 84.40% |
| Amazon Bedrock batch inference | Claude-v2 | zero-shot (template6) | 82.2s | 1706s | 3600s | 104/20=5.2s | 99% | 84.40% |
| Amazon Bedrock batch inference | Claude-v2 | 5-shot (template14) | 49.1s | 1323s | 3600s | 104/20=5.2s | 99.40% | 90.10% |

The following tables summarize the scaling effect in batch inference.

- When scaling from 5,000 to 100,000 samples (20 times more data), only about eight times more computation time was needed (723s versus 5733s for Claude Instant).

- Performing categorization with individual LLM calls for each product would have increased the inference time for 100,000 products by approximately 40 times compared to the batch processing method.

- The accuracy in coverage remained stable, and cost scaled approximately linearly.

| Batch process service | Model | Prompt | Batch process latency, 5 packing (test set = 20) | Batch process latency, 5 packing (test set = 5k) | GoDaddy rqmt @ 5k | Batch process latency, 5 packing (test set = 100k) | Near-real-time process latency (1 packing) |
|---|---|---|---|---|---|---|---|
| Amazon Bedrock batch | Claude-v1 (instant) | zero-shot (template6) | 29s | 723s | 3600s | 5733s | 44.8/20=2.24s |
| Amazon Bedrock batch | Anthropic’s Claude-v2 | zero-shot (template6) | 82.2s | 1706s | 3600s | 7689s | 104/20=5.2s |

| Batch process service | Near-real-time process latency (1 packing) | Parsing recall on product name (test set = 5k) | Parsing recall on product name (test set = 100k) | Final content coverage (test set = 5k) | Final content coverage (test set = 100k) |
|---|---|---|---|---|---|
| Amazon Bedrock batch | 44.8/20=2.24s | 98.50% | 98.40% | 96.80% | 96.50% |
| Amazon Bedrock batch | 104/20=5.2s | 99% | 98.80% | 84.40% | 97% |
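The scaling claims can be checked with simple arithmetic on the Claude Instant row of the tables above (723s for 5,000 samples, 5733s for 100,000 samples, and 2.24s per product for individual calls). The function name below is hypothetical, and the one-call-per-product estimate assumes calls run sequentially at the measured near-real-time latency.

```python
def scaling_summary(batch_small_s, batch_large_s, n_small, n_large, per_item_s):
    """Compare batch scaling with one-call-per-product processing."""
    sequential_large_s = n_large * per_item_s  # individual calls, run back to back
    return {
        "data_scale": n_large / n_small,
        "time_scale": batch_large_s / batch_small_s,
        "sequential_large_s": sequential_large_s,
        "batch_speedup": sequential_large_s / batch_large_s,
    }


summary = scaling_summary(723, 5733, 5_000, 100_000, 2.24)
print(round(summary["data_scale"], 2))     # 20.0
print(round(summary["time_scale"], 2))     # 7.93
print(round(summary["batch_speedup"], 2))  # 39.07
```

Twenty times more data took roughly eight times longer, and individual calls would have taken roughly 40 times longer than the batch job.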

The following table summarizes the effect of n-packing. Llama 2 has an output length limit of 2,048 tokens, which fits a packing number of up to around 20. Anthropic’s Claude has a higher limit. We tested on 20 ground truth samples for 1, 5, and 10 packing and selected results from all model and prompt templates. The scaling effect on latency was more pronounced in Anthropic’s Claude model family than in Llama 2. Anthropic’s Claude also generalized better than Llama 2 as the packing number in the output increased.