We’re exploring the frontiers of AGI, prioritizing technical safety, proactive risk assessment, and collaboration with the AI community.

Evaluating potential cybersecurity threats of advanced AI

Our framework enables cybersecurity experts to identify which defenses are necessary and how to prioritize them.

NVIDIA GeForce RTX 50 Series Accelerates Adobe Premiere Pro and Media Encoder’s 4:2:2 Color Sampling

Video editing workflows are getting a lot more colorful.

Adobe recently announced massive updates to Adobe Premiere Pro (beta) and Adobe Media Encoder, including PC support for 4:2:2 video color editing.

The 4:2:2 color format is a game changer for professional video editors, as it retains nearly as much color information as 4:4:4 while greatly reducing file size. This improves color grading and chroma keying — using color information to isolate a specific range of hues — while maximizing efficiency and quality.

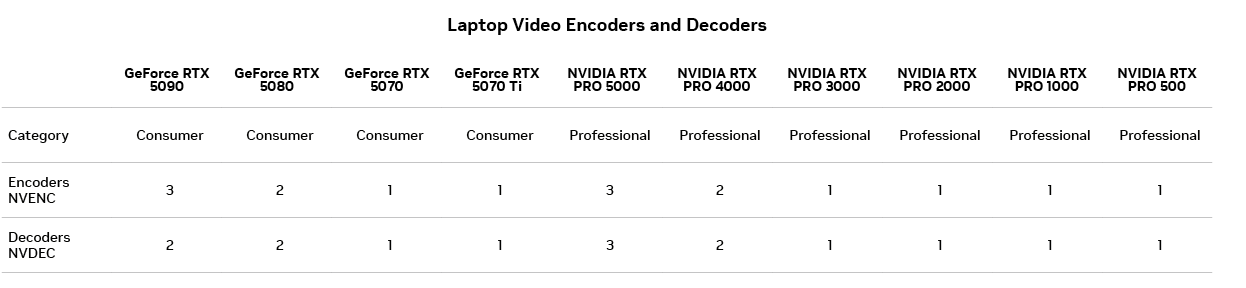

In addition, new NVIDIA GeForce RTX 5090 and 5080 laptops — built on the NVIDIA Blackwell architecture — are out now, accelerating 4:2:2 and advanced AI-powered features across video-editing workflows.

Adobe and other industry partners are attending NAB Show — a premier gathering of over 100,000 leaders in the broadcast, media and entertainment industries — running April 5-9 in Las Vegas. Professionals in these fields will come together for education, networking and exploring the latest technologies and trends.

Shed Some Color on 4:2:2

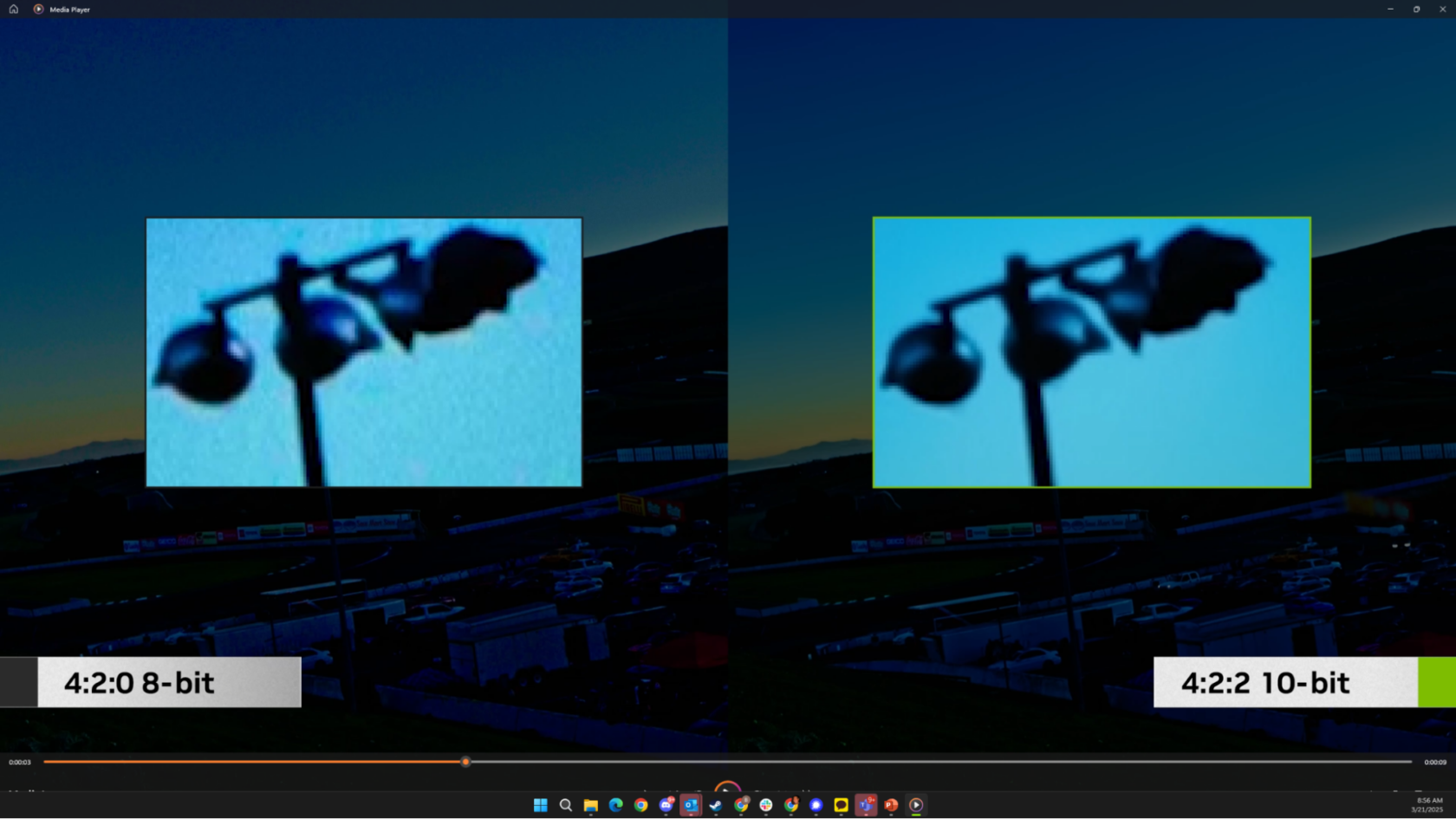

Consumer cameras that are limited to 4:2:0 chroma subsampling capture a limited amount of color information. 4:2:0 is acceptable for video playback in browsers, but professional video editors often rely on cameras that capture 4:2:2 color to ensure higher color fidelity.

Adobe Premiere Pro’s beta with 4:2:2 means video data can now provide double the color information with just a 1.3x increase in raw file size over 4:2:0. This unlocks several key benefits within professional video-production workflows:

Increased Color Accuracy: 10-bit 4:2:2 retains more color information compared with 8-bit 4:2:0, leading to more accurate color representation and better color grading results.

More Flexibility: The extra color data allows for increased flexibility during color correction and grading, enabling more nuanced adjustments and corrections.

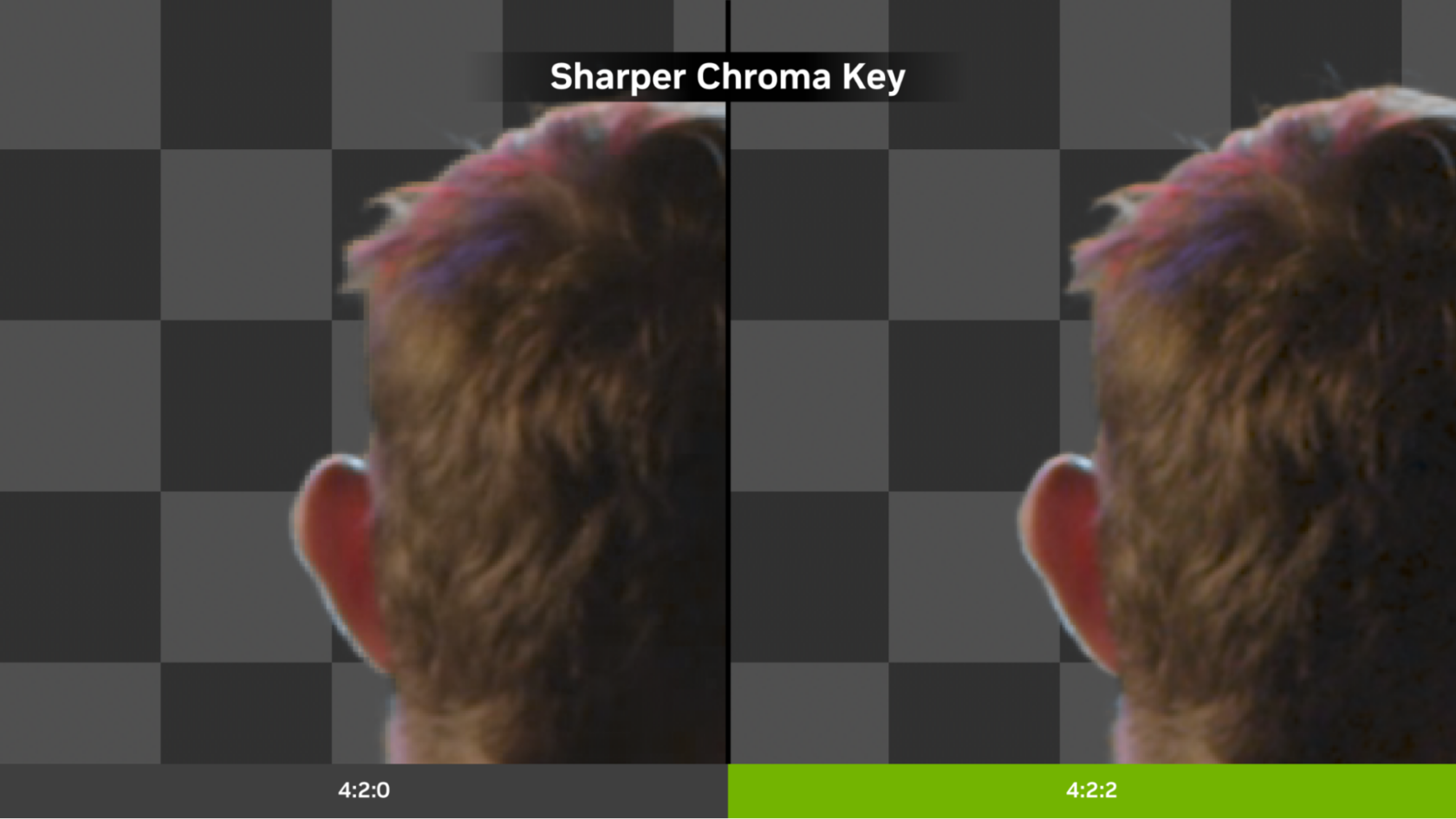

Improved Keying: 4:2:2 is particularly beneficial for keying — including green screening — as it enables cleaner, more accurate extraction of the subject from the background, as well as cleaner edges of small keyed objects like hair.

Smaller File Sizes: Compared with 4:4:4, 4:2:2 reduces file sizes without significantly impacting picture quality, offering an optimal balance between quality and storage.
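The "double the color information" and "1.3x" figures above follow from the sample counts implied by the J:a:b subsampling notation. Here is a minimal sketch of that arithmetic, assuming uncompressed frames at equal bit depth and the conventional reference block that is 4 pixels wide and 2 rows tall. The function names are illustrative and do not come from any Adobe or NVIDIA API.

```python
from fractions import Fraction

def chroma_samples(j: int, a: int, b: int) -> int:
    """Chroma samples (Cb + Cr) in a J-wide, 2-row reference block.

    `a` is the number of chroma samples per plane in the first row and
    `b` is the number of additional chroma samples in the second row.
    """
    return 2 * (a + b)

def samples_per_pixel(j: int, a: int, b: int) -> Fraction:
    """Average number of samples (luma + chroma) stored per pixel."""
    luma = 2 * j  # one luma sample for every pixel in the block
    return Fraction(luma + chroma_samples(j, a, b), 2 * j)

FORMATS = {"4:2:0": (4, 2, 0), "4:2:2": (4, 2, 2), "4:4:4": (4, 4, 4)}

for name, jab in FORMATS.items():
    print(name, chroma_samples(*jab), float(samples_per_pixel(*jab)))

chroma_gain = Fraction(chroma_samples(4, 2, 2), chroma_samples(4, 2, 0))
size_ratio = samples_per_pixel(4, 2, 2) / samples_per_pixel(4, 2, 0)
print(f"chroma gain: {float(chroma_gain):.2f}x, raw size: {float(size_ratio):.2f}x")
```

At equal bit depth, 4:2:2 stores twice the chroma samples of 4:2:0 for 4/3 of the raw size, which is consistent with the roughly 1.3x figure. Moving from 8-bit to 10-bit samples increases the raw size further.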

Combining 4:2:2 support with NVIDIA hardware increases creative possibilities.

Advanced Video Editing

Prosumer-grade cameras from most major brands support HEVC and H.264 10-bit 4:2:2 formats to deliver superior image quality, manageable file sizes and the flexibility needed for professional video production.

GeForce RTX 50 Series GPUs paired with Microsoft Windows 11 come with GPU-powered decode acceleration in HEVC and H.264 10-bit 4:2:2 formats.

GPU-powered decode enables faster-than-real-time playback without stuttering, the ability to work with original camera media instead of proxies, smoother timeline responsiveness and reduced CPU load — freeing system resources for multi-app workflows and creative tasks.

RTX 50 Series’ 4:2:2 hardware can decode up to six 4K 60 frames-per-second video sources on an RTX 5090-enabled studio PC, enabling smooth multi-camera video-editing workflows on Adobe Premiere Pro.

Video exports are also accelerated with NVIDIA’s ninth-generation encoder and sixth-generation decoder.

In GeForce RTX 50 Series GPUs, the ninth-generation NVIDIA video encoder, NVENC, offers an 8% BD-BR improvement in video encoding efficiency when exporting to HEVC on Premiere Pro, meaning roughly 8% less bitrate at equivalent quality.

Adobe AI Accelerated

Adobe delivers an impressive array of advanced AI features for idea generation, enabling streamlined processes, improved productivity and opportunities to explore new artistic avenues — all accelerated by NVIDIA RTX GPUs.

For example, Adobe Media Intelligence, a feature in Premiere Pro (beta) and After Effects (beta), uses AI to analyze footage and apply semantic tags to clips. This lets users more easily and quickly find specific footage by describing its content, including objects, locations, camera angles and even transcribed spoken words.

Media Intelligence runs 30% faster on the GeForce RTX 5090 Laptop GPU compared with the GeForce RTX 4090 Laptop GPU.

In addition, the Enhance Speech feature in Premiere Pro (beta) improves the quality of recorded speech by filtering out unwanted noise and making the audio sound clearer and more professional. Enhance Speech runs 7x faster on GeForce RTX 5090 Laptop GPUs compared to the MacBook Pro M4 Max.

Visit Adobe’s Premiere Pro page to download a free trial of the beta and explore the slew of AI-powered features across the Adobe Creative Cloud and Substance 3D apps.

Unleash (AI)nfinite Possibilities

GeForce RTX 5090 and 5080 Series laptops deliver the largest-ever generational leap in portable performance for creating, gaming and all things AI.

They can run creative generative AI models such as Flux up to 2x faster in a smaller memory footprint, compared with the previous generation.

The previously mentioned ninth-generation NVIDIA encoders elevate video editing and livestreaming workflows, and the laptops come with NVIDIA DLSS 4 technology and up to 24GB of VRAM to tackle massive 3D projects.

NVIDIA Max-Q hardware technologies use AI to optimize every aspect of a laptop — the GPU, CPU, memory, thermals, software, display and more — to deliver incredible performance and battery life in thin and quiet devices.

All GeForce RTX 50 Series laptops include NVIDIA Studio platform optimizations, with over 130 GPU-accelerated content creation apps and exclusive Studio tools including NVIDIA Studio Drivers, tested extensively to enhance performance and maximize stability in popular creative apps.

Adobe will participate in the Creator Lab at NAB Show, offering hands-on training for editors to elevate their skills with Adobe tools. Attend a 30-minute session and try out Puget Systems laptops equipped with GeForce RTX 5080 Laptop GPUs to experience blazing-fast performance and demo new generative AI features.

Use NVIDIA’s product finder to explore available GeForce RTX 50 Series laptops with complete specifications.

New creative app updates and optimizations are powered by the NVIDIA Studio platform. Follow NVIDIA Studio on Instagram, X and Facebook. Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the Studio newsletter.

See notice regarding software product information.

Finding answers, building hope

Googler Thomas Wagner shares how he used Gemini to learn more about his son’s rare disease, and jumpstart research.

The Role of Prosody in Spoken Question Answering

Spoken language understanding research to date has generally carried a heavy text perspective. Most datasets are derived from text, which is then synthesized into speech, and most models rely on automatic transcriptions of speech. This is to the detriment of prosody, the additional information carried by the speech signal beyond the phonetics of the words themselves, which is difficult to recover from text alone. In this work, we investigate the role of prosody in Spoken Question Answering. By isolating prosodic and lexical information on the SLUE-SQA-5 dataset, which consists of… (Apple Machine Learning Research)

Mutual Reinforcement of LLM Dialogue Synthesis and Summarization Capabilities for Few-Shot Dialogue Summarization

In this work, we propose Mutual Reinforcing Data Synthesis (MRDS) within LLMs to improve the few-shot dialogue summarization task. Unlike prior methods that require external knowledge, we mutually reinforce the LLM’s dialogue synthesis and summarization capabilities, allowing them to complement each other during training and enhance overall performance. The dialogue synthesis capability is enhanced by directed preference optimization with preference scoring from the summarization capability. The summarization capability is enhanced by the additional high-quality dialogue-summary paired data produced… (Apple Machine Learning Research)

Universally Instance-Optimal Mechanisms for Private Statistical Estimation

We consider the problem of instance-optimal statistical estimation under the constraint of differential privacy where mechanisms must adapt to the difficulty of the input dataset. We prove a new instance specific lower bound using a new divergence and show it characterizes the local minimax optimal rates for private statistical estimation. We propose two new mechanisms that are universally instance-optimal for general estimation problems up to logarithmic factors. Our first mechanism, the total variation mechanism, builds on the exponential mechanism with stable approximations of the total… (Apple Machine Learning Research)

Modeling Speech Emotion With Label Variance and Analyzing Performance Across Speakers and Unseen Acoustic Conditions

Spontaneous speech emotion data usually contain perceptual grades, where graders assign emotion scores after listening to the speech files. Such perceptual grades introduce uncertainty in labels due to variation in grader opinion. Grader variation is typically addressed by using consensus grades as ground truth, where the emotion with the highest vote is selected. As a consequence, this approach fails to consider ambiguous instances where a speech sample may contain multiple emotions, as captured through grader opinion uncertainty. We demonstrate that using the probability density function of the emotion grades as… (Apple Machine Learning Research)

How we built the new family of Gemini Robotics models

Robots powered by Gemini Robotics models can learn complex actions like preparing salads and even folding an origami fox.

Introducing AWS MCP Servers for code assistants (Part 1)

We’re excited to announce the open source release of AWS MCP Servers for code assistants — a suite of specialized Model Context Protocol (MCP) servers that bring Amazon Web Services (AWS) best practices directly to your development workflow. Our specialized AWS MCP servers combine deep AWS knowledge with agentic AI capabilities to accelerate development across key areas. Each AWS MCP Server focuses on a specific domain of AWS best practices, working together to provide comprehensive guidance throughout your development journey.

This post is the first in a series covering AWS MCP Servers. In this post, we walk through how these specialized MCP servers can dramatically reduce your development time while incorporating security controls, cost optimizations, and AWS Well-Architected best practices into your code. Whether you’re an experienced AWS developer or just getting started with cloud development, you’ll discover how to use AI-powered coding assistants to tackle common challenges such as complex service configurations, infrastructure as code (IaC) implementation, and knowledge base integration. By the end of this post, you’ll understand how to start using AWS MCP Servers to transform your development workflow and deliver better solutions, faster.

If you want to get started right away, skip ahead to the section “From concept to working code in minutes.”

AI is transforming how we build software, creating opportunities to dramatically accelerate development while improving code quality and consistency. Today’s AI assistants can understand complex requirements, generate production-ready code, and help developers navigate technical challenges in real time. This AI-driven approach is particularly valuable in cloud development, where developers need to orchestrate multiple services while maintaining security, scalability, and cost-efficiency.

Developers need code assistants that understand the nuances of AWS services and best practices. Specialized AI agents can address these needs by:

- Providing contextual guidance on AWS service selection and configuration

- Optimizing compliance with security best practices and regulatory requirements

- Promoting the most efficient utilization and cost-effective solutions

- Automating repetitive implementation tasks with AWS-specific patterns

This approach means developers can focus on innovation while AI assistants handle the undifferentiated heavy lifting of coding. Whether you’re using Amazon Q, Amazon Bedrock, or other AI tools in your workflow, AWS MCP Servers complement and enhance these capabilities with deep AWS-specific knowledge to help you build better solutions faster.

Model Context Protocol (MCP) is a standardized open protocol that enables seamless interaction between large language models (LLMs), data sources, and tools. This protocol allows AI assistants to use specialized tooling and to access domain-specific knowledge by extending the model’s capabilities beyond its built-in knowledge—all while keeping sensitive data local. Through MCP, general-purpose LLMs can now seamlessly access relevant knowledge beyond initial training data and be effectively steered towards desired outputs by incorporating specific context and best practices.
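On the wire, MCP messages are JSON-RPC 2.0, and a client invokes a server-side tool with a `tools/call` request. The following is a minimal sketch of building such a request. The tool name `query_knowledge_base` and its arguments are hypothetical placeholders, not names taken from any AWS MCP Server.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids only need to be unique per session

def tool_call_request(tool_name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }

request = tool_call_request(
    "query_knowledge_base",                    # hypothetical tool name
    {"query": "What is our refund policy?"},   # hypothetical arguments
)
print(json.dumps(request, indent=2))
```

In practice an MCP client library handles this framing. The sketch only illustrates that the model's tool use reduces to a small structured message that a local server can answer.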

Accelerate building on AWS

What if your AI assistant could instantly access deep AWS knowledge, understanding every AWS service, best practice, and architectural pattern? With MCP, we can transform general-purpose LLMs into AWS specialists by connecting them to specialized knowledge servers. This opens up exciting new possibilities for accelerating cloud development while maintaining security and following best practices.

Build on AWS in a fraction of the time, with best practices automatically applied from the first line of code. Skip hours of documentation research and immediately access ready-to-use patterns for complex services such as Amazon Bedrock Knowledge Bases. Our MCP Servers will help you write well-architected code from the start, implement AWS services correctly the first time, and deploy solutions that are secure, observable, and cost-optimized by design. Transform how you build on AWS today.

- Enforce AWS best practices automatically – Write well-architected code from the start with built-in security controls, proper observability, and optimized resource configurations

- Cut research time dramatically – Stop spending hours reading documentation. Our MCP Servers provide contextually relevant guidance for implementing AWS services correctly, addressing common pitfalls automatically

- Access ready-to-use patterns instantly – Use pre-built AWS CDK constructs, Amazon Bedrock Agents schema generators, and Amazon Bedrock Knowledge Bases integration templates that follow AWS best practices from the start

- Optimize cost proactively – Prevent over-provisioning as you design your solution by getting cost-optimization recommendations and generating a comprehensive cost report to analyze your AWS spending before deployment

To turn this vision into reality and make AWS development faster, more secure, and more efficient, we’ve created AWS MCP Servers, a suite of specialized MCP servers that bring AWS best practices directly to your development workflow. They combine deep AWS knowledge with AI capabilities to accelerate development across key areas. Each AWS MCP Server focuses on a specific domain of AWS best practices, and the servers work together to provide comprehensive guidance throughout your development journey.

Overview of domain-specific MCP Servers for AWS development

Our specialized MCP Servers are designed to cover distinct aspects of AWS development, each bringing deep knowledge to specific domains while working in concert to deliver comprehensive solutions:

- Core – The foundation server that provides AI processing pipeline capabilities and serves as a central coordinator. It helps provide clear plans for building AWS solutions and can federate to other MCP servers as needed.

- AWS Cloud Development Kit (AWS CDK) – Delivers AWS CDK knowledge with tools for implementing best practices, security configurations with cdk-nag, Powertools for AWS Lambda integration, and specialized constructs for generative AI services. It makes sure infrastructure as code (IaC) follows AWS Well-Architected principles from the start.

- Amazon Bedrock Knowledge Bases – Enables seamless access to Amazon Bedrock Knowledge Bases so developers can query enterprise knowledge with natural language, filter results by data source, and use reranking for improved relevance.

- Amazon Nova Canvas – Provides image generation capabilities using Amazon Nova Canvas through Amazon Bedrock, enabling the creation of visuals from text prompts and color palettes—perfect for mockups, diagrams, and UI design concepts.

- Cost – Analyzes AWS service costs and generates comprehensive cost reports, helping developers understand the financial implications of their architectural decisions and optimize for cost-efficiency.

Prerequisites

To complete the solution, you need to have the following prerequisites in place:

- uv package manager

- Python, installed using `uv python install 3.13`

- AWS credentials with appropriate permissions

- An MCP-compatible LLM client (such as Anthropic’s Claude for Desktop, Cline, Amazon Q CLI, or Cursor)

From concept to working code in minutes

You can download the AWS MCP Servers from GitHub or install them from PyPI. Here’s how to get started using your favorite code assistant with MCP support.

To install MCP Servers, enter the following code:
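As a rough sketch of what registering servers with an MCP client can look like, the snippet below generates a client configuration. It assumes the client reads an `mcpServers` JSON map, that servers are launched with `uvx`, and that the packages are published on PyPI as `awslabs.core-mcp-server` and `awslabs.cdk-mcp-server`. All three are assumptions to verify against the GitHub repository and your client's documentation.

```python
import json

# Assumed PyPI package names; confirm them in the GitHub repository.
SERVER_PACKAGES = {
    "core": "awslabs.core-mcp-server",
    "cdk": "awslabs.cdk-mcp-server",
}

def mcp_client_config(packages: dict[str, str]) -> dict:
    """Build an `mcpServers` map that launches each server through uvx."""
    return {
        "mcpServers": {
            label: {"command": "uvx", "args": [f"{package}@latest"]}
            for label, package in packages.items()
        }
    }

config = mcp_client_config(SERVER_PACKAGES)
print(json.dumps(config, indent=2))
```

The printed JSON would go into the client's MCP settings file, whose name and location vary by client.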

AWS MCP Servers in action

Here’s how AWS MCP Servers transform the development experience:

Developer: “I need to build an AI-powered chatbot using Amazon Bedrock that can answer questions from our company’s knowledge base. I also want to add a tool for the chatbot to call our internal API.”

Core: “I’ll help you build an Amazon Bedrock Knowledge Bases chatbot with API integration. Let’s create an architecture that uses Amazon Bedrock Agents with a custom action group to call your internal API.”

Core generates a comprehensive architecture diagram showing the knowledge base integration, Amazon Bedrock Agents configuration with action groups, API connectivity, and data flow between components.

AWS CDK: “Here’s the infrastructure code for your chatbot with the Amazon Bedrock Agents action group. I’ve included proper IAM roles, security controls, and Lambda Powertools for observability.”

The CDK MCP Server generates complete AWS CDK code to deploy the entire solution. It automatically runs cdk-nag to identify potential security issues and provides remediation steps for each finding, making sure that the infrastructure follows AWS Well-Architected best practices.

Amazon Bedrock Knowledge Bases retrieval: “I’ve configured the optimal settings for your knowledge base queries, including proper reranking for improved relevance.”

The Amazon Bedrock Knowledge Bases MCP Server demonstrates how to structure queries to the knowledge base for maximum relevance, provides sample code for filtering by data source, and shows how to integrate the knowledge base responses with the chatbot interface.

Amazon Nova Canvas: “To enhance your chatbot’s capabilities, I’ve created visualizations that can be generated on demand when users request data explanations.”

The Amazon Nova Canvas MCP Server generates sample images showing how Amazon Nova Canvas can create charts, diagrams, and visual explanations based on knowledge base content, making complex information more accessible to users.

Cost Analysis: “Based on your expected usage patterns, here’s the estimated monthly cost breakdown and optimization recommendations.”

The Cost Analysis MCP Server generates a detailed cost analysis report showing projected expenses for each AWS service, identifies cost optimization opportunities such as reserved capacity for Amazon Bedrock, and provides specific recommendations to reduce costs without impacting performance.

With AWS MCP Servers, what would typically take days of research and implementation is completed in minutes, with better quality, security, and cost-efficiency than manual development in that same time.

Best practices for MCP-assisted development

To maximize the benefits of MCP-assisted development while maintaining security and code quality, developers should follow these essential guidelines:

- Always review generated code for security implications before deployment

- Use MCP Servers as accelerators, not replacements for developer judgment and expertise

- Keep MCP Servers updated with the latest AWS security best practices

- Follow the principle of least privilege when configuring AWS credentials

- Run security scanning tools on generated infrastructure code
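To make the least-privilege guideline concrete, here is a minimal sketch of an IAM policy scoped to querying a single knowledge base, plus a small check that flags wildcard actions. The Region, account ID, and knowledge base ID in the ARN are hypothetical placeholders, and the permissions a given MCP server actually needs are an assumption to confirm against its documentation.

```python
import json

def wildcard_actions(policy: dict) -> list[str]:
    """Return every action in the policy that contains a `*` wildcard."""
    found = []
    for statement in policy["Statement"]:
        actions = statement["Action"]
        if isinstance(actions, str):  # IAM allows a single string or a list
            actions = [actions]
        found.extend(a for a in actions if "*" in a)
    return found

# Hypothetical placeholders: substitute your own Region, account, and ID.
KB_ARN = "arn:aws:bedrock:us-east-1:111122223333:knowledge-base/EXAMPLEKBID"

read_only_kb_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "QueryOneKnowledgeBase",
            "Effect": "Allow",
            "Action": ["bedrock:Retrieve"],
            "Resource": KB_ARN,
        }
    ],
}

assert wildcard_actions(read_only_kb_policy) == []
print(json.dumps(read_only_kb_policy, indent=2))
```

A check like `wildcard_actions` is no substitute for a full policy analyzer, but it catches the most common over-broad grant before credentials are handed to an assistant.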

Coming up in the series

This post introduced the foundations of AWS MCP Servers and how they accelerate AWS development through specialized, AWS-specific servers. In upcoming posts, we’ll dive deeper into:

- Detailed walkthroughs of each MCP server’s capabilities

- Advanced patterns for integrating AWS MCP Servers into your development workflow

- Real-world case studies showing AWS MCP Servers’ impact on development velocity

- How to extend AWS MCP Servers with your own custom MCP servers

Stay tuned to learn how AWS MCP Servers can transform your specific AWS development scenarios and help you build better solutions faster. Visit our GitHub repository or PyPI to explore example implementations and get started today.

About the Authors

Jimin Kim is a Prototyping Architect on the AWS Prototyping and Cloud Engineering (PACE) team, based in Los Angeles. With specialties in Generative AI and SaaS, she loves helping her customers succeed in their business. Outside of work, she cherishes moments with her wife and three adorable calico cats.

Pranjali Bhandari is part of the Prototyping and Cloud Engineering (PACE) team at AWS, based in the San Francisco Bay Area. She specializes in Generative AI, distributed systems, and cloud computing. Outside of work, she loves exploring diverse hiking trails, biking, and enjoying quality family time with her husband and son.

Laith Al-Saadoon is a Principal Prototyping Architect on the Prototyping and Cloud Engineering (PACE) team. He builds prototypes and solutions using generative AI, machine learning, data analytics, IoT & edge computing, and full-stack development to solve real-world customer challenges. In his personal time, Laith enjoys the outdoors: fishing, photography, drone flights, and hiking.

Paul Vincent is a Principal Prototyping Architect on the AWS Prototyping and Cloud Engineering (PACE) team. He works with AWS customers to bring their innovative ideas to life. Outside of work, he loves playing drums and piano, talking with others through Ham radio, all things home automation, and movie nights with the family.

Justin Lewis leads the Emerging Technology Accelerator at AWS. Justin and his team help customers build with emerging technologies like generative AI by providing open source software examples to inspire their own innovation. He lives in the San Francisco Bay Area with his wife and son.

Anita Lewis is a Technical Program Manager on the AWS Emerging Technology Accelerator team, based in Denver, CO. She specializes in helping customers accelerate their innovation journey with generative AI and emerging technologies. Outside of work, she enjoys competitive pickleball matches, perfecting her golf game, and discovering new travel destinations.
