Editor’s note: This post is a part of our Meet the Omnivore series, which features individual creators and developers who use OpenUSD to build tools, applications and services for 3D workflows and physically accurate virtual worlds.

A failed furniture-shopping trip turned into a business idea for Steven Gay, cofounder and CEO of Mode Maison.

Gay grew up in Houston and studied at the University of Texas before working in New York as one of the youngest concept designers at Ralph Lauren. He was inspired to start his own company after a long day of trying — and failing — to pick out a sofa.

The experience illuminated how the luxury home-goods industry has traditionally lagged in adopting digital technologies, especially those for creating immersive, interactive experiences for consumers.

Gay founded Mode Maison in 2018 with the goal of solving this challenge and paving the way for scalability, creativity and a generative future in retail. Using the Universal Scene Description framework, aka OpenUSD, and the NVIDIA Omniverse platform, Gay, along with Mode Maison Chief Technology Officer Jakub Cech and the rest of the Mode Maison team, is helping enhance and digitalize entire product lifecycle processes, from design and manufacturing to consumer experiences.

Register for NVIDIA GTC, which takes place March 17-21, to hear how leading companies are using the latest innovations in AI and graphics. And join us for OpenUSD Day to learn how to build generative AI-enabled 3D pipelines and tools using Universal Scene Description.

The Mode Maison team developed a photometric scanning system called Total Material Appearance Capture (TMAC), which offers an unbiased, physically based approach to digitizing the material world, enabled by real-world embedded sensors.

TMAC captures proprietary data and the composition of any material, then converts it into an input that serves as a single source of truth for creating a fully digitized retail model. Using the system, along with OpenUSD and NVIDIA Omniverse, Mode Maison customers can create highly accurate digital twins of any material or product.

“By enabling this, we’re effectively collapsing and fostering a complete integration across the entire product lifecycle process — from design and production to manufacturing to consumer experiences and beyond,” said Gay.

Streamlining Workflows and Enhancing Productivity With Digital Twins

Previously, Mode Maison faced significant challenges in creating physically based, highly flexible and scalable digital materials. The limitations were particularly noticeable when rendering complex materials and textures, or integrating digital models into cohesive, multilayered environments.

Using Omniverse helped Gay and his team overcome these challenges by offering advanced rendering capabilities, physics simulations and extensibility for AI training that unlock new possibilities in digital retail.

Before adopting Omniverse and OpenUSD, Mode Maison relied on disjointed processes for digital material capture, modeling and rendering, which often led to inconsistencies, an inability to scale and minimal interoperability. After integrating Omniverse, the company gained a streamlined, coherent workflow in which high-fidelity digital twins can be created with greater efficiency and interoperability.

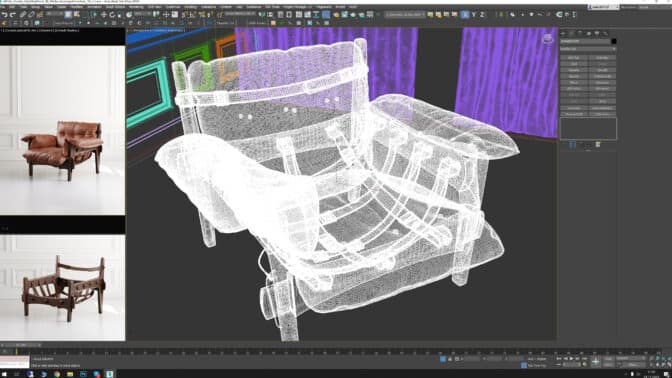

The team primarily uses Autodesk 3ds Max for design and imports the 3D data using Omniverse Connectors. Gay says OpenUSD is playing an increasingly critical role in the team's workflows, especially in developing composable, flexible and interoperable capabilities for asset creation.

This enhanced pipeline starts with capturing high-fidelity material data using TMAC. The data is then processed and formatted into OpenUSD for the creation of physically based, scientifically accurate, high-fidelity digital twins.

“OpenUSD allows for an unprecedented level of collaboration and interoperability in creating complex, multi-layered capabilities and advanced digital materials,” Gay said. “Its ability to seamlessly integrate diverse digital assets and maintain their fidelity across various applications is instrumental in creating realistic, interactive digital twins for retail.”

OpenUSD and Omniverse have accelerated the ability of Mode Maison and its clients to bring products to market, reduced the costs of building and modifying digital twins, and boosted productivity through streamlined content creation.

“Our work represents a major step toward a future where digital and physical realities will be seamlessly integrated,” said Gay. “This shift enhances consumer engagement and paves the way for more sustainable business practices by reducing the need for physical prototyping while enabling more precise manufacturing.”

As for emerging technological advancements in digital retail, Gay says AI will play a central role in creating hyper-personalized design, production, sourcing and front-end consumer experiences — all while reducing carbon footprints and paving the way for a more sustainable future in retail.

Join In on the Creation

Anyone can build their own Omniverse extension or Connector to enhance 3D workflows and tools.

Learn more about how OpenUSD and NVIDIA Omniverse are transforming industries at NVIDIA GTC, a global AI conference running March 18-21, online and at the San Jose Convention Center.

Join OpenUSD Day at GTC on Tuesday, March 19, to learn more about building generative AI-enabled 3D pipelines and tools using USD.

Get started with NVIDIA Omniverse by downloading the free standard license, access OpenUSD resources, and learn how Omniverse Enterprise can connect your team. Stay up to date on Instagram, Medium and X. For more, join the Omniverse community on the forums, Discord server, Twitch and YouTube channels.