Millions of people already use generative AI to assist in writing and learning. Now, the technology can also help them more effectively navigate the physical world.

At SIGGRAPH, NVIDIA announced advancements in generative physical AI, including the NVIDIA Metropolis reference workflow for building interactive visual AI agents and new NVIDIA NIM microservices that help developers train physical machines and improve how those machines handle complex tasks.

The new microservices include three fVDB NIM microservices, which support fVDB, NVIDIA's new deep learning framework for 3D worlds. They also include the USD Code, USD Search and USD Validate NIM microservices for working with Universal Scene Description (also known as OpenUSD).

The NVIDIA OpenUSD NIM microservices work together with the world’s first generative AI models for OpenUSD development — also developed by NVIDIA — to enable developers to incorporate generative AI copilots and agents into USD workflows and broaden the possibilities of 3D worlds.

NVIDIA NIM Microservices Transform Physical AI Landscapes

Physical AI uses advanced simulations and learning methods to help robots and other industrial automation systems more effectively perceive, reason about and navigate their surroundings. The technology is transforming industries like manufacturing and healthcare, and it is advancing smart spaces through robots, factory and warehouse technologies, surgical AI agents and cars that can operate more autonomously and precisely.

NVIDIA offers a broad range of NIM microservices customized for specific models and industry domains. NVIDIA’s suite of NIM microservices tailored for physical AI supports capabilities for speech and translation, vision and intelligence, and realistic animation and behavior.

Turning Visual AI Agents Into Visionaries With NVIDIA NIM

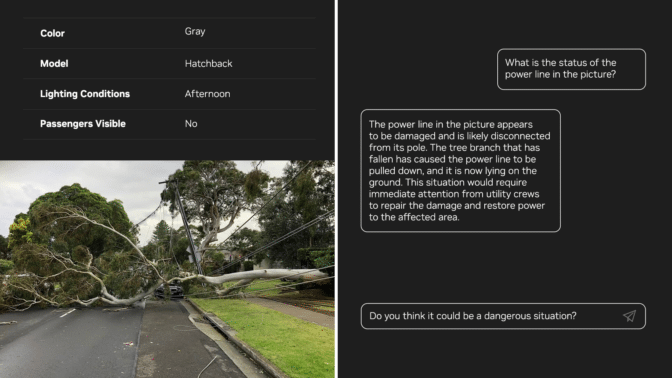

Visual AI agents use computer vision capabilities to perceive and interact with the physical world and perform reasoning tasks.

Highly perceptive, interactive visual AI agents are powered by a new class of generative AI models called vision language models (VLMs). In physical AI workloads, VLMs bridge digital perception and real-world interaction, improving decision-making, accuracy, interactivity and performance. With VLMs, developers can build visual AI agents that handle challenging tasks more effectively, even in complex environments.

Generative AI-powered visual AI agents are rapidly being deployed across hospitals, factories, warehouses, retail stores, airports, traffic intersections and more.

To help physical AI developers more easily build high-performing, custom visual AI agents, NVIDIA offers NIM microservices and reference workflows for physical AI. The NVIDIA Metropolis reference workflow provides a simple, structured approach for customizing, building and deploying visual AI agents, as detailed in a companion blog post.

NVIDIA NIM Helps K2K Make Palermo More Efficient, Safe and Secure

City traffic managers in Palermo, Italy, deployed visual AI agents using NVIDIA NIM to uncover physical insights that help them better manage roadways.

K2K, an NVIDIA Metropolis partner, is leading the effort, integrating NVIDIA NIM microservices and VLMs into AI agents that analyze the city’s live traffic cameras in real time. City officials can ask the agents questions in natural language and receive fast, accurate insights on street activity and suggestions on how to improve the city’s operations, like adjusting traffic light timing.

Global electronics giants Foxconn and Pegatron have adopted physical AI, NIM microservices and Metropolis reference workflows to design and run their massive manufacturing operations more efficiently.

The companies are building virtual factories in simulation to save significant time and cost. They are also testing and refining their physical AI (including AI multi-camera and visual AI agents) more thoroughly in digital twins before real-world deployment, which improves worker safety and operational efficiency.

Bridging the Simulation-to-Reality Gap With Synthetic Data Generation

Many AI-driven businesses are now adopting a “simulation-first” approach for generative physical AI projects involving real-world industrial automation.

Manufacturing, factory logistics and robotics companies need to manage intricate human-worker interactions, advanced facilities and expensive equipment. NVIDIA physical AI software, tools and platforms — including physical AI and VLM NIM microservices, reference workflows and fVDB — can help them streamline the highly complex engineering required to create digital representations or virtual environments that accurately mimic real-world conditions.

VLMs are seeing widespread adoption across industries because of their ability to interpret images and video and reason about them in natural language. However, these models can be challenging to train because of the immense volume of data required to build an accurate physical AI model.

Synthetic data generated from digital twins using computer simulations offers a powerful alternative to real-world datasets, which can be expensive — and sometimes impossible — to acquire for model training, depending on the use case.

Tools like NVIDIA NIM microservices and Omniverse Replicator let developers build generative AI-enabled synthetic data pipelines to accelerate the creation of robust, diverse datasets for training physical AI. This enhances the adaptability and performance of models such as VLMs, enabling them to generalize more effectively across industries and use cases.

Availability

Developers can access state-of-the-art, open and NVIDIA-built foundation AI models and NIM microservices at ai.nvidia.com. The Metropolis NIM reference workflow is available in the GitHub repository, and Metropolis VIA microservices are available for download in developer preview.

OpenUSD NIM microservices are available in preview through the NVIDIA API catalog.

Watch how accelerated computing and generative AI are transforming industries and creating new opportunities for innovation and growth in NVIDIA founder and CEO Jensen Huang’s fireside chats at SIGGRAPH.
