Two simulations of a billion atoms, two fresh insights into how the SARS-CoV-2 virus works, and a new AI model to speed drug discovery.

Those are results from finalists for the Gordon Bell awards, often considered the Nobel Prize of high performance computing. The finalists used AI, accelerated computing or both to advance science with NVIDIA’s technologies.

A finalist for the special prize for COVID-19 research used AI to link multiple simulations, showing at a new level of clarity how the virus replicates inside a host.

The research — led by Arvind Ramanathan, a computational biologist at the Argonne National Laboratory — provides a way to improve the resolution of traditional tools used to explore protein structures. That could provide fresh insights into ways to arrest the spread of a virus.

The team, drawn from a dozen organizations in the U.S. and the U.K., designed a workflow that ran across systems including Perlmutter, an NVIDIA A100-powered system, built by Hewlett Packard Enterprise, and Argonne’s NVIDIA DGX A100 systems.

“The capability to perform multisite data analysis and simulations for integrative biology will be invaluable for making use of large experimental data that are difficult to transfer,” the paper said.

As part of its work, the team developed a technique to speed molecular dynamics research using the popular NAMD program on GPUs. They also leveraged NVIDIA NVLink to speed data “far beyond what is currently possible with a conventional HPC network interconnect, or … PCIe transfers.”

A Billion Atoms in High Fidelity

Ivan Oleynik, a professor of physics at the University of South Florida, led a team named a finalist for the standard Gordon Bell award for their work producing the first highly accurate simulation of a billion atoms. It broke by 23x a record set by a Gordon Bell winner last year.

“It’s a joy to uncover phenomena never seen before, it’s a really big achievement we’re proud of,” said Oleynik.

The simulation of carbon atoms under extreme temperature and pressure could open doors to new energy sources and help describe the makeup of distant planets. It’s especially stunning because the simulation has quantum-level accuracy, faithfully reflecting the forces among the atoms.

“It’s accuracy we could only achieve by applying machine learning techniques on a powerful GPU supercomputer — AI is creating a revolution in how science is done,” said Oleynik.

The team exercised 4,608 IBM Power AC922 servers and 27,900 NVIDIA GPUs on the U.S. Department of Energy’s Summit, an IBM-built system that ranks among the world’s most powerful supercomputers. The run demonstrated that their code could scale with almost 100-percent efficiency to simulations of 20 billion atoms or more.

That code is available to any researcher who wants to push the boundaries of materials science.

Inside a Deadly Droplet

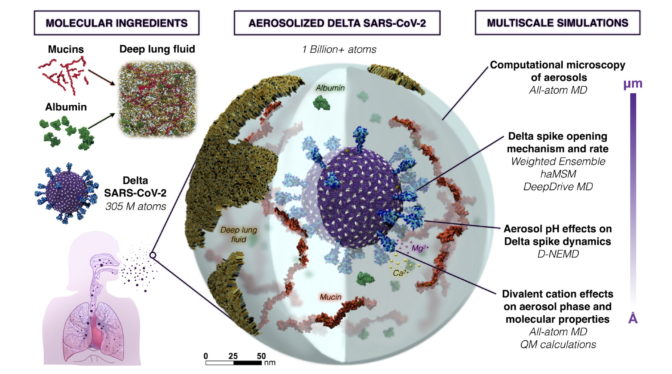

In another billion-atom simulation, a second finalist for the COVID-19 prize showed the Delta variant in an airborne droplet (above). It reveals biological forces that spread COVID and other diseases, providing a first atomic-level look at aerosols.

The work has “far reaching … implications for viral binding in the deep lung, and for the study of other airborne pathogens,” according to the paper from a team led by last year’s winner of the special prize, researcher Rommie Amaro from the University of California San Diego.

“We demonstrate how AI coupled to HPC at multiple levels can result in significantly improved effective performance, enabling new ways to understand and interrogate complex biological systems,” Amaro said.

Researchers used NVIDIA GPUs on Summit, on the Longhorn supercomputer built by Dell Technologies for the Texas Advanced Computing Center, and on commercial systems in Oracle Cloud Infrastructure (OCI).

“HPC and cloud resources can be used to significantly drive down time-to-solution for major scientific efforts as well as connect researchers and greatly enable complex collaborative interactions,” the team concluded.

The Language of Drug Discovery

Finalists for the COVID prize from Oak Ridge National Laboratory (ORNL) applied natural language processing (NLP) to the problem of screening chemical compounds for new drugs.

They used a dataset containing 9.6 billion molecules, the largest applied to this task to date, to train a BERT NLP model in two hours. The model can speed discovery of new drugs. Previous best efforts took four days to train a model using a dataset with 1.1 billion molecules.
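To put those two training runs side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above; the function and variable names are ours, and the comparison assumes that molecules covered per hour of training is a fair yardstick. It ignores differences in hardware, model size and training recipe, so treat the result as an illustration rather than a benchmark.

```python
# Back-of-the-envelope comparison using only the figures quoted in the article.
# Assumption: dataset size divided by wall-clock training time is a reasonable
# proxy for throughput; hardware and model differences are ignored.
HOURS_PER_DAY = 24


def molecules_per_hour(molecules: float, hours: float) -> float:
    """Average number of dataset molecules covered per hour of training."""
    return molecules / hours


# ORNL run: 9.6 billion molecules, trained in two hours.
new_rate = molecules_per_hour(9.6e9, 2)

# Previous best effort: 1.1 billion molecules, trained in four days.
old_rate = molecules_per_hour(1.1e9, 4 * HOURS_PER_DAY)

# Ratio of the two average rates.
speedup = new_rate / old_rate

print(f"new run:      {new_rate:.2e} molecules/hour")
print(f"previous run: {old_rate:.2e} molecules/hour")
print(f"ratio:        about {speedup:.0f}x")
```

By this crude measure, the new run covers molecules roughly 419 times faster than the earlier effort: a dataset nearly nine times larger, trained in one forty-eighth of the time.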

The work exercised more than 24,000 NVIDIA GPUs on the Summit supercomputer to deliver a whopping 603 petaflops. Now that the training is done, the model can run on a single GPU to help researchers find chemical compounds that could inhibit COVID and other diseases.

“We have collaborators here who want to apply the model to cancer signaling pathways,” said Jens Glaser, a computational scientist at ORNL.

“We’re just scratching the surface of training data sizes — we hope to use a trillion molecules soon,” said Andrew Blanchard, a research scientist who led the team.

Relying on a Full-Stack Solution

NVIDIA software libraries for AI and accelerated computing helped the team complete its work in what one observer called a surprisingly short time.

“We didn’t need to fully optimize our work for the GPU’s tensor cores because you don’t need specialized code, you can just use the standard stack,” said Glaser.

He summed up what many finalists felt: “Having a chance to be part of meaningful research with potential impact on people’s lives is something that’s very satisfying for a scientist.”

Tune in to our special address at SC21 either live on Monday, Nov. 15 at 3 pm PST or later on demand. NVIDIA’s Marc Hamilton will provide an overview of our latest news, innovations and technologies, followed by a live Q&A panel with NVIDIA experts.

The post Gordon Bell Finalists Fight COVID, Advance Science With NVIDIA Technologies appeared first on The Official NVIDIA Blog.