Autonomous vehicle development and validation require the ability to replicate real-world scenarios in simulation.

At GTC, NVIDIA founder and CEO Jensen Huang showcased new AI-based tools for NVIDIA DRIVE Sim that accurately reconstruct and modify actual driving scenarios. These tools are enabled by breakthroughs from NVIDIA Research that leverage technologies such as the NVIDIA Omniverse platform and NVIDIA DRIVE Map.

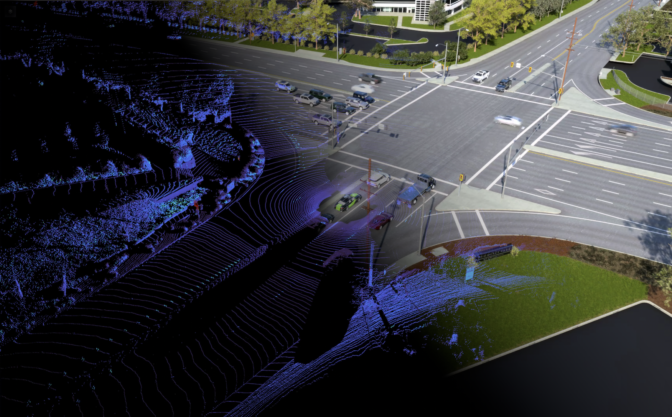

Huang demonstrated the methods side by side, showing how developers can easily test multiple scenarios in rapid iterations.

Once a scenario is reconstructed in simulation, it can serve as the foundation for many different variations, such as changing the trajectory of an oncoming vehicle or adding an obstacle to the driving path. Developers can use these variations to improve the AI driver.
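As a rough illustration of how one reconstructed scenario can seed many variations, the sketch below assumes a scenario is a small immutable record and sweeps two parameters: the oncoming vehicle's lateral offset and a set of obstacles on the ego path. The `Scenario` and `Actor` types and the `make_variations` helper are hypothetical; they are not DRIVE Sim's scenario format or API.

```python
from dataclasses import dataclass, replace
from itertools import product


@dataclass(frozen=True)
class Actor:
    name: str
    lateral_offset_m: float  # hypothetical: lateral shift applied to the actor's recorded path


@dataclass(frozen=True)
class Scenario:
    oncoming: Actor
    obstacles: tuple = ()  # hypothetical: (x, y) positions of static obstacles on the ego path


def make_variations(base, offsets, obstacle_sets):
    """Derive new scenarios from one base scenario by sweeping two parameters."""
    variations = []
    for offset, obstacles in product(offsets, obstacle_sets):
        variations.append(
            replace(
                base,
                oncoming=replace(base.oncoming, lateral_offset_m=offset),
                obstacles=tuple(obstacles),
            )
        )
    return variations


base = Scenario(oncoming=Actor("oncoming_van", 0.0))
variants = make_variations(
    base,
    offsets=[-1.0, 0.0, 1.5],                  # shift the oncoming van left, keep, or shift right
    obstacle_sets=[(), ((30.0, 0.0),)],        # no obstacle, or one obstacle 30 m ahead
)
print(len(variants))  # 3 offsets x 2 obstacle sets = 6 scenarios
```

Because the records are frozen, each variation is a new object and the base scenario is never mutated.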

However, reconstructing real-world driving scenarios and generating realistic data from them in simulation is a time- and labor-intensive process. It requires skilled engineers and artists, and even then it can be difficult.

NVIDIA has implemented two AI-based methods to streamline this process: virtual reconstruction and neural reconstruction. The first replicates the real-world scenario as a fully synthetic 3D scene, while the second uses neural simulation to augment real-world sensor data.

Both methods go well beyond recreating a single scenario: they can generate many new and challenging scenarios. This capability accelerates the continuous AV training, testing and validation pipeline.

Virtual Reconstruction

In the keynote video above, an entire driving environment and set of scenarios around NVIDIA’s headquarters are reconstructed in 3D using NVIDIA DRIVE Map, Omniverse and DRIVE Sim.

With DRIVE Map, developers have access to a digital twin of a road network in Omniverse. Tools built on Omniverse convert this detailed map into a drivable simulation environment that can be used with NVIDIA DRIVE Sim.

With the reconstructed simulation environment, developers can recreate events, such as a close call at an intersection or a drive through a construction zone, using camera, lidar and vehicle data from real-world drives.

The platform's AI helps reconstruct the scenario. First, for each tracked object, an AI model examines the camera images and selects the most similar 3D asset in the DRIVE Sim catalog, along with the color that most closely matches the object's color in the video.
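A minimal sketch of the matching step, assuming each catalog asset already has an appearance embedding and that the tracked object's image crop has been embedded by the same (unspecified) model. The catalog, palette and toy vectors are hypothetical, and cosine similarity plus nearest-RGB matching are simple stand-ins, not the method DRIVE Sim actually uses.

```python
import math

# Hypothetical catalog: asset name -> appearance embedding (toy 3-D vectors).
CATALOG = {
    "sedan": [0.9, 0.1, 0.0],
    "suv": [0.7, 0.6, 0.1],
    "box_truck": [0.1, 0.2, 0.95],
}

# Hypothetical paint palette: color name -> RGB.
PALETTE = {
    "white": (245, 245, 245),
    "black": (20, 20, 20),
    "red": (200, 30, 30),
    "silver": (170, 170, 175),
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def best_asset(embedding):
    """Catalog asset whose embedding is most similar to the observed object's."""
    return max(CATALOG, key=lambda name: cosine(embedding, CATALOG[name]))


def best_color(rgb):
    """Palette color with the smallest Euclidean RGB distance to the observed color."""
    return min(PALETTE, key=lambda name: math.dist(rgb, PALETTE[name]))


print(best_asset([0.85, 0.2, 0.05]), best_color((190, 40, 35)))
```

A perceptual color space such as CIELAB would usually give better color matches than raw RGB distance; RGB keeps the sketch short.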

Then the tracked object's actual path is recreated. Occlusions often leave gaps in that path; in such cases, an AI-based traffic model is applied to the tracked object to predict what it would have done and fill in the gaps in its trajectory.
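The gap-filling step can be sketched with a much simpler substitute for the AI-based traffic model: linear interpolation between the last position observed before an occlusion and the first position observed after it. The `fill_gaps` function and the list-of-points track format are hypothetical; a learned behavior model would instead predict a plausible path from context.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def fill_gaps(track: List[Optional[Point]]) -> List[Point]:
    """Replace missing (None) positions in a track.

    Interior gaps are linearly interpolated between the nearest observations;
    leading and trailing gaps hold the nearest observed position.
    This is a simple kinematic stand-in, not an AI traffic model.
    """
    observed = [i for i, p in enumerate(track) if p is not None]
    if not observed:
        raise ValueError("track has no observations")

    filled: List[Point] = []
    for i, p in enumerate(track):
        if p is not None:
            filled.append(p)
            continue
        prev = max((j for j in observed if j < i), default=None)
        nxt = min((j for j in observed if j > i), default=None)
        if prev is None:  # leading gap: hold the first observation
            filled.append(track[nxt])
        elif nxt is None:  # trailing gap: hold the last observation
            filled.append(track[prev])
        else:  # interior gap: interpolate by fraction of elapsed steps
            a = (i - prev) / (nxt - prev)
            (x0, y0), (x1, y1) = track[prev], track[nxt]
            filled.append((x0 + a * (x1 - x0), y0 + a * (y1 - y0)))
    return filled


# Two frames occluded between x = 1 m and x = 4 m.
print(fill_gaps([(0.0, 0.0), (1.0, 0.0), None, None, (4.0, 0.0)]))
```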

Virtual reconstruction lets developers find potentially challenging situations and use them to train and validate the AV system with high-fidelity data, generated by physically based sensor models and by AI behavior models that can create many new scenarios. Data from the reconstructed scenario can also be used to train the behavior model.

Neural Reconstruction

The second approach relies on neural simulation rather than synthetically generating the scene: it starts with real sensor data and then modifies it.

Sensor replay, the process of playing back recorded sensor data to test the AV system's performance, is a staple of AV development. This process is open loop: the AV stack's decisions don't affect the world, because all of the data is prerecorded.

Neural reconstruction methods from NVIDIA Research, shown in preview, turn this recorded data into a fully reactive and modifiable world. In the demo, for example, the van originally recorded driving past the car was reenacted to swerve right instead. This approach enables closed-loop testing and full interaction between the AV stack and the world it's driving in.
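To make the open-loop versus closed-loop distinction concrete, here is a toy one-dimensional example in which a hypothetical policy brakes when the gap to a lead vehicle drops below 10 m. In open-loop replay the gaps come straight from a log, so braking never changes what the stack sees. In the closed-loop version, a simple world model (an assumption, standing in for the reactive reconstructed scene) updates the gap from the ego vehicle's chosen speed.

```python
from typing import Dict, List, Tuple


def policy(gap_m: float) -> str:
    """Toy AV policy: brake when the gap to the lead vehicle is under 10 m."""
    return "brake" if gap_m < 10.0 else "cruise"


def open_loop(recorded_gaps: List[float]) -> List[str]:
    """Open loop: observations come from the log; actions are never fed back."""
    return [policy(g) for g in recorded_gaps]


def closed_loop(initial_gap: float, lead_speed: float,
                ego_speeds: Dict[str, float], steps: int,
                dt: float = 1.0) -> Tuple[List[str], float]:
    """Closed loop: a toy world model integrates each action into the next observation."""
    gap, actions = initial_gap, []
    for _ in range(steps):
        action = policy(gap)
        actions.append(action)
        # The gap shrinks when the ego is faster than the lead vehicle, grows when slower.
        gap += (lead_speed - ego_speeds[action]) * dt
    return actions, gap


print(open_loop([20.0, 15.0, 10.0, 5.0, 0.0]))
print(closed_loop(20.0, 10.0, {"cruise": 15.0, "brake": 5.0}, steps=5))
```

With the recorded gaps above, open-loop replay yields `cruise, cruise, cruise, brake, brake` regardless of what the policy does. In closed loop, braking at the fourth step widens the gap, so the policy returns to cruising, which is exactly the kind of feedback that prerecorded data cannot capture.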

The process starts with recorded driving data. AI identifies the dynamic objects in the scene and removes them to create an exact replica of the 3D environment that can be rendered from new views. Dynamic objects are then reinserted into the 3D scene with realistic AI-based behaviors and physical appearance, accounting for illumination and shadows.

The AV system then drives in this virtual world, and the scene reacts accordingly. The scene can be made more complex through augmented reality by inserting other virtual objects, vehicles and pedestrians that are rendered as if they were part of the real scene and can physically interact with the environment.

Every sensor on the vehicle, including camera and lidar, can be simulated in the scene using AI.

A Virtual World of Possibilities

These new approaches are driven by NVIDIA’s expertise in rendering, graphics and AI.

As a modular platform, DRIVE Sim supports these capabilities with a foundation of deterministic simulation. It provides the vehicle dynamics, AI-based traffic models, scenario tools and a comprehensive SDK to build any tool needed.
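Deterministic simulation means the same inputs always produce the same run, which is what makes a failing scenario reproducible. One common way to get this, sketched below under the assumption that all randomness in a toy traffic model flows through a single seeded generator, is to avoid hidden global state. The `simulate` function is hypothetical and says nothing about how DRIVE Sim achieves determinism internally.

```python
import random
from typing import List


def simulate(seed: int, steps: int = 100) -> List[float]:
    """Toy traffic model: a lead vehicle's speed follows a noisy random walk."""
    rng = random.Random(seed)  # dedicated generator; no reliance on global random state
    speed = 10.0
    trace = []
    for _ in range(steps):
        speed = max(0.0, speed + rng.gauss(0.0, 0.5))  # clamp so speed never goes negative
        trace.append(speed)
    return trace


# Replaying with the same seed reproduces the run exactly.
assert simulate(seed=42) == simulate(seed=42)
```

Keeping every random draw on an explicitly seeded generator means a scenario can be logged as (inputs, seed) and replayed bit-for-bit when debugging.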

With these two powerful new AI methods, developers can easily move from the real world to the virtual one for faster AV development and deployment.

The post NVIDIA Showcases Novel AI Tools in DRIVE Sim to Advance Autonomous Vehicle Development appeared first on NVIDIA Blog.