When it comes to autonomous vehicle sensor innovation, it’s best to keep an open mind — and an open development platform.

That’s why NVIDIA DRIVE is the platform of choice for the majority of these sensors.

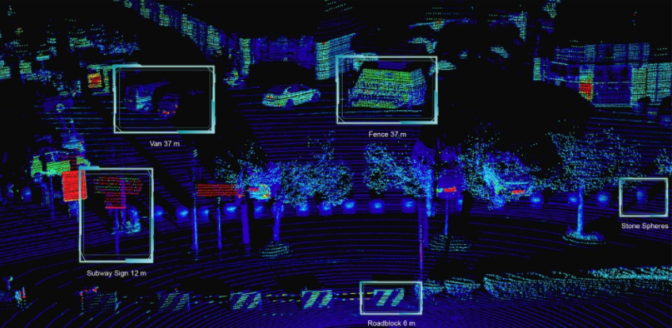

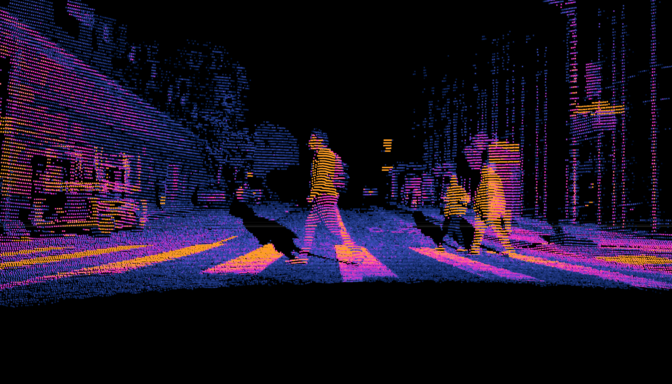

In addition to camera sensors, NVIDIA has long recognized that lidar is a crucial component of an autonomous vehicle’s perception stack. By emitting invisible laser pulses at very high rates and timing the reflections that return almost instantly, lidar sensors can paint a detailed 3D picture of their surroundings.

These signals create “point clouds” that represent a three-dimensional view of the environment, allowing lidar sensors to provide the visibility, redundancy and diversity that contribute to safe automated and autonomous driving.
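To make the time-of-flight principle concrete, here is a minimal sketch that turns individual lidar returns into 3D points. The range is half the round-trip time multiplied by the speed of light, and the beam’s azimuth and elevation angles place that range in space. The function names and angle conventions (azimuth in the horizontal plane measured from the x-axis, elevation measured up from horizontal) are illustrative assumptions, not any vendor’s data format.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


@dataclass
class Point:
    x: float
    y: float
    z: float


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def return_to_point(round_trip_s: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one return (time of flight plus beam angles) to Cartesian coordinates."""
    r = range_from_time_of_flight(round_trip_s)
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = r * math.cos(el)  # projection of the range onto the ground plane
    return Point(horizontal * math.cos(az), horizontal * math.sin(az), r * math.sin(el))


def build_point_cloud(returns: Iterable[Tuple[float, float, float]]) -> List[Point]:
    """A point cloud is simply the collection of converted returns from one sweep."""
    return [return_to_point(t, az, el) for t, az, el in returns]


if __name__ == "__main__":
    # Round-trip time for a target 200 m away (roughly 1.33 microseconds).
    t_200m = 2 * 200.0 / SPEED_OF_LIGHT_M_PER_S
    cloud = build_point_cloud([(t_200m, 0.0, 0.0), (t_200m, 90.0, 0.0), (t_200m, 0.0, 30.0)])
    for p in cloud:
        print(f"({p.x:.3f}, {p.y:.3f}, {p.z:.3f})")
```

The nanosecond-to-microsecond timescales involved are why lidar timing electronics must be extremely precise: a 1 ns timing error corresponds to roughly 15 cm of range error.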

Most recently, lidar makers Baraja, Hesai, Innoviz, Magna and Ouster have developed their offerings to run on the NVIDIA DRIVE platform to deliver robust performance and flexibility to customers.

These sensors offer differentiated capabilities for AV sensing, from Innoviz’s lightweight, affordable and long-range solid-state lidar to Baraja’s long-wavelength, long-range sensors.

“The open and flexible NVIDIA DRIVE platform is a game changer in allowing seamless integration of Innoviz lidar sensors in endless new and exciting opportunities,” said Innoviz CEO Omer Keilaf.

Ouster’s OS series of sensors offers high resolution as well as programmable fields of view to address autonomous driving use cases. The sensors also provide a camera-like image, produced by Ouster’s digital lidar system-on-a-chip, for greater perception capabilities.

Hesai’s latest Pandar128 sensor offers a 360-degree horizontal field of view with a detection range from 0.3 to 200 meters. In the vertical field of view, it uses denser beams to allow for high resolution in a focused region of interest. The 0.3-meter minimum range shrinks the blind spot immediately around and in front of the sensor.
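Why denser beams matter at long range can be shown with small-angle arithmetic: adjacent beams separated by an angle Δθ land roughly r·Δθ apart at distance r. The sketch below compares two hypothetical vertical beam spacings (0.1° and 1.0°, chosen for illustration and not taken from any Pandar128 datasheet) for a 1.7 m pedestrian at 200 m. It assumes uniform spacing across the target and a target facing the sensor.

```python
import math


def beam_gap_m(range_m: float, spacing_deg: float) -> float:
    """Approximate vertical gap between adjacent beams at a given range.

    Uses the small-angle (arc-length) approximation: gap ~= range * angle in radians.
    """
    return range_m * math.radians(spacing_deg)


def guaranteed_beams_on_target(target_height_m: float, range_m: float, spacing_deg: float) -> int:
    """Minimum number of beams that must hit a target of this height, however it is aligned.

    Any interval of length h contains at least floor(h / gap) evenly spaced beam lines.
    """
    return math.floor(target_height_m / beam_gap_m(range_m, spacing_deg))


if __name__ == "__main__":
    for spacing in (0.1, 1.0):  # hypothetical dense vs. sparse vertical spacings
        gap = beam_gap_m(200.0, spacing)
        hits = guaranteed_beams_on_target(1.7, 200.0, spacing)
        print(f"{spacing:.1f} deg: {gap:.2f} m gap at 200 m, at least {hits} beam(s) on a 1.7 m pedestrian")
```

With 1.0° spacing the beams are about 3.5 m apart at 200 m, so a pedestrian can fall entirely between them; 0.1° spacing guarantees at least four returns. Concentrating beams in a region of interest buys that density where it matters without paying for it across the whole vertical field of view.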

“The Hesai Pandar128’s resolution and detection range enable object detection at greater distances, making it an ideal solution for highly automated and autonomous driving systems,” said David Li, co-founder and CEO of Hesai. “Integrating our sensor with NVIDIA’s industry-leading DRIVE platform will provide an efficient pathway for AV developers.”

With the addition of these companies, the NVIDIA DRIVE ecosystem addresses every autonomous vehicle development need with verified hardware.

Plug and Drive

Typically, AV developers experiment with different variations of a sensor suite, modifying the number, type and placement of sensors. These configurations are necessary to continuously improve a vehicle’s capabilities and test new features.

An open, flexible compute platform can facilitate these iterations for effective autonomous vehicle development. And the industry agrees, with more than 60 sensor makers — from camera suppliers such as Sony, to radar makers like Continental, to thermal sensing companies such as FLIR — choosing to develop their products with the NVIDIA DRIVE AGX platform.

Along with the compute platform, NVIDIA provides the infrastructure to evaluate chosen sensor configurations in NVIDIA DRIVE Sim, an open simulation platform with plug-ins for third-party sensor models. As an end-to-end autonomous vehicle solutions provider, NVIDIA has long been a close partner to the leading sensor manufacturers.

“Ouster’s flexible digital lidar platform, including the new OS0-128 and OS2-128 lidar sensors, gives customers a wide variety of choices in range, resolution and field of view to fit in nearly any application that needs high-performance, low-cost 3D imaging,” said Angus Pacala, CEO of Ouster. “With Ouster as a member of the NVIDIA DRIVE ecosystem, our customers can plug and play our sensors easier than ever.”

Laser Focused

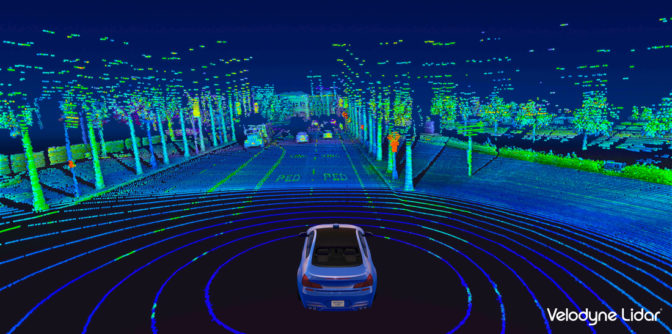

The NVIDIA DRIVE ecosystem includes a diverse set of lidar manufacturers — including Velodyne and Luminar as well as tier-1 suppliers — specializing in different features such as wavelength, signaling technique, field of view, range and resolution. This variety gives users flexibility as well as room for customization for their specific autonomous driving application.

The NVIDIA DriveWorks software development kit includes a sensor abstraction layer that provides a simple and unified interface that streamlines the bring-up process for new sensors on the platform. The interface saves valuable time and effort for developers as they test and validate different sensor configurations.
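The value of a sensor abstraction layer is that downstream code depends on one interface rather than on each vendor’s driver, so swapping or adding a sensor becomes a configuration change. The sketch below illustrates that general pattern only; it does not reproduce the DriveWorks API, and every name in it (`LidarSensor`, `register_driver`, `create_sensor`, `build_rig` and the two stub vendor drivers) is hypothetical.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Point3 = Tuple[float, float, float]


@dataclass
class LidarFrame:
    """One sweep of points (in metres) from a single sensor."""
    sensor_id: str
    timestamp_us: int
    points: List[Point3] = field(default_factory=list)


class LidarSensor(ABC):
    """Hypothetical unified interface that every vendor driver implements."""

    def __init__(self, sensor_id: str) -> None:
        self.sensor_id = sensor_id
        self.running = False

    def start(self) -> None:
        self.running = True

    def stop(self) -> None:
        self.running = False

    def read_frame(self) -> LidarFrame:
        if not self.running:
            raise RuntimeError(f"{self.sensor_id} has not been started")
        return self._read_vendor_frame()

    @abstractmethod
    def _read_vendor_frame(self) -> LidarFrame:
        """Vendor-specific decoding lives here, hidden from callers."""


# Maps a protocol name from a rig configuration to the driver class that handles it.
_DRIVERS: Dict[str, Callable[[str], LidarSensor]] = {}


def register_driver(protocol: str):
    """Class decorator that makes a driver selectable by protocol name."""
    def decorator(cls):
        _DRIVERS[protocol] = cls
        return cls
    return decorator


def create_sensor(protocol: str, sensor_id: str) -> LidarSensor:
    if protocol not in _DRIVERS:
        raise ValueError(f"no driver registered for protocol {protocol!r}")
    return _DRIVERS[protocol](sensor_id)


@register_driver("vendor_a")
class VendorALidar(LidarSensor):
    """Stub: a real driver would decode this vendor's network packets."""

    def _read_vendor_frame(self) -> LidarFrame:
        return LidarFrame(self.sensor_id, 0, [(10.0, 0.0, 0.5)])


@register_driver("vendor_b")
class VendorBLidar(LidarSensor):
    """Stub for a second vendor with a different native format."""

    def _read_vendor_frame(self) -> LidarFrame:
        # Pretend this vendor reports millimetres; normalise to metres for callers.
        raw_mm = [(5000.0, 2000.0, 0.0), (8000.0, -1000.0, 300.0)]
        points = [(x / 1000.0, y / 1000.0, z / 1000.0) for x, y, z in raw_mm]
        return LidarFrame(self.sensor_id, 0, points)


def build_rig(config: List[Tuple[str, str]]) -> List[LidarSensor]:
    """Instantiate and start every sensor listed in a (protocol, sensor_id) config."""
    sensors = [create_sensor(protocol, sensor_id) for protocol, sensor_id in config]
    for sensor in sensors:
        sensor.start()
    return sensors


if __name__ == "__main__":
    rig = build_rig([("vendor_a", "roof"), ("vendor_b", "front_bumper")])
    for sensor in rig:
        frame = sensor.read_frame()
        print(frame.sensor_id, len(frame.points), frame.points[0])
```

Trying a different sensor configuration then means editing the list passed to `build_rig`; the perception code that calls `read_frame()` stays unchanged.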

“To achieve maximum autonomous vehicle safety, a combination of sensor technologies including radar and lidar is required to see in all conditions. NVIDIA’s open ecosystem approach allows OEMs and tier 1s to select the safest and most cost-effective sensor configurations for each application,” said Jim McGregor, principal analyst at Tirias Research.

The NVIDIA DRIVE ecosystem gives autonomous vehicle developers the flexibility to select the right types of sensors for their vehicles, as well as iterate on configurations for different levels of autonomy. As an open AV platform with premier choices, NVIDIA DRIVE puts the automaker in the driver’s seat.

The post A Sense of Responsibility: Lidar Sensor Makers Build on NVIDIA DRIVE appeared first on The Official NVIDIA Blog.