Editor’s note: This is the latest post in our NVIDIA DRIVE Labs series, which takes an engineering-focused look at individual autonomous vehicle challenges and how NVIDIA DRIVE addresses them. Catch up on all of our automotive posts here.

Even with advanced driver assistance systems automating more driving functions, human drivers must maintain their attention at the wheel and build trust in the AI system.

Traditional driver monitoring systems typically don’t understand the subtle cues, such as a driver’s cognitive state, behavior or other activity, that indicate whether they’re ready to take over the driving controls.

NVIDIA DRIVE IX is an open, scalable cockpit software platform that provides AI functions to enable a full range of in-cabin experiences, including intelligent visualization with augmented reality and virtual reality, conversational AI and interior sensing.

Driver perception is a key aspect of the platform that enables the AV system to ensure a driver is alert and paying attention to the road. It also enables the AI system to perform cockpit functions that are more intuitive and intelligent.

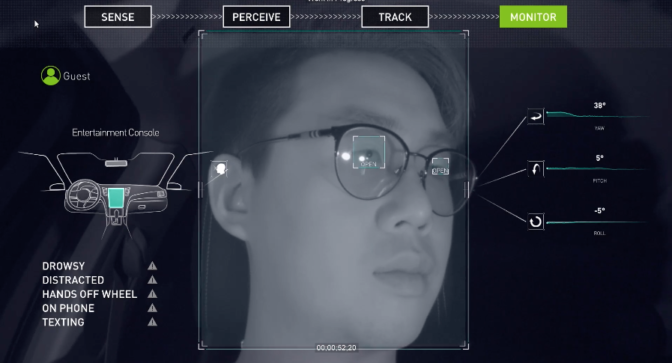

In this DRIVE Labs episode, NVIDIA experts demonstrate how DRIVE IX perceives driver attention, activity, emotion, behavior, posture, speech, gesture and mood with a variety of detection capabilities.

A Multi-DNN Approach

Facial expressions are complex signals to interpret. A simple wrinkle of the brow or shift of the gaze can have a variety of meanings.

DRIVE IX uses multiple DNNs to recognize faces and decipher the expressions of vehicle occupants. The first DNN detects the face itself, while a second identifies fiducial points, or reference markings, such as the location of the eyes and nose.

On top of these base networks, a variety of DNNs operate to determine whether a driver is paying attention or requires other actions from the AI system.
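The post does not describe DRIVE IX’s interfaces, but the layering it outlines, where base face-detection and fiducial-point networks run first and task-specific DNNs consume their outputs, can be sketched in Python. Everything below is hypothetical: `run_cascade`, `FaceObservation` and the stub lambdas stand in for real networks and are not part of any NVIDIA API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

# Hypothetical types; DRIVE IX's real data structures are not described in the post.
BBox = Tuple[int, int, int, int]             # (x, y, width, height) in pixels
Landmarks = Dict[str, Tuple[float, float]]   # fiducial point name -> (x, y)


@dataclass
class FaceObservation:
    box: BBox
    landmarks: Landmarks


def run_cascade(
    frame: object,
    detect_face: Callable[[object], Optional[BBox]],
    find_fiducials: Callable[[object, BBox], Landmarks],
    heads: Dict[str, Callable[[object, FaceObservation], object]],
) -> Dict[str, object]:
    """Run the base networks once, then fan out to task-specific heads."""
    box = detect_face(frame)                 # stage 1: face detection
    if box is None:
        return {}                            # no face found: downstream heads cannot run
    landmarks = find_fiducials(frame, box)   # stage 2: fiducial points
    face = FaceObservation(box, landmarks)
    # stage 3: every downstream DNN reuses the same base outputs
    return {name: head(frame, face) for name, head in heads.items()}


# Usage with stub "networks" in place of real models.
results = run_cascade(
    frame=None,
    detect_face=lambda f: (100, 80, 120, 120),
    find_fiducials=lambda f, b: {"left_eye": (140.0, 120.0),
                                 "right_eye": (180.0, 120.0),
                                 "nose": (160.0, 150.0)},
    heads={"eye_distance": lambda f, face: face.landmarks["right_eye"][0]
                                           - face.landmarks["left_eye"][0]},
)
print(results)  # {'eye_distance': 40.0}
```

Running the base networks once per frame and sharing their outputs is what lets many downstream heads run without each repeating face detection.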

The GazeNet DNN tracks gaze by estimating the driver’s gaze vector and mapping it onto the road to check whether they can see obstacles ahead. SleepNet monitors drowsiness by classifying whether the eyes are open or closed and running the results through a state machine to determine the level of exhaustion. Finally, ActivityNet tracks driver activity such as phone usage, hands on or off the wheel and attention to road events. DRIVE IX can also detect whether the driver is sitting properly in their seat to focus on road events.
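As a rough illustration of how per-frame eyes-open/closed classifications could drive a drowsiness state machine, here is a minimal Python sketch. It assumes a PERCLOS-style measure (the fraction of recent frames with eyes closed) plus a count of consecutive closed frames; the `Drowsiness` states, the class name and every threshold are illustrative assumptions, not how SleepNet is actually implemented.

```python
from collections import deque
from enum import Enum


class Drowsiness(Enum):
    ALERT = 0
    DROWSY = 1
    ASLEEP = 2


class DrowsinessStateMachine:
    """Hypothetical sketch: per-frame eye states in, exhaustion level out.

    Thresholds are illustrative placeholders, not values used by DRIVE IX.
    """

    def __init__(self, window=30, drowsy_ratio=0.4, recover_ratio=0.2, asleep_run=15):
        self.history = deque(maxlen=window)  # recent frames, True = eyes closed
        self.drowsy_ratio = drowsy_ratio     # closed fraction that triggers DROWSY
        self.recover_ratio = recover_ratio   # closed fraction needed to return to ALERT
        self.asleep_run = asleep_run         # consecutive closed frames that mean ASLEEP
        self.closed_run = 0
        self.state = Drowsiness.ALERT

    def update(self, eyes_closed: bool) -> Drowsiness:
        self.history.append(eyes_closed)
        self.closed_run = self.closed_run + 1 if eyes_closed else 0
        closed_ratio = sum(self.history) / len(self.history)

        if self.closed_run >= self.asleep_run:
            self.state = Drowsiness.ASLEEP
        elif self.state is Drowsiness.ASLEEP:
            # Eyes just reopened; step down to DROWSY rather than straight to ALERT.
            self.state = Drowsiness.DROWSY
        elif self.state is Drowsiness.DROWSY and closed_ratio < self.recover_ratio:
            self.state = Drowsiness.ALERT
        elif self.state is Drowsiness.ALERT and closed_ratio >= self.drowsy_ratio:
            self.state = Drowsiness.DROWSY
        return self.state
```

Using separate `drowsy_ratio` and `recover_ratio` thresholds adds hysteresis, so the reported level does not flicker when the closed-eye ratio hovers near a single cutoff.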

In addition to driver focus, a separate DNN can determine a driver’s emotions, a key indicator of their ability to safely operate the vehicle. Taking in data from the base face-detection and fiducial-point networks, DRIVE IX can classify a driver’s state as happy, surprised, neutral, disgusted or angry.

It can also tell if the driver is squinting or screaming, which can indicate reduced visibility, a change in alertness or the driver’s state of mind.
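The last step of a classifier like this is commonly a softmax over one score per class. The sketch below assumes a hypothetical emotion head that emits five raw scores in the order of the classes named in the post; it illustrates that generic step and is not DRIVE IX code.

```python
import math
from typing import List, Sequence, Tuple

# The five classes named in the post; this ordering is an assumption.
EMOTIONS = ("happy", "surprised", "neutral", "disgusted", "angry")


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)                           # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_emotion(logits: Sequence[float]) -> Tuple[str, float]:
    """Map raw scores from a hypothetical emotion head to (label, probability)."""
    if len(logits) != len(EMOTIONS):
        raise ValueError(f"expected {len(EMOTIONS)} scores, got {len(logits)}")
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]


label, prob = classify_emotion([0.2, 0.1, 2.5, -1.0, 0.3])
print(label)  # neutral
```

Returning the probability alongside the label lets downstream cockpit logic ignore low-confidence predictions instead of reacting to every frame.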

A Customizable Solution

Vehicle manufacturers can leverage the driver monitoring capabilities in DRIVE IX to develop advanced AI-based driver understanding features that personalize the car cockpit.

The car can be programmed to alert a driver if their attention drifts from the road, or the cabin can adjust settings to soothe occupants if tensions are high.
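One simple way to turn gaze estimates into an attention alert like this is to measure the angle between the gaze vector and the road direction, then alert when the gaze stays outside a cone for too long. The Python sketch below assumes a vehicle coordinate frame, a 20° cone and a 2-second budget. `DistractionMonitor`, `ROAD_AHEAD` and both thresholds are hypothetical illustrations, not DRIVE IX parameters.

```python
import math
from dataclasses import dataclass, field
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

# Assumed vehicle frame: +x points forward along the road, +y left, +z up.
ROAD_AHEAD: Vec3 = (1.0, 0.0, 0.0)


def angle_deg(a: Vec3, b: Vec3) -> float:
    """Angle between two 3D vectors in degrees."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("gaze vector must be non-zero")
    cos = max(-1.0, min(1.0, dot / norm))    # clamp against floating-point drift
    return math.degrees(math.acos(cos))


@dataclass
class DistractionMonitor:
    """Hypothetical policy: alert if the gaze stays off the road too long."""
    max_off_road_deg: float = 20.0   # illustrative cone around the road direction
    max_off_road_s: float = 2.0      # illustrative time budget before alerting
    _off_since: Optional[float] = field(default=None, init=False)

    def update(self, t: float, gaze: Vec3) -> bool:
        """Return True if an alert should be raised at time t (seconds)."""
        if angle_deg(gaze, ROAD_AHEAD) <= self.max_off_road_deg:
            self._off_since = None           # eyes back on the road: reset the timer
            return False
        if self._off_since is None:
            self._off_since = t              # first off-road sample starts the timer
        return t - self._off_since >= self.max_off_road_s
```

Timing the excursion, rather than alerting on a single off-road frame, tolerates normal mirror checks while still catching sustained distraction.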

And these capabilities extend well beyond driver monitoring. The aforementioned DNNs, together with a gesture DNN and speech capabilities, enable multi-modal conversational AI offerings such as automatic speech recognition, natural language processing and speech synthesis.

These networks can be used for in-cabin personalization and virtual assistant applications. Additionally, the base facial recognition and facial key point models can be used for AI-based video conferencing platforms.

The driver monitoring capabilities of DRIVE IX help build trust between occupants and the AI system as automated driving technology develops, creating a safer, more enjoyable intelligent vehicle experience.

The post A Trusted Companion: AI Software Keeps Drivers Safe and Focused on the Road Ahead appeared first on The Official NVIDIA Blog.