Creating robots that exhibit robust and dynamic locomotion capabilities, similar to animals or humans, has been a long-standing goal in the robotics community. In addition to completing tasks quickly and efficiently, agility allows legged robots to move through complex environments that are otherwise difficult to traverse. Researchers at Google have been pursuing agility for multiple years and across various form factors. Yet, while researchers have enabled robots to hike or jump over some obstacles, there is still no generally accepted benchmark that comprehensively measures robot agility or mobility. By contrast, benchmarks such as ImageNet for computer vision and OpenAI Gym for reinforcement learning (RL) have been driving forces behind the development of machine learning.

In “Barkour: Benchmarking Animal-level Agility with Quadruped Robots”, we introduce the Barkour agility benchmark for quadruped robots, along with a Transformer-based generalist locomotion policy. Inspired by dog agility competitions, a legged robot must sequentially display a variety of skills, including moving in different directions, traversing uneven terrains, and jumping over obstacles, within a limited timeframe to successfully complete the benchmark. By providing a diverse and challenging obstacle course, the Barkour benchmark encourages researchers to develop locomotion controllers that move fast in a controllable and versatile way. Furthermore, by tying the performance metric to real dog performance, we provide an intuitive metric for understanding robot performance relative to that of their animal counterparts.

We invited a handful of dooglers to try the obstacle course to ensure that our agility objectives were realistic and challenging. Small dogs complete the obstacle course in approximately 10 seconds, whereas our robot’s typical performance hovers around 20 seconds.

Barkour benchmark

The Barkour scoring system uses per-obstacle and overall course target times based on the target speed of small dogs in novice agility competitions (about 1.7 m/s). Barkour scores range from 0 to 1, with 1 corresponding to the robot successfully traversing all the obstacles along the course within the allotted time of approximately 10 seconds, the average time needed for a similar-sized dog to traverse the course. The robot receives penalties for skipping or failing obstacles, or for moving too slowly.
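To make the scoring rule concrete, here is a minimal Python sketch. The post states only that scores lie between 0 and 1, that the target time follows from a target speed of about 1.7 m/s, and that skipped or failed obstacles and slow runs are penalized. The penalty weights (`OBSTACLE_PENALTY`, `OVERTIME_PENALTY_PER_S`), the 17 m course length, and the function names are illustrative assumptions, not the benchmark's official definition.

```python
# Illustrative assumptions; these weights are not given in the post.
OBSTACLE_PENALTY = 0.1          # deducted per skipped or failed obstacle
OVERTIME_PENALTY_PER_S = 0.01   # deducted per second over the target time
TARGET_SPEED_MPS = 1.7          # novice small-dog target speed (from the post)


def target_time_s(course_length_m: float, speed_mps: float = TARGET_SPEED_MPS) -> float:
    """Allotted time for a course of the given (hypothetical) path length."""
    return course_length_m / speed_mps


def barkour_score(failed_or_skipped: int, run_time_s: float, allotted_s: float) -> float:
    """Start from a perfect 1.0, subtract penalties, and clamp to [0, 1]."""
    overtime_s = max(0.0, run_time_s - allotted_s)
    score = (1.0
             - OBSTACLE_PENALTY * failed_or_skipped
             - OVERTIME_PENALTY_PER_S * overtime_s)
    return min(1.0, max(0.0, score))


# Example: an assumed 17 m path gives an allotted time of about 10 s.
allotted = target_time_s(17.0)
clean_but_slow = barkour_score(failed_or_skipped=0, run_time_s=20.0, allotted_s=allotted)
```

Under these assumed weights, a run that clears every obstacle but takes about twice the allotted time would still score roughly 0.9, while each skipped or failed obstacle costs a fixed amount regardless of speed.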

Our standard course consists of four unique obstacles in a 5 m × 5 m area. This is a denser and smaller setup than a typical dog competition to allow for easy deployment in a robotics lab. Beginning at the start table, the robot needs to weave through a set of poles, climb an A-frame, clear a 0.5 m broad jump and then step onto the end table. We chose this subset of obstacles because they test a diverse set of skills while keeping the setup within a small footprint. As is the case for real dog agility competitions, the Barkour benchmark can be easily adapted to a larger course area and may incorporate a variable number of obstacles and course configurations.

Learning agile locomotion skills

The Barkour benchmark features a diverse set of obstacles and a delayed reward system, which pose a significant challenge when training a single policy that can complete the entire obstacle course. To set a strong performance baseline and demonstrate the effectiveness of the benchmark for robotic agility research, we adopt a student-teacher framework combined with a zero-shot sim-to-real approach. First, we train individual specialist locomotion skills (teachers) for different obstacles using on-policy RL methods. In particular, we leverage recent advances in large-scale parallel simulation to equip the robot with individual skills, including walking, slope climbing, and jumping policies.

Next, we train a single policy (student) that performs all the skills and transitions in between by using a student-teacher framework, based on the specialist skills we previously trained. We use simulation rollouts to create datasets of state-action pairs for each one of the specialist skills. This dataset is then distilled into a single Transformer-based generalist locomotion policy, which can handle various terrains and adjust the robot’s gait based on the perceived environment and the robot’s state.
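The distillation step can be illustrated with a toy behavior-cloning sketch. Everything here is a stand-in chosen for brevity: the two "teachers" are hypothetical closed-form functions rather than RL-trained specialists, the observation is a single number, and a linear student trained by stochastic gradient descent replaces the Transformer. Only the overall recipe (roll out each teacher, pool the state-action pairs, fit one student) follows the text.

```python
import random

SKILLS = ("walk", "jump")  # hypothetical subset of the specialist skills


def walk_teacher(obs):
    return 0.5 * obs  # stand-in for a trained walking specialist


def jump_teacher(obs):
    return 2.0 - obs  # stand-in for a trained jumping specialist


TEACHERS = {"walk": walk_teacher, "jump": jump_teacher}


def features(obs, skill):
    """Per-skill slope and bias features, so one linear student fits all teachers."""
    row = []
    for name in SKILLS:
        active = 1.0 if name == skill else 0.0
        row += [obs * active, active]
    return row


def collect_dataset(samples_per_skill, rng):
    """Roll out each teacher and record (features, action) pairs."""
    dataset = []
    for skill, teacher in TEACHERS.items():
        for _ in range(samples_per_skill):
            obs = rng.uniform(-1.0, 1.0)
            dataset.append((features(obs, skill), teacher(obs)))
    return dataset


def distill(dataset, epochs=50, lr=0.1, seed=0):
    """Fit a single student to the pooled data by SGD on half the squared error."""
    rng = random.Random(seed)
    data = list(dataset)  # copy so the caller's list is not reordered
    weights = [0.0] * (2 * len(SKILLS))
    for _ in range(epochs):
        rng.shuffle(data)
        for x, target in data:
            error = sum(w * xi for w, xi in zip(weights, x)) - target
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return weights


def student(weights, obs, skill):
    """Action predicted by the distilled student."""
    return sum(w * xi for w, xi in zip(weights, features(obs, skill)))


weights = distill(collect_dataset(100, random.Random(0)))
```

After training, `student(weights, obs, skill)` should closely reproduce whichever teacher generated the data for that skill, which is the property the generalist policy needs before it can replace the specialists.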

During deployment, we pair the locomotion transformer policy, which is capable of performing multiple skills, with a navigation controller that provides velocity commands based on the robot’s position. Our trained policy controls the robot based on the robot’s surroundings represented as an elevation map, the velocity commands, and the robot’s on-board sensory information.

Deployment pipeline for the locomotion transformer architecture. At deployment time, a high-level navigation controller guides the real robot through the obstacle course by sending commands to the locomotion transformer policy.
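A high-level navigation controller of this kind can be sketched as a simple waypoint follower. The post says only that the controller issues velocity commands based on the robot's position; the proportional steering law, the gains, the reach radius, and the function names below are assumptions made for illustration.

```python
import math


def velocity_command(position, heading, waypoint, max_speed=1.7, turn_gain=2.0):
    """Return (forward speed in m/s, yaw rate in rad/s) toward a waypoint.

    position and waypoint are (x, y) tuples in meters; heading is in radians.
    """
    dx = waypoint[0] - position[0]
    dy = waypoint[1] - position[1]
    distance = math.hypot(dx, dy)
    desired_heading = math.atan2(dy, dx)
    # Wrap the heading error into [-pi, pi] so the robot turns the short way.
    diff = desired_heading - heading
    heading_error = math.atan2(math.sin(diff), math.cos(diff))
    # Slow down near the waypoint and when facing away from it.
    forward = min(max_speed, distance) * max(0.0, math.cos(heading_error))
    yaw_rate = turn_gain * heading_error
    return forward, yaw_rate


def advance_waypoint(position, waypoints, index, reach_radius=0.3):
    """Move on to the next waypoint once the current one is within reach."""
    while index < len(waypoints) - 1:
        wx, wy = waypoints[index]
        if math.hypot(wx - position[0], wy - position[1]) >= reach_radius:
            break
        index += 1
    return index
```

In a control loop, the index returned by `advance_waypoint` selects the waypoint passed to `velocity_command`, and the resulting command is what the locomotion policy would receive alongside the elevation map and sensor readings.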

Robustness and repeatability are difficult to achieve when we aim for peak performance and maximum speed. Sometimes, the robot might fail when overcoming an obstacle in an agile way. To handle failures we train a recovery policy that quickly gets the robot back on its feet, allowing it to continue the episode.

Evaluation

We evaluate the Transformer-based generalist locomotion policy using custom-built quadruped robots and show that by optimizing for the proposed benchmark, we obtain agile, robust, and versatile skills for our robot in the real world. We further provide analysis for various design choices in our system and their impact on the system performance.

Model of the custom-built robots used for evaluation.

We deploy both the specialist and generalist policies to hardware (zero-shot sim-to-real). The robot’s target trajectory is provided by a set of waypoints along the various obstacles. In the case of the specialist policies, we switch between specialist policies by using a hand-tuned policy switching mechanism that selects the most suitable policy given the robot’s position.
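A position-based switching rule of this sort might look like the sketch below. The region boundaries, the mapping from obstacles to specialists, and all names are hypothetical; the post does not describe the hand-tuned mechanism beyond its dependence on the robot's position.

```python
from typing import NamedTuple


class Region(NamedTuple):
    """Axis-aligned area of the course (meters) and the specialist used inside it."""
    name: str
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    policy: str


# Hypothetical layout inside a 5 m x 5 m course; coordinates are made up.
COURSE_REGIONS = [
    Region("weave_poles", 0.5, 4.5, 0.0, 1.5, "walk"),
    Region("a_frame", 3.0, 5.0, 1.5, 3.5, "slope_climb"),
    Region("broad_jump", 0.5, 3.0, 3.5, 5.0, "jump"),
]


def select_policy(x, y, regions=COURSE_REGIONS, default="walk"):
    """Pick the specialist for the first region containing (x, y)."""
    for region in regions:
        if region.x_min <= x <= region.x_max and region.y_min <= y <= region.y_max:
            return region.policy
    return default
```

A lookup like this switches policies abruptly at region boundaries, which is one reason a single generalist policy can produce smoother transitions between behaviors.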

Typical performance of our agile locomotion policies on the Barkour benchmark. Our custom-built quadruped robot robustly navigates the terrain’s obstacles by leveraging various skills learned using RL in simulation.

We find that our policies can very often handle unexpected events or even hardware degradation, resulting in good average performance, but failures are still possible. As the example below illustrates, when a failure occurs, our recovery policy quickly gets the robot back on its feet, allowing it to continue the episode. By combining the recovery policy with a simple walk-back-to-start policy, we are able to run repeated experiments with minimal human intervention to measure robustness.

Qualitative example of robustness and recovery behaviors. The robot trips and rolls over after heading down the A-frame. This triggers the recovery policy, which enables the robot to get back up and continue the course.
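The interplay between the course policy, the recovery policy, and the walk-back-to-start policy can be sketched as a small state machine. The tilt-based fall detector, its 60-degree threshold, and the class and mode names are assumptions; the post does not say how a fall is detected or how the policies are sequenced internally.

```python
import math
from enum import Enum


class Mode(Enum):
    COURSE = "course"        # locomotion policy runs the obstacle course
    RECOVERY = "recovery"    # recovery policy gets the robot back on its feet
    WALK_BACK = "walk_back"  # simple policy returns the robot to the start table


FALL_TILT_RAD = math.radians(60.0)  # assumed threshold for "rolled over"


class Supervisor:
    """Chooses which policy is active at each control step."""

    def __init__(self):
        self.mode = Mode.COURSE
        self._resume = Mode.COURSE  # mode to return to after recovering

    def step(self, roll, pitch, course_done=False, at_start=False):
        fallen = max(abs(roll), abs(pitch)) > FALL_TILT_RAD
        if fallen:
            if self.mode is not Mode.RECOVERY:
                self._resume = self.mode  # remember what was interrupted
            self.mode = Mode.RECOVERY
        elif self.mode is Mode.RECOVERY:
            self.mode = self._resume      # upright again: continue the episode
        elif self.mode is Mode.COURSE and course_done:
            self.mode = Mode.WALK_BACK
        elif self.mode is Mode.WALK_BACK and at_start:
            self.mode = Mode.COURSE       # begin the next repetition
        return self.mode
```

Remembering the interrupted mode lets a fall during the walk back to the start resume the walk back rather than restart the course, which keeps repeated runs going without a person stepping in.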

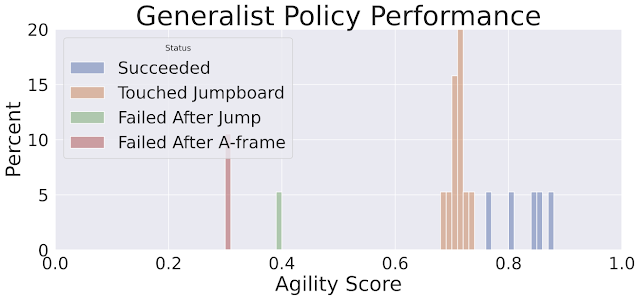

We find that across a large number of evaluations, the single generalist locomotion transformer policy and the specialist policies with the policy switching mechanism achieve similar performance. The locomotion transformer policy has a slightly lower average Barkour score, but exhibits smoother transitions between behaviors and gaits.

Measuring robustness of the different policies across a large number of runs on the Barkour benchmark.

Histogram of the agility scores for the locomotion transformer policy. The highest scores, shown in blue (0.75 to 0.9), represent the runs where the robot successfully completes all obstacles.

Conclusion

We believe that developing a benchmark for legged robotics is an important first step in quantifying progress toward animal-level agility. To establish a strong baseline, we investigated a zero-shot sim-to-real approach, taking advantage of large-scale parallel simulation and recent advancements in training Transformer-based architectures. Our findings demonstrate that Barkour is a challenging benchmark that can be easily customized, and that our learning-based method for solving the benchmark provides a quadruped robot with a single low-level policy that can perform a variety of agile skills.

Acknowledgments

The authors of this post are now part of Google DeepMind. We would like to thank our co-authors at Google DeepMind and our collaborators at Google Research: Wenhao Yu, J. Chase Kew, Tingnan Zhang, Daniel Freeman, Kuang-Hei Lee, Lisa Lee, Stefano Saliceti, Vincent Zhuang, Nathan Batchelor, Steven Bohez, Federico Casarini, Jose Enrique Chen, Omar Cortes, Erwin Coumans, Adil Dostmohamed, Gabriel Dulac-Arnold, Alejandro Escontrela, Erik Frey, Roland Hafner, Deepali Jain, Yuheng Kuang, Edward Lee, Linda Luu, Ofir Nachum, Ken Oslund, Jason Powell, Diego Reyes, Francesco Romano, Feresteh Sadeghi, Ron Sloat, Baruch Tabanpour, Daniel Zheng, Michael Neunert, Raia Hadsell, Nicolas Heess, Francesco Nori, Jeff Seto, Carolina Parada, Vikas Sindhwani, Vincent Vanhoucke, and Jie Tan. We would also like to thank Marissa Giustina, Ben Jyenis, Gus Kouretas, Nubby Lee, James Lubin, Sherry Moore, Thinh Nguyen, Krista Reymann, Satoshi Kataoka, Trish Blazina, and the members of the robotics team at Google DeepMind for their contributions to the project.