Posted by Khanh LeViet, TensorFlow Developer Advocate

Sound classification is a machine learning task in which a model takes a sound as input and assigns it to one of a set of predefined categories, such as a dog barking or a car horn. Sound classification already has many applications, including detecting illegal deforestation and detecting the sounds of humpback whales to better understand their natural behaviors.

We are excited to announce that Teachable Machine now allows you to train your own sound classification model and export it in the TensorFlow Lite (TFLite) format. You can then integrate the TFLite model into your mobile applications or IoT devices. This is an easy way to quickly get up and running with sound classification, and you can then explore building production models in Python and exporting them to TFLite as a next step.

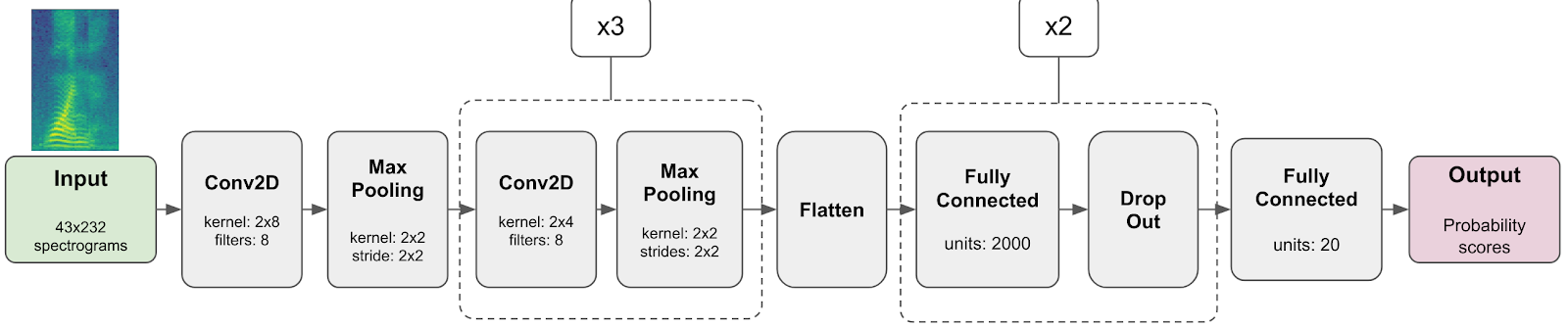

Model architecture

The model that Teachable Machine uses to classify 1-second audio samples is a small convolutional neural network. As the diagram above illustrates, the model receives a spectrogram (2D time-frequency representation of sound obtained through Fourier transform). It first processes the spectrogram with successive layers of 2D convolution (Conv2D) and max pooling layers. The model ends in a number of dense (fully-connected) layers, which are interleaved with dropout layers for the purpose of reducing overfitting during training. The final output of the model is an array of probability scores, one for each class of sound the model is trained to recognize.
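
To make the spectrogram input concrete, here is a minimal sketch in plain Python of how a waveform can be turned into a 2D time-frequency array with a short-time Fourier transform. It is only an illustration: the sample rate, frame size, hop and Hann window are assumptions chosen for the example, not the settings Teachable Machine uses, and a real pipeline would use an FFT library rather than this naive DFT.

```python
import cmath
import math


def frame_magnitudes(frame):
    """Magnitudes of the DFT of one frame (naive O(n^2), non-negative frequencies only)."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        total = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(total))
    return mags


def spectrogram(samples, frame_size, hop):
    """Slice the signal into overlapping frames and take the DFT magnitude of each.

    Returns one row per time step; each row has frame_size // 2 + 1 frequency bins.
    """
    rows = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        # A Hann window tapers the frame edges to reduce spectral leakage.
        windowed = [
            s * (0.5 - 0.5 * math.cos(2 * math.pi * i / (frame_size - 1)))
            for i, s in enumerate(frame)
        ]
        rows.append(frame_magnitudes(windowed))
    return rows


# Assumed values for the demo only.
SAMPLE_RATE = 8000
FRAME_SIZE = 64
TONE_HZ = 1000

# A pure 1 kHz tone should light up a single frequency bin in every time step.
samples = [math.sin(2 * math.pi * TONE_HZ * t / SAMPLE_RATE) for t in range(1024)]
spec = spectrogram(samples, frame_size=FRAME_SIZE, hop=32)

peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / FRAME_SIZE
print(len(spec), "time steps x", len(spec[0]), "frequency bins; peak at", peak_hz, "Hz")
```

Each row of `spec` is one time step and each column one frequency band, which is the kind of 2D input the convolution layers operate on.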

You can find a tutorial to train your own sound classification models using this approach in Python here.
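
The final output described above, one probability score per class, is typically turned into a single prediction by taking the highest-scoring class and ignoring it when the score is too low. The sketch below shows that step in plain Python. The softmax function, the label names, the raw scores and the 0.5 threshold are all assumptions made for illustration; the post does not state which output activation or threshold the model uses.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def top_label(labels, probabilities, threshold=0.5):
    """Return (label, probability) for the best class, or None if below the threshold."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    if probabilities[best] < threshold:
        return None
    return labels[best], probabilities[best]


# Hypothetical labels and raw scores, for illustration only.
labels = ["Background Noise", "Clap", "Whistle"]
raw_scores = [0.2, 2.5, 0.1]

probs = softmax(raw_scores)
print(top_label(labels, probs))
```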

Train a model using your own dataset

There are two ways to train a sound classification model using your own dataset:

- Simple way: Use Teachable Machine to collect training data and train the model all within your browser without writing a single line of code. This approach is useful for those who want to build a prototype quickly and interactively.

- Robust way: Record sounds to use as your training dataset in advance, then use Python to train and carefully evaluate your model. This approach is also more automated and repeatable than the simple way.

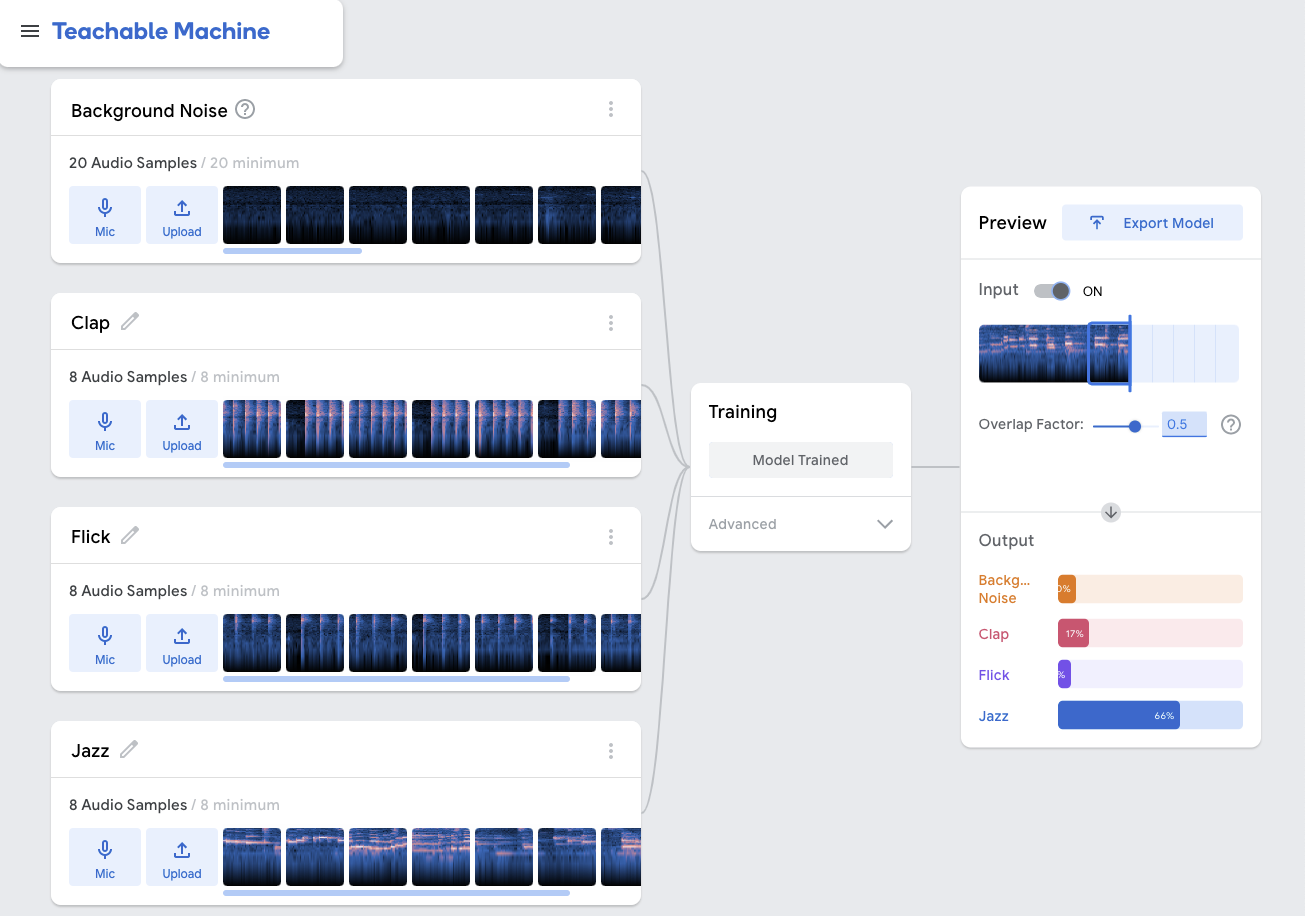

Train a model using Teachable Machine

Teachable Machine is a GUI tool that allows you to create a training dataset and train several types of machine learning models, including image classification, pose classification and sound classification. Teachable Machine uses TensorFlow.js under the hood to train your machine learning model. You can export the trained models in TensorFlow.js format to use in web browsers, or export them in TensorFlow Lite format to use in mobile applications or IoT devices.

Here are the steps to train your models:

- Go to the Teachable Machine website

- Create an audio project

- Record some sound clips for each category that you want to recognize. You need only 8 seconds of sound for each category.

- Start training. Once it has finished, you can test your model on a live audio feed.

- Export the model in TFLite format.

Train a model using Python

If you have a large training dataset with several hours of sound recordings or more than a dozen categories, then training a sound classification model in a web browser will likely take a long time. In that case, you can collect the training dataset in advance, convert the recordings to the WAV format, and use this Colab notebook (which includes steps to convert the model to TFLite format) to train your sound classification model. Google Colab offers a free GPU, which can significantly speed up model training.
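
Because the model classifies 1-second samples, longer WAV recordings usually need to be cut into 1-second clips before training. The following is a minimal sketch of that step using only the Python standard library. It is not the preprocessing used by the Colab notebook: the helper name `split_wav_into_clips`, the 16-bit mono restriction and the 16 kHz demo recording are assumptions made for illustration.

```python
import math
import os
import struct
import tempfile
import wave


def split_wav_into_clips(path, clip_seconds=1.0):
    """Read a 16-bit mono WAV file and split it into fixed-length clips.

    Returns (sample_rate, clips). A trailing partial clip is dropped.
    """
    with wave.open(path, "rb") as wav:
        if wav.getnchannels() != 1 or wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit mono WAV files")
        sample_rate = wav.getframerate()
        raw = wav.readframes(wav.getnframes())

    # Decode little-endian signed 16-bit samples.
    samples = struct.unpack("<%dh" % (len(raw) // 2), raw)
    clip_len = int(sample_rate * clip_seconds)
    clips = [
        list(samples[i:i + clip_len])
        for i in range(0, len(samples) - clip_len + 1, clip_len)
    ]
    return sample_rate, clips


# Demo: write a synthetic 2.5-second recording, then split it.
demo_rate = 16000  # assumed sample rate for the demo
demo_samples = [
    int(10000 * math.sin(2 * math.pi * 440 * t / demo_rate))
    for t in range(int(2.5 * demo_rate))
]
demo_path = os.path.join(tempfile.mkdtemp(), "demo.wav")
with wave.open(demo_path, "wb") as out:
    out.setnchannels(1)
    out.setsampwidth(2)
    out.setframerate(demo_rate)
    out.writeframes(struct.pack("<%dh" % len(demo_samples), *demo_samples))

rate, clips = split_wav_into_clips(demo_path)
print(rate, len(clips), len(clips[0]))
```

Dropping the trailing partial clip keeps every training example the same length; padding it with silence would be a reasonable alternative.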

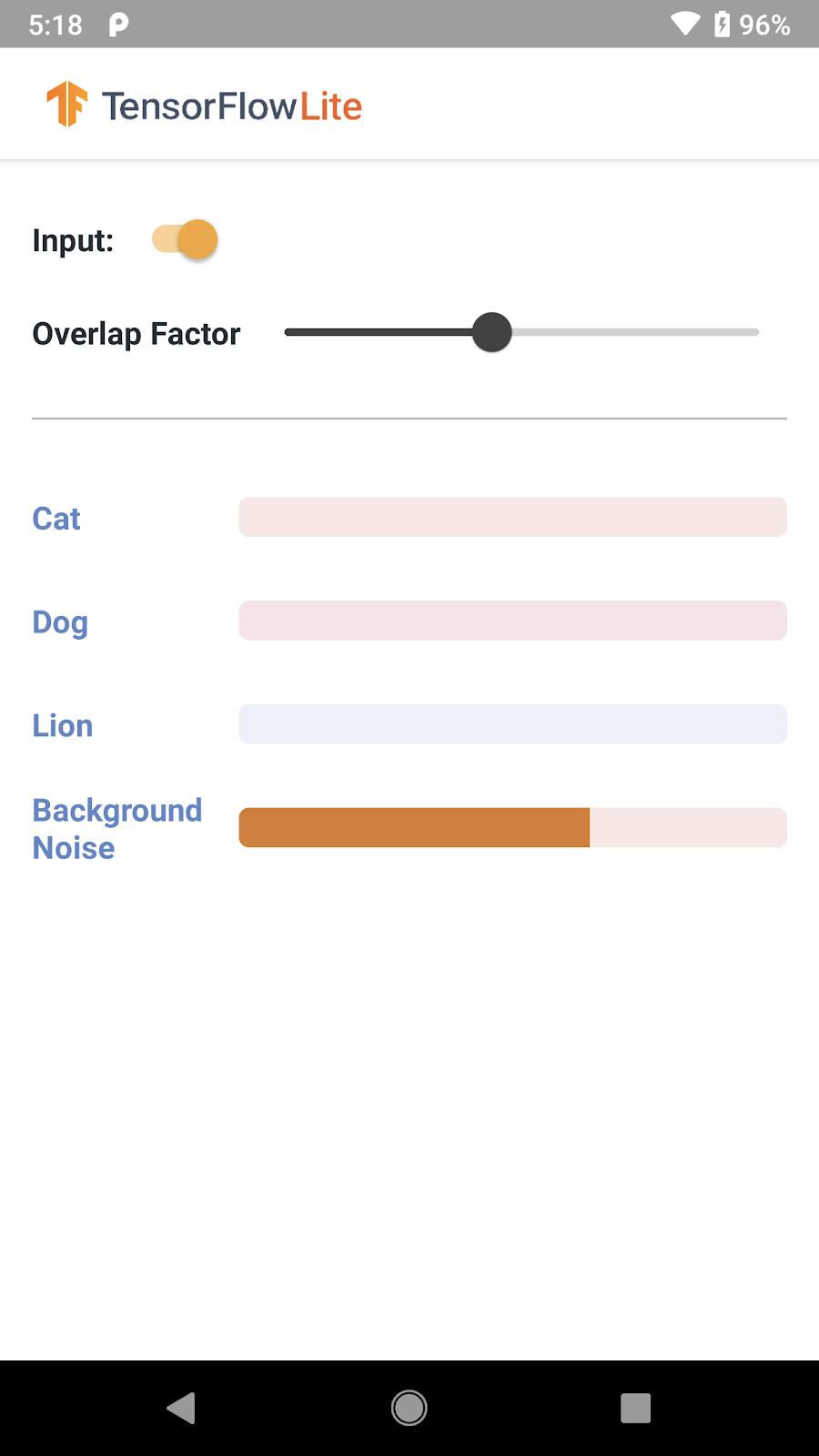

Deploy the model to Android with TensorFlow Lite

Once you have trained your TensorFlow Lite sound classification model, you can just put it in this Android sample app to try it out. Just follow these steps:

- Clone the sample app from GitHub: `git clone https://github.com/tensorflow/examples.git`

- Import the sound classification Android app into Android Studio. You can find it in the `lite/examples/sound_classification/android` folder.

- Add your model (both the `soundclassifier.tflite` and `labels.txt`) into the `src/main/assets` folder, replacing the example model that is already there.

- Build the app and deploy it on an Android device. Now you can classify sound in real time!

To integrate the model into your own app, you can copy the SoundClassifier.kt class from the sample app and the TFLite model you have trained to your app. Then you can use the model as below:

1. Initialize a `SoundClassifier` instance from your `Activity` or `Fragment` class.

    var soundClassifier: SoundClassifier
    soundClassifier = SoundClassifier(context).also {
        it.lifecycleOwner = context
    }

2. Start capturing live audio from the device’s microphone and classify in real time:

    soundClassifier.start()

3. Receive classification results in real time as a map of human-readable class names and probabilities of the current sound belonging to each particular category.

    val labelName = soundClassifier.labelList[0] // e.g. "Clap"
    soundClassifier.probabilities.observe(this) { resultMap ->
        val probability = resultMap[labelName] // e.g. 0.7
    }

What’s next

We are working on an iOS version of the sample app that will be released in a few weeks. We will also extend TensorFlow Lite Model Maker to allow easy training of sound classification models in Python. Stay tuned!

Acknowledgements

This project is a joint effort between multiple teams inside Google. Special thanks to:

- Google Research: Shanqing Cai, Lisie Lillianfeld

- TensorFlow team: Tian Lin

- Teachable Machine team: Gautam Bose, Jonas Jongejan

- Android team: Saryong Kang, Daniel Galpin, Jean-Michel Trivi, Don Turner