This post is co-written with Adarsh Kyadige and Salma Taoufiq from Sophos.

As a leader in cutting-edge cybersecurity, Sophos is dedicated to safeguarding over 500,000 organizations and millions of customers across more than 150 countries. By harnessing the power of threat intelligence, machine learning (ML), and artificial intelligence (AI), Sophos delivers a comprehensive range of advanced products and services. These solutions are designed to protect and defend users, networks, and endpoints against a wide array of cyber threats including phishing, ransomware, and malware. The Sophos Artificial Intelligence (AI) group (SophosAI) oversees the development and maintenance of Sophos’s major ML security technology.

Large language models (LLMs) have demonstrated impressive capabilities in natural language understanding and generation across diverse domains, as showcased in numerous leaderboards (for example, HELM and the Hugging Face Open LLM Leaderboard) that evaluate them on a myriad of generic tasks. However, their effectiveness in specialized fields like cybersecurity relies heavily on domain-specific knowledge. In this context, fine-tuning emerges as a crucial technique to adapt these general-purpose models to the intricacies of cybersecurity. For example, we could use instruction fine-tuning to improve model performance on incident classification or summarization. However, before fine-tuning, it’s important to determine an out-of-the-box model’s potential by testing its abilities on a set of domain-specific tasks. We have defined three specialized tasks, which are covered later in this post. These same tasks can also be used to measure the gains in performance obtained through fine-tuning, Retrieval-Augmented Generation (RAG), or knowledge distillation.

In this post, SophosAI shares insights on using and evaluating an out-of-the-box LLM to enhance the productivity of a security operations center (SOC) using Amazon Bedrock and Amazon SageMaker. We use Anthropic’s Claude 3 Sonnet on Amazon Bedrock to illustrate the use cases.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

Tasks

We will showcase three example tasks to delve into using LLMs in the context of an SOC. An SOC is an organizational unit responsible for monitoring, detecting, analyzing, and responding to cybersecurity threats and incidents. It employs a combination of technology, processes, and skilled personnel to maintain the confidentiality, integrity, and availability of information systems and data. SOC analysts continuously monitor security events, investigate potential threats, and take appropriate action to mitigate risks. Known challenges faced by SOCs are the high volume of alerts generated by detection tools and the subsequent alert fatigue among analysts. These challenges are often coupled with staffing shortages. To address these challenges and enhance operational efficiency and scalability, many SOCs are increasingly turning to automation technologies to streamline repetitive tasks, prioritize alerts, and accelerate incident response. Considering the nature of tasks analysts need to perform, LLMs are good tools to enhance the level of automation in SOCs and empower security teams.

For this work, we focus on three essential SOC use cases where LLMs have the potential of greatly assisting analysts, namely:

- SQL Query generation from natural language to simplify data extraction

- Incident severity prediction to prioritize which incidents analysts should focus on

- Incident summarization based on its constituent alert data to increase analyst productivity

Based on the token consumption of these tasks, particularly the summarization component, we need a model with a context window of at least 4000 tokens. While the tasks have been tested in English, Anthropic’s Claude 3 Sonnet model can perform in other languages. However, we recommend evaluating the performance in your specific language of interest.

Let’s dive into the details of each task.

Task 1: Query generation from natural language

This task’s objective is to assess a model’s capacity to translate natural language questions into SQL queries, using contextual knowledge of the underlying data schema. This skill simplifies the data extraction process, allowing security analysts to conduct investigations more efficiently without requiring deep technical knowledge. We used prompt engineering guidelines to tailor our prompts to generate better responses from the LLM.

A three-shot prompting strategy is used for this task. Given a database schema, the model is provided with three examples pairing a natural-language question with its corresponding SQL query. Following these examples, the model is then prompted to generate the SQL query for a question of interest.

The prompt below is a three-shot prompt example for query generation from natural language. Empirically, we have obtained better results with few-shot prompting as opposed to one-shot (where the model is provided with only one example question and corresponding query before the actual question of interest) or zero-shot (where the model is directly prompted to generate a desired query without any examples).

Translate the following request into SQL

Schema for alert_table table

<Table schema>

Schema for process_table table

<Table schema>

Schema for network_table table

<Table schema>

Here are some examples

<examples>

Request:tell me a list of processes that were executed between 2021/10/19 and 2021/11/30

SQL:select * from process_table where timestamp between '2021-10-19' and '2021-11-30';

Request:show me any low severity security alerts for the 23 days ago

SQL:select * from alert_table where severity='low' and timestamp>=DATEADD('day', -23, CURRENT_TIMESTAMP());

Request:show me the count of msword.exe processes that ran between Dec/01 and Dec/11

SQL:select count(*) from process_table where process='msword.exe' and timestamp>='2022-12-01' and timestamp<='2022-12-11';

</examples>

Request:"Any Ubuntu processes that was run by the user ""admin"" from host ""db-server"""

SQL:
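The layout of the prompt above can be assembled programmatically. The following is a minimal sketch, not the code SophosAI used: the function name `build_few_shot_prompt` and its parameters are hypothetical, and the schema strings are placeholders that you would supply.

```python
from typing import Dict, List, Tuple


def build_few_shot_prompt(
    schemas: Dict[str, str],
    examples: List[Tuple[str, str]],
    question: str,
) -> str:
    """Assemble a few-shot text-to-SQL prompt in the layout shown above."""
    parts = ["Translate the following request into SQL"]
    # One schema section per table, in the order given.
    for table_name, schema in schemas.items():
        parts.append(f"Schema for {table_name} table")
        parts.append(schema)
    parts.append("Here are some examples")
    parts.append("<examples>")
    # Each example pairs a natural-language request with its SQL query.
    for request, sql in examples:
        parts.append(f"Request:{request}")
        parts.append(f"SQL:{sql}")
    parts.append("</examples>")
    # The prompt ends on an open "SQL:" line for the model to complete.
    parts.append(f"Request:{question}")
    parts.append("SQL:")
    return "\n\n".join(parts)
```

Passing one, three, or zero example pairs to the same function gives the one-shot, three-shot, and zero-shot variants compared earlier.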

To evaluate a model’s performance on this task, we rely on a proprietary dataset of about 100 target queries based on a test database schema. To determine the accuracy of the queries generated by the model, we follow a multi-step evaluation. First, we verify whether the model’s output is an exact match to the expected SQL statement. Exact matches are recorded as successful outcomes. If there is a mismatch, we run both the model’s query and the expected query against our mock database and compare their results. However, this comparison can be prone to false positives and false negatives. To mitigate this, we also perform a query equivalence assessment using a different, stronger LLM. This method is known as LLM-as-a-judge.
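The first two evaluation steps can be sketched as follows. This is an illustration under stated assumptions rather than the evaluation harness used in the experiments: it uses an in-memory SQLite database as the mock database, treats whitespace and a trailing semicolon as irrelevant for the exact-match check, and the names `normalize_sql`, `run_query`, and `queries_match` are hypothetical. Queries that rely on dialect-specific functions would need a matching database engine.

```python
import sqlite3
from collections import Counter


def normalize_sql(query: str) -> str:
    """Collapse whitespace and drop a trailing semicolon before comparing."""
    return " ".join(query.strip().rstrip(";").split())


def run_query(conn: sqlite3.Connection, query: str):
    """Return the result rows as a multiset, or None if the query fails."""
    try:
        return Counter(conn.execute(query).fetchall())
    except sqlite3.Error:
        return None


def queries_match(conn: sqlite3.Connection, generated: str, expected: str) -> str:
    """Return 'exact', 'same_results', or 'needs_judge' for one test case."""
    # Step 1: textual match against the expected statement.
    if normalize_sql(generated) == normalize_sql(expected):
        return "exact"
    # Step 2: run both queries on the mock database and compare result sets.
    generated_rows = run_query(conn, generated)
    if generated_rows is not None and generated_rows == run_query(conn, expected):
        return "same_results"
    # Step 3 (not shown): hand the pair to a stronger LLM acting as judge.
    return "needs_judge"
```

Because two different queries can return the same rows on a small mock database, a `same_results` verdict is a candidate for the LLM-as-a-judge step as well, not only the `needs_judge` cases.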

Anthropic’s Claude 3 Sonnet model achieved a good accuracy rate of 88 percent on the chosen dataset, suggesting that this natural-language-to-SQL task is quite simple for LLMs. With basic few-shot prompting, an LLM can therefore be used out of the box, without fine-tuning, to assist security analysts in retrieving key information while investigating threats. These results are specific to our dataset and experimental setup, so we recommend that you run your own tests using the strategy explained above.

Task 2: Incident severity prediction

For the second task, we assess a model’s ability to recognize the severity of observed events as indicators of an incident. Specifically, we try to determine whether an LLM can review a security incident and accurately gauge its importance. Armed with such a capability, a model can assist analysts in determining which incidents are most pressing, so they can work more efficiently by organizing their work queue based on severity levels, cut through the noise, and save time and energy.

The input data in this use case is semi-structured alert data, typical of what is produced by various detection systems during an incident. We clearly define severity categories—critical, high, medium, low, and informational—across which the model is to classify the severity of the incident. This is therefore a classification problem that tests an LLM’s intrinsic cybersecurity knowledge.

Each security incident within the Sophos Managed Detection and Response (MDR) platform is made up of multiple detections that highlight suspicious activities occurring in a user’s environment. A detection might involve identifying potentially harmful patterns, such as unusual command executions, abnormal file access, anomalous network traffic, or suspicious script use. An example of the input data is shown below.

The “detection” section provides detailed information about each specific suspicious activity that was identified. It includes the type of security incident, such as “Execution,” along with a description that explains the nature of the threat, like the use of suspicious PowerShell commands. The detection is tied to a unique identifier for tracking and reference purposes. Additionally, it contains details from the MITRE ATT&CK framework which categorizes the tactics and techniques involved in the threat. This section might also reference related Sigma rules, which are community-driven signatures for detecting threats across different systems. By including these elements, the detection section serves as a comprehensive outline of the potential threat, helping analysts understand not just what was detected but also why it matters.

The “machine_data” section holds crucial information about the machine on which the detection occurred. It can provide further metadata on the machine, helping to pinpoint where exactly in the environment the suspicious activity was observed.

    {
      ...
      "detection": {
        "attack": "Execution",
        "description": "Identifies the use of suspicious PowerShell IEX patterns. IEX is the shortened version of the Invoke-Expression PowerShell cmdlet. The cmdlet runs the specified string as a command.",
        "id": <Detection ID>,
        "mitre_attack": [
          {
            "tactic": {
              "id": "TA0002",
              "name": "Execution",
              "techniques": [
                {
                  "id": "T1059.001",
                  "name": "PowerShell"
                }
              ]
            }
          },
          {
            "tactic": {
              "id": "TA0005",
              "name": "Defense Evasion",
              "techniques": [
                {
                  "id": "T1027",
                  "name": "Obfuscated Files or Information"
                }
              ]
            }
          }
        ],
        "sigma": {
          "id": <Detection ID>,
          "references": [
            "https://github.com/SigmaHQ/sigma/blob/master/rules/windows/process_creation/proc_creation_win_susp_powershell_download_iex.yml",
            "https://github.com/VirtualAlllocEx/Payload-Download-Cradles/blob/main/Download-Cradles.cmd"
          ]
        },
        "type": "process",
      },
      "machine_data": {
        ...
        "username": <Username>
      },
      "customer_id": <Customer ID>,
      "decorations": {
        <Customer data>
      },
      "original_file_name": "powershell.exe",
      "os_platform": "windows",
      "parent_process_name": "cmd.exe",
      "parent_process_path": "C:\Windows\System32\cmd.exe",
      "powershell_code": "iex ([system.text.encoding]::ASCII.GetString([Convert]::FromBase64String('aWYoR2V0LUNvbW1hbmQgR2V0LVdpbmRvd3NGZWF0dXJlIC1lYSBTaWxlbnRseUNvbnRpbnVlKQp7CihHZXQtV2luZG93c0ZlYXR1cmUgfCBXaGVyZS1PYmplY3QgeyRfLm5hbWUgLWVxICdSRFMtUkQtU2VydmVyJ30gfCBTZWxlY3QgSW5zdGFsbFN0YXRlKS5JbnN0YWxsU3RhdGUKfQo=')))",
      "process_name": "powershell.exe",
      "process_path": "C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe",
    },
    ...
    }

To facilitate evaluation, the prompt used for this task requires the model to communicate its severity assessment in a uniform way, providing the response in a standardized format, for example, as a dictionary with severity_pred as the key and its chosen severity level as the value. The prompt below is an example for incident severity classification. Model performance is then evaluated against a test set of over 3,800 security incidents with target severity levels.

You are a helpful cybersecurity incident investigation expert that classifies incidents according to their severity level given a set of detections per incident.

Respond strictly with this JSON format: {"severity_pred": "xxx"} where xxx should only be either:

- Critical,

<Criteria for a critical incident>

- High,

<Criteria for a high severity incident>

- Medium,

<Criteria for a medium severity incident>

- Low,

<Criteria for a low severity incident>

- Informational

<Criteria for an informational incident>

No other value is allowed.

Detections:
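Because the prompt above pins the reply to a single JSON object, the evaluation code can parse and validate it mechanically. The following is a minimal sketch of such a parser, assuming that replies with any other value or with malformed JSON are counted as invalid; the name `parse_severity` and the tolerance for surrounding text are our own choices, not part of the original setup.

```python
import json
from typing import Optional

ALLOWED_SEVERITIES = {"critical", "high", "medium", "low", "informational"}


def parse_severity(model_output: str) -> Optional[str]:
    """Return the predicted severity in lowercase, or None if the reply is invalid."""
    # Tolerate text around the JSON object by keeping only the outermost braces.
    start, end = model_output.find("{"), model_output.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        payload = json.loads(model_output[start : end + 1])
    except json.JSONDecodeError:
        return None
    # Compare case-insensitively so "High" and "high" are treated alike.
    value = str(payload.get("severity_pred", "")).strip().lower()
    return value if value in ALLOWED_SEVERITIES else None
```

Returning `None` instead of raising keeps a single malformed reply from stopping an evaluation run over thousands of incidents.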

Various experimental setups are used for this task, including zero-shot prompting, three-shot prompting using random or nearest-neighbor incident examples, and simple classifiers.

This task turned out to be quite challenging, both because of noise in the target labels and because assessing the criticality of an incident without further investigation is inherently difficult for models that weren’t trained specifically for this use case.

Even under various setups, such as few-shot prompting with nearest neighbor incidents, the model’s performance couldn’t reliably outperform random chance. For reference, the baseline accuracy on the test set is approximately 71 percent and the baseline balanced accuracy is 20 percent.
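The gap between the two baseline figures is easier to see with a small calculation. The sketch below assumes that the baseline is a classifier that always predicts the most frequent label, which is one common reading and not something stated explicitly here. The label counts are made up for illustration (including which label is the most frequent) and are not the Sophos test set.

```python
from collections import Counter
from typing import List


def accuracy(y_true: List[str], y_pred: List[str]) -> float:
    """Fraction of predictions that equal the target label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true: List[str], y_pred: List[str]) -> float:
    """Mean of per-class recall, so every class counts equally."""
    recalls = []
    for label in set(y_true):
        indices = [i for i, t in enumerate(y_true) if t == label]
        recalls.append(sum(y_pred[i] == label for i in indices) / len(indices))
    return sum(recalls) / len(recalls)


# Toy label distribution (made up for illustration, not the Sophos test set).
y_true = ["medium"] * 71 + ["high"] * 12 + ["low"] * 9 + ["critical"] * 4 + ["informational"] * 4
majority = Counter(y_true).most_common(1)[0][0]
y_pred = [majority] * len(y_true)

print(accuracy(y_true, y_pred))           # 0.71
print(balanced_accuracy(y_true, y_pred))  # 0.2
```

With five classes, always predicting one label gives a recall of 1 for that class and 0 for the other four, so balanced accuracy is 1/5 regardless of how skewed the labels are. This is why balanced accuracy is the more informative number on an imbalanced test set.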

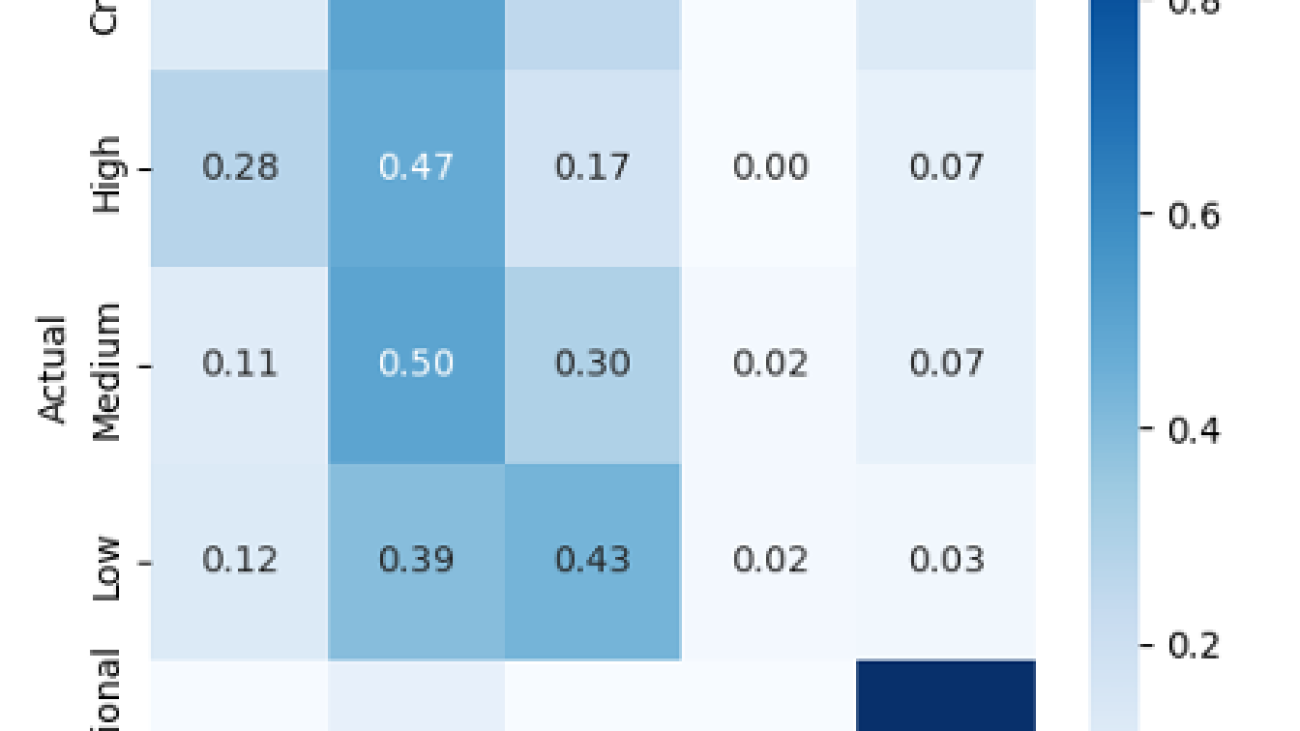

Figure 1 presents the confusion matrix of the model’s responses, which shows the model’s classification performance in a single graph. Only 12% (0.12) of the actual Critical incidents were correctly classified; 50% of them were predicted as High, 25% as Medium, and 12% as Informational. Accuracy is similarly low for the other labels, the lowest being the Low label, with only 2% of incidents correctly predicted. There is also a notable tendency to overpredict the High and Medium categories across the board.

Figure 1: Confusion matrix for the five-severity-level classification using Anthropic Claude 3 Sonnet
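The values in a confusion matrix like this one are row-normalized: each cell is the share of incidents with a given actual label that received a given predicted label. The following is a minimal sketch of that computation with a hypothetical helper, `normalized_confusion`; the eight example incidents are made up so that the row reproduces the proportions described for the Critical label.

```python
from collections import Counter
from typing import Dict, List

LABELS = ["critical", "high", "medium", "low", "informational"]


def normalized_confusion(y_true: List[str], y_pred: List[str]) -> Dict[str, Dict[str, float]]:
    """Row-normalized confusion matrix: matrix[actual][predicted] is a fraction of that row."""
    counts = Counter(zip(y_true, y_pred))
    matrix = {}
    for actual in LABELS:
        row_total = sum(counts[(actual, predicted)] for predicted in LABELS)
        matrix[actual] = {
            predicted: (counts[(actual, predicted)] / row_total if row_total else 0.0)
            for predicted in LABELS
        }
    return matrix


# Eight made-up critical incidents spread like the Critical row described above.
y_true = ["critical"] * 8
y_pred = ["critical"] + ["high"] * 4 + ["medium"] * 2 + ["informational"]
row = normalized_confusion(y_true, y_pred)["critical"]
print(row["critical"], row["high"], row["medium"], row["informational"])  # 0.125 0.5 0.25 0.125
```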

The performance observed in this benchmark task indicates this is a particularly hard problem for an unmodified, all-purpose LLM, and the problem requires a more specialized model, specifically trained or fine-tuned on cybersecurity data.

Task 3: Incident summarization

The third task is the summarization of incoming incidents. It evaluates the potential of a model to assist threat analysts in the triage and investigation of security incidents as they come in by providing a concise summary of the activity that triggered the incident.

Security incidents typically consist of a series of events occurring on a user endpoint or network, associated with detected suspicious activity. The analysts investigating the incident are presented with a series of events that occurred on the endpoint at the time the suspicious activity was detected. However, analyzing this event sequence can be challenging and time-consuming, resulting in difficulty in identifying noteworthy events. This is where LLMs can be beneficial by helping organize and categorize event data following a specific template, thereby aiding comprehension, and helping analysts quickly determine the appropriate next actions.

We use real incident data from Sophos’s MDR for incident summarization. The input for this task encompasses a set of JSON events, each having distinct schemas and attributes based on the capturing sensor. Along with instructions and a predefined template, this data is provided to the model to generate a summary. The prompt below is an example template prompt for generating incident summaries from SOC data.

As a cybersecurity assistant, your task is to:

1. Analyze the provided cybersecurity detections data.

2. Create a report of the events using the information from the '### Detections' section, which may include security artifacts such as command lines and file paths.

3. [Any other additional general requirements for formatting, etc.]

The report outline should look like this:

Summary:

<Few sentence description of the activity. [Any additional requirements for the summary: what to include, etc.]>

Observed MITRE Techniques:

<List only the registered MITRE Technique or Tactic ID and name pairs if available. The ID should start with 'T'.>

Impacted Hosts:

<List of all hostname observed in the detections, provide corresponding IPs if available>

Active Users:

<List of all usernames observed in the detections. There could be multiple, list all of them>

Events:

<One sentence description for top three detection events. Start the list with n1. >

IPs/URLs:

<List available IPs and URLs.>

<Enumerate only up to ten artifacts under each report category, and summarize any remaining events beyond that.>

Files:

<List the files found in the incident as follows:>

<TEMPLATE FOR FILES WITH DETAILS>

Command Lines:

<List the command lines found in the detections as follows:>

<TEMPLATE FOR COMMAND LINES WITH DETAILS>

### Detections:

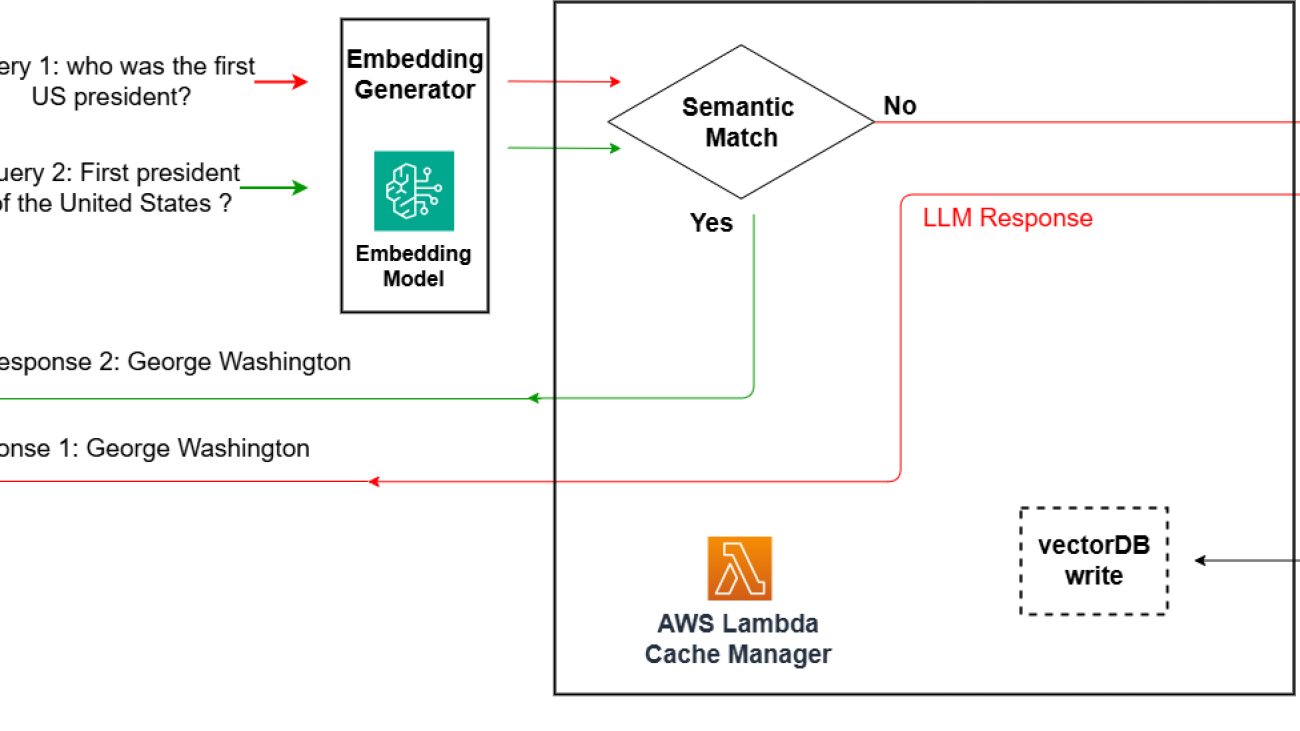

Evaluating these generated incident summaries is tricky because several factors must be considered. For example, it’s crucial that the extracted information is not only correct, but also relevant. To gain a general understanding of the quality of a model’s incident summarization, we use a set of five distinct metrics and rely on a dataset comprising N incidents. We compare the generated descriptions with corresponding gold-standard descriptions crafted based on Sophos analysts’ feedback.

We compute two classes of metrics. The first class of metrics assesses factual accuracy; they are used to evaluate how many artifacts such as command lines, file paths, usernames, and so on were correctly identified and summarized by the model. The computation here is straightforward; we compute the average distance across extracted artifacts between the generated description and the target. We use two distance metrics, Levenshtein distance and longest common subsequence (LCS).
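Both distance measures can be computed with short dynamic-programming routines. The sketch below is illustrative only: the way each distance is normalized into a score between 0 and 1 (dividing by the length of the longer string) is our assumption, because the exact normalization isn't described here, and all function names are hypothetical.

```python
from typing import Callable, List, Tuple


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1, current[j - 1] + 1, previous[j - 1] + cost))
        previous = current
    return previous[-1]


def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b."""
    previous = [0] * (len(b) + 1)
    for char_a in a:
        current = [0]
        for j, char_b in enumerate(b, start=1):
            if char_a == char_b:
                current.append(previous[j - 1] + 1)
            else:
                current.append(max(previous[j], current[j - 1]))
        previous = current
    return previous[-1]


def levenshtein_similarity(a: str, b: str) -> float:
    """1.0 for identical strings, lower as more edits are needed (assumed normalization)."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def lcs_similarity(a: str, b: str) -> float:
    """Share of the longer string covered by the common subsequence (assumed normalization)."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else lcs_length(a, b) / longest


def average_similarity(pairs: List[Tuple[str, str]], metric: Callable[[str, str], float]) -> float:
    """Average a similarity metric over (generated, target) artifact pairs."""
    return sum(metric(generated, target) for generated, target in pairs) / len(pairs)
```

Each pair would hold one artifact, such as a command line or file path, as it appears in the generated summary and in the gold-standard summary.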

The second class of metrics is used to provide a more semantic evaluation of the generated description, using three different metrics:

- BERTScore metric: This metric is used to evaluate the generated summaries using a pre-trained BERT model’s contextual embeddings. It determines the similarity between the generated summary and the reference summary using cosine similarity.

- ADA2 embeddings cosine similarity: This metric assesses the cosine similarity of ADA2 embeddings of tokens in the generated summary with those of the reference summary.

- METEOR score: METEOR is an evaluation metric based on the harmonic mean of unigram precision and recall.
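Two of the building blocks behind these metrics are simple enough to sketch directly. The first function computes cosine similarity between two embedding vectors, however they were produced. The second computes only the unigram F-mean at the core of METEOR, using exact word matches and a default recall weight of 9, which follows the original METEOR formulation as we understand it. The full metric also uses stemming, synonym matching, and a fragmentation penalty, all of which are omitted here, so this is not a replacement for a library implementation.

```python
import math
from collections import Counter
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def unigram_f_mean(candidate: str, reference: str, recall_weight: float = 9.0) -> float:
    """Recall-weighted harmonic mean of exact-match unigram precision and recall."""
    cand_tokens, ref_tokens = candidate.lower().split(), reference.lower().split()
    if not cand_tokens or not ref_tokens:
        return 0.0
    # Clipped overlap: each reference token can be matched at most once.
    matches = sum((Counter(cand_tokens) & Counter(ref_tokens)).values())
    if matches == 0:
        return 0.0
    precision = matches / len(cand_tokens)
    recall = matches / len(ref_tokens)
    return (1 + recall_weight) * precision * recall / (recall + recall_weight * precision)
```

Weighting recall more heavily than precision means that a summary that omits reference content is penalized more than one that adds extra words.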

More advanced evaluation methods can be used such as training a reward model on human preferences and using it as an evaluator, but for the sake of simplicity and cost-effectiveness, we limited the scope to these metrics.

Below is a summary of our results on this task:

| Model | Levenshtein-based factual accuracy | LCS-based factual accuracy | BERTScore | Cosine similarity of ADA2 embeddings | METEOR score |
| --- | --- | --- | --- | --- | --- |
| Anthropic’s Claude 3 Sonnet | 0.810 | 0.721 | 0.886 | 0.951 | 0.4165 |

Based on these findings, we gain a broad understanding of the performance of the model when it comes to generating incident summaries, focusing especially on factual accuracy and retrieval rate. Anthropic’s Claude 3 Sonnet model can capture the activity that’s occurring in the incident and summarize it well. However, it ignores certain instructions such as defanging all IPs and URLs. The returned reports are also not fully aligned with the target responses on a token level as signaled by the METEOR score. Anthropic’s Claude 3 Sonnet model skims over some details and explanations in the reports.

Experimental setup using Amazon Bedrock and Amazon SageMaker

This section outlines the experimental setup for evaluating various large language models (LLMs) using Amazon Bedrock and Amazon SageMaker. These services allowed us to efficiently interact with and deploy multiple LLMs for quick and cost-effective experimentation.

Amazon Bedrock

Amazon Bedrock is a managed service that lets you experiment with various LLMs quickly and on demand. This brings the advantage of being able to interact and experiment with LLMs without having to self-host them, paying only for the tokens consumed. We used the InvokeModel API to interact with the model with minimal latency. We wrote the following function, which lets us call different models by passing the necessary inference parameters to the API. For more details on the inference parameters for each provider, we recommend that you read the Inference request parameters and response fields for foundation models section in the Amazon Bedrock documentation. The example below uses the function with Anthropic’s Claude 3 Sonnet model. Notice that we gave the model a role through the system prompt and that we prefilled its response.

    import json

    # System prompt that gives the model its role.
    system_prompt = "You are a helpful cybersecurity incident investigation expert that classifies incidents according to their severity level given a set of detections per incident"

    # User turn with the classification instructions, followed by a prefilled assistant turn.
    messages = [
        {
            "role": "user",
            "content": """Respond strictly with this JSON format: {"severity_pred": "xxx"} where xxx should only be either:
    - Critical,
    <Criteria for a critical incident>
    - High,
    <Criteria for a high severity incident>
    - Medium,
    <Criteria for a medium severity incident>
    - Low,
    <Criteria for a low severity incident>
    - Informational
    <Criteria for an informational incident>
    No other value is allowed.""",
        },
        {"role": "assistant", "content": "Detections:"},
    ]


    def generate_message(bedrock_runtime, model_id, system_prompt, messages, max_tokens):
        """Invoke an Anthropic model on Amazon Bedrock and return the parsed response body."""
        # Request body in the Anthropic Messages format expected by InvokeModel.
        body = json.dumps(
            {
                "anthropic_version": "bedrock-2023-05-31",
                "max_tokens": max_tokens,
                "system": system_prompt,
                "messages": messages,
            }
        )
        response = bedrock_runtime.invoke_model(body=body, modelId=model_id)
        # The response body is a stream; read it and decode the JSON payload.
        response_body = json.loads(response.get("body").read())
        return response_body

The preceding example is based on our use case. The model_id parameter specifies the identifier of the model you want to invoke through the Amazon Bedrock runtime. We used the model ID anthropic.claude-3-sonnet-20240229-v1:0. For other model IDs, refer to the Amazon Bedrock documentation. For further details about this API, we recommend that you read the API documentation and adapt the function to your own requirements.
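The dictionary returned by `generate_message` follows the Anthropic Messages response format, in which the generated text sits in a list of content blocks. The sketch below shows one way to pull the text out and re-attach a prefill, because the model’s reply continues from the prefilled assistant turn and doesn’t repeat it. The helper name `extract_text`, the `{` prefill, and the hand-written sample body are all illustrative assumptions. With boto3, the runtime client is typically created with `boto3.client("bedrock-runtime")`, which isn’t exercised here.

```python
import json
from typing import Any, Dict


def extract_text(response_body: Dict[str, Any], prefill: str = "") -> str:
    """Join the text blocks of an Anthropic Messages response, restoring any prefill."""
    text = "".join(
        block.get("text", "")
        for block in response_body.get("content", [])
        if block.get("type") == "text"
    )
    return prefill + text


# Hand-written stand-in for a response body (not real model output).
sample_body = {
    "role": "assistant",
    "content": [{"type": "text", "text": '"severity_pred": "High"}'}],
    "stop_reason": "end_turn",
}

# Hypothetical prefill of "{" so that the reply completes a JSON object.
reply = extract_text(sample_body, prefill="{")
print(json.loads(reply)["severity_pred"])  # High
```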

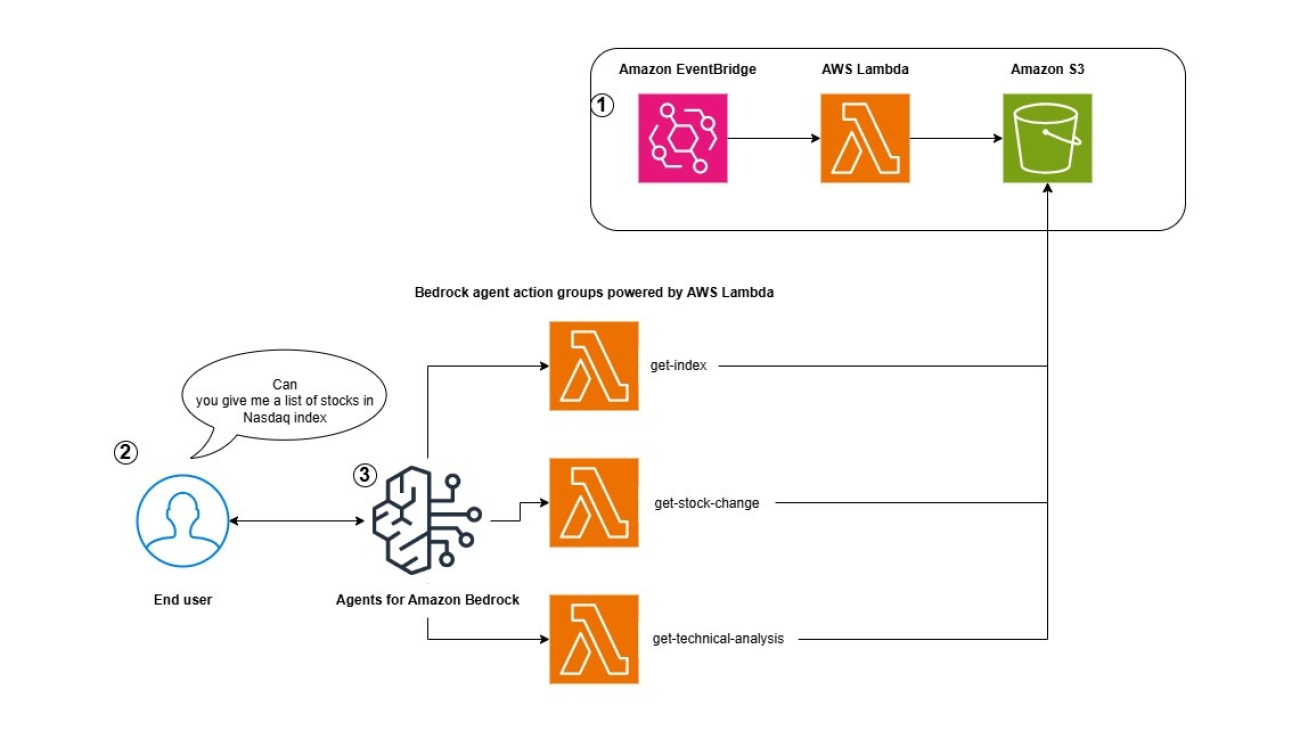

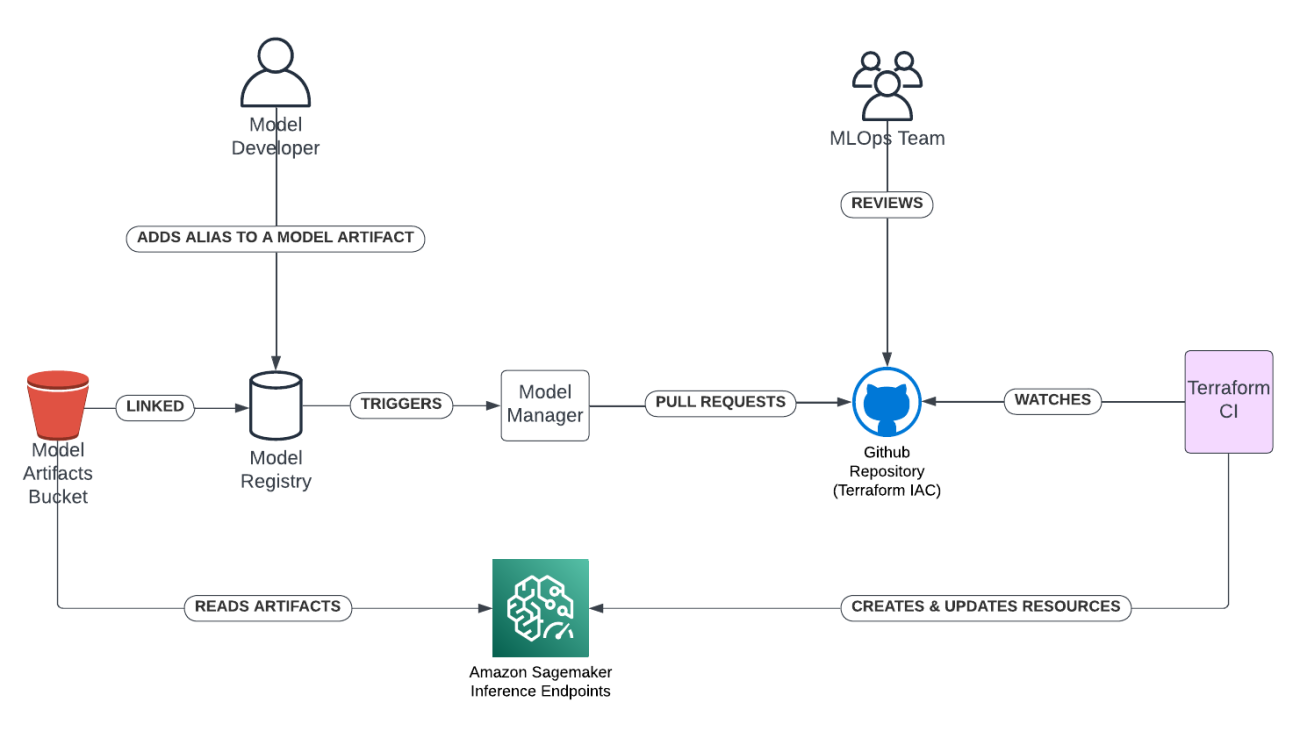

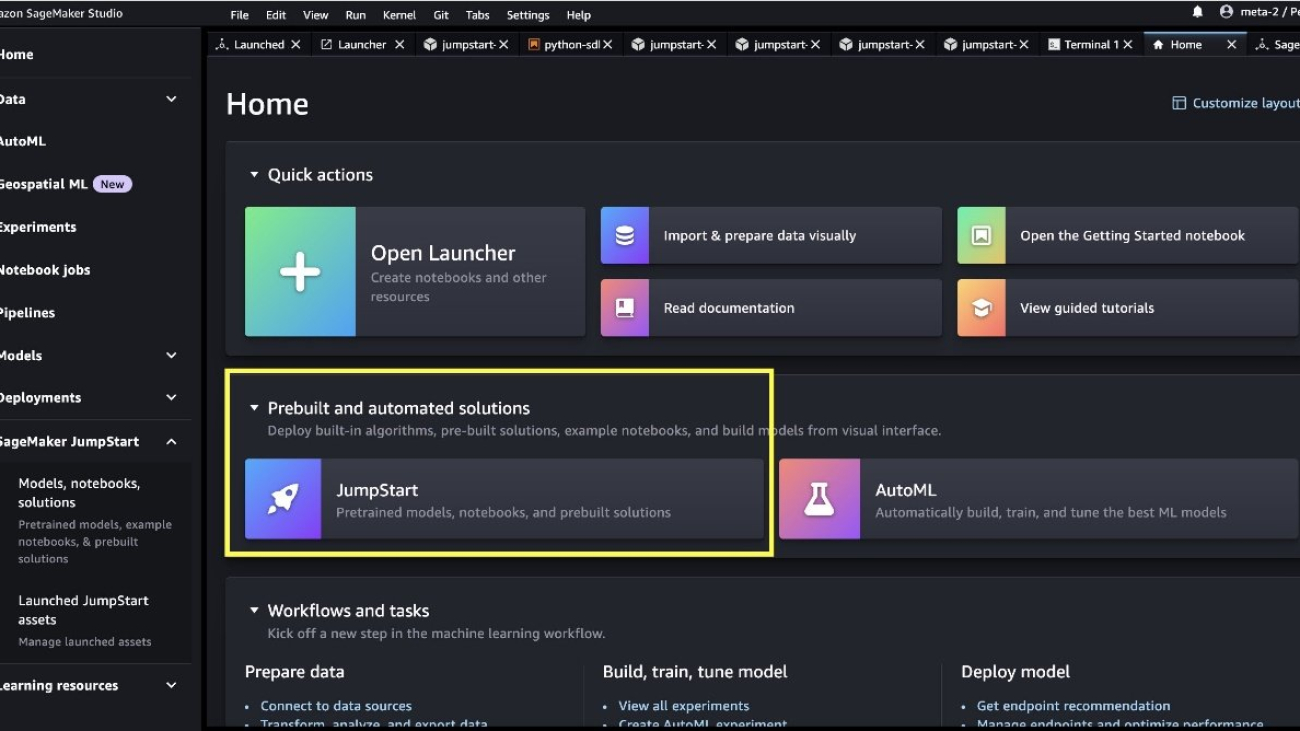

Our analysis in this blog post has focused on Anthropic’s Claude 3 Sonnet model and three specific use cases. These insights can be adapted to other SOCs’ specific requirements and desired models. For example, it’s possible to access other models such as Meta’s Llama models, Mistral models, Amazon Titan models, and others. For additional models, we used Amazon SageMaker JumpStart.

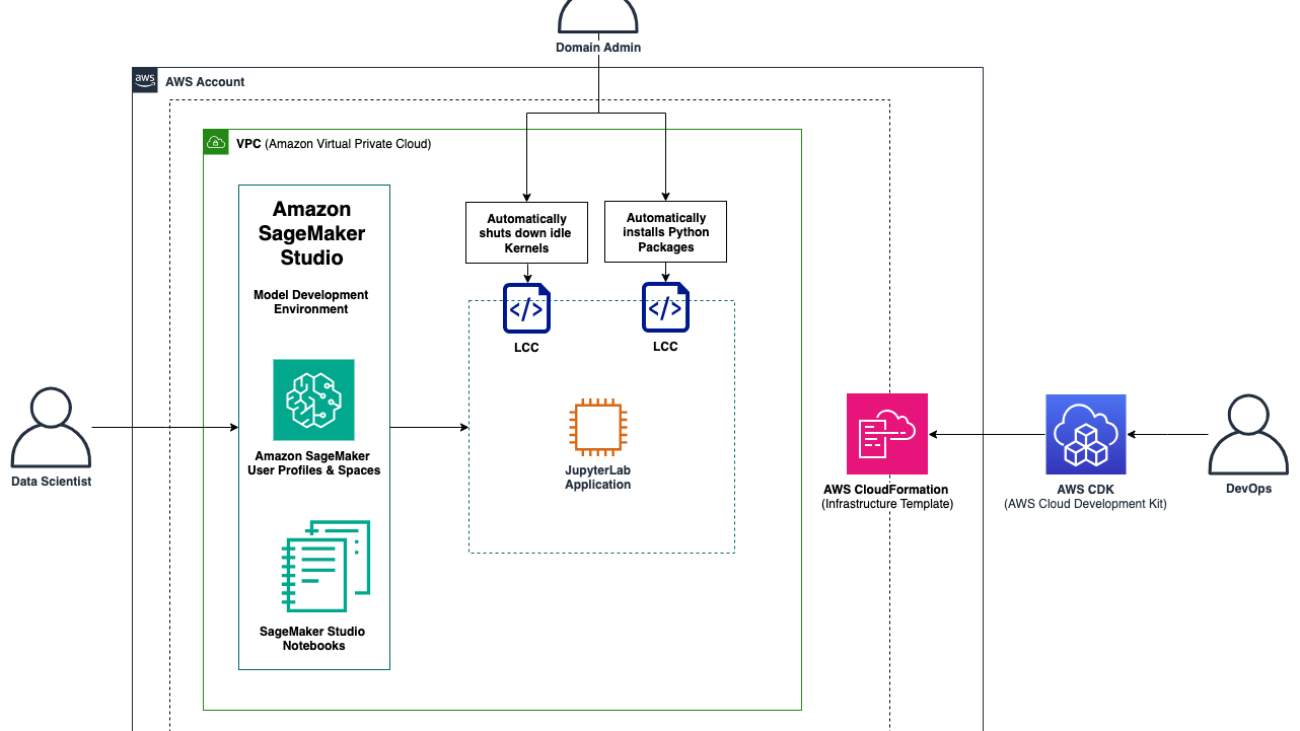

Amazon SageMaker

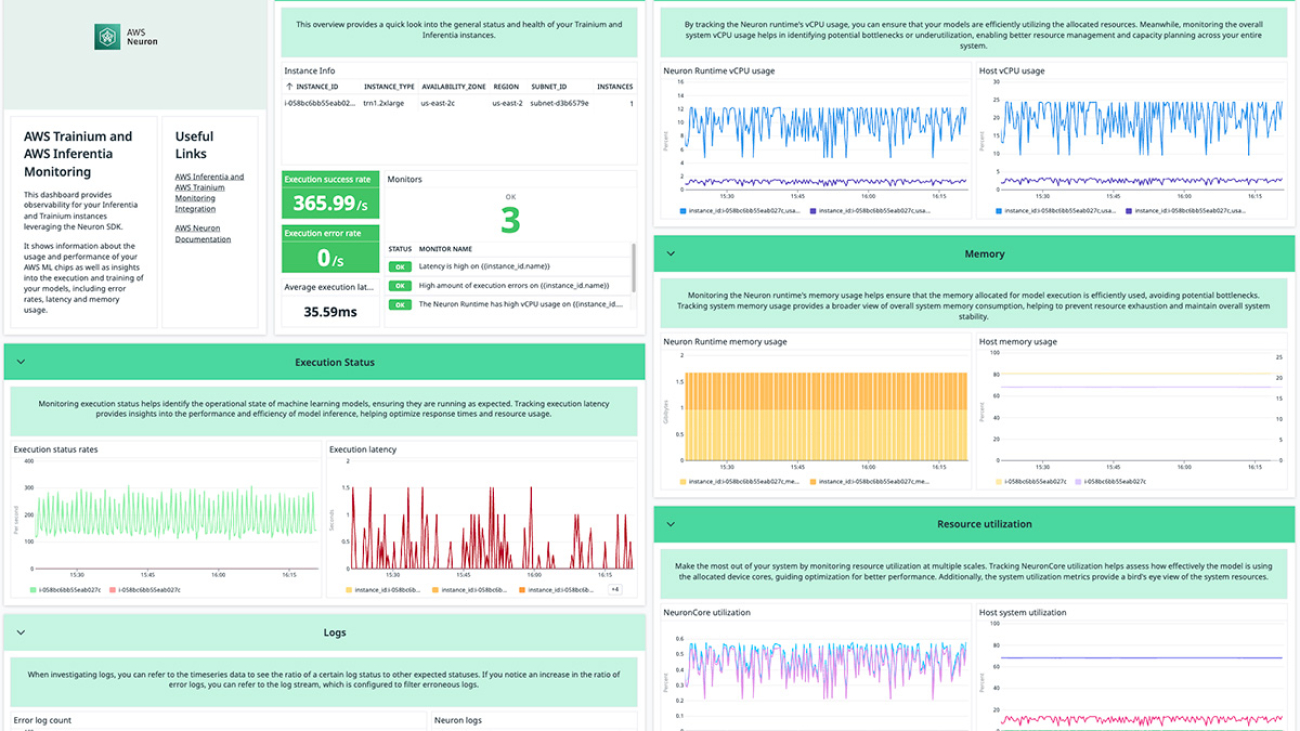

Amazon SageMaker is a fully managed machine learning (ML) service. With SageMaker, data scientists and developers can quickly and confidently build, train, and deploy ML models into a production-ready hosted environment. Amazon SageMaker JumpStart is a feature within SageMaker that offers practitioners a comprehensive hub of publicly available and proprietary foundation models (FMs), including a wide range of LLMs that you can quickly tune and deploy in a low-code manner. To experiment with the out-of-the-box models in SageMaker quickly and cost-effectively, we deployed the LLMs from SageMaker JumpStart using asynchronous inference endpoints.

Inference endpoints were an effortless way for us to directly download these models from their respective Hugging Face repositories and deploy them using a few lines of code and pre-made Text Generation Inference (TGI) containers (see the example notebook on GitHub). In addition, we used asynchronous inference endpoints with autoscaling, which helped us manage costs by automatically scaling the endpoints down to zero when they weren’t being used. Considering the number of endpoints we were creating, asynchronous inference made them simple to manage: endpoints were ready whenever they were needed and scaled down when idle, with no additional management on our end after the scaling policy was defined.
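A scale-to-zero policy for an asynchronous endpoint is usually expressed through Application Auto Scaling. The sketch below only builds the argument dictionaries for the `register_scalable_target` and `put_scaling_policy` calls, so it runs without AWS credentials. The helper names, the policy name, and the target backlog value are hypothetical, and the policy used in our experiments may have differed.

```python
from typing import Any, Dict


def scalable_target_args(endpoint_name: str, variant_name: str, max_instances: int) -> Dict[str, Any]:
    """Arguments for register_scalable_target, with a minimum capacity of zero."""
    return {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "MinCapacity": 0,  # allows the endpoint to scale in to zero instances
        "MaxCapacity": max_instances,
    }


def backlog_policy_args(endpoint_name: str, variant_name: str, target_backlog: float) -> Dict[str, Any]:
    """Arguments for put_scaling_policy that track the async request backlog per instance."""
    return {
        "PolicyName": f"{endpoint_name}-backlog-tracking",  # hypothetical name
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_backlog,
            "CustomizedMetricSpecification": {
                "MetricName": "ApproximateBacklogSizePerInstance",
                "Namespace": "AWS/SageMaker",
                "Dimensions": [{"Name": "EndpointName", "Value": endpoint_name}],
                "Statistic": "Average",
            },
        },
    }


# Usage with boto3 (not executed here):
# autoscaling = boto3.client("application-autoscaling")
# autoscaling.register_scalable_target(**scalable_target_args("my-endpoint", "AllTraffic", 2))
# autoscaling.put_scaling_policy(**backlog_policy_args("my-endpoint", "AllTraffic", 5.0))
```

As we understand the SageMaker documentation, scaling back out from zero instances typically needs an additional policy driven by the `HasBacklogWithoutCapacity` metric, so treat this as a starting point and check it against the current documentation.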

Next steps

In this blog post, we applied the tasks to a single model as an example; in practice, you would select a few LLMs and put them through the experiments in this post based on your requirements. From there, if the out-of-the-box models aren’t sufficient for the task, you would select the best-suited LLM and fine-tune it on the specific task.

For example, based on the outcomes of our three experimental tasks, we found that the results of the incident information summarization task didn’t meet our expectations. Therefore, we will fine-tune the out-of-the-box model that best suits our needs. This fine-tuning can be accomplished using Amazon Bedrock Custom Models or SageMaker fine-tuning, and the fine-tuned model can then be deployed either by importing it into Amazon Bedrock or by hosting it on a SageMaker endpoint.

In this blog post, we covered the experimentation phase. Once you identify an LLM that meets your performance requirements, it’s important to start considering how to productionize it. When productionizing an LLM, consider aspects such as guardrails and scalability. Implementing guardrails helps you minimize the risk of misuse of the model and of security breaches. Amazon Bedrock Guardrails enables you to implement safeguards for your generative AI applications based on your use cases and responsible AI policies. This blog covers how to build guardrails in your generative AI applications. When moving an LLM into production, you also want to validate its scalability against your request traffic. In Amazon Bedrock, consider increasing the quotas of your model, using batch inference, queuing the requests, or even distributing the requests between different Regions that have the same model. Select the technique that suits your use case and traffic.

Conclusion

In this post, SophosAI shared insights on how to use and evaluate out-of-the-box LLMs following a set of specialized tasks for the enhancement of a security operations center’s (SOC) productivity by using Amazon Bedrock and Amazon SageMaker. We used Anthropic’s Claude 3 Sonnet model on Amazon Bedrock to illustrate three use cases.

Amazon Bedrock and SageMaker have been key to enabling us to run these experiments. With the convenient access to high-performing foundation models (FMs) from leading AI companies provided by Amazon Bedrock through a single API call, we were able to test various LLMs without needing to deploy them ourselves. Additionally, the on-demand pricing model allowed us to only pay for what we used based on token consumption.

To access additional models with flexible control, SageMaker is a great alternative that offers a wide range of LLMs ready for deployment. While you would deploy these models yourself, you can still achieve great cost optimization by using asynchronous endpoints with a scaling policy that scales the instance down to zero when not in use.

General takeaways as to the applicability of an LLM such as Anthropic’s Claude 3 Sonnet model in cybersecurity can be summarized as follows:

- An out-of-the-box LLM can be an effective assistant in threat hunting and incident investigation. However, it still requires some guardrails and guidance. We believe that this potential application can be implemented using an existing powerful model, such as Anthropic’s Claude 3 Sonnet model, with careful prompt engineering.

- When it comes to summarizing incident information from raw data, Anthropic’s Claude 3 Sonnet model performs adequately, but there’s room for improvement through fine-tuning.

- Evaluating individual artifacts or groups of artifacts remains a challenging task for a pre-trained LLM. To tackle this problem, a specialized LLM trained specifically on cybersecurity data might be required.

It is also worth noting that while we used the InvokeModel API from Amazon Bedrock, a simpler way to access Amazon Bedrock models is the Converse API. The Converse API provides consistent API calls that work with Amazon Bedrock models that support messages. This means you can write code once and use it with different models. Should a model have unique inference parameters, the Converse API also allows you to pass those parameters in a model-specific structure.
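As a rough illustration of the Converse request and response shapes, the sketch below builds the keyword arguments for a `converse` call and reads the first text block of the reply. The helper names are hypothetical, and `FakeBedrockRuntime` is an offline stand-in so that the example runs without AWS credentials; with boto3, you would pass a client created with `boto3.client("bedrock-runtime")` instead.

```python
from typing import Any, Dict


def build_converse_request(model_id: str, system_prompt: str, user_text: str, max_tokens: int) -> Dict[str, Any]:
    """Keyword arguments for bedrock_runtime.converse(**request)."""
    return {
        "modelId": model_id,
        "system": [{"text": system_prompt}],
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "inferenceConfig": {"maxTokens": max_tokens},
    }


def converse_text(bedrock_runtime, **request) -> str:
    """Call the Converse API and return the first text block of the reply."""
    response = bedrock_runtime.converse(**request)
    return response["output"]["message"]["content"][0]["text"]


class FakeBedrockRuntime:
    """Offline stand-in that mimics the shape of a Converse response."""

    def converse(self, **kwargs):
        return {"output": {"message": {"role": "assistant", "content": [{"text": "ok"}]}}}


request = build_converse_request(
    "anthropic.claude-3-sonnet-20240229-v1:0",
    "You are a helpful cybersecurity incident investigation expert.",
    "Detections: <detections>",
    256,
)
print(converse_text(FakeBedrockRuntime(), **request))  # ok
```

Because the request shape is the same across models that support messages, switching models is mostly a matter of changing the `modelId` value.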

About the Authors

Benoit de Patoul is a GenAI/AI/ML Specialist Solutions Architect at AWS. He helps customers by providing guidance and technical assistance to build solutions related to GenAI/AI/ML using Amazon Web Services. In his free time, he likes to play piano and spend time with friends.

Naresh Nagpal is a Solutions Architect at AWS with extensive experience in application development, integration, and technology architecture. At AWS, he works with ISV customers in the UK to help them build and modernize their SaaS applications on AWS. He is also helping customers to integrate GenAI capabilities in their SaaS applications.

Adarsh Kyadige oversees the Research wing of the Sophos AI team, where he has been working since 2018 at the intersection of Machine Learning and Security. He earned a Masters degree in Computer Science, with a specialization in Artificial Intelligence and Machine Learning, from UC San Diego. His interests and responsibilities involve applying Deep Learning to Cybersecurity, as well as orchestrating pipelines for large scale data processing. In his leisure time, Adarsh can be found at the archery range, tennis courts, or in nature. His latest research can be found on Google Scholar.

Salma Taoufiq was a Senior Data Scientist at Sophos focusing at the intersection of machine learning and cybersecurity. With an undergraduate background in computer science, she graduated from the Central European University with a MSc. in Mathematics and Its Applications. When not developing a malware detector, Salma is an avid hiker, traveler, and consumer of thrillers.

Omri Shiv is an Open Source Machine Learning Engineer focusing on helping customers through their AI/ML journey. In his free time, he likes cooking, tinkering with open source and open hardware, and listening to and playing music.

Pinak Panigrahi works with customers to build ML-driven solutions to solve strategic business problems on AWS. In his current role, he works on optimizing training and inference of generative AI models on AWS AI chips.

Benoit de Patoul is a GenAI/AI/ML Specialist Solutions Architect at AWS. He helps customers by providing guidance and technical assistance to build solutions related to GenAI/AI/ML using Amazon Web Services. In his free time, he likes to play piano and spend time with friends.

Curtis Maher is a Product Marketing Manager at Datadog, focused on the platform’s cloud and AI/ML integrations. Curtis works closely with Datadog’s product, marketing, and sales teams to coordinate product launches and help customers observe and secure their cloud infrastructure.

Anjali Thatte is a Product Manager at Datadog. She currently focuses on building technology to monitor AI infrastructure and ML tooling and helping customers gain visibility across their AI application tech stacks.

Jason Mimick is a Senior Partner Solutions Architect at AWS working closely with product, engineering, marketing, and sales teams daily.

Anuj Sharma is a Principal Solution Architect at Amazon Web Services. He specializes in application modernization with hands-on technologies such as serverless, containers, generative AI, and observability. With over 18 years of experience in application development, he currently leads co-building with containers and observability focused AWS Software Partners.

Gabriel Rodriguez Garcia is a Machine Learning Engineer at AWS Professional Services in Zurich. In his current role, he has helped customers achieve their business goals on a variety of ML use cases, ranging from setting up MLOps inference pipelines to developing generative AI applications.

Kamran Razi is a Data Scientist at the Amazon Generative AI Innovation Center. With a passion for delivering cutting-edge generative AI solutions, Kamran helps customers unlock the full potential of AWS AI/ML services to solve real-world business challenges. Leveraging over a decade of experience in software development, he specializes in building AI-driven solutions, including chatbots, document processing, and retrieval-augmented generation (RAG) pipelines. Kamran holds a PhD in Electrical Engineering from Queen’s University.

Sungmin Hong is a Senior Applied Scientist at the Amazon Generative AI Innovation Center, where he helps expedite the variety of use cases of AWS customers. Before joining Amazon, Sungmin was a postdoctoral research fellow at Harvard Medical School. He holds a Ph.D. in Computer Science from New York University. Outside of work, Sungmin enjoys hiking, reading, and cooking.

Yash Shah is a Science Manager in the AWS Generative AI Innovation Center. He and his team of applied scientists and machine learning engineers work on a range of machine learning use cases from healthcare, sports, automotive, and manufacturing.

Anila Joshi has more than a decade of experience building AI solutions. As a Senior Manager, Applied Science at AWS Generative AI Innovation Center, Anila pioneers innovative applications of AI that push the boundaries of possibility and accelerate the adoption of AWS services with customers by helping customers ideate, identify, and implement secure generative AI solutions.

Ken Kao is an executive leader with 12+ years leading engineering and product across early- and mid-stage startups and public companies. He is currently the VP of Engineering at Rad AI, pushing the frontier of applying Gen AI to healthcare to help make physicians more efficient and improve patient outcomes. Prior to that, he was at Meta driving VR device performance, emulation, and development tooling and infrastructure. He has also previously held engineering leadership roles at Airbnb, Flatiron Health, and Palantir. Ken holds M.S. and B.S. degrees in Electrical Engineering from Stanford University.

Hasan Ali Demirci is a Staff Engineer at Rad AI, specializing in software and infrastructure for machine learning. Since joining as an early engineer hire in 2019, he has steadily worked on the design and architecture of Rad AI’s online inference systems. He is certified as an AWS Certified Solutions Architect and holds a bachelor’s degree in mechanical engineering from Boğaziçi University in Istanbul and a graduate degree in finance from the University of California, Santa Cruz.

Karan Jain is a Senior Machine Learning Specialist at AWS, where he leads the worldwide Go-To-Market strategy for Amazon SageMaker Inference. He helps customers accelerate their generative AI and ML journey on AWS by providing guidance on deployment, cost-optimization, and GTM strategy. He has led product, marketing, and business development efforts across industries for over 10 years, and is passionate about mapping complex service features to customer solutions.

Dmitry Soldatkin is a Senior Machine Learning Solutions Architect at Amazon Web Services (AWS), helping customers design and build AI/ML solutions. Dmitry’s work covers a wide range of ML use cases, with a primary interest in Generative AI, deep learning, and scaling ML across the enterprise. He has helped companies in many industries, including insurance, financial services, utilities, and telecommunications. He has a passion for continuous innovation and using data to drive business outcomes. Prior to joining AWS, Dmitry was an architect, developer, and technology leader in the data analytics and machine learning fields in the financial services industry.

Mithil Shah is a Principal AI/ML Solution Architect at Amazon Web Services. He helps commercial and public sector customers use AI/ML to achieve their business outcomes. He is currently helping customers build chatbots and search functionality using LLM agents and RAG.

Santosh Kulkarni is a Senior Solutions Architect at Amazon Web Services specializing in AI/ML. He is passionate about generative AI and is helping customers unlock business potential and drive actionable outcomes with machine learning at scale. Outside of work, he enjoys reading and traveling.

Isaac Smothers is a Senior DevOps Engineer at Crexi. Isaac focuses on automating the creation and maintenance of robust, secure cloud infrastructure with built-in observability. Based in San Luis Obispo, he is passionate about providing self-service solutions that enable developers to build, configure, and manage their services independently, without requiring cloud or DevOps expertise. In his free time, he enjoys hiking, video editing, and gaming.

James Healy-Mirkovich is a principal data scientist at Crexi in Los Angeles. Passionate about making data actionable and impactful, he develops and deploys customer-facing AI/ML solutions and collaborates with product teams to explore the possibilities of AI/ML. Outside work, he unwinds by playing guitar, traveling, and enjoying music and movies.

Marina Novikova is a Senior Partner Solution Architect at AWS. Marina works on the technical co-enablement of AWS ISV Partners in the DevOps and Data and Analytics segments to enrich partner solutions and solve complex challenges for AWS customers. Outside of work, Marina spends time climbing high peaks around the world.

Sharon Yu is a Software Development Engineer with Amazon SageMaker based in New York City.

Saurabh Trikande is a Senior Product Manager for Amazon Bedrock and SageMaker Inference. He is passionate about working with customers and partners, motivated by the goal of democratizing AI. He focuses on core challenges related to deploying complex AI applications, inference with multi-tenant models, cost optimizations, and making the deployment of Generative AI models more accessible. In his spare time, Saurabh enjoys hiking, learning about innovative technologies, following TechCrunch, and spending time with his family.

Michael Nguyen is a Senior Startup Solutions Architect at AWS, specializing in leveraging AI/ML to drive innovation and develop business solutions on AWS. Michael holds 12 AWS certifications and has a BS/MS in Electrical/Computer Engineering and an MBA from Penn State University, Binghamton University, and the University of Delaware.

Dr. Xin Huang is a Senior Applied Scientist for Amazon SageMaker JumpStart and Amazon SageMaker built-in algorithms. He focuses on developing scalable machine learning algorithms. His research interests are in the area of natural language processing, explainable deep learning on tabular data, and robust analysis of non-parametric space-time clustering. He has published many papers in ACL, ICDM, KDD conferences, and Royal Statistical Society: Series A.