This week marks the start of the 16th European Conference on Computer Vision (ECCV 2020), a premier forum for the dissemination of research in computer vision and related fields. The conference is being held virtually for the first time this year. Google is proud to be an ECCV 2020 Platinum Partner and is excited to share our research with the community through nearly 50 accepted publications, alongside several tutorials and workshops.

If you are registered for ECCV this year, please visit our virtual booth in the Platinum Exhibition Hall to learn more about the research we’re presenting at ECCV 2020, including some demos and opportunities to connect with our researchers. You can also learn more about our contributions below (Google affiliations in bold).

Organizing Committee

General Chairs: Vittorio Ferrari, Bob Fisher, Cordelia Schmid, Emanuele Trucco

Academic Demonstrations Chair: Thomas Mensink

Accepted Publications

NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis (Honorable Mention Award)

Ben Mildenhall, Pratul Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, Ren Ng

Quaternion Equivariant Capsule Networks for 3D Point Clouds

Yongheng Zhao, Tolga Birdal, Jan Eric Lenssen, Emanuele Menegatti, Leonidas Guibas, Federico Tombari

SoftpoolNet: Shape Descriptor for Point Cloud Completion and Classification

Yida Wang, David Joseph Tan, Nassir Navab, Federico Tombari

Combining Implicit Function Learning and Parametric Models for 3D Human Reconstruction

Bharat Lal Bhatnagar, Cristian Sminchisescu, Christian Theobalt, Gerard Pons-Moll

CoReNet: Coherent 3D scene reconstruction from a single RGB image

Stefan Popov, Pablo Bauszat, Vittorio Ferrari

Adversarial Generative Grammars for Human Activity Prediction

AJ Piergiovanni, Anelia Angelova, Alexander Toshev, Michael S. Ryoo

Self6D: Self-Supervised Monocular 6D Object Pose Estimation

Gu Wang, Fabian Manhardt, Jianzhun Shao, Xiangyang Ji, Nassir Navab, Federico Tombari

Du2Net: Learning Depth Estimation from Dual-Cameras and Dual-Pixels

Yinda Zhang, Neal Wadhwa, Sergio Orts-Escolano, Christian Häne, Sean Fanello, Rahul Garg

What Matters in Unsupervised Optical Flow

Rico Jonschkowski, Austin Stone, Jonathan T. Barron, Ariel Gordon, Kurt Konolige, Anelia Angelova

Appearance Consensus Driven Self-Supervised Human Mesh Recovery

Jogendra N. Kundu, Mugalodi Rakesh, Varun Jampani, Rahul M. Venkatesh, R. Venkatesh Babu

Fashionpedia: Ontology, Segmentation, and an Attribute Localization Dataset

Menglin Jia, Mengyun Shi, Mikhail Sirotenko, Yin Cui, Claire Cardie, Bharath Hariharan, Hartwig Adam, Serge Belongie

PointMixup: Augmentation for Point Clouds

Yunlu Chen, Vincent Tao Hu, Efstratios Gavves, Thomas Mensink, Pascal Mettes, Pengwan Yang, Cees Snoek

Connecting Vision and Language with Localized Narratives (see our blog post)

Jordi Pont-Tuset, Jasper Uijlings, Soravit Changpinyo, Radu Soricut, Vittorio Ferrari

Big Transfer (BiT): General Visual Representation Learning (see our blog post)

Alexander Kolesnikov, Lucas Beyer, Xiaohua Zhai, Joan Puigcerver, Jessica Yung, Sylvain Gelly, Neil Houlsby

View-Invariant Probabilistic Embedding for Human Pose

Jennifer J. Sun, Jiaping Zhao, Liang-Chieh Chen, Florian Schroff, Hartwig Adam, Ting Liu

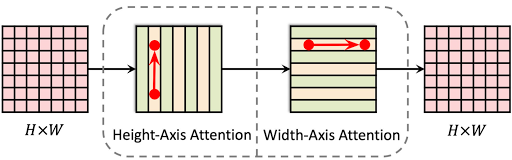

Axial-DeepLab: Stand-Alone Axial-Attention for Panoptic Segmentation

Huiyu Wang, Yukun Zhu, Bradley Green, Hartwig Adam, Alan Yuille, Liang-Chieh Chen

Mask2CAD: 3D Shape Prediction by Learning to Segment and Retrieve

Weicheng Kuo, Anelia Angelova, Tsung-Yi Lin, Angela Dai

A Generalization of Otsu’s Method and Minimum Error Thresholding

Jonathan T. Barron

Learning to Factorize and Relight a City

Andrew Liu, Shiry Ginosar, Tinghui Zhou, Alexei A. Efros, Noah Snavely

Weakly Supervised 3D Human Pose and Shape Reconstruction with Normalizing Flows

Andrei Zanfir, Eduard Gabriel Bazavan, Hongyi Xu, Bill Freeman, Rahul Sukthankar, Cristian Sminchisescu

Multi-modal Transformer for Video Retrieval

Valentin Gabeur, Chen Sun, Karteek Alahari, Cordelia Schmid

Generative Latent Textured Proxies for Category-Level Object Modeling

Ricardo Martin Brualla, Sofien Bouaziz, Matthew Brown, Rohit Pandey, Dan B Goldman

Neural Design Network: Graphic Layout Generation with Constraints

Hsin-Ying Lee*, Lu Jiang, Irfan Essa, Phuong B Le, Haifeng Gong, Ming-Hsuan Yang, Weilong Yang

Neural Articulated Shape Approximation

Boyang Deng, Gerard Pons-Moll, Timothy Jeruzalski, JP Lewis, Geoffrey Hinton, Mohammad Norouzi, Andrea Tagliasacchi

Uncertainty-Aware Weakly Supervised Action Detection from Untrimmed Videos

Anurag Arnab, Arsha Nagrani, Chen Sun, Cordelia Schmid

Beyond Controlled Environments: 3D Camera Re-Localization in Changing Indoor Scenes

Johanna Wald, Torsten Sattler, Stuart Golodetz, Tommaso Cavallari, Federico Tombari

Consistency Guided Scene Flow Estimation

Yuhua Chen, Luc Van Gool, Cordelia Schmid, Cristian Sminchisescu

Continuous Adaptation for Interactive Object Segmentation by Learning from Corrections

Theodora Kontogianni*, Michael Gygli, Jasper Uijlings, Vittorio Ferrari

SimPose: Effectively Learning DensePose and Surface Normal of People from Simulated Data

Tyler Lixuan Zhu, Per Karlsson, Christoph Bregler

Learning Data Augmentation Strategies for Object Detection

Barret Zoph, Ekin Dogus Cubuk, Golnaz Ghiasi, Tsung-Yi Lin, Jonathon Shlens, Quoc V Le

Streaming Object Detection for 3-D Point Clouds

Wei Han, Zhengdong Zhang, Benjamin Caine, Brandon Yang, Christoph Sprunk, Ouais Alsharif, Jiquan Ngiam, Vijay Vasudevan, Jonathon Shlens, Zhifeng Chen

Improving 3D Object Detection through Progressive Population Based Augmentation

Shuyang Cheng, Zhaoqi Leng, Ekin Dogus Cubuk, Barret Zoph, Chunyan Bai, Jiquan Ngiam, Yang Song, Benjamin Caine, Vijay Vasudevan, Congcong Li, Quoc V. Le, Jonathon Shlens, Dragomir Anguelov

An LSTM Approach to Temporal 3D Object Detection in LiDAR Point Clouds

Rui Huang, Wanyue Zhang, Abhijit Kundu, Caroline Pantofaru, David A Ross, Thomas Funkhouser, Alireza Fathi

BigNAS: Scaling Up Neural Architecture Search with Big Single-Stage Models

Jiahui Yu, Pengchong Jin, Hanxiao Liu, Gabriel Bender, Pieter-Jan Kindermans, Mingxing Tan, Thomas Huang, Xiaodan Song, Ruoming Pang, Quoc Le

Memory-Efficient Incremental Learning Through Feature Adaptation

Ahmet Iscen, Jeffrey Zhang, Svetlana Lazebnik, Cordelia Schmid

Virtual Multi-view Fusion for 3D Semantic Segmentation

Abhijit Kundu, Xiaoqi Yin, Alireza Fathi, David A Ross, Brian E Brewington, Thomas Funkhouser, Caroline Pantofaru

Efficient Scale-permuted Backbone with Learned Resource Distribution

Xianzhi Du, Tsung-Yi Lin, Pengchong Jin, Yin Cui, Mingxing Tan, Quoc V Le, Xiaodan Song

RetrieveGAN: Image Synthesis via Differentiable Patch Retrieval

Hung-Yu Tseng*, Hsin-Ying Lee*, Lu Jiang, Ming-Hsuan Yang, Weilong Yang

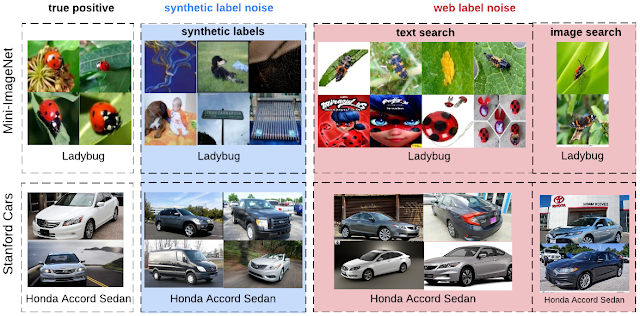

Graph convolutional networks for learning with few clean and many noisy labels

Ahmet Iscen, Giorgos Tolias, Yannis Avrithis, Ondrej Chum, Cordelia Schmid

Deep Positional and Relational Feature Learning for Rotation-Invariant Point Cloud Analysis

Ruixuan Yu, Xin Wei, Federico Tombari, Jian Sun

Federated Visual Classification with Real-World Data Distribution

Tzu-Ming Harry Hsu, Hang Qi, Matthew Brown

Joint Bilateral Learning for Real-time Universal Photorealistic Style Transfer

Xide Xia, Meng Zhang, Tianfan Xue, Zheng Sun, Hui Fang, Brian Kulis, Jiawen Chen

AssembleNet++: Assembling Modality Representations via Attention Connections

Michael S. Ryoo, AJ Piergiovanni, Juhana Kangaspunta, Anelia Angelova

Naive-Student: Leveraging Semi-Supervised Learning in Video Sequences for Urban Scene Segmentation

Liang-Chieh Chen, Raphael Gontijo-Lopes, Bowen Cheng, Maxwell D. Collins, Ekin D. Cubuk, Barret Zoph, Hartwig Adam, Jonathon Shlens

AttentionNAS: Spatiotemporal Attention Cell Search for Video Classification

Xiaofang Wang, Xuehan Xiong, Maxim Neumann, AJ Piergiovanni, Michael S. Ryoo, Anelia Angelova, Kris M. Kitani, Wei Hua

Unifying Deep Local and Global Features for Image Search

Bingyi Cao, Andre Araujo, Jack Sim

Pillar-based Object Detection for Autonomous Driving

Yue Wang, Alireza Fathi, Abhijit Kundu, David Ross, Caroline Pantofaru, Tom Funkhouser, Justin Solomon

Improving Object Detection with Selective Self-supervised Self-training

Yandong Li, Di Huang, Danfeng Qin, Liqiang Wang, Boqing Gong

Environment-agnostic Multitask Learning for Natural Language Grounded Navigation

Xin Eric Wang*, Vihan Jain, Eugene Ie, William Yang Wang, Zornitsa Kozareva, Sujith Ravi

SimAug: Learning Robust Representations from Simulation for Trajectory Prediction

Junwei Liang, Lu Jiang, Alex Hauptmann

Tutorials

New Frontiers for Learning with Limited Labels or Data

Organizers: Shalini De Mello, Sifei Liu, Zhiding Yu, Pavlo Molchanov, Varun Jampani, Arash Vahdat, Animashree Anandkumar, Jan Kautz

Weakly Supervised Learning in Computer Vision

Organizers: Seong Joon Oh, Rodrigo Benenson, Hakan Bilen

Workshops

Joint COCO and LVIS Recognition Challenge

Organizers: Alexander Kirillov, Tsung-Yi Lin, Yin Cui, Matteo Ruggero Ronchi, Agrim Gupta, Ross Girshick, Piotr Dollar

4D Vision

Organizers: Anelia Angelova, Vincent Casser, Jürgen Sturm, Noah Snavely, Rahul Sukthankar

GigaVision: When Gigapixel Videography Meets Computer Vision

Organizers: Lu Fang, Shengjin Wang, David J. Brady, Feng Yang

Advances in Image Manipulation Workshop and Challenges

Organizers: Radu Timofte, Andrey Ignatov, Luc Van Gool, Wangmeng Zuo, Ming-Hsuan Yang, Kyoung Mu Lee, Liang Lin, Eli Shechtman, Kai Zhang, Dario Fuoli, Zhiwu Huang, Martin Danelljan, Shuhang Gu, Ming-Yu Liu, Seungjun Nah, Sanghyun Son, Jaerin Lee, Andres Romero, ETH Zurich, Hannan Lu, Ruofan Zhou, Majed El Helou, Sabine Süsstrunk, Roey Mechrez, BeyondMinds & Technion, Pengxu Wei, Evangelos Ntavelis, Siavash Bigdeli

Robust Vision Challenge 2020

Organizers: Oliver Zendel, Hassan Abu Alhaija, Rodrigo Benenson, Marius Cordts, Angela Dai, Xavier Puig Fernandez, Andreas Geiger, Niklas Hanselmann, Nicolas Jourdan, Vladlen Koltun, Peter Kontschieder, Alina Kuznetsova, Yubin Kang, Tsung-Yi Lin, Claudio Michaelis, Gerhard Neuhold, Matthias Niessner, Marc Pollefeys, Rene Ranftl, Carsten Rother, Torsten Sattler, Daniel Scharstein, Hendrik Schilling, Nick Schneider, Jonas Uhrig, Xiu-Shen Wei, Jonas Wulff, Bolei Zhou

“Deep Internal Learning”: Training with no prior examples

Organizers: Michal Irani, Tomer Michaeli, Tali Dekel, Assaf Shocher, Tamar Rott Shaham

Instance-Level Recognition

Organizers: Andre Araujo, Cam Askew, Bingyi Cao, Ondrej Chum, Bohyung Han, Torsten Sattler, Jack Sim, Giorgos Tolias, Tobias Weyand, Xu Zhang

Women in Computer Vision Workshop (WiCV) (Platinum Sponsor)

Panel Participation: Dima Damen, Sanja Fidler, Zeynep Akata, Grady Booch, Rahul Sukthankar

*Work performed while at Google
