3.6 billion. That’s about how many medical imaging tests are performed annually worldwide to diagnose, monitor and treat various conditions.

Speeding up the processing and evaluation of all these X-rays, CT scans, MRIs and ultrasounds is essential to helping doctors manage their workloads and to improving health outcomes.

That’s why NVIDIA introduced MONAI, an open-source research and development platform for AI applications used in medical imaging and beyond. MONAI brings doctors and data scientists together to unlock the power of medical data, build deep learning models and create deployable applications for medical AI workflows.

This week at the annual meeting of RSNA, the Radiological Society of North America, NVIDIA announced that Siemens Healthineers has adopted MONAI Deploy, a module within MONAI that bridges the gap from research to clinical production. The adoption is intended to make integrating medical imaging AI workflows into clinical deployments faster and more efficient.

With over 15,000 installations in medical devices around the world, the Siemens Healthineers Syngo Carbon and syngo.via enterprise imaging platforms help clinicians better read and extract insights from medical images from many sources.

Because developers typically use a variety of frameworks when building AI applications, deploying those applications into clinical environments can be challenging.

With a few lines of code, developers can use MONAI Deploy to build AI applications that can run anywhere. It is a tool for developing, packaging, testing, deploying and running medical AI applications in clinical production, and it streamlines the process of developing medical imaging AI applications and integrating them into clinical workflows.

MONAI Deploy on the Siemens Healthineers platform has significantly accelerated the AI integration process, letting users port trained AI models into real-world clinical settings in just a few clicks, a process that used to take months. This helps researchers, entrepreneurs and startups get their applications into the hands of radiologists more quickly.

“By accelerating AI model deployment, we empower healthcare institutions to harness and benefit from the latest advancements in AI-based medical imaging faster than ever,” said Axel Heitland, head of digital technologies and research at Siemens Healthineers. “With MONAI Deploy, researchers can quickly tailor AI models and transition innovations from the lab to clinical practice, providing thousands of clinical researchers worldwide access to AI-driven advancements directly on their syngo.via and Syngo Carbon imaging platforms.”

Enhanced with MONAI-developed apps, these platforms can significantly streamline AI integration. The apps are offered through the Siemens Healthineers Digital Marketplace, where users can browse, select and seamlessly integrate them into their clinical workflows.

MONAI Ecosystem Boosts Innovation and Adoption

Now marking its five-year anniversary, MONAI has seen over 3.5 million downloads, 220 contributors from around the world, acknowledgements in over 3,000 publications, 17 MICCAI challenge wins and use in numerous clinical products.

The latest release, MONAI v1.4, includes updates that give researchers and clinicians even more opportunities to take advantage of MONAI’s innovations and to contribute to Siemens Healthineers Syngo Carbon, syngo.via and the Siemens Healthineers Digital Marketplace.

The updates in MONAI v1.4 and related NVIDIA products include new foundation models for medical imaging, which can be customized in MONAI and deployed as NVIDIA NIM microservices. The following models are now generally available as NIM microservices:

- MAISI (Medical AI for Synthetic Imaging) is a latent diffusion generative AI foundation model that can simulate high-resolution, full-format 3D CT images and their anatomic segmentations.

- VISTA-3D is a foundation model for CT image segmentation that offers accurate out-of-the-box performance covering over 120 major organ classes. It also offers effective adaptation and zero-shot capabilities to learn to segment novel structures.

Alongside MONAI 1.4’s major features, the new MONAI Multi-Modal Model, or M3, is now accessible through MONAI’s VLM GitHub repo. M3 is a framework that extends any multimodal LLM with medical AI experts such as trained AI models from MONAI’s Model Zoo. The power of this new framework is demonstrated by the VILA-M3 foundation model that’s now available on Hugging Face, offering state-of-the-art radiological image copilot performance.

MONAI Bridges Hospitals, Healthcare Startups and Research Institutions

Leading healthcare institutions, academic medical centers, startups and software providers around the world are adopting and advancing MONAI, including:

- German Cancer Research Center leads MONAI’s benchmark and metrics working group, which provides metrics for measuring AI performance and guidelines for how and when to use those metrics.

- Nadeem Lab from Memorial Sloan Kettering Cancer Center (MSK) pioneered the cloud-based deployment of multiple AI-assisted annotation pipelines and inference modules for pathology data using MONAI.

- University of Colorado School of Medicine faculty developed MONAI-based ophthalmology tools for detecting retinal diseases using a variety of imaging modalities. The university also led some of the original federated learning developments and clinical demonstrations using MONAI.

- MathWorks has integrated MONAI Label with its Medical Imaging Toolbox, bringing medical imaging AI and AI-assisted annotation capabilities to thousands of MATLAB users engaged in medical and biomedical applications throughout academia and industry.

- GSK is exploring MONAI foundation models such as VISTA-3D and VISTA-2D for image segmentation.

- Flywheel offers a platform that includes MONAI for streamlining imaging data management, automating research workflows, and enabling AI development and analysis, and that scales to meet the needs of research institutions and life sciences organizations.

- Alara Imaging published its work on integrating MONAI foundation models such as VISTA-3D with LLMs such as Llama 3 at the 2024 Society for Imaging Informatics in Medicine conference.

- RadImageNet is exploring the use of MONAI’s M3 framework to develop cutting-edge vision language models that utilize expert image AI models from MONAI to generate high-quality radiological reports.

- Kitware is providing professional software development services surrounding MONAI, helping integrate MONAI into custom workflows for device manufacturers as well as regulatory-approved products.

Researchers and companies are also using MONAI on cloud service providers to run and deploy scalable AI applications. Cloud platforms providing access to MONAI include AWS HealthImaging, Google Cloud, Precision Imaging Network (part of Microsoft Cloud for Healthcare) and Oracle Cloud Infrastructure.

See disclosure statements about syngo.via, Syngo Carbon and products in the Digital Marketplace.

NVIDIA GeForce NOW (@NVIDIAGFN)

NVIDIA GeForce NOW (@NVIDIAGFN)

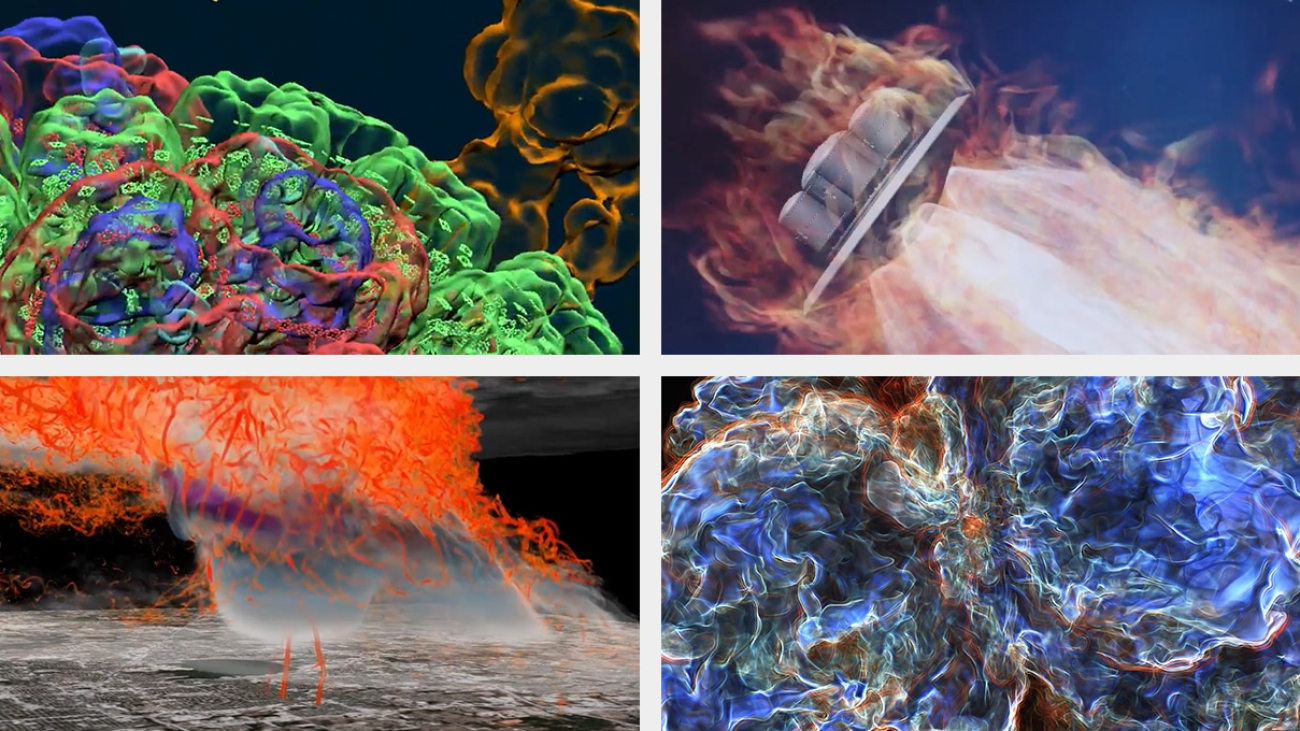

Example images created using the NVIDIA Omniverse Blueprint for 3D conditioning for precise visual generative AI.

Example images created using the NVIDIA Omniverse Blueprint for 3D conditioning for precise visual generative AI.