Improving the sustainability of manufacturing involves optimizing entire product lifecycles — from material sourcing and transportation to design, production, distribution and end-of-life disposal.

According to the International Energy Agency, reducing the carbon footprint of industrial production by just 1% could save 90 million tons of CO₂ emissions annually. That’s equivalent to taking more than 20 million gasoline-powered cars off the road each year.
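As a rough sanity check, the arithmetic below works backward from the two figures quoted above. It uses only those numbers, and the variable names are illustrative. The results show what the quoted figures imply; they are not independently sourced estimates.

```python
# Figures quoted in the text (IEA estimate and the car-equivalence comparison)
SAVINGS_T = 90e6      # tonnes of CO2 saved per year by the reduction
REDUCTION_PCT = 1     # the reduction, in percent
CARS = 20e6           # "more than 20 million" gasoline-powered cars

# A 1% cut worth 90 million tonnes implies the size of the baseline it is taken from
implied_baseline_t = SAVINGS_T * 100 / REDUCTION_PCT

# Spreading the savings across the cars gives the annual per-car emissions the comparison assumes
implied_per_car_t = SAVINGS_T / CARS

print(f"Implied industrial baseline: {implied_baseline_t / 1e9:.1f} billion t CO2/yr")  # 9.0
print(f"Implied emissions per car: {implied_per_car_t:.1f} t CO2/yr")                  # 4.5
```

Because the comparison says "more than 20 million" cars, 4.5 tonnes per car per year is an upper bound on the per-vehicle figure it assumes.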

Technologies such as digital twins and accelerated computing are enabling manufacturers to reduce emissions, enhance energy efficiency and meet the growing demand for environmentally conscious production.

Siemens and NVIDIA are at the forefront of developing technologies that help customers achieve their sustainability goals and improve production processes.

Key Challenges in Sustainable Manufacturing

Balancing sustainability with business objectives like profitability remains a top concern for manufacturers. A study by Ernst & Young in 2022 found that digital twins can reduce construction costs by up to 35%, underscoring the close link between resource consumption and construction expenses.

Yet one of the biggest obstacles to sustainable manufacturing and lower overhead is the presence of silos between departments, between plants within the same organization and across production teams. These silos stem from several issues, including conflicting priorities and incentives, the lack of a common language and shared metrics for energy efficiency, and the need for new skills and solutions to bridge these gaps.

Data management also presents a hurdle, with many manufacturers struggling to turn vast amounts of data into actionable insights — particularly those that can impact sustainability goals.

According to a case study by The Manufacturer, a quarter of respondents surveyed acknowledged that their data shortcomings negatively impact energy efficiency and environmental sustainability, with nearly a third reporting that data is siloed to local use cases.

Addressing these challenges requires innovative approaches that break down barriers and use data to drive sustainability. Acting as a central hub for information, digital twin technology is proving to be an essential tool in this effort.

The Role of Digital Twins in Sustainable Manufacturing

Industrial-scale digital twins built on the NVIDIA Omniverse development platform and Universal Scene Description (OpenUSD) are transforming how manufacturers approach sustainability and scalability.

These technologies power digital twins that take engineering data from various sources and contextualize it as it would appear in the real world. This breaks down information silos and offers a holistic view that can be shared across teams — from engineering to sales and marketing.

This enhanced visibility enables engineers and designers to simulate and optimize product designs, facility layouts, energy use and manufacturing processes before physical production begins. It also deepens insight and collaboration, helping stakeholders make more informed decisions that improve efficiency and reduce the costly errors and last-minute changes that can cause significant waste.

To further transform how products and experiences are designed and manufactured, Siemens is integrating NVIDIA Omniverse Cloud application programming interfaces into its Siemens Xcelerator platform, starting with Teamcenter X, its cloud-based product lifecycle management software.

These integrations enable Siemens to bring the power of photorealistic visualization to complex engineering data and workflows, allowing companies to create physics-based digital twins that help eliminate workflow waste and errors.

Siemens and NVIDIA have demonstrated how companies like HD Hyundai, a leader in sustainable ship manufacturing, are using these new capabilities to visualize and interact with complex engineering data at new levels of scale and fidelity.

HD Hyundai is unifying and visualizing complex engineering projects directly within Teamcenter X.

Physics-based digital twins are also being used to test and validate robotics and physical AI before they're deployed in real-world manufacturing facilities.

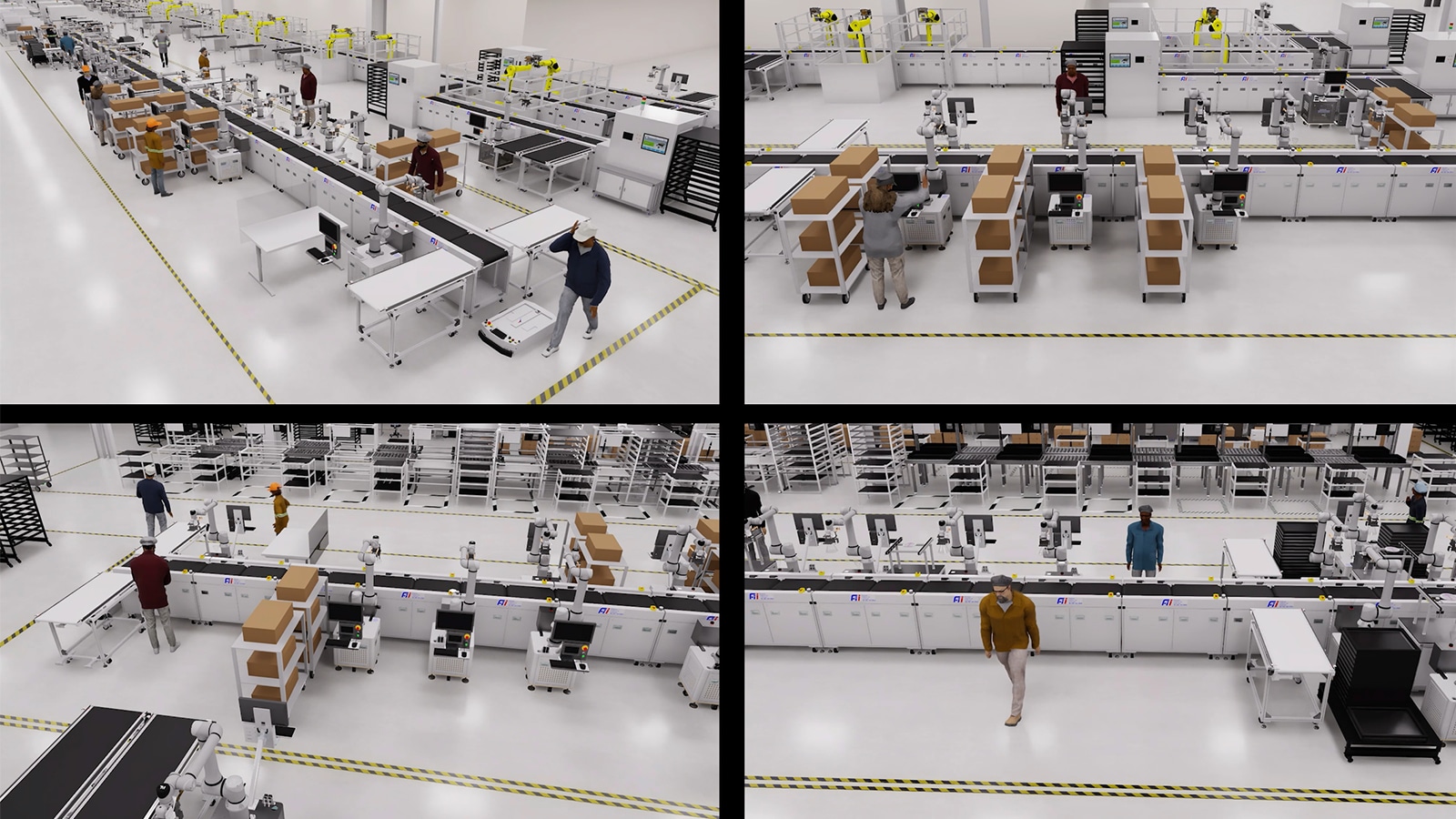

Foxconn, the world’s largest electronics manufacturer, has introduced a virtual plant that pushes the boundaries of industrial automation. Foxconn’s digital twin platform, built on Omniverse and NVIDIA Isaac, replicates a new factory in the Guadalajara, Mexico, electronics hub to allow engineers to optimize processes and train robots for efficient production of NVIDIA Blackwell systems.

By simulating the factory environment, engineers can determine the best placement for heavy robotic arms, optimize their movement and maximize operational safety, while strategically positioning thousands of sensors and video cameras to monitor the entire production process.

The use of digital twins, like those in Foxconn’s virtual factory, is becoming increasingly common in industrial settings for simulation and testing.

Foxconn's chairman, Young Liu, highlighted how the digital twin will lead to enhanced automation and efficiency, resulting in significant savings in time, cost and energy. The company expects to increase manufacturing efficiency while reducing its annual energy consumption by more than 30%.

By connecting data from Siemens Xcelerator software to its platform built on NVIDIA Omniverse and OpenUSD, the virtual plant allows Foxconn to design and train robots in a realistic, simulated environment, revolutionizing its approach to automation and sustainable manufacturing.

Making Every Watt Count

One concern for industries everywhere is that rising demand for AI is outpacing the adoption of renewable energy. This means business leaders, particularly manufacturing plant and data center operators, must maximize energy efficiency and ensure every watt is used effectively to balance decarbonization with AI growth.

The best and simplest means of optimizing energy use is to accelerate every possible workload.

Using accelerated computing platforms that integrate both GPUs and CPUs, manufacturers can significantly enhance computational efficiency.

GPUs, which are designed to perform many calculations in parallel, can outperform traditional CPU-only systems on AI tasks. GPU-accelerated systems can be up to 20x more energy efficient than CPU-only systems for AI inference and training.

This leap in efficiency has fueled substantial gains over the past decade, enabling AI to address more complex challenges while maintaining energy-efficient operations.

Building on these advances, businesses can further reduce their environmental impact by adopting key energy management strategies. These include implementing energy demand management and efficiency measures, scaling battery storage for short-duration power outages, securing renewable energy sources for baseload electricity, using renewable fuels for backup generation and exploring innovative ideas like heat reuse.

Join the Siemens and NVIDIA session at the 7X24 Exchange 2024 Fall Conference to discover how digital twins and AI are driving sustainable solutions across data centers.

The Future of Sustainable Manufacturing: Industrial Digitalization

The next frontier in manufacturing is the convergence of the digital and physical worlds in what is known as industrial digitalization, or the “industrial metaverse.” Here, digital twins become even more immersive and interactive, allowing manufacturers to make data-driven decisions faster than ever.

“We will revolutionize how products and experiences are designed, manufactured and serviced,” said Roland Busch, president and CEO of Siemens AG. “On the path to the industrial metaverse, this next generation of industrial software enables customers to experience products as they would in the real world: in context, in stunning realism and — in the future — interact with them through natural language input.”

Leading the Way With Digital Twins and Sustainable Computing

Siemens and NVIDIA's collaboration showcases the power of digital twins and accelerated computing to reduce the manufacturing industry's environmental impact. By leveraging advanced simulations, AI insights and real-time data, manufacturers can reduce waste and increase energy efficiency on their path to decarbonization.

Learn more about how Siemens and NVIDIA are accelerating sustainable manufacturing.

Read about NVIDIA’s sustainable computing efforts and check out the energy-efficiency calculator to discover potential energy and emissions savings.

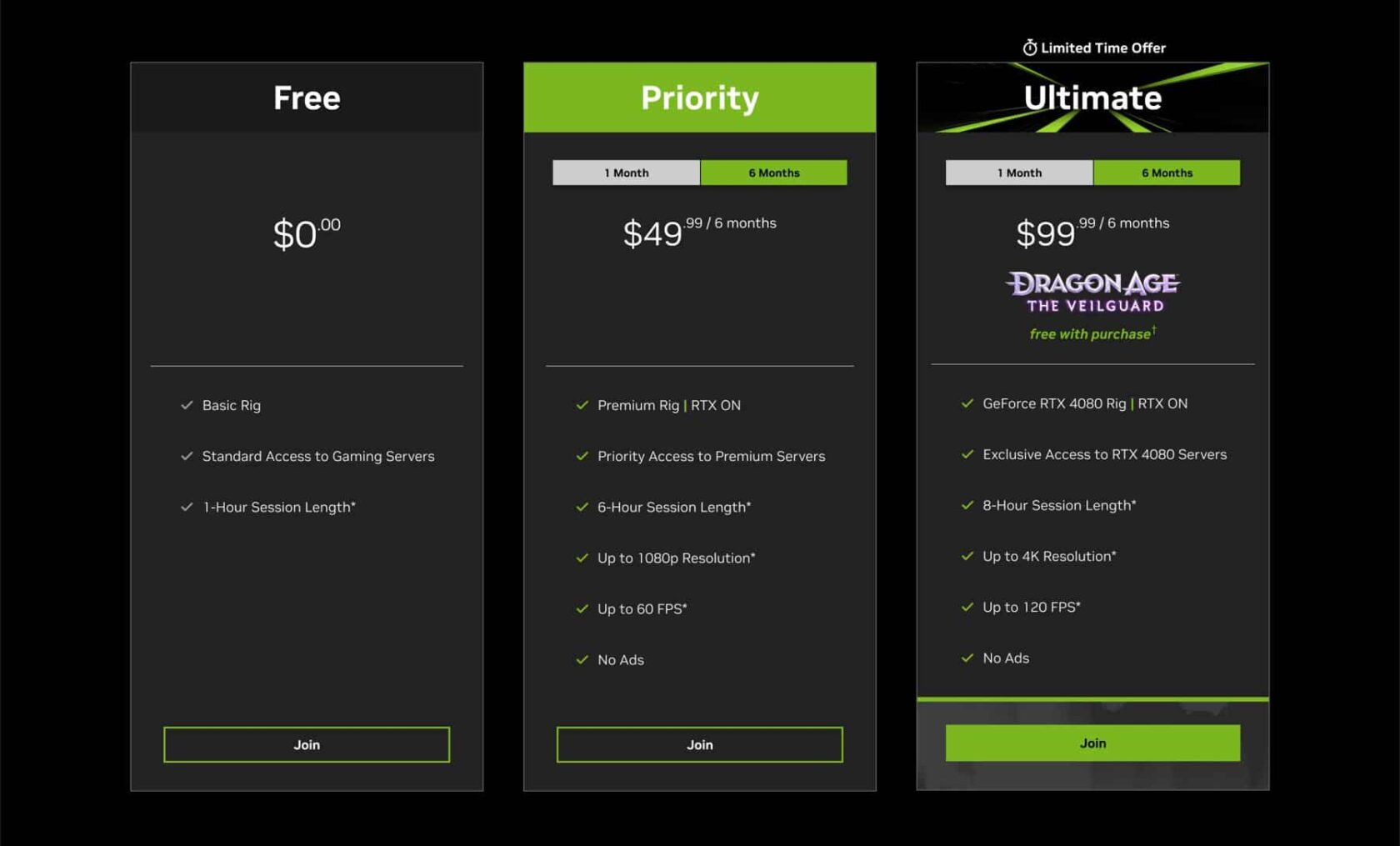
