Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and showcases new hardware, software, tools and accelerations for GeForce RTX PC and NVIDIA RTX workstation users.

Image generation models — a popular subset of generative AI — can parse and understand written language, then translate words into images in almost any style.

Representing the cutting edge of what’s possible in image generation, a new series of models from Black Forest Labs, now available to try on PCs and workstations, runs fastest on GeForce RTX and NVIDIA RTX GPUs.

Fluxible Capabilities

FLUX.1 is a text-to-image generation model suite developed by Black Forest Labs. The models are built on the diffusion transformer (DiT) architecture, which allows models with a high number of parameters to maintain efficiency. The Flux models have 12 billion parameters for high-quality image generation.

DiT models scale efficiently but are still computationally intensive, so NVIDIA RTX GPUs are essential for handling these new models, the largest of which can’t run on non-RTX GPUs without significant tweaking. Flux models now support the NVIDIA TensorRT software development kit, which improves their performance by up to 20%. Users can try Flux and other models with TensorRT in ComfyUI.
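To put the 12-billion-parameter figure in perspective, here is a rough back-of-the-envelope sketch of how much memory the transformer weights alone would occupy at a few common numeric precisions. The precisions listed are assumptions chosen for illustration, not settings confirmed by this post, and the estimate ignores text encoders, the image decoder and working memory used during generation.

```python
# Rough estimate of weight memory for a 12-billion-parameter model.
# The precisions below are illustrative assumptions, not documented settings.
PARAMS = 12_000_000_000  # parameter count quoted in the post

BYTES_PER_PARAM = {"fp32": 4, "bf16/fp16": 2, "fp8": 1}


def weight_gib(num_params: int, bytes_per_param: int) -> float:
    """Return the size of the raw weights in GiB (1 GiB = 2**30 bytes)."""
    return num_params * bytes_per_param / 2**30


for name, size in BYTES_PER_PARAM.items():
    print(f"{name:>9}: {weight_gib(PARAMS, size):.1f} GiB")
```

At 16-bit precision the weights alone come to roughly 22 GiB, which helps explain why the largest models need high-memory GPUs or extra tweaking to run locally.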

Flux Appeal

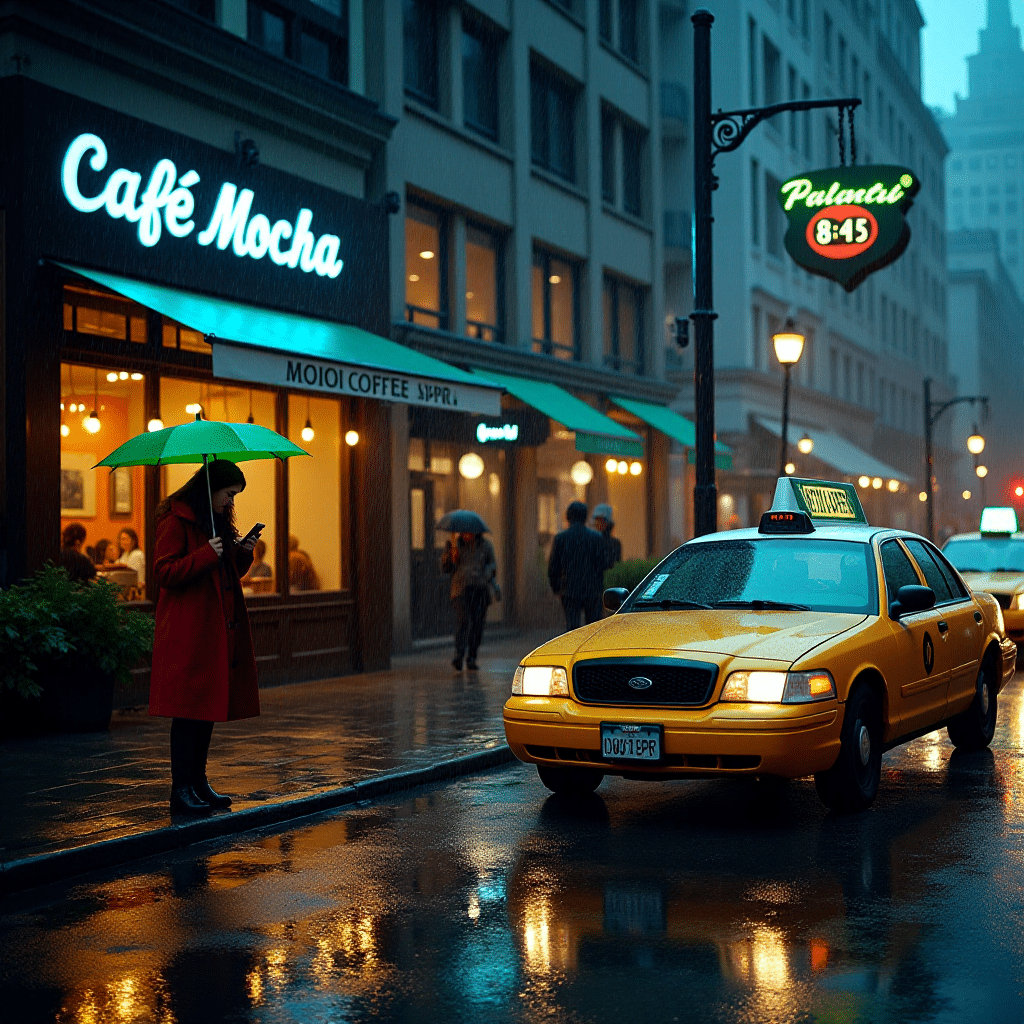

FLUX.1 excels in generating high-quality, diverse images with exceptional prompt adherence, which refers to how accurately the AI interprets and executes instructions. High prompt adherence means the generated image closely matches the text prompt’s described elements, style and mood. Low prompt adherence results in images that may partially or completely deviate from given instructions.

FLUX.1 is noted for its ability to render human anatomy accurately, including challenging, intricate features like hands and faces. FLUX.1 also significantly improves the generation of legible text within images, addressing another common challenge in text-to-image models. This makes FLUX.1 models suitable for applications that require precise text representation, such as promotional materials and book covers.

FLUX.1 is available in three variants, offering users choices to best fit their workflows without sacrificing quality:

- FLUX.1 pro: State-of-the-art quality for enterprise users; accessible through an application programming interface.

- FLUX.1 dev: A distilled, free version of FLUX.1 pro that still provides high quality.

- FLUX.1 schnell: The fastest model, ideal for local development and personal use; has a permissive Apache 2.0 license.

The dev and schnell models are open-weight releases, and Black Forest Labs provides access to their weights on the popular platform Hugging Face. This encourages innovation and collaboration within the image generation community by allowing researchers and developers to build upon and enhance the models.

Embraced by the Community

The Flux models’ dev and schnell variants were downloaded more than 2 million times on Hugging Face in less than three weeks after their launch.

Users have praised FLUX.1 for its ability to produce visually stunning images with exceptional detail and realism, as well as to process complex prompts without requiring extensive parameter adjustments.

In addition, FLUX.1’s versatility in handling various artistic styles and efficiency in quickly generating images make it a valuable tool for both personal and professional projects.

Get Started

Users can access FLUX.1 using popular community web UIs like ComfyUI. The community-run ComfyUI Wiki includes step-by-step instructions for getting started.
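For readers who prefer scripting to a graphical interface, the FLUX.1 schnell weights on Hugging Face can also be loaded from Python. The following is a minimal sketch, assuming the Hugging Face diffusers library and PyTorch are installed; neither is mentioned in this post. The sampling values follow the published schnell model card, while the helper names `schnell_settings` and `generate_image` and the output filename are hypothetical and exist only for illustration.

```python
from typing import Any


def schnell_settings(prompt: str, seed: int = 0) -> dict[str, Any]:
    """Sampling arguments suggested on the FLUX.1 schnell model card."""
    return {
        "prompt": prompt,
        "guidance_scale": 0.0,       # schnell is distilled to run without guidance
        "num_inference_steps": 4,    # few-step sampling is what makes it fast
        "max_sequence_length": 256,  # prompt token limit used for schnell
        "seed": seed,                # consumed below to build a random generator
    }


def generate_image(prompt: str, out_path: str = "flux-schnell.png", seed: int = 0) -> str:
    """Generate one image and save it. Downloads the weights on first use."""
    # Imported lazily so schnell_settings() works without the GPU stack installed.
    import torch
    from diffusers import FluxPipeline

    pipe = FluxPipeline.from_pretrained(
        "black-forest-labs/FLUX.1-schnell", torch_dtype=torch.bfloat16
    )
    # Assumption: offloading idle components to CPU helps on GPUs with less VRAM.
    pipe.enable_model_cpu_offload()

    settings = schnell_settings(prompt, seed)
    generator = torch.Generator("cpu").manual_seed(settings.pop("seed"))
    image = pipe(generator=generator, **settings).images[0]
    image.save(out_path)
    return out_path
```

Calling `generate_image("a lighthouse at dusk, watercolor")` would download the model on first run and write a PNG to the working directory. Generation time and memory use depend heavily on the GPU.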

Many YouTube creators, such as MDMZ, also offer video tutorials on Flux models.

Share your generated images on social media using the hashtag #fluxRTX for a chance to be featured on NVIDIA AI’s channels.

Generative AI is transforming gaming, videoconferencing and interactive experiences of all kinds. Make sense of what’s new and what’s next by subscribing to the AI Decoded newsletter.

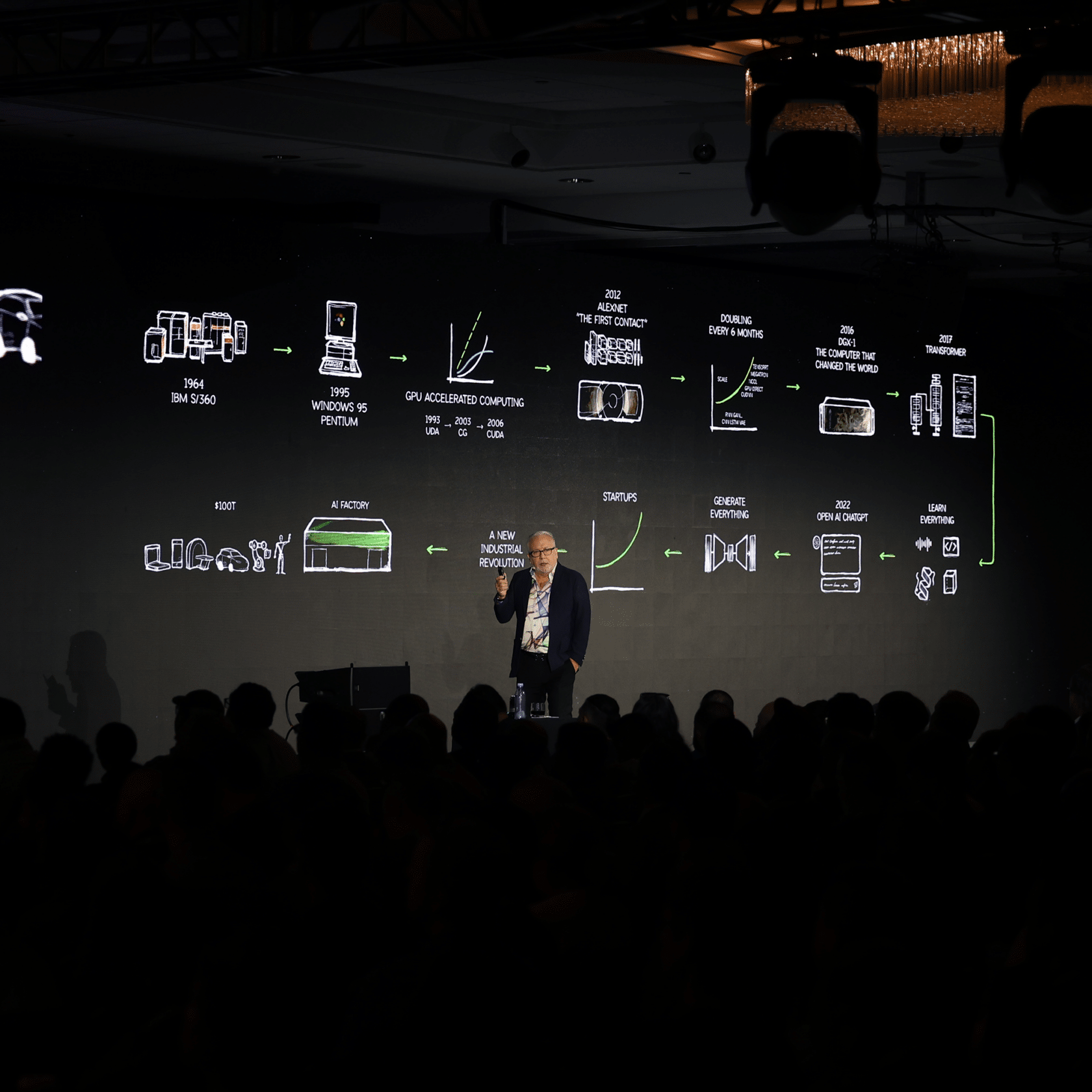
