Since the advent of the computer age, industries have been so awash in stored data that most of it never gets put to use.

This data is estimated at roughly 120 zettabytes, the equivalent of more than a hundred billion terabytes, or more than 120x the number of grains of sand on every beach around the globe. Now, the world’s industries are putting that untamed data to work by building and customizing large language models (LLMs).

As 2025 approaches, industries such as healthcare, telecommunications, entertainment, energy, robotics, automotive and retail are using those models, combining them with their proprietary data and gearing up to create AI that can reason.

The NVIDIA experts below focus on some of the industries that deliver $88 trillion worth of goods and services globally each year. They predict that AI that can harness data at the edge and deliver near-instantaneous insights is coming to hospitals, factories, customer service centers, cars and mobile devices near you.

But first, let’s hear AI’s predictions for AI. When asked, “What will be the top trends in AI in 2025 for industries?” both Perplexity and ChatGPT 4.0 responded that agentic AI sits atop the list alongside edge AI, AI cybersecurity and AI-driven robots.

Agentic AI is a new category of generative AI that operates virtually autonomously. It can make complex decisions and take actions based on continuous learning and analysis of vast datasets. Agentic AI is adaptable, has defined goals, can correct itself and can chat with other AI agents or reach out to a human for help.

Now, hear from NVIDIA experts on what to expect in the year ahead:

Kimberly Powell

Vice President of Healthcare

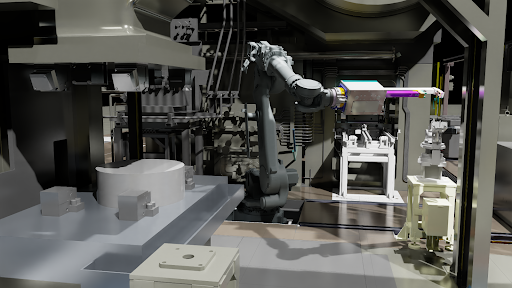

Human-robotic interaction: Robots will assist human clinicians in a variety of ways, from understanding and responding to human commands, to performing and assisting in complex surgeries.

This is being made possible by digital twins, simulation and AI that train and test robotic systems in virtual environments to reduce risks associated with real-world trials. These tools also can train robots to react in virtually any scenario, enhancing their adaptability and performance across different clinical situations.

New virtual worlds for training robots to perform complex tasks will make autonomous surgical robots a reality. These surgical robots will perform complex surgical tasks with precision, reducing patient recovery times and decreasing the cognitive workload for surgeons.

Digital health agents: The dawn of agentic AI and multi-agent systems will address the existential challenges of workforce shortages and the rising cost of care.

Administrative health services will become digital humans taking notes for you or making your next appointment — introducing an era of services delivered by software and birthing a service-as-a-software industry.

Patient experience will be transformed with always-on, personalized care services while healthcare staff will collaborate with agents that help them reduce clerical work, retrieve and summarize patient histories, and recommend clinical trials and state-of-the-art treatments for their patients.

Drug discovery and design AI factories: Just as ChatGPT can generate an email or a poem without putting a pen to paper for trial and error, generative AI models in drug discovery can liberate scientific thinking and exploration.

Techbio and biopharma companies have begun combining models that generate, predict and optimize molecules to explore the near-infinite possible target drug combinations before going into time-consuming and expensive wet lab experiments.

The drug discovery and design AI factories will consume all wet lab data, refine AI models and redeploy those models — improving each experiment by learning from the previous one. These AI factories will shift the industry from a discovery process to a design and engineering one.

Rev Lebaredian

Vice President of Omniverse and Simulation Technology

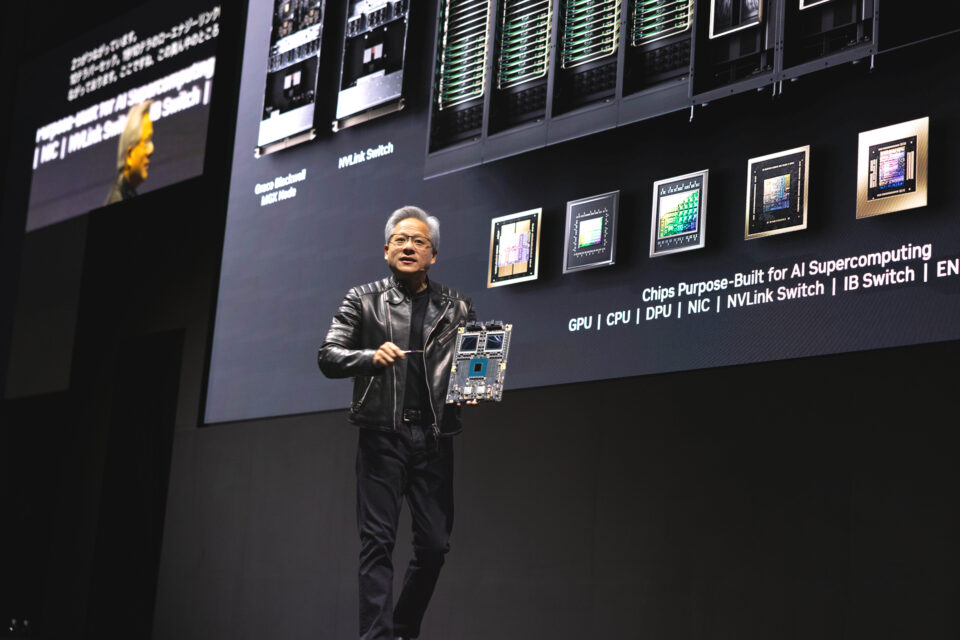

Let’s get physical (AI, that is): Getting ready for AI models that can perceive, understand and interact with the physical world is one challenge enterprises will race to tackle.

While LLMs require reinforcement learning largely in the form of human feedback, physical AI needs to learn in a “world model” that mimics the laws of physics. Large-scale physically based simulations are allowing the world to realize the value of physical AI through robots by accelerating the training of physical AI models and enabling continuous training in robotic systems across every industry.

Cheaper by the dozen: In addition to their smarts (or lack thereof), one big factor that has slowed adoption of humanoid robots has been affordability. As agentic AI brings new intelligence to robots, though, volume will pick up and costs will come down sharply. The average cost of industrial robots is expected to drop to $10,800 in 2025, continuing a decline from $46,000 in 2010 and $27,000 in 2017. As these devices become significantly cheaper, they’ll become as commonplace across industries as mobile devices are.

Deepu Talla

Vice President of Robotics and Edge Computing

Redefining robots: When people think of robots today, they usually picture autonomous mobile robots (AMRs), manipulator arms or humanoids. But tomorrow’s robots will be autonomous systems that perceive, reason, plan and act, then learn.

Soon we’ll be thinking of robots embodied everywhere from surgical rooms and data centers to warehouses and factories. Even traffic control systems or entire cities will be transformed from static, manually operated systems to autonomous, interactive systems embodied by physical AI.

The rise of small language models: To improve the functionality of robots operating at the edge, expect to see the rise of small language models that are energy-efficient and avoid latency issues associated with sending data to data centers. The shift to small language models in edge computing will improve inference in a range of industries, including automotive, retail and advanced robotics.

Kevin Levitt

Global Director of Financial Services

AI agents boost firm operations: AI-powered agents will be deeply integrated into the financial services ecosystem, improving customer experiences, driving productivity and reducing operational costs.

AI agents will take every form based on each financial services firm’s needs. Human-like 3D avatars will take requests and interact directly with clients, while text-based chatbots will summarize thousands of pages of data and documents in seconds to deliver accurate, tailored insights to employees across all business functions.

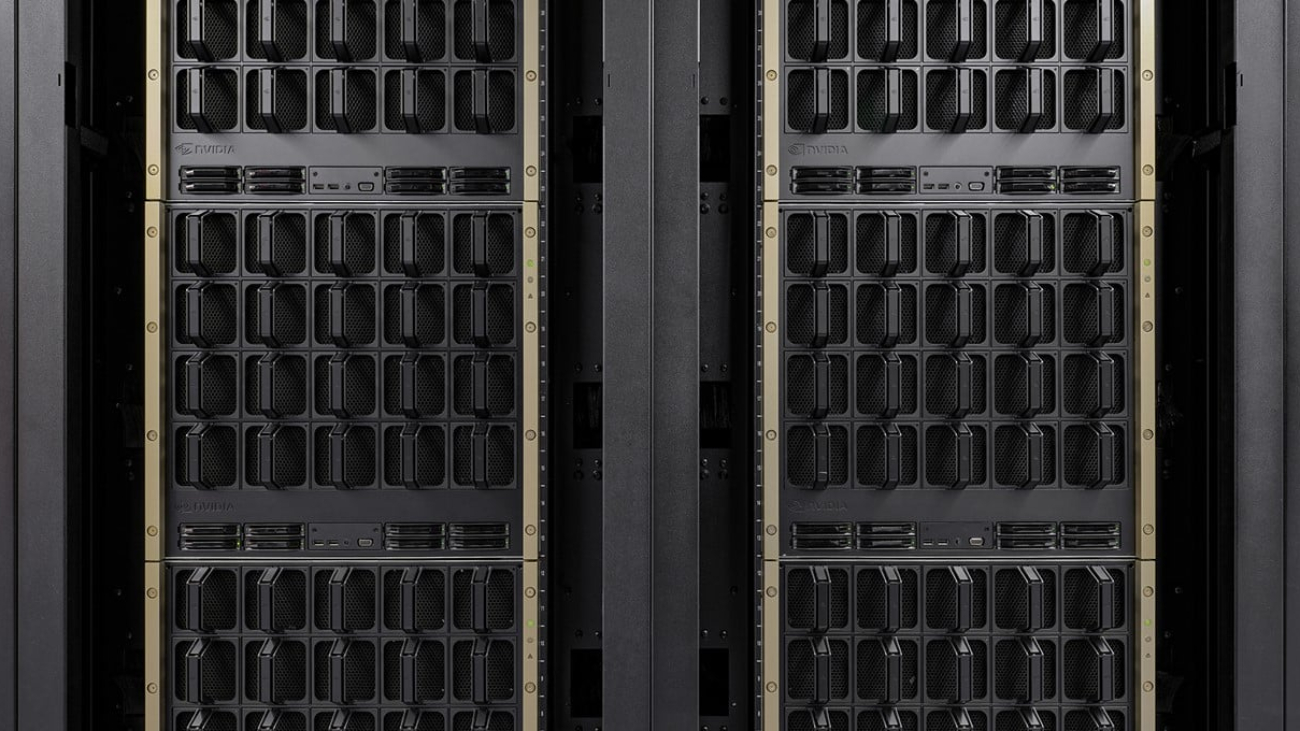

AI factories become table stakes: AI use cases in the industry are exploding. This includes improving identity verification for anti-money laundering and know-your-customer regulations, reducing false positives for transaction fraud and generating new trading strategies to improve market returns. AI also is automating document management, reducing funding cycles to help consumers and businesses on their financial journeys.

To capitalize on opportunities like these, financial institutions will build AI factories that use full-stack accelerated computing to maximize performance and utilization. These factories will power AI-enabled applications that serve hundreds, if not thousands, of use cases, helping institutions set themselves apart from the competition.

AI-assisted data governance: Due to the sensitive nature of financial data and stringent regulatory requirements, governance will be a priority for firms as they use data to create reliable and legal AI applications, including for fraud detection, predictions and forecasting, real-time calculations and customer service.

Firms will use AI models to assist in the structure, control, orchestration, processing and utilization of financial data, making the process of complying with regulations and safeguarding customer privacy smoother and less labor intensive. AI will be the key to making sense of and deriving actionable insights from the industry’s stockpile of underutilized, unstructured data.

Richard Kerris

Vice President of Media and Entertainment

Let AI entertain you: AI will continue to revolutionize entertainment with hyperpersonalized content on every screen, from TV shows to live sports. Using generative AI and advanced vision-language models, platforms will offer immersive experiences tailored to individual tastes, interests and moods. Imagine teaser images and sizzle reels crafted to capture the essence of a new show or live event and create an instant personal connection.

In live sports, AI will enhance accessibility and cultural relevance, providing language dubbing, tailored commentary and local adaptations. AI will also elevate binge-watching by adjusting pacing, quality and engagement options in real time to keep fans captivated. This new level of interaction will transform streaming from a passive experience into an engaging journey that brings people closer to the action and each other.

AI-driven platforms will also foster meaningful connections with audiences by tailoring recommendations, trailers and content to individual preferences. AI’s hyperpersonalization will allow viewers to discover hidden gems, reconnect with old favorites and feel seen. For the industry, AI will drive growth and innovation, introducing new business models and enabling global content strategies that celebrate unique viewer preferences, making entertainment feel boundless, engaging and personally crafted.

Ronnie Vasishta

Senior Vice President of Telecoms

The AI connection: Telecommunications providers will begin to deliver generative AI applications and 5G connectivity over the same network. AI radio access network (AI-RAN) will enable telecom operators to transform traditional single-purpose base stations from cost centers into revenue-producing assets capable of providing AI inference services to devices, while more efficiently delivering the best network performance.

AI agents to the rescue: The telecommunications industry will be among the first to dial into agentic AI to perform key business functions. Telco operators will use AI agents for a wide variety of tasks, from suggesting money-saving plans to customers and troubleshooting network connectivity, to answering billing questions and processing payments.

More efficient, higher-performing networks: AI also will be used at the wireless network layer to enhance efficiency, deliver site-specific learning and reduce power consumption. Using AI as an intelligent performance improvement tool, operators will be able to continuously observe network traffic, predict congestion patterns and make adjustments before failures happen, allowing for optimal network performance.

Answering the call on sovereign AI: Nations will increasingly turn to telcos — which have proven experience managing complex, distributed technology networks — to achieve their sovereign AI objectives. The trend will spread quickly across Europe and Asia, where telcos in Switzerland, Japan, Indonesia and Norway are already partnering with national leaders to build AI factories that can use proprietary, local data to help researchers, startups, businesses and government agencies create AI applications and services.

Xinzhou Wu

Vice President of Automotive

Pedal to generative AI metal: Autonomous vehicles will become more performant as developers tap into advancements in generative AI. For example, harnessing foundation models, such as vision language models, provides an opportunity to use internet-scale knowledge to solve one of the hardest problems in the autonomous vehicle (AV) field, namely that of efficiently and safely reasoning through rare corner cases.

Simulation unlocks success: More broadly, new AI-based tools will enable breakthroughs in how AV development is carried out. For example, advances in generative simulation will enable the scalable creation of complex scenarios aimed at stress-testing vehicles for safety purposes. Aside from allowing for testing unusual or dangerous conditions, simulation is also essential for generating synthetic data to enable end-to-end model training.

Three-computer approach: Effectively, new advances in AI will catalyze AV software development across the three key computers underpinning AV development: one for training the AI-based stack in the data center, another for simulation and validation, and a third, in the vehicle, to process real-time sensor data for safe driving. Together, these systems will enable continuous improvement of AV software for enhanced safety and performance of cars, trucks, robotaxis and beyond.

Marc Spieler

Senior Managing Director of Global Energy Industry

Welcoming the smart grid: Do you know when your home’s daily electricity use peaks? You will soon, as utilities around the world embrace smart meters that use AI to broadly manage their grid networks, from big power plants and substations to, now, the home.

As the smart grid takes shape, smart meters that combine software, sensors and accelerated computing (once deemed too expensive to be installed in millions of homes) will alert utilities when trees in a backyard brush up against power lines or when it makes sense to offer big rebates to buy back the excess power stored through rooftop solar installations.

Powering up: Delivering the optimal power stack has always been mission-critical for the energy industry. In the era of generative AI, utilities will address this issue in ways that reduce environmental impact.

In 2025, expect to see a broader embrace of nuclear power as one clean-energy path the industry will take. Demand for natural gas also will grow as it replaces coal and other forms of energy. These resurgent forms of energy are being helped by the increased use of accelerated computing, simulation technology, AI and 3D visualization, which help optimize design, pipeline flows and storage. We’ll see the same happening at oil and gas companies, which are looking to reduce the impact of energy exploration and production.

Azita Martin

Vice President of Retail, Consumer-Packaged Goods and Quick-Service Restaurants

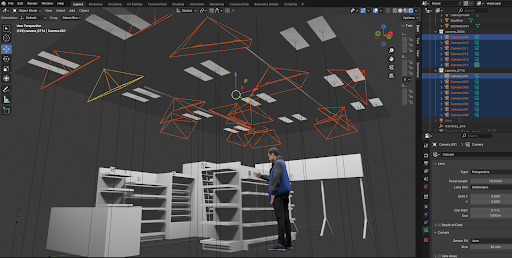

Software-defined retail: Supercenters and grocery stores will become software-defined, each running computer vision and sophisticated AI algorithms at the edge. The transition will accelerate checkout, optimize merchandising and reduce shrink — the industry term for a product being lost or stolen.

Each store will be connected to a headquarters AI network, using collective data to become a perpetual learning machine. Software-defined stores that continually learn from their own data will transform the shopping experience.

Intelligent supply chain: Intelligent supply chains created using digital twins, generative AI, machine learning and AI-based solvers will drive billions of dollars in labor productivity and operational efficiencies. Digital twin simulations of stores and distribution centers will optimize layouts to increase in-store sales and accelerate throughput in distribution centers.

Agentic robots working alongside associates will load and unload trucks, stock shelves and pack customer orders. Also, last-mile delivery will be enhanced with AI-based routing optimization solvers, allowing products to reach customers faster while reducing vehicle fuel costs.
