Well before OpenAI upended the technology industry with its release of ChatGPT in the fall of 2022, Douwe Kiela already understood why large language models, on their own, could only offer partial solutions for key enterprise use cases.

The young Dutch CEO of Contextual AI had been deeply influenced by two seminal papers from Google and OpenAI, which together outlined the recipe for creating fast, efficient transformer-based generative AI models and LLMs.

Soon after those papers were published in 2017 and 2018, Kiela and his team of AI researchers at Facebook, where he worked at that time, realized LLMs would face profound data freshness issues.

They knew that when foundation models like LLMs were trained on massive datasets, the training did more than imbue the model with a metaphorical “brain” for “reasoning” across data. The training data also represented the entirety of the knowledge a model could draw on to generate answers to users’ questions.

Kiela’s team realized that, unless an LLM could access relevant real-time data in an efficient, cost-effective way, even the smartest LLM wouldn’t be very useful for many enterprises’ needs.

So, in the spring of 2020, Kiela and his team published a seminal paper of their own, which introduced the world to retrieval-augmented generation. RAG, as it’s commonly called, is a method for continuously and cost-effectively supplying foundation models with new, relevant information at query time, including from a user’s own files and from the internet. With RAG, an LLM’s knowledge is no longer confined to its training data, which makes models far more accurate, impactful and relevant to enterprise users.

Today, Kiela and Amanpreet Singh, a former colleague at Facebook, are the CEO and CTO of Contextual AI, a Silicon Valley-based startup that recently closed an $80 million Series A round. Investors in the round included NVIDIA’s investment arm, NVentures. Contextual AI is also a member of NVIDIA Inception, a program designed to nurture startups. With roughly 50 employees, the company says it plans to double in size by the end of the year.

The platform Contextual AI offers is called RAG 2.0. In many ways, it’s an advanced, productized version of the RAG architecture Kiela and Singh first described in their 2020 paper.

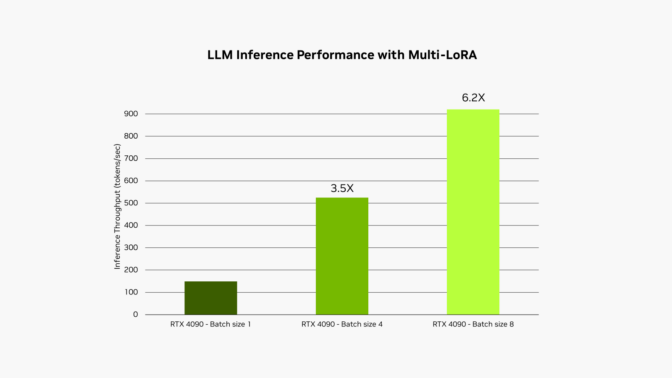

RAG 2.0 can achieve roughly 10x better accuracy and performance per parameter than competing offerings, Kiela says.

That means, for example, that a 70-billion-parameter model that would typically require significant compute resources could instead run on far smaller infrastructure, one built to handle only 7 billion parameters, without sacrificing accuracy. This type of optimization opens up edge use cases with smaller computers that can perform at significantly higher-than-expected levels.
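To give a rough sense of the infrastructure gap between those two model sizes, the sketch below estimates the memory needed just to hold the weights. It assumes 16-bit weights (2 bytes per parameter) and ignores activations, caches and runtime overhead; the function name `weight_memory_gb` is made up for illustration and the figures are not Contextual AI's.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory for model weights alone, in gigabytes.

    Assumption: 2 bytes per parameter (16-bit weights). Real deployments
    also need memory for activations and caches, which is ignored here.
    """
    return num_parameters * bytes_per_parameter / 1e9


large_model_gb = weight_memory_gb(70e9)  # 70-billion-parameter model
small_model_gb = weight_memory_gb(7e9)   # 7-billion-parameter model

print(f"70B weights: ~{large_model_gb:.0f} GB")
print(f"7B weights:  ~{small_model_gb:.0f} GB")
```

Under these assumptions the larger model needs about ten times the weight memory of the smaller one, which is why matching its accuracy on the smaller footprint would matter for edge deployments.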

“When ChatGPT happened, we saw this enormous frustration where everybody recognized the potential of LLMs, but also realized the technology wasn’t quite there yet,” explained Kiela. “We knew that RAG was the solution to many of the problems. And we also knew that we could do much better than what we outlined in the original RAG paper in 2020.”

Integrated Retrievers and Language Models Offer Big Performance Gains

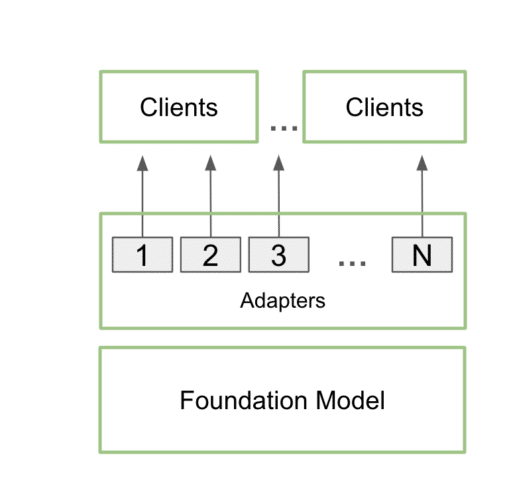

The key to Contextual AI’s solutions is the close integration of its retriever architecture, the “R” in RAG, with an LLM’s architecture, which is the generator, or “G,” in the term. In RAG, a retriever interprets a user’s query, checks various sources to identify relevant documents or data and then brings that information back to an LLM, which reasons across this new information to generate a response.
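The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a toy illustration, not Contextual AI's system: the retriever is a simple bag-of-words cosine similarity, the documents are invented, and `build_prompt` is a hypothetical stand-in for the step that hands retrieved passages to an LLM.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """The "R" step: return up to k documents that best match the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query, passages):
    """Prepare the input for the "G" step: the query plus retrieved context."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


# Invented example documents.
DOCS = [
    "The Q3 revenue report was filed on October 12.",
    "Office plants should be watered twice a week.",
    "Revenue in Q3 grew 8 percent over Q2.",
]

question = "What was Q3 revenue growth?"
passages = retrieve(question, DOCS)
prompt = build_prompt(question, passages)
print(prompt)
```

In a real deployment the prompt would be sent to a language model, and the retriever would typically use learned embeddings rather than word counts.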

Since around 2020, RAG has become the dominant approach for enterprises that deploy LLM-powered chatbots. As a result, a vibrant ecosystem of RAG-focused startups has formed.

One of the ways Contextual AI differentiates itself from competitors is by how it refines and improves its retrievers through backpropagation, a process of adjusting the weights and biases of the underlying neural network.

And, instead of training and adjusting two distinct neural networks, the retriever and the LLM, separately, Contextual AI offers a unified state-of-the-art platform that aligns the retriever and language model and then tunes them both through backpropagation.

Synchronizing and adjusting weights and biases across distinct neural networks is difficult, but the result, Kiela says, is tremendous gains in precision, response quality and optimization. And because the retriever and generator are so closely aligned, the responses they create are grounded in common data, which means their answers are far less likely than those of other RAG architectures to include made-up or “hallucinated” data, which a model might offer when it doesn’t “know” an answer.

“Our approach is technically very challenging, but it leads to much stronger coupling between the retriever and the generator, which makes our system far more accurate and much more efficient,” said Kiela.
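As a rough illustration of what tuning a retriever and a generator through one backpropagation pass can look like, the toy sketch below trains both against a single shared loss. It is not Contextual AI's method, which the article does not detail. It assumes a two-document retriever with learnable scores, a one-parameter "generator," and a loss that marginalizes the answer probability over retrieved documents; every name and number is invented for the example.

```python
import math


def softmax(logits):
    """Convert retriever scores into a probability over documents."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# Assumed toy feature: +1 if a document supports the right answer, -1 if not.
FEATURES = [1.0, -1.0]


def forward(retriever_logits, generator_weight):
    doc_probs = softmax(retriever_logits)  # retriever: p(doc | query)
    answer_probs = [sigmoid(generator_weight * f) for f in FEATURES]  # generator: p(answer | doc)
    marginal = sum(p * s for p, s in zip(doc_probs, answer_probs))  # p(answer | query)
    return doc_probs, answer_probs, marginal


def train(steps=200, lr=0.5):
    retriever_logits = [0.0, 0.0]
    generator_weight = 0.1
    losses = []
    for _ in range(steps):
        doc_probs, answer_probs, marginal = forward(retriever_logits, generator_weight)
        losses.append(-math.log(marginal))  # one loss shared by both components
        # Gradient of the shared loss with respect to the retriever scores.
        grad_logits = [-(p * (s - marginal)) / marginal
                       for p, s in zip(doc_probs, answer_probs)]
        # Gradient of the same loss with respect to the generator weight.
        grad_weight = -sum(p * s * (1.0 - s) * f
                           for p, s, f in zip(doc_probs, answer_probs, FEATURES)) / marginal
        retriever_logits = [r - lr * g for r, g in zip(retriever_logits, grad_logits)]
        generator_weight -= lr * grad_weight
    return retriever_logits, generator_weight, losses


retriever_logits, generator_weight, losses = train()
doc_probs = softmax(retriever_logits)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(f"retriever preference for the helpful document: {doc_probs[0]:.3f}")
```

Because both sets of parameters are updated from the same loss, the retriever learns to favor the document that helps the generator, and the generator learns to rely on what the retriever surfaces. That coupling is the general idea behind end-to-end tuning, shown here at a scale far removed from production systems.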

Tackling Difficult Use Cases With State-of-the-Art Innovations

RAG 2.0 is essentially LLM-agnostic, which means it works across different open-source language models, like Mistral or Llama, and can accommodate customers’ model preferences. The startup’s retrievers were developed using NVIDIA’s Megatron-LM on a mix of NVIDIA H100 and A100 Tensor Core GPUs hosted in Google Cloud.

One of the significant challenges every RAG solution faces is how to identify the most relevant information to answer a user’s query when that information may be stored in a variety of formats, such as text, video or PDF.

Contextual AI overcomes this challenge through a “mixture of retrievers” approach, which aligns different retrievers’ sub-specialties with the different formats data is stored in.

Contextual AI deploys a combination of RAG types, plus a neural reranking algorithm, to identify information stored in different formats that, taken together, is optimally responsive to the user’s query.

For example, if some information relevant to a query is stored in a video file format, then one of the RAGs deployed to identify relevant data would likely be a Graph RAG, which is very good at understanding temporal relationships in unstructured data like video. If other data were stored in a text or PDF format, then a vector-based RAG would simultaneously be deployed.

The neural reranker would then help organize the retrieved data, and the prioritized information would be fed to the LLM to generate an answer to the initial query.
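The overall shape of that pipeline, several format-specific retrievers feeding one reranker, can be sketched as follows. This is a simplified illustration under stated assumptions: both retrievers use plain keyword overlap (the video retriever searches invented timestamped captions and is not a Graph RAG), and the `rerank` function uses a word-overlap score as a stand-in for a neural reranker. All names and data are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    source: str  # which retriever produced this candidate
    text: str


def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def text_retriever(query, corpus):
    """Retriever specialized for text documents (keyword overlap stand-in)."""
    q = tokens(query)
    return [Candidate("text", doc) for doc in corpus if q & tokens(doc)]


def video_retriever(query, segments):
    """Retriever specialized for video, searching (start_seconds, caption) pairs."""
    q = tokens(query)
    return [Candidate("video", f"[{start}s] {caption}")
            for start, caption in segments if q & tokens(caption)]


def rerank(query, candidates, top_k=3):
    """Stand-in for a neural reranker: order candidates by word-overlap ratio."""
    q = tokens(query)

    def score(candidate):
        t = tokens(candidate.text)
        return len(q & t) / len(q | t)

    return sorted(candidates, key=score, reverse=True)[:top_k]


# Invented example data in two formats.
TEXT_DOCS = [
    "Pump P-7 maintenance manual: replace the seal every 500 hours.",
    "Cafeteria menu for this week.",
]
VIDEO_SEGMENTS = [
    (42, "Technician removes the pump seal"),
    (300, "Closing remarks"),
]

query = "how to replace the pump seal"
candidates = text_retriever(query, TEXT_DOCS) + video_retriever(query, VIDEO_SEGMENTS)
ranked = rerank(query, candidates)
for candidate in ranked:
    print(candidate.source, "|", candidate.text)
```

The ranked candidates would then be passed to the language model as context. Keeping each retriever behind the same `Candidate` interface is one simple way to let the mixture vary by use case without changing the reranking step.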

“To maximize performance, we almost never use a single retrieval approach — it’s usually a hybrid because they have different and complementary strengths,” Kiela said. “The exact right mixture depends on the use case, the underlying data and the user’s query.”

By essentially fusing the RAG and LLM architectures, and offering many routes for finding relevant information, Contextual AI offers customers significantly improved performance. In addition to greater accuracy, its offering lowers latency thanks to fewer API calls between the retriever’s and the LLM’s neural networks.

Because of its highly optimized architecture and lower compute demands, RAG 2.0 can run in the cloud, on premises or fully disconnected. And that makes it relevant to a wide array of industries, from fintech and manufacturing to medical devices and robotics.

“The use cases we’re focusing on are the really hard ones,” Kiela said. “Beyond reading a transcript, answering basic questions or summarization, we’re focused on the very high-value, knowledge-intensive roles that will save companies a lot of money or make them much more productive.”
