Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and showcases new hardware, software, tools and accelerations for RTX PC users.

Content generators — whether producing language, 2D images, 3D models or videos — are giving the creative community tools that bring visions to life faster.

To help developers build these new generative AI tools, NVIDIA has set up NVIDIA AI Foundry. It helps companies train generative AI models on their own licensed data using NVIDIA Edify, a multimodal AI architecture that can use simple text prompts to generate images, videos, 3D assets, 360-degree high-dynamic-range imaging and physically based rendering (PBR) materials. Using AI Foundry, companies can train bespoke AI models to generate any of these assets.

Key elements of Edify include its ability to generate multiple types of content, its superior training efficiency, which allows it to produce high-quality content while trained on fewer images, and its ability to fine-tune models to style-match or learn characters or objects.

One of the best examples of services built on NVIDIA AI Foundry and Edify is Generative AI by Getty Images, a commercially safe generative photography service. The combination of AI Foundry and Edify allows users to control their training datasets, so they can create models that fit their needs.

To avoid copyright issues, Getty Images used Edify to train the service on its own licensed content, ensuring that no famous characters or products are in the dataset. The company also shares part of the profits with the contributors, driving a new revenue stream for creators who contribute to the model.

Asset Generation With Edify

Edify can be trained to generate a variety of asset types, including images, 3D assets and 360-degree HDRi environment maps.

Edify Image can generate four high-quality 1K images in around six seconds, doubling the performance of the previous model. Images can also be converted to 4K with a generative upscaler that adds additional details.

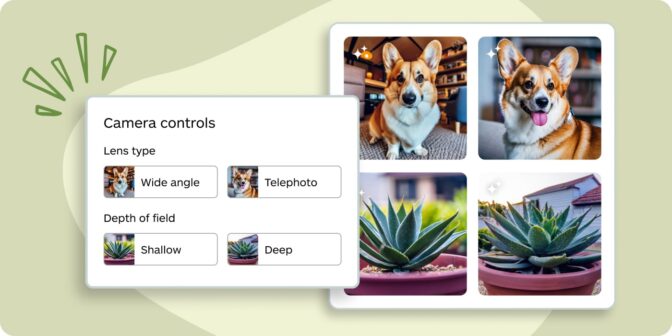

Images are highly controllable thanks to advanced prompt adherence, camera controls to specify focal length or depth of field, and ControlNets to guide the generation. The ControlNets include Sketch, which allows users to provide a sketch for the image to follow, and Depth, which copies the composition of an image.

Images can also be edited with Edify Image. InPaint allows users to add or modify content in an image. Replace, a strict InPaint, can change details such as clothing. OutPaint can expand an image to match different aspect ratios. All of this is simplified with Segment, a feature that can mask objects with just a text prompt.
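To make the idea of expanding an image to a different aspect ratio concrete, here is a minimal Python sketch of the arithmetic involved: finding the smallest canvas with a target aspect ratio that still contains the original image. It does not call Edify or any NVIDIA API. The function name `outpaint_canvas` and its return shape are hypothetical, chosen only for illustration, and the way Edify Image actually sizes its canvas is not described in this post.

```python
import math
from fractions import Fraction


def outpaint_canvas(width, height, target_w, target_h):
    """Return (new_width, new_height, pad_x, pad_y).

    Hypothetical helper: computes the smallest canvas with aspect ratio
    target_w:target_h that fully contains a width x height image.
    pad_x and pad_y are the total extra pixels a generative fill would
    need to produce horizontally and vertically.
    """
    if min(width, height, target_w, target_h) <= 0:
        raise ValueError("all dimensions must be positive")

    # Exact rational arithmetic avoids floating-point rounding surprises.
    current = Fraction(width, height)
    target = Fraction(target_w, target_h)

    if current < target:
        # Image is too narrow for the target: keep height, extend width.
        new_height = height
        new_width = math.ceil(height * target)
    else:
        # Image is too wide (or already matches): keep width, extend height.
        new_width = width
        new_height = math.ceil(width / target)

    return new_width, new_height, new_width - width, new_height - height


if __name__ == "__main__":
    # A square 1024 x 1024 image extended to a 16:9 frame.
    print(outpaint_canvas(1024, 1024, 16, 9))
```

In this sketch the padding is reported as a total per axis. How that padding is split between the left and right edges, or the top and bottom, is a composition choice left to the user.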

Edify can also create artist-ready 3D meshes. The meshes come with clean quads-based topology, up to 4K PBR materials and automatic UV mapping for easier texture editing. A fast preview mode provides results in as few as 10 seconds, which can then be turned into a full 3D mesh.

Meshes are perfect for prototyping scenes, generating background objects for set decoration or as a head start for 3D sculpting.

Edify 360 HDRi generates environment maps of natural landscapes that can be used to light a scene, for reflections and even as a background. The model can generate up to 16K HDRi images from text or image prompts. With a desired backplate in hand, users can create a custom HDRi to match instead of spending hours looking for one.

Edify’s multimodal capability is unique, enabling advanced workflows that combine different asset types. Used together with an agent, for instance, Edify allows users to prototype a full scene in a couple of minutes with a simple text prompt — like in the NVIDIA Research SIGGRAPH demo that showcased the assistive 3D world-building capabilities of NVIDIA Edify-powered models and the NVIDIA Omniverse platform.

Another use case is to combine Edify 3D and 360 HDRi with Image to give users full control of image generation. By generating the scene in 3D, artists can move objects around and frame their desired shot — and then use Edify Image to turn the prototype into a photorealistic image.

Generative AI by Getty Images

Getty Images is one of the largest content service providers and suppliers of creative visuals, editorial photography, video and music, and is one of the first places people turn to discover, purchase and share powerful visual content from the world’s best photographers and videographers.

Getty Images used NVIDIA AI Foundry to train an NVIDIA Edify Image model to power its generative AI service. Available through Generative AI by Getty Images for enterprises and Generative AI by iStock for small businesses and amateur creators, the service allows users to generate and modify images using models powered by NVIDIA Edify.

Getty Images and iStock recently updated to the latest version of Edify Image, which enables faster generation and higher prompt adherence and exposes Camera Controls.

Users can now also use the generative AI tools on preshot creative content, allowing them to edit and modify iStock’s library of visuals to rapidly iterate and perfect content. Those same capabilities will soon be available on Gettyimages.com.

Test drive Generative AI by Getty Images on ai.nvidia.com.

Generative AI is transforming gaming, videoconferencing and interactive experiences of all kinds. Make sense of what’s new and what’s next by subscribing to the AI Decoded newsletter.
