AI has seen unprecedented growth, spurring the need for new training and education resources for students and industry professionals.

NVIDIA’s latest on-demand webinar, Essential Training and Tips to Accelerate Your Career in AI, featured a panel discussion with industry experts on fostering career growth and learning in AI and other advanced technologies.

Over 1,800 attendees gained insights into how to kick-start their careers and use NVIDIA’s technologies and resources to accelerate their professional development.

Opportunities in AI

AI’s impact is touching nearly every industry, presenting new career opportunities for professionals of all backgrounds.

Lauren Silveira, a university recruiting program manager at NVIDIA, challenged attendees to take their unique education and experience and apply it in the AI field.

“You don’t have to work directly in AI to impact the industry,” said Silveira. “I knew I wouldn’t be a doctor or engineer — that wasn’t in my career path — but I could create opportunities for those that wanted to pursue those dreams.”

Kevin McFall, a principal instructor for the NVIDIA Deep Learning Institute, offered advice for people who want to build a career in AI and advanced technologies but feel overwhelmed or unsure where to start.

“Don’t try to do it all by yourself,” he said. “Don’t get focused on building everything from scratch — the best skill that you can have is being able to take pieces of code or inspiration from different resources and plug them together to make a whole.”

A key takeaway from the panelists was that students and industry professionals can significantly enhance their capabilities by drawing on tools and resources as well as their professional networks.

Through the NVIDIA Developer Program, anyone can access free software development kits, community resources and specialized courses in areas like robotics, CUDA and OpenUSD. Developers can also kick off projects with the CUDA code sample library and explore specialized guides such as “A Simple Guide to Deploying Generative AI With NVIDIA NIM.”

Spinning a Network

Staying up to date on the rapidly expanding technology industry involves more than just keeping up with the latest education and certifications.

Sabrina Koumoin, a senior software engineer at NVIDIA, spoke about the importance of networking. She believes that by sharing their learning journeys or projects on social platforms like LinkedIn, people can find like-minded peers and mentors who inspire them.

A self-taught coder, Koumoin also advocates for active engagement and accessible education. Outside of work, she has hosted multiple coding bootcamps for people looking to break into tech.

“It’s a way to show that learning technical skills can be engaging, not intimidating,” she said.

David Ajoku, founder and CEO of Demystifyd and Aware.ai, also emphasized the importance of using LinkedIn to build connections, demonstrate key accomplishments and show passion.

He outlined a three-step strategy for strengthening a LinkedIn presence, designed to help you stand out, learn more about the companies you’re most interested in and boldly share your aspirations and interests:

- Think about a company you’d like to work for and what draws you to it.

- Research thoroughly, focusing on its main activities, mission and goals.

- Be bold: create a series of posts telling your network about your career journey and which advancements at the chosen company interest you.

One attendee asked how AI might evolve over the next decade and what skills professionals should focus on to stay relevant. Louis Stewart, head of strategic initiatives at NVIDIA, replied that crafting a personal narrative and growth journey is just as important as keeping certifications and skills up to date.

“Be intentional and purposeful — have an end in mind,” he said. “That’s how you connect with future potential companies and people — it’s a skill you have to develop to stay ahead.”

Deep Dive Into Learning

NVIDIA offers a variety of programs and resources to equip the next generation of AI professionals with the skills and training needed to excel in a career in AI.

NVIDIA’s AI Learning Essentials is designed to give individuals the knowledge, skills and certifications they need to prepare for the workforce and the fast-moving field of AI. It includes free access to self-paced introductory courses and webinars on topics such as generative AI, retrieval-augmented generation (RAG) and CUDA.

The NVIDIA Deep Learning Institute (DLI) provides a diverse range of resources, including learning materials, self-paced and live trainings, and educator programs spanning AI, accelerated computing and data science, graphics and simulation, and more. It also offers technical workshops for students currently enrolled in universities.

DLI provides comprehensive training in generative AI, RAG, NVIDIA NIM inference microservices and large language models. Offerings also include the Generative AI LLMs and Generative AI Multimodal certifications, which help learners showcase their expertise and stand out from the crowd.

Get started with AI Learning Essentials, the NVIDIA Deep Learning Institute and on-demand resources.

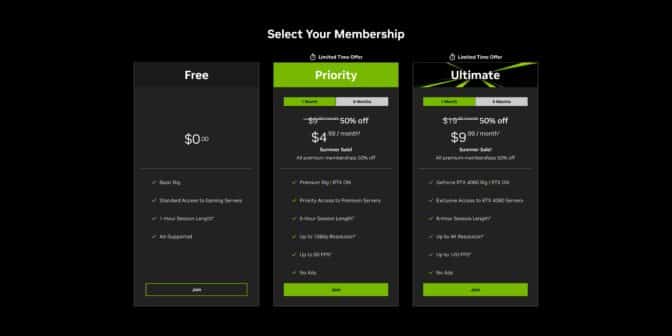
