Foxconn operates more than 170 factories around the world — the latest one a virtual plant pushing the state of the art in industrial automation.

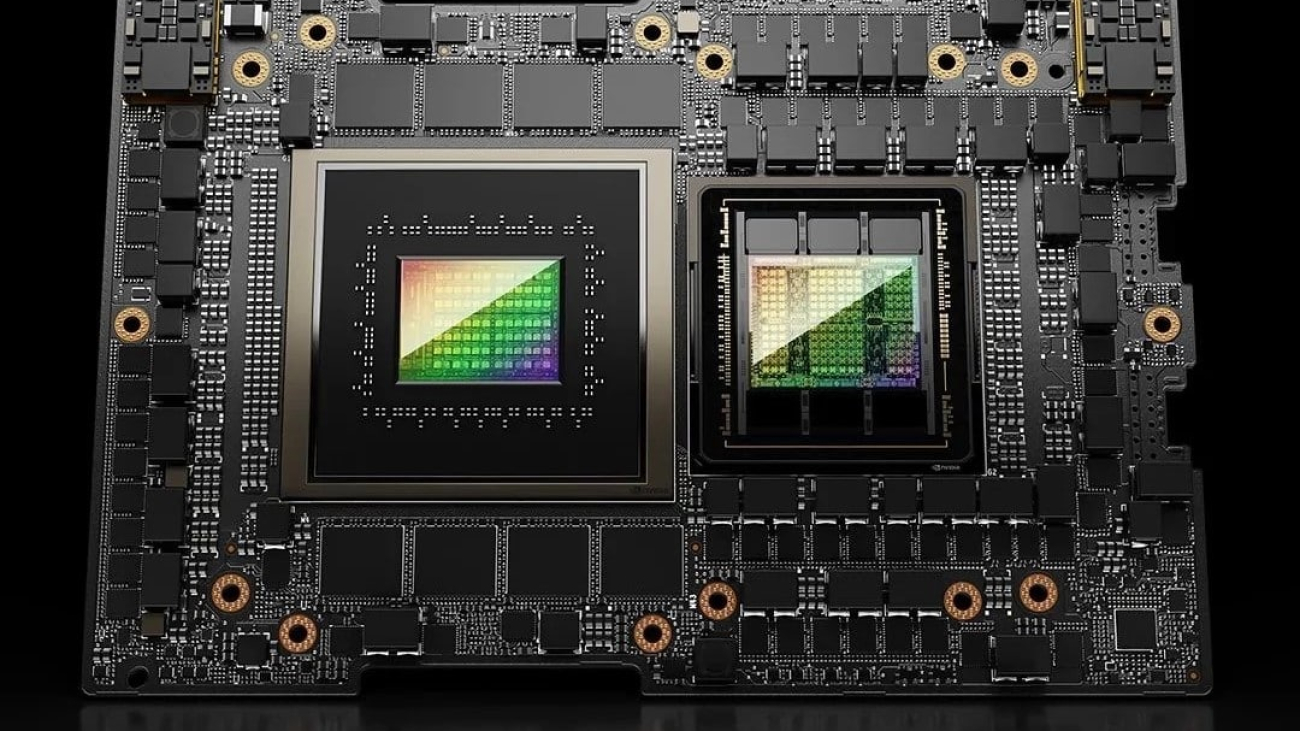

It’s the digital twin of a new factory in Guadalajara, hub of Mexico’s electronics industry. Foxconn’s engineers are defining processes and training robots in this virtual environment, so the physical plant can produce at high efficiency the next engine of accelerated computing, NVIDIA Blackwell HGX systems.

To design an optimal assembly line, factory engineers need to find the best placement for dozens of robotic arms, each weighing hundreds of pounds. To accurately monitor the overall process, they situate thousands of sensors, including many networked video cameras arranged in a matrix, to show plant operators all the right details.

Virtual Factories Create Real Savings

Such challenges are why companies like Foxconn are increasingly creating virtual factories for simulation and testing.

“Our digital twin will guide us to new levels of automation and industrial efficiency, saving time, cost and energy,” said Young Liu, chairman of the company that last year had revenues of nearly $200 billion.

Based on its efforts so far, the company anticipates that it can increase the manufacturing efficiency of complex servers using the simulated plant, leading to significant cost savings and reducing kilowatt-hour usage by over 30% annually.

Foxconn Teams With NVIDIA, Siemens

Foxconn is building its digital twin with software from the Siemens Xcelerator portfolio, including Teamcenter, together with NVIDIA Omniverse, a platform for developing 3D workflows and applications based on OpenUSD.

NVIDIA and Siemens announced in March that they will connect Siemens Xcelerator applications to NVIDIA Omniverse Cloud API microservices. Foxconn will be among the first to employ the combined services, so its digital twin is physically accurate and visually realistic.

Engineers will employ Teamcenter with Omniverse APIs to design robot work cells and assembly lines. Then they’ll use Omniverse to pull all the 3D CAD elements into one virtual factory where their robots will be trained with NVIDIA Isaac Sim.

Robots Attend a Virtual School

A growing set of manufacturers is building digital twins to streamline factory processes. Foxconn is among the first to take the next step in automation: training its AI robots in the digital twin.

Inside the Foxconn virtual factory, robot arms from manufacturers such as Epson can learn how to see, grasp and move objects with NVIDIA Isaac Manipulator, a collection of NVIDIA-accelerated libraries and AI foundation models for robot arms.

For example, the robot arms may learn how to pick up a Blackwell server and place it on an autonomous mobile robot (AMR). The arms can use Isaac Manipulator’s cuMotion to find inspection paths for products, even when objects are placed in the way.

Foxconn’s AMRs, from Taiwan’s FARobot, will learn how to see and navigate the factory floor using NVIDIA Isaac Perceptor, software that helps them build a real-time 3D map that indicates any obstacles. The robots’ routes are generated and optimized by NVIDIA cuOpt, a world-record-holding route optimization microservice.

Unlike many transport robots that need to stick to carefully drawn lines on the factory floor, these smart AMRs will navigate around obstacles to get wherever they need to go.
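
To make the idea of routing around obstacles concrete, here is a minimal sketch in Python. It is not how Isaac Perceptor or cuOpt work internally. It assumes a toy 2D occupancy grid (the real systems build a 3D map) and uses a plain breadth-first search, and the names `shortest_route` and `floor` are hypothetical, chosen only for illustration.

```python
from collections import deque

def shortest_route(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid.

    grid[r][c] == 1 marks an obstacle, 0 marks free floor.
    Assumes start and goal are free cells inside the grid.
    Returns the list of (row, col) cells from start to goal,
    or None when no route exists.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None

# Hypothetical floor map: a row of obstacles with a gap on the right.
floor = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
route = shortest_route(floor, (0, 0), (2, 0))
```

In this toy map the only way from the top-left to the bottom-left corner is through the gap on the right, so the route detours around the obstacle row instead of following a fixed line. If the map changes, the search can simply be rerun.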

A Global Trend Toward Industrial Digitalization

The Guadalajara factory is just the beginning. Foxconn is starting to design digital twins of factories around the world, including one in Taiwan where it will manufacture electric buses.

Foxconn is also deploying NVIDIA Metropolis, an application framework for smart cities and spaces, to give cameras on the shop floor AI-powered vision. That gives plant managers deeper insight into daily operations and opportunities to further streamline them and improve worker safety.

With an estimated 10 million factories worldwide, the $46 trillion manufacturing sector is a rich field for industrial digitalization.

Delta Electronics, MediaTek, MSI and Pegatron are among the other top electronics makers that revealed at COMPUTEX this week how they’re using NVIDIA AI and Omniverse to build digital twins of their factories.

Like Foxconn, they’re racing to make their factories more agile, autonomous and sustainable to serve the demand for more than a billion smartphones, PCs and servers a year.

A reference architecture shows how to develop factory digital twins with the NVIDIA AI and Omniverse platforms. Learn about the experiences of five companies doing this work.

Watch NVIDIA founder and CEO Jensen Huang’s COMPUTEX keynote to get the latest on AI and more.