Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and showcases new hardware, software, tools and accelerations for GeForce RTX PC and RTX workstation users.

Generative AI is enabling new capabilities for Windows applications and games. It's powering unscripted, dynamic NPCs, enabling creators to generate novel works of art and helping gamers boost frame rates by up to 4x. But this is just the beginning.

As the capabilities and use cases for generative AI continue to grow, so does the demand for compute to support it.

Hybrid AI combines the onboard AI acceleration of NVIDIA RTX with scalable, cloud-based GPUs to effectively and efficiently meet the demands of AI workloads.

Hybrid AI, a Love Story

With growing AI adoption, app developers are looking for deployment options: AI running locally on RTX GPUs delivers high performance and low latency, and is always available — even when not connected to the internet. On the other hand, AI running in the cloud can run larger models and scale across many GPUs, serving multiple clients simultaneously. In many cases, a single application will use both.

Hybrid AI is a kind of matchmaker that harmonizes local PC and workstation compute with cloud scalability. It provides the flexibility to optimize AI workloads based on specific use cases, cost and performance. It helps developers ensure that AI tasks run where it makes the most sense for their specific applications.
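To make "run where it makes the most sense" concrete, here is a minimal, hypothetical routing sketch in Python. The `Workload` and `LocalSystem` types, the `choose_target` function and its rules and thresholds are all illustrative assumptions, not part of any NVIDIA API; a real application would weigh cost, performance and availability in its own way.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of an AI request; field names are illustrative."""
    model_vram_gb: float      # GPU memory the model needs
    latency_sensitive: bool   # e.g. real-time NPC dialogue
    batch_size: int           # number of requests served together


@dataclass
class LocalSystem:
    """Hypothetical snapshot of the user's RTX PC or workstation."""
    free_vram_gb: float
    online: bool


def choose_target(work: Workload, local: LocalSystem, max_local_batch: int = 4) -> str:
    """Return "local", "cloud" or "unavailable" using simple, assumed rules."""
    fits_locally = work.model_vram_gb <= local.free_vram_gb
    if not local.online:
        # With no connection, the local GPU is the only option.
        return "local" if fits_locally else "unavailable"
    if not fits_locally:
        # Models too large for local VRAM go to cloud GPUs.
        return "cloud"
    if work.latency_sensitive:
        # Keep interactive work on the local GPU for low latency.
        return "local"
    if work.batch_size > max_local_batch:
        # Large batches scale better across cloud GPUs.
        return "cloud"
    return "local"


rtx_pc = LocalSystem(free_vram_gb=12.0, online=True)
print(choose_target(Workload(8.0, True, 1), rtx_pc))    # local
print(choose_target(Workload(40.0, False, 1), rtx_pc))  # cloud
```

The rules are deliberately simple; the point is that the same request can land on either side depending on model size, connectivity and latency needs.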

Whether the AI runs locally or in the cloud, it's accelerated by NVIDIA GPUs and NVIDIA's AI stack, including TensorRT and TensorRT-LLM. That means less time staring at pinwheels of death and more opportunity to deliver cutting-edge, AI-powered features to users.

A range of NVIDIA tools and technologies support hybrid AI workflows for creators, gamers and developers.

Dream in the Cloud, Bring to Life on RTX

Generative AI has demonstrated its ability to help artists ideate, prototype and brainstorm new creations. One such solution, Generative AI by iStock, is a cloud-based generative photography service powered by NVIDIA Edify. It was built for and with artists, is trained only on licensed content and compensates artist contributors.

Generative AI by iStock goes beyond image generation, providing artists with extensive tools to explore styles and variations, modify parts of an image or expand the canvas. With these tools, artists can iterate on ideas many times and still bring them to life quickly.

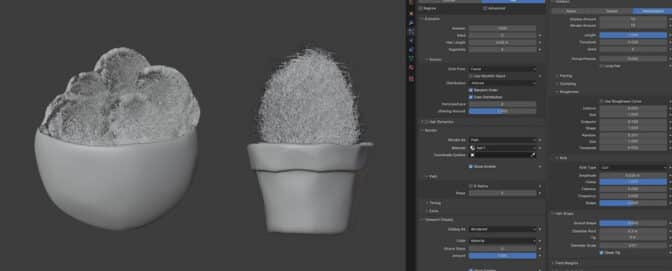

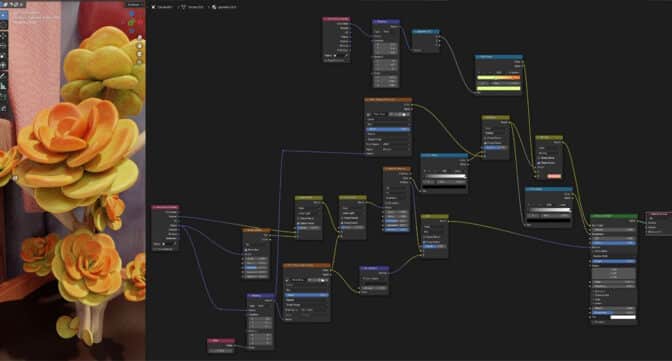

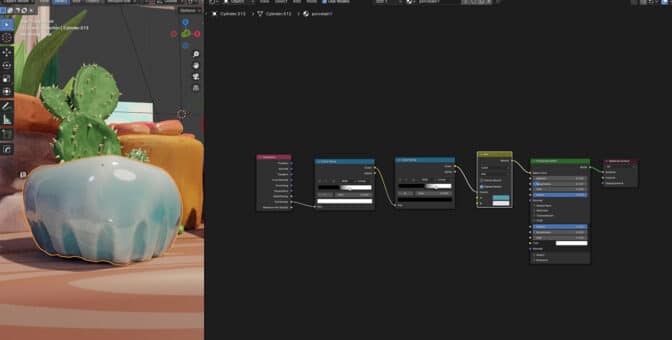

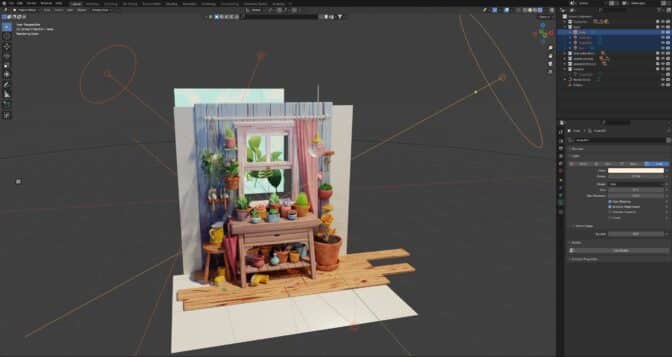

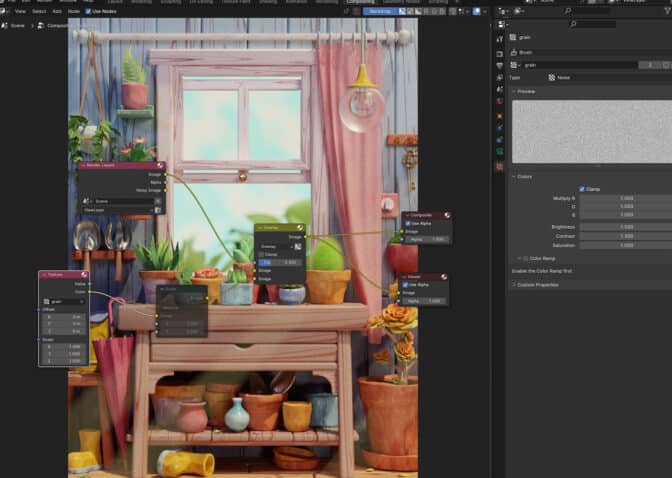

Once the creative concept is ready, artists can bring it back to their local systems. RTX-powered PCs and workstations offer AI acceleration in more than 125 of the top creative apps to help artists realize the full vision: creating an amazing piece of artwork in Photoshop with local AI tools, animating the image with a parallax effect in DaVinci Resolve or building a 3D scene from the reference image in Blender with ray tracing acceleration and AI denoising in OptiX.

Hybrid ACE Brings NPCs to Life

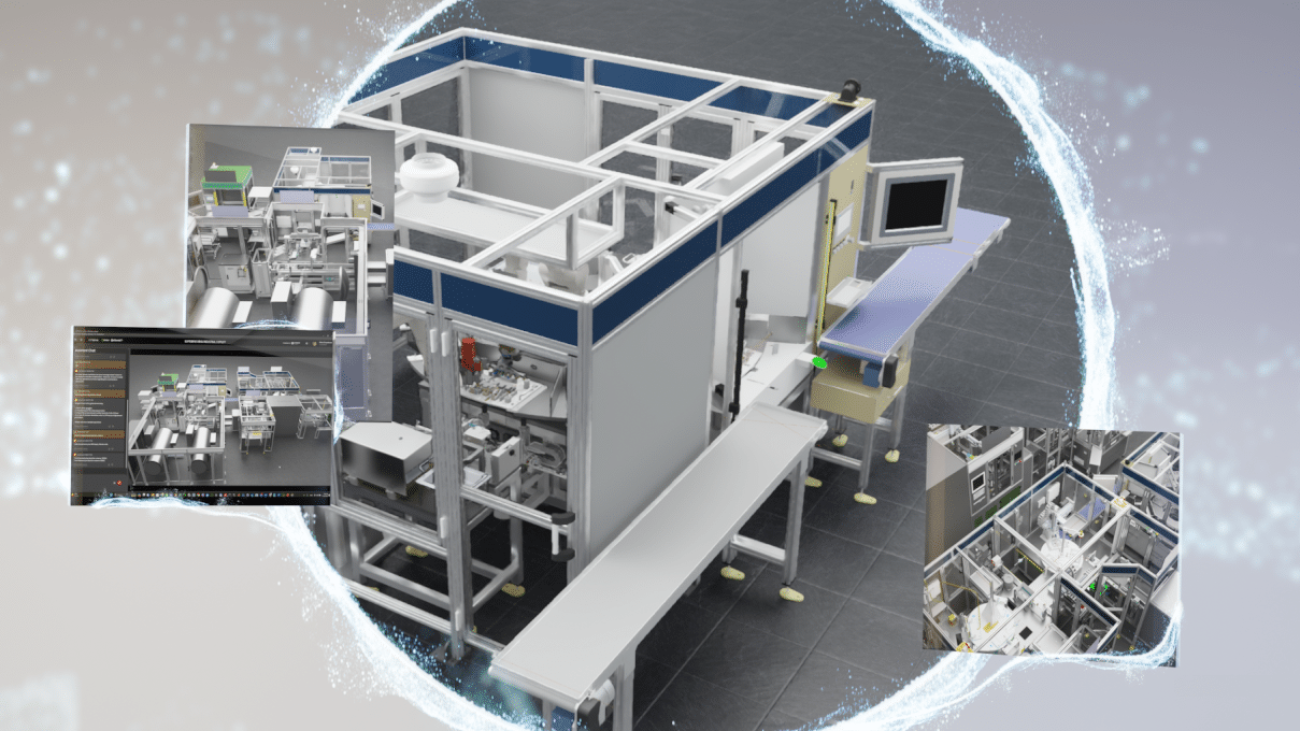

Hybrid AI is also enabling a new realm of interactive PC gaming with NVIDIA ACE, allowing game developers and digital creators to integrate state-of-the-art generative AI models into digital avatars on RTX AI PCs.

Powered by AI neural networks, NVIDIA ACE lets developers and designers create non-player characters (NPCs) that can understand and respond to players' text and speech. It uses AI models, including speech-to-text models that handle natural language spoken aloud, to generate NPC responses in real time.

A Hybrid Developer Tool That Runs Anywhere

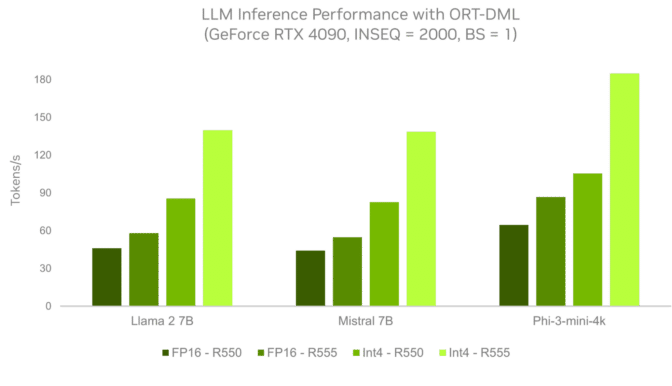

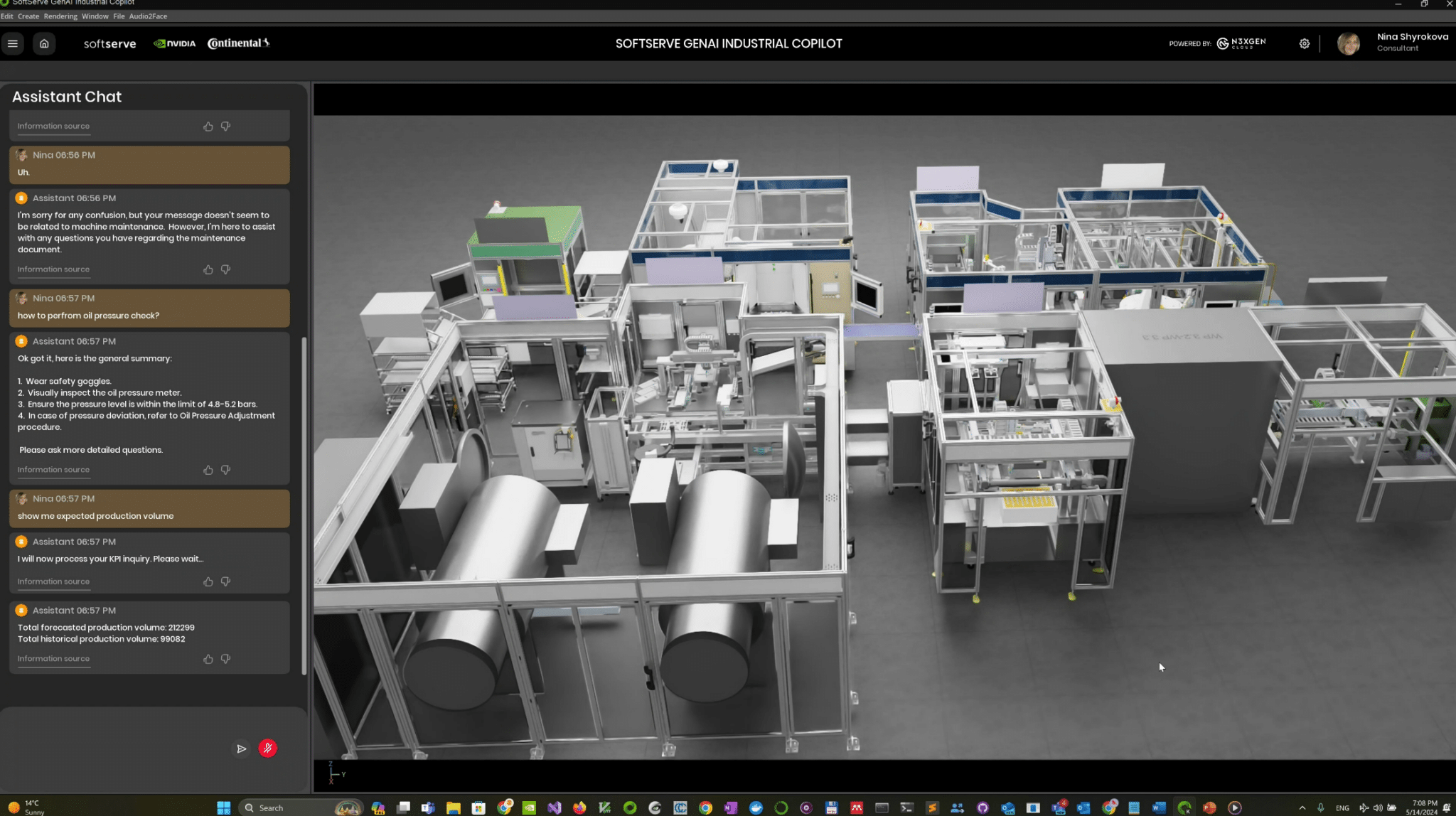

Hybrid AI also helps developers build and tune new AI models. NVIDIA AI Workbench helps developers quickly create, test and customize pretrained generative AI models and LLMs on RTX GPUs. It offers streamlined access to popular repositories like Hugging Face, GitHub and NVIDIA NGC, along with a simplified user interface that enables data scientists and developers to easily reproduce, collaborate on and migrate projects.

Projects can be easily scaled up when additional performance is needed — whether to the data center, a public cloud or NVIDIA DGX Cloud — and then brought back to local RTX systems on a PC or workstation for inference and light customization. Data scientists and developers can leverage pre-built Workbench projects to chat with documents using retrieval-augmented generation (RAG), customize LLMs using fine-tuning, accelerate data science workloads with seamless CPU-to-GPU transitions and more.

The Hybrid RAG Workbench project provides a customizable RAG application that developers can run and adapt themselves. They can embed their documents locally and run inference on a local RTX system, on a cloud endpoint hosted in NVIDIA's API catalog or through NVIDIA NIM microservices. The project can be adapted to use various models, endpoints and containers, and it lets developers quantize models to run on their GPU of choice.
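The retrieve-then-generate idea behind RAG can be sketched in a few lines of Python. This is a toy under stated assumptions: it uses word counts as a stand-in "embedding" instead of a real embedding model, it stops at building the prompt, and it does not use the Hybrid RAG project's code, NIM or the API catalog. All function names here are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, index: list[tuple[str, Counter]], k: int = 2) -> list[str]:
    """Return the k documents whose vectors are most similar to the query's."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine retrieved passages and the question into one prompt for an LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


docs = [
    "RTX GPUs accelerate local inference with TensorRT-LLM.",
    "Cloud endpoints can serve larger models to many clients.",
    "Generative AI by iStock trains only on licensed content.",
]
# Embedding and retrieval run locally in this sketch; the resulting prompt could
# then be sent to a local model or a cloud endpoint (not shown here).
index = [(d, embed(d)) for d in docs]
question = "Which GPUs accelerate local inference?"
context = retrieve(question, index, k=1)
prompt = build_prompt(question, context)
print(prompt)
```

Swapping `embed` for a real embedding model and passing `prompt` to whichever inference backend is available would turn this into the local-or-cloud flow the paragraph describes.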

NVIDIA GPUs power remarkable AI solutions locally on NVIDIA GeForce RTX PCs and RTX workstations and in the cloud. Creators, gamers and developers can get the best of both worlds with growing hybrid AI workflows.

Generative AI is transforming gaming, videoconferencing and interactive experiences of all kinds. Make sense of what’s new and what’s next by subscribing to the AI Decoded newsletter.
