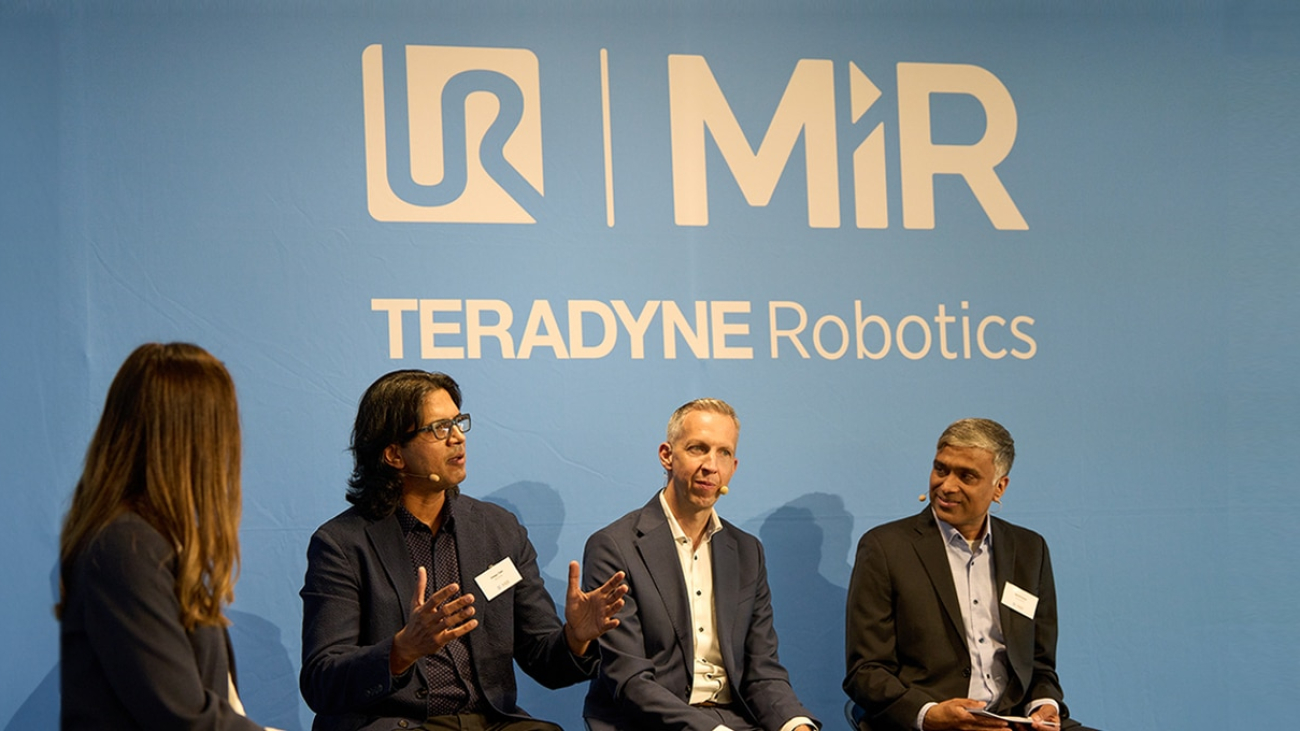

Senior executives from NVIDIA, Siemens and Teradyne Robotics gathered this week in Odense, Denmark, to mark the launch of Teradyne’s new headquarters and discuss the massive advances coming to the robotics industry.

Odense, one of Denmark’s oldest cities and known as the city of robotics, is home to more than 160 robotics companies employing 3,700 people and contributes significantly to the industry’s progress.

Teradyne Robotics’ new hub there, which houses cobot company Universal Robots (UR) and autonomous mobile robot (AMR) company MiR, is designed to help employees collaborate more closely, foster innovation and provide an environment for advancing robotics and autonomous machines.

The grand opening showcased the latest AI robotic applications and featured a panel discussion on the future of advanced robotics. Speakers included Ujjwal Kumar, group president at Teradyne Robotics; Rainer Brehm, CEO of Siemens Factory Automation; and Deepu Talla, vice president of robotics and edge computing at NVIDIA.

“The advent of generative AI coupled with simulation and digital twins technology is at a tipping point right now, and that combination is going to change the trajectory of robotics,” Talla said.

The Power of Partnerships

The discussion comes as the global robotics market continues to grow rapidly. The European cobot market was valued at $286 million in 2022 and is projected to reach $6.7 billion by 2032, a compound annual growth rate of more than 37%.

Panelists discussed why partnerships are key to innovation for any company, from startups to large enterprises, and how physical AI is being used across businesses and workplaces, stressing the transformative impact of advanced robotics.

The alliance between NVIDIA and Teradyne Robotics, which includes an AI-based intralogistics solution developed together with Siemens, shows the strength of collaboration across the ecosystem. As a leading provider of physical AI hardware, NVIDIA is boosting the cobot and AMR sectors with accelerated computing, while its collaboration with Siemens is transforming industrial automation.

“NVIDIA provides all the core AI capabilities that get integrated into the hundreds and thousands of companies building robotic platforms and robots, so our approach is 100% collaboration,” Talla said.

“What excites me most about AI and robots is that collaboration is at the core of solving our customers’ problems,” Kumar added. “No one company has all the technologies needed to address these problems, so we must work together to understand and solve them at a very fast pace.”

Accelerating Innovation With AI

AI has already made huge strides across industries and plays an important role in enhancing advanced robotics. Leveraging machine learning, computer vision and natural language processing, AI gives robots the cognitive capability to understand, learn and make decisions.

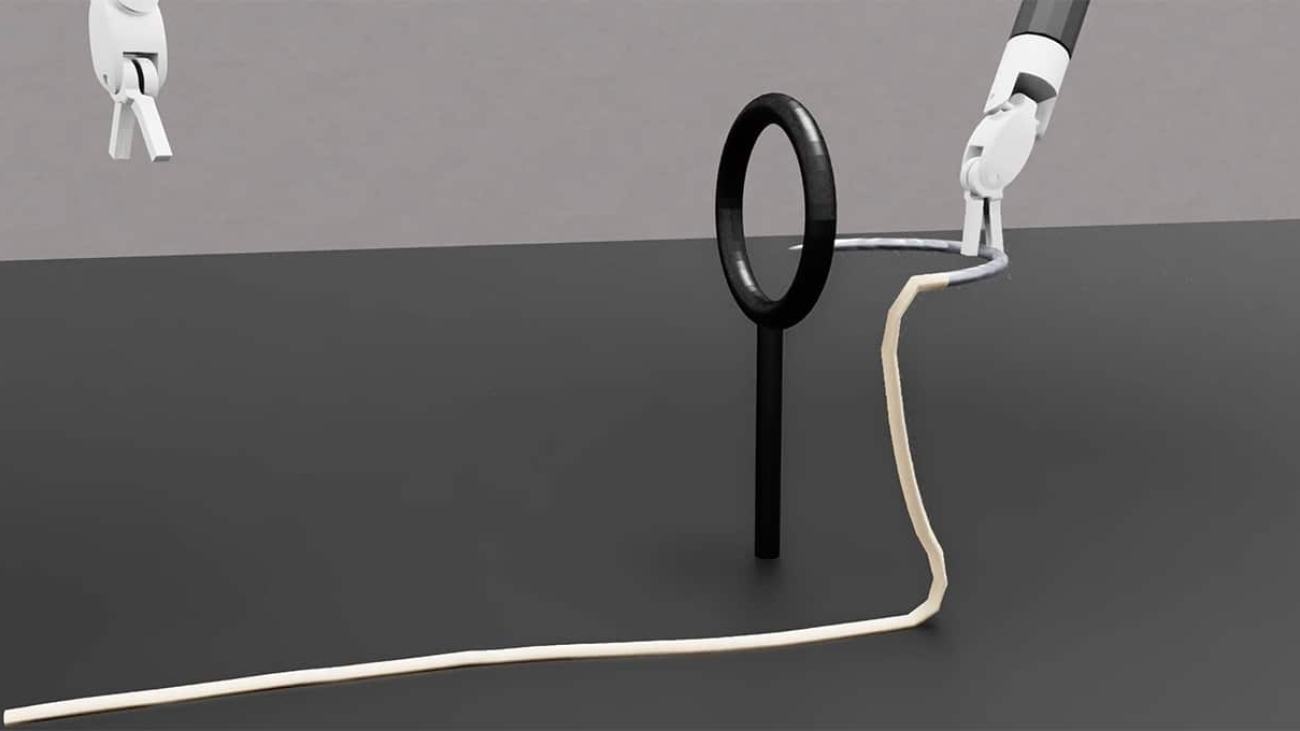

“For humans, we have our senses, but it’s not that easy for a robot, so you have to build these AI capabilities for autonomous navigation,” Talla said. “NVIDIA’s Isaac platform is enabling increased autonomy in robotics with rapid advancements in simulation, generative AI, foundation models and optimized edge computing.”

NVIDIA is working closely with the UR team to bring AI into UR’s robotics software. For autonomous mobile robots that move goods from point A to B to C, the key challenges are operating in unstructured environments and navigating autonomously.

Brehm emphasized the need to scale AI by industrializing it, enabling automated deployment, inference and monitoring of models. He spoke about empowering customers to use AI easily, even without AI expertise. “We want to enhance automation for more skill-based automation systems in the future,” he said.

As a leading robotics company with one of the largest installed bases of collaborative robots and AMRs, Teradyne has identified a long list of industry problems and is working closely with NVIDIA to solve them.

“I use the term ‘physical AI’ as opposed to ‘digital AI’ because we are taking AI to a whole new level by applying it in the physical world,” said Kumar. “We see it helping our customers in three ways: adding new capabilities to our robots, making our robots smarter with advanced path planning and navigation, and further enhancing the safety and reliability of our collaborative robots.”

The Impact of Real-World Robotics

Autonomous machines, or AI robots, are already making a noticeable difference in the real world, from industry to daily life. Manufacturers, for example, are using advanced robotics to improve efficiency, accuracy and productivity.

Companies want to produce goods close to where they are consumed, with sustainability being a key driver. But this often means setting up shop in high-cost countries. The challenge is twofold: producing at competitive prices and dealing with shrinking, aging workforces that are less available for factory jobs.

“The problem for large manufacturers is the same as what small and medium manufacturers have always faced: variability,” Kumar said. “High-volume industrial robots don’t suit applications requiring continuous design tweaks. Collaborative robots combined with AI offer solutions to the pain points that small and medium customers have lived with for years, and to the new challenges now faced by large manufacturers.”

Automation isn’t just about making things faster; it’s also about making the most of the workforce. In manufacturing, automation makes processes smoother, improves safety, saves time and relieves pressure on employees.

“Automation is crucial and, to get there, AI is a game-changer for solving problems,” Brehm said.

AI and computing technologies are set to redefine the robotics landscape, transforming robots from mere tools to intelligent partners capable of autonomy and adaptability across industries.

Feature image by Steffen Stamp. Left to right: Fleur Nielsen, head of communications at Universal Robots; Deepu Talla, head of robotics at NVIDIA; Rainer Brehm, CEO of Siemens Factory Automation; and Ujjwal Kumar, president of Teradyne Robotics.

