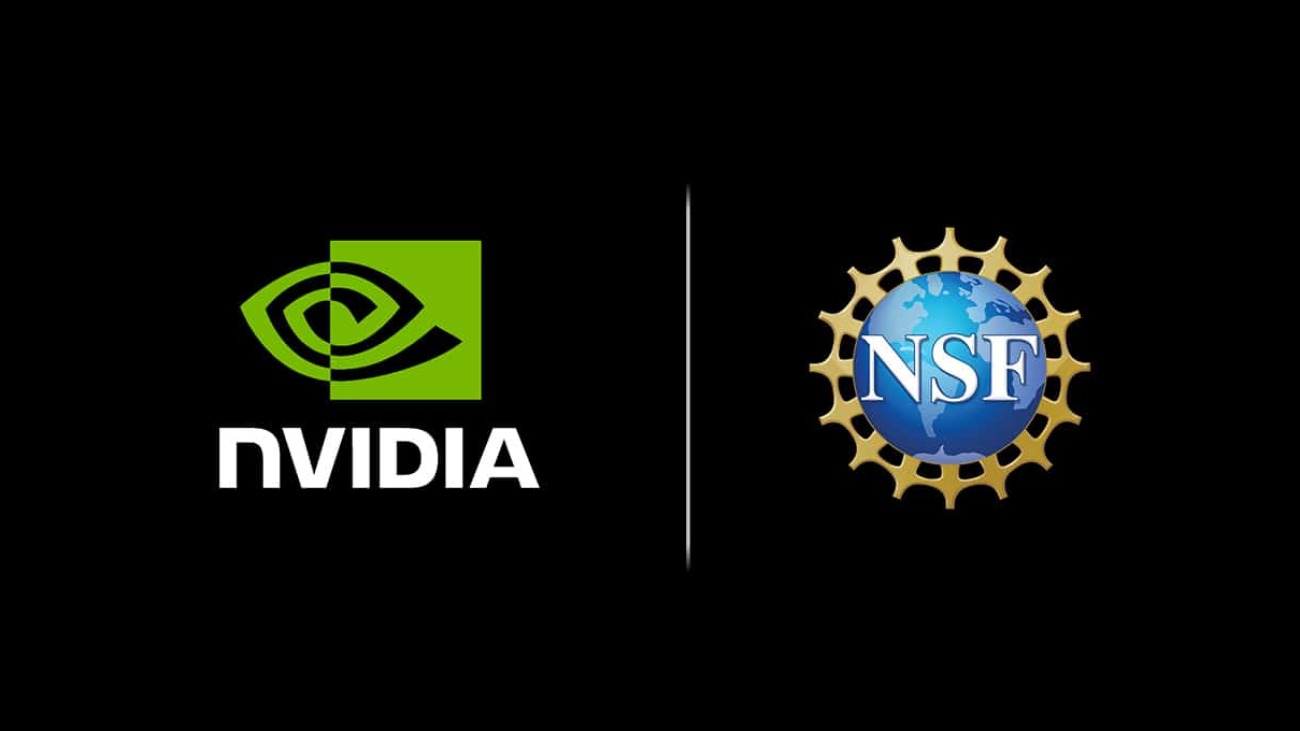

In a major stride toward building a shared national research infrastructure, the U.S. National Science Foundation has launched the National Artificial Intelligence Research Resource pilot program with significant support from NVIDIA.

The initiative aims to broaden access to the tools needed to power responsible AI discovery and innovation. It was announced Wednesday in partnership with 10 other federal agencies as well as private-sector, nonprofit and philanthropic organizations.

“The breadth of partners that have come together for this pilot underscores the urgency of developing a National AI Research Resource for the future of AI in America,” said NSF Director Sethuraman Panchanathan. “By investing in AI research through the NAIRR pilot, the United States unleashes discovery and impact and bolsters its global competitiveness.”

NVIDIA’s commitment of $30 million in technology contributions over two years is a key factor in enlarging the scale of the pilot, fueling the potential for broader achievements and accelerating the momentum toward full-scale implementation.

“The NAIRR is a vision of a national research infrastructure that will provide access to computing, data, models and software to empower researchers and communities,” said Katie Antypas, director of the Office of Advanced Cyberinfrastructure at the NSF.

“Our primary goals for the NAIRR pilot are to support fundamental AI research and domain-specific research applying AI, reach broader communities, particularly those currently unable to participate in the AI innovation ecosystem, and refine the design for the future full NAIRR,” Antypas added.

Accelerating Access to AI

“AI is increasingly defining our era, and its potential can best be fulfilled with broad access to its transformative capabilities,” said NVIDIA founder and CEO Jensen Huang.

“Partnerships are really at the core of the NAIRR pilot,” said Tess DeBlanc-Knowles, NSF’s special assistant to the director for artificial intelligence.

“It’s been incredibly impressive to see this breadth of partners come together in these 90 days, bringing together government, industry, nonprofits and philanthropies,” she added. “Our industry and nonprofit partners are bringing critical expertise and resources, which are essential to advance AI and move forward with trustworthy AI initiatives.”

NVIDIA’s collaboration with scientific centers aims to significantly scale up educational and workforce training programs, enhancing AI literacy and skill development across the scientific community.

NVIDIA will also gather insights from researchers using its platform, which it can use to refine its technology for science and support continued advancement in AI applications.

“With NVIDIA AI software and supercomputing, the scientists, researchers and engineers of the extended NSF community will be able to utilize the world’s leading infrastructure to fuel a new generation of innovation,” Huang said.

The Foundation for Modern AI

NVIDIA’s contributions, which are intended to accelerate both AI research and research done with AI, include NVIDIA DGX Cloud AI supercomputing resources and NVIDIA AI Enterprise software.

Offering full-stack accelerated computing from systems to software, NVIDIA AI provides the foundation for generative AI, with significant adoption across research and industries.

Broad Support Across the US Government

As part of this national endeavor, the NAIRR pilot brings together a coalition of government partners, showcasing a unified approach to advancing AI research.

Its partners include the U.S. National Science Foundation, U.S. Department of Agriculture, U.S. Department of Energy, U.S. Department of Veterans Affairs, National Aeronautics and Space Administration, National Institutes of Health, National Institute of Standards and Technology, National Oceanic and Atmospheric Administration, Defense Advanced Research Projects Agency, U.S. Patent and Trademark Office and the U.S. Department of Defense.

The NAIRR pilot builds on the United States’ rich history of leading large-scale scientific endeavors, such as the creation of the internet, which, in turn, led to the advancement of AI.

Leading in Advanced AI

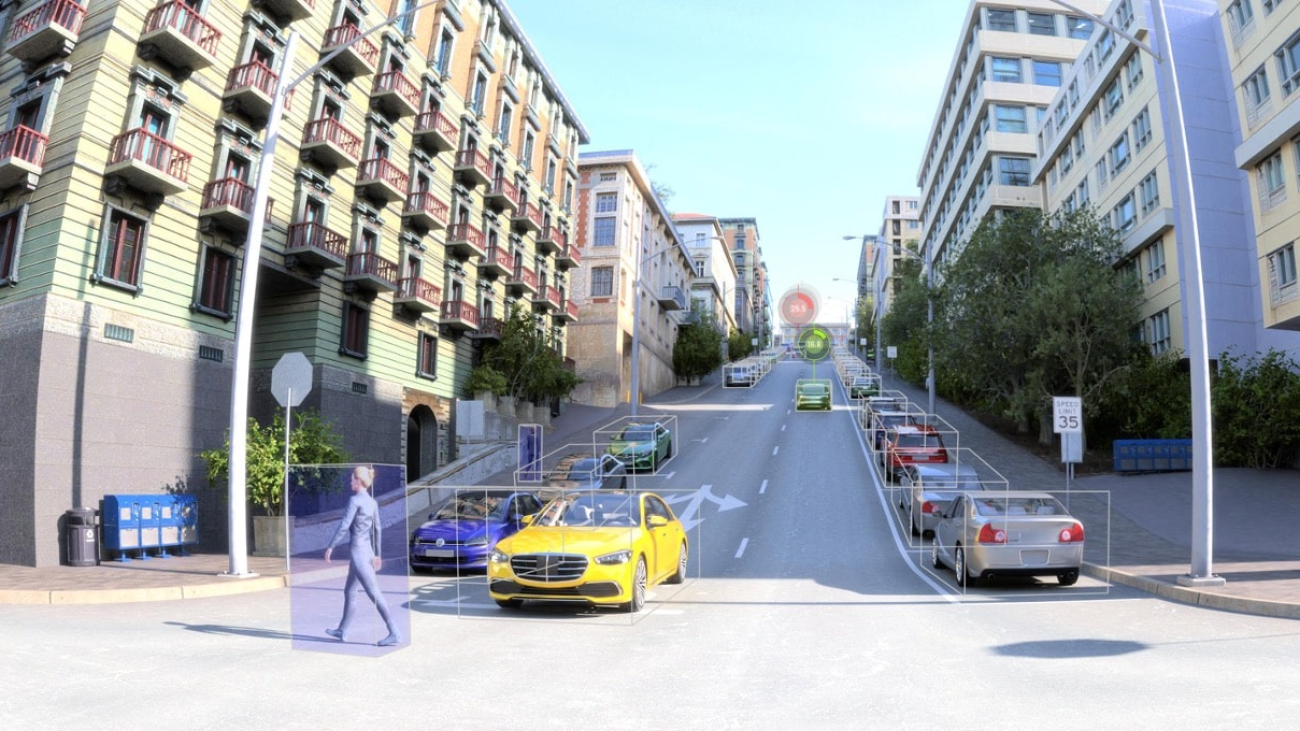

The NAIRR promises to drive innovations across various sectors, from healthcare to environmental science, positioning the U.S. at the forefront of global AI advancements.

The launch meets a goal outlined in Executive Order 14110, signed by President Biden in October 2023, which directed NSF to launch a pilot for the NAIRR within 90 days.

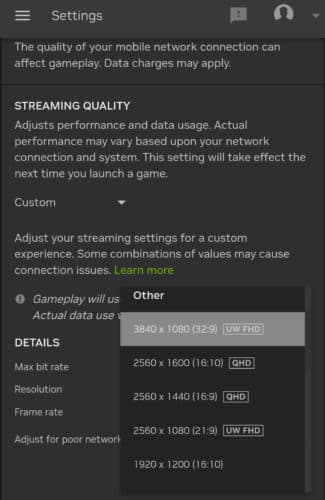

The NAIRR pilot will provide access to advanced computing, datasets, models, software, training and user support to U.S.-based researchers and educators.

“Smaller institutions, rural institutions, institutions serving underrepresented populations are key communities we’re trying to reach with the NAIRR,” said Antypas. “These communities are less likely to have resources to build their own computing or data resources.”

Paving the Way for Future Investments

As the pilot expedites the proof of concept, future investments in the NAIRR will democratize access to AI innovation and support critical work advancing the development of trustworthy AI.

The pilot will initially support AI research to advance safe, secure and trustworthy AI as well as the application of AI to challenges in healthcare and environmental and infrastructure sustainability.

Researchers can apply for initial access to NAIRR pilot resources through the NSF. The NAIRR pilot welcomes additional private-sector and nonprofit partners.

Those interested are encouraged to reach out to NSF at nairr_pilot@nsf.gov.