AI made a splash this year — from Wall Street to the U.S. Congress — driven by a wave of developers aiming to make the world better.

Here’s a look at AI in 2023 across agriculture, natural disasters, medicine and other areas worthy of a cocktail party conversation.

This AI Is on Fire

California has recently seen record wildfires. With scorching heat late into the summer, the state’s crispy foliage becomes a tinderbox that can ignite and quickly blaze out of control. Burning for solutions, developers are embracing AI for early detection.

DigitalPath, based in Chico, California, has refined a convolutional neural network to spot wildfires. The model, run on NVIDIA GPUs, enables thousands of cameras across the state to detect wildfires in real time for the ALERTCalifornia initiative, a collaboration between the University of California, San Diego, and the CAL FIRE wildfire agency.

The mission is near and dear to DigitalPath employees, whose office sits not far from the town of Paradise, where California’s deadliest wildfire killed 85 people in 2018.

“It’s one of the main reasons we’re doing this,” said CEO Jim Higgins. “We don’t want people to lose their lives.”

Earth-Shaking Research

A team from the University of California, Santa Cruz; University of California, Berkeley; and the Technical University of Munich released a paper this year on a new deep learning model for earthquake forecasts.

Shaking up the status quo around the ETAS model standard, developed in 1988, the new RECAST model, trained on NVIDIA GPUs, is capable of using larger datasets and holds promise for making better predictions during earthquake sequences.

“There’s a ton of room for improvement within the forecasting side of things,” said Kelian Dascher-Cousineau, one of the paper’s authors.

AI’s Day in the Sun

Verdant, based in the San Francisco Bay Area, is supporting organic farming. The startup develops AI for tractor implements that can weed, fertilize and spray, providing labor support while lowering production costs for farmers and boosting yields.

The NVIDIA Jetson Orin-based robots-as-a-service business provides farmers with metrics on yield gains and chemical reduction. “We wanted to do something meaningful to help the environment,” said Lawrence Ibarria, chief operating officer at Verdant.

Living the Dream

Ge Dong is living out her childhood dream, following in her mother’s footsteps by pursuing physics. She cofounded Energy Singularity, a startup that aims to lower the cost of building a commercial tokamak, a device that can cost billions of dollars, for fusion energy development.

Fusion brings the promise of cleaner energy.

“We’ve been using NVIDIA GPUs for all our research — they’re one of the most important tools in plasma physics these days,” she said.

Gimme Shelter

Chaofeng Wang, a University of Florida assistant professor of artificial intelligence, is enlisting deep learning and images from Google Street View to evaluate urban buildings. By automating the process, the work is intended to assist governments in supporting building structures and post-disaster recovery.

“Without NVIDIA GPUs, we wouldn’t have been able to do this,” Wang said. “They significantly accelerate the process, ensuring timely results.”

AI Predicts COVID Variants

A Gordon Bell prize-winning model, GenSLMs, has shown it can generate gene sequences closely resembling real-world variants of SARS-CoV-2, the virus behind COVID-19. Researchers trained the model using NVIDIA A100 Tensor Core GPU-powered supercomputers, including NVIDIA’s Selene, the U.S. Department of Energy’s Perlmutter and Argonne’s Polaris system.

“The AI’s ability to predict the kinds of gene mutations present in recent COVID strains — despite having only seen the Alpha and Beta variants during training — is a strong validation of its capabilities,” said Arvind Ramanathan, lead researcher on the project and a computational biologist at Argonne.

Jetson-Enabled Autonomous Wheelchair

Kabilan KB, an undergraduate student from the Karunya Institute of Technology and Sciences in Coimbatore, India, is developing an NVIDIA Jetson-enabled autonomous wheelchair. To help boost development, he’s been using NVIDIA Omniverse, a platform for building and operating 3D tools and applications based on the OpenUSD framework.

“Using Omniverse for simulation, I don’t need to invest heavily in prototyping models for my robots, because I can use synthetic data generation instead,” he said. “It’s the software of the future.”

Digital Twins for Brain Surgery

Atlas Meditech is using the MONAI medical imaging framework and the NVIDIA Omniverse 3D development platform to help build AI-powered decision support and high-fidelity surgery rehearsal platforms — all in an effort to improve surgical outcomes and patient safety.

“With accelerated computing and digital twins, we want to transform this mental rehearsal into a highly realistic rehearsal in simulation,” said Dr. Aaron Cohen-Gadol, founder of the company.

Keeping AI on Energy

Artificial intelligence is helping optimize solar and wind farms, simulate climate and weather, and support power grid reliability and other areas of the energy market.

Check out this installment of the I AM AI video series to learn how NVIDIA is enabling these technologies and working with energy-conscious collaborators to drive breakthroughs for a cleaner, safer, more sustainable future.

AI Can See Clearly Now

Many patients in lower- and middle-income countries lack access to cataract surgery because of a shortage of ophthalmologists. But more than 2,000 doctors a year in lower-income countries can now treat cataract blindness, the world’s leading cause of blindness, using GPU-powered surgical simulation with the help of nonprofit HelpMeSee.

“We’re lowering the barrier for healthcare practitioners to learn these specific skills that can have a profound impact on patients,” said Bonnie An Henderson, CEO of the New York-based nonprofit.

Waste Not, Want Not

Afresh, based in San Francisco, helps stores reduce food waste. The startup has developed machine learning and AI models using data on fresh produce to help grocers make informed inventory-purchasing decisions. It has also launched software that enables grocers to save time and increase data accuracy with inventory tracking.

“The most impactful thing we can do is reduce food waste to mitigate climate change,” said Nathan Fenner, cofounder and president of Afresh, on the NVIDIA AI Podcast.

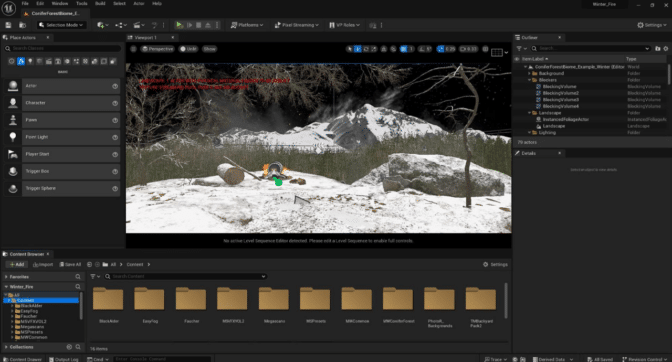

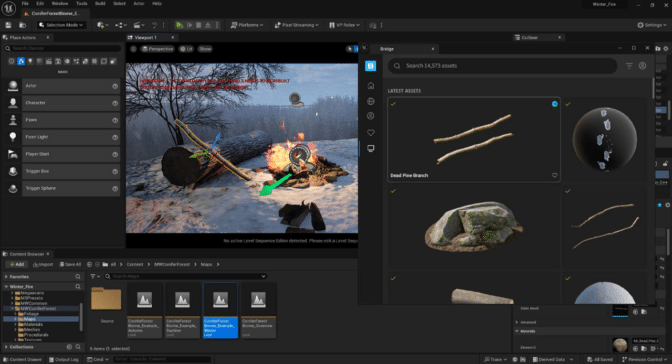

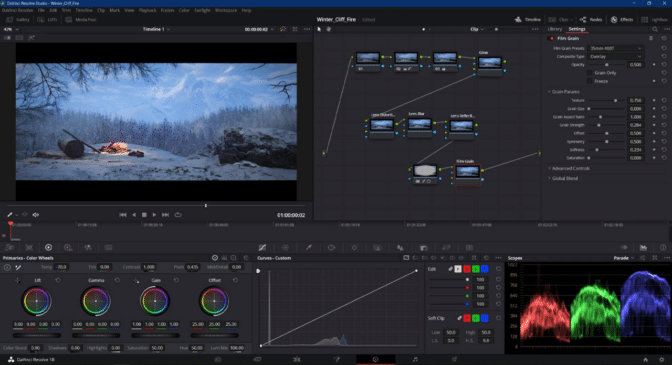

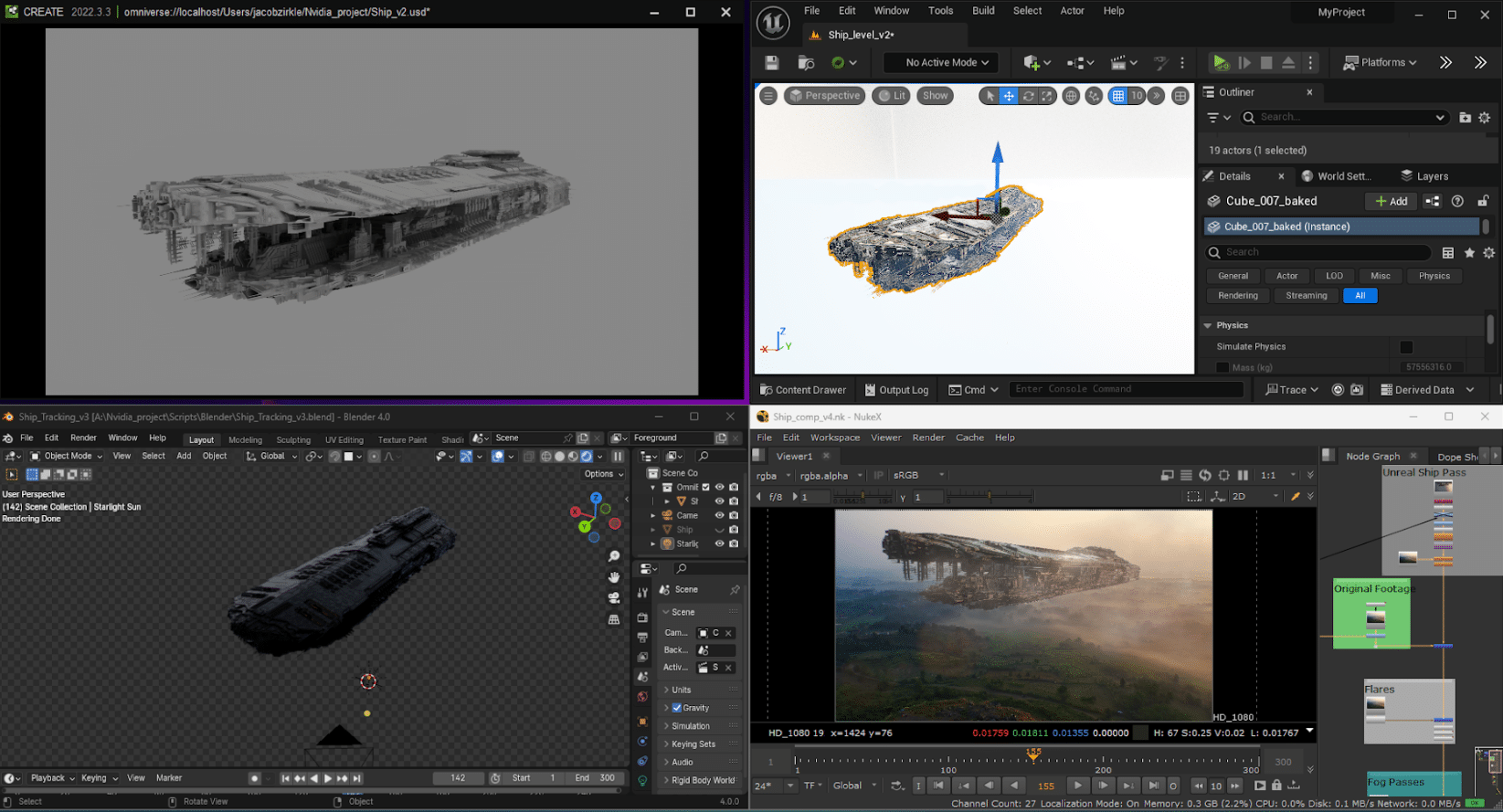
