European startups will get a massive boost from a new generation of AI infrastructure, NVIDIA founder and CEO Jensen Huang said Friday in a fireside chat with iliad Group Deputy CEO Aude Durand. And that boost is coming just in time.

“We’re now seeing a major second wave,” Huang said of the state of AI during a virtual appearance at Scaleway’s ai-PULSE conference in Paris for an audience of more than 1,000 in-person attendees.

Two forces are propelling this wave, Huang explained in a conversation livestreamed from Station F, the world’s largest startup campus, which he joined via video conference from NVIDIA’s headquarters in Silicon Valley.

First, there is “a recognition that every region and every country needs to build their sovereign AI,” Huang said. Second, there is the “adoption of AI in different industries” as generative AI spreads throughout the world.

“So the types of breakthroughs that we’re seeing in language I fully expect to see in digital biology and manufacturing and robotics,” Huang said, noting that this could create big opportunities for Europe, with its strong digital biology and healthcare industries. “And of course, Europe is also home of some of the largest industrial manufacturing companies.”

Praise for France’s AI Leadership

Durand kicked off the conversation by asking Huang about his views on the European AI ecosystem, especially in France, where the government has invested millions of euros in AI research and development.

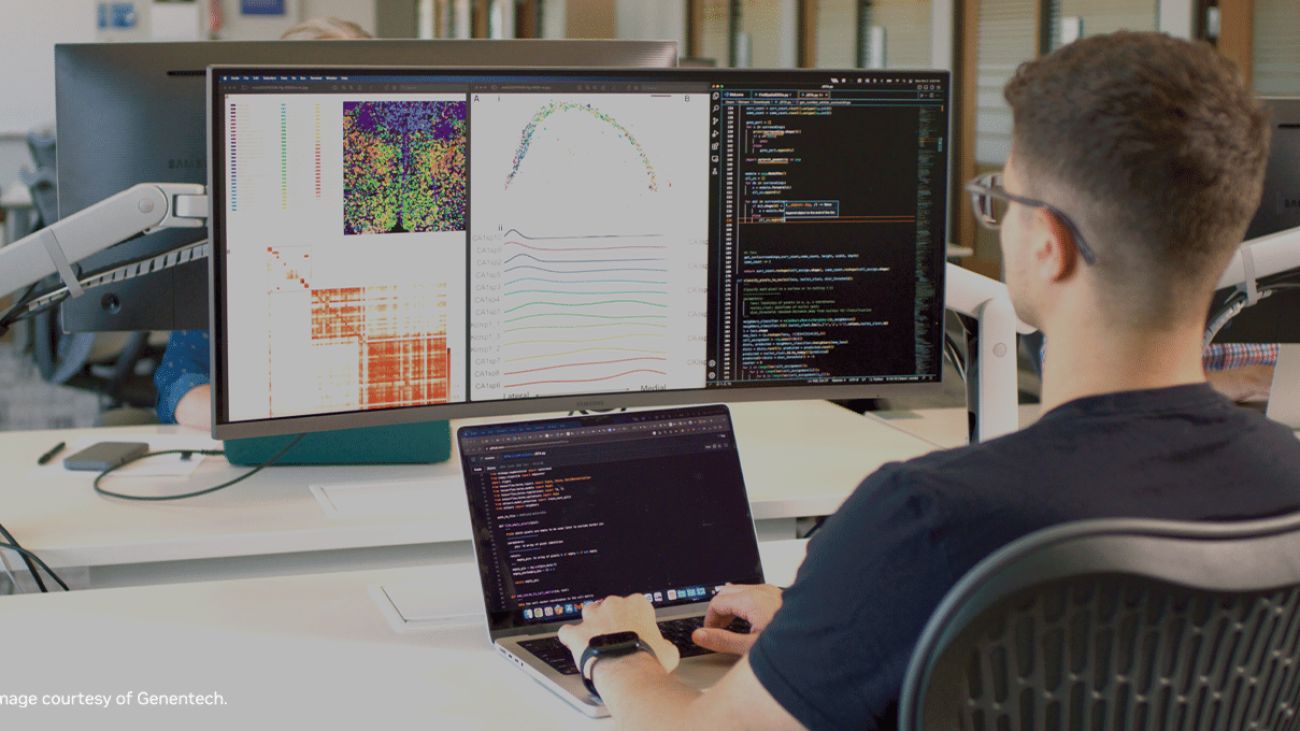

“Europe has always been rich in AI expertise,” Huang said, noting that NVIDIA works with 4,000 startups in Europe, more than 400 of them in France alone, and pointing to Mistral, Qubit Pharmaceuticals and Poolside AI as examples.

“At the same time, you have to really get the computing infrastructure going,” Huang said. “And this is the reason why Scaleway is so important to the advancement of AI in France” and throughout Europe, he added.

Highlighting the critical role of data in AI’s regional growth, Huang noted that companies increasingly recognize the value of training AI on region-specific data. AI systems need to reflect the unique cultural and industrial nuances of each region, an approach that is gaining traction across Europe and beyond.

NVIDIA and Scaleway: Powering Europe’s AI Revolution

Scaleway, a subsidiary of major European telecoms player iliad Group, is doing its part to kick-start that second wave in Europe by offering cloud credits for access to its AI supercomputer cluster, which packs 1,016 NVIDIA H100 Tensor Core GPUs.

As a regional cloud service provider, Scaleway also provides sovereign infrastructure that ensures data access complies with EU data protection laws, which is critical for businesses with a European footprint.

Regional members of the NVIDIA Inception program, which provides development assistance to startups, will also be able to access NVIDIA AI Enterprise software on Scaleway Marketplace.

The software includes the NVIDIA NeMo framework and pretrained models for building LLMs, NVIDIA RAPIDS for accelerated data science and NVIDIA Triton Inference Server and NVIDIA TensorRT-LLM for boosting inference.

Revolutionizing AI With Supercomputing Prowess

Recapping a month packed with announcements, Huang explained how NVIDIA is rapidly advancing high-performance computing and AI worldwide to provide the infrastructure needed to power this second wave.

The systems behind this infrastructure are, in effect, “supercomputers,” Huang said, and AI systems now rank among the world’s most powerful.

They include Scaleway’s newly available Nabuchodonosor supercomputer, or “Nabu,” an NVIDIA DGX SuperPOD with 127 NVIDIA DGX H100 systems, which will help startups in France and across Europe scale up AI workloads.

“As you know the Scaleway system that we brought online, Nabu, is not your normal computer,” Huang said. “In every single way, it’s a supercomputer.”

Such systems are underpinning powerful new services.

Earlier this week, NVIDIA announced an AI Foundry service on Microsoft Azure, aimed at accelerating the development of customized generative AI applications.

Huang highlighted the NVIDIA AI Foundry’s appeal to a diverse user base, including established enterprises such as Amdocs, Getty Images, SAP and ServiceNow.

Huang also noted that JUPITER, which will be hosted at the Jülich facility in Germany and is poised to become Europe’s premier exascale AI supercomputer, will run on 24,000 NVIDIA GH200 Grace Hopper Superchips, providing vast computational capacity for a wide range of AI tasks and simulations.

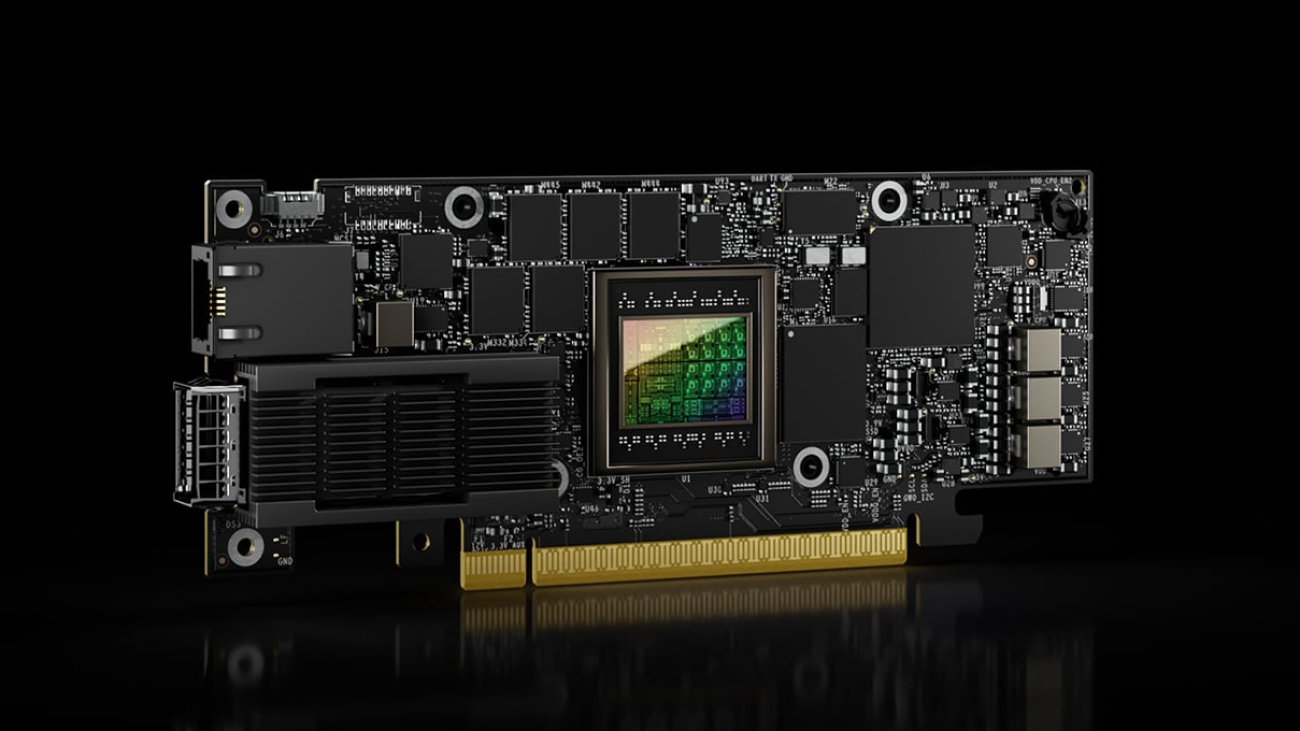

Huang touched on NVIDIA’s just-announced HGX H200 AI computing platform, which is built on the NVIDIA Hopper architecture and features the H200 Tensor Core GPU. The platform is set for release in the second quarter of 2024.

He also detailed NVIDIA’s strategy to develop “AI factories,” advanced data centers that power diverse applications across industries, including electric vehicles, robotics, and generative AI services.

Open Source

Finally, Durand asked Huang about the role of open source and open science in AI.

Huang said he’s a “huge fan” of open source. “Let’s acknowledge that without open source, how would AI have made the tremendous progress it has over the last decade,” Huang said.

“And so the ability for open source to energize the vibrancy and pull in the research and pull in the engagement of every startup, every researcher, every industry is really quite vital,” Huang said. “And you’re seeing it play out just presently, now going forward.”

Friday’s fireside conversation was part of Scaleway’s ai-PULSE conference, showcasing the latest AI trends and innovations. To learn more, visit https://www.ai-pulse.eu/.

Osinski’s panoramic view from the 20th floor terrace in the Upper East Side

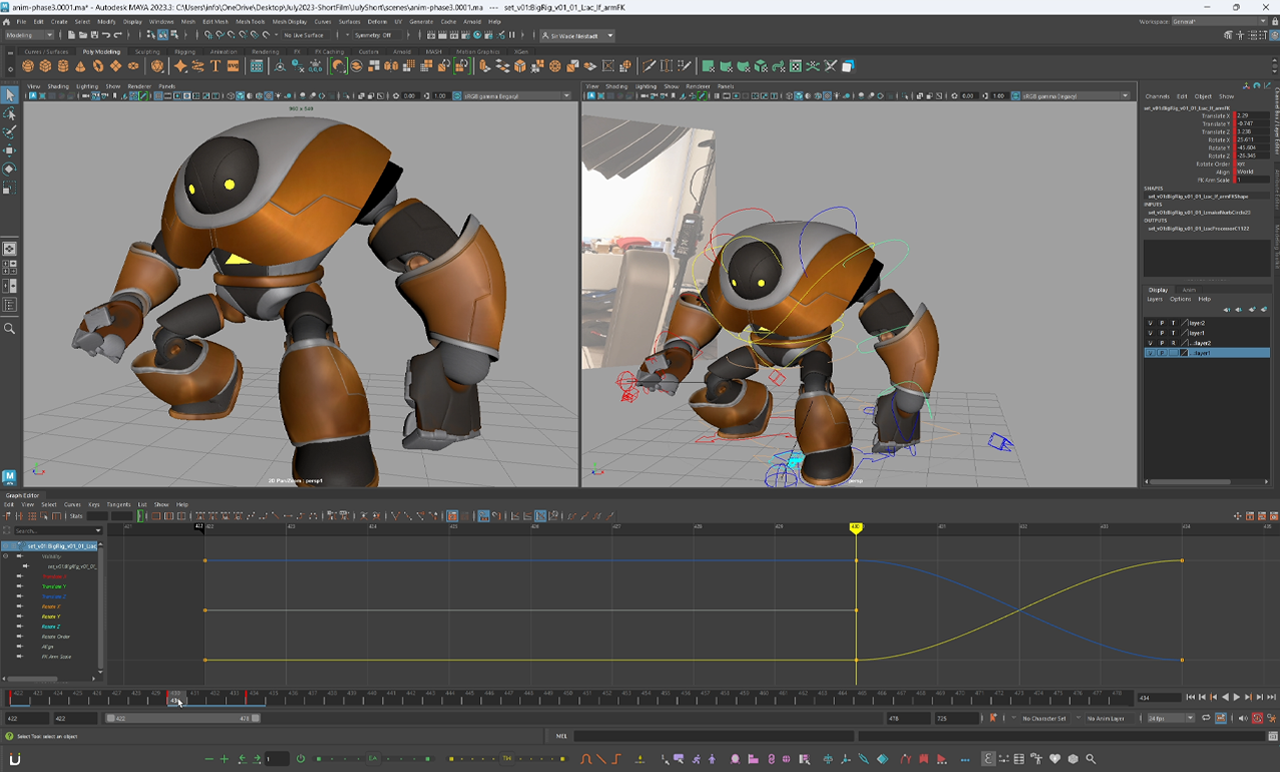

Osinski’s panoramic view from the 20th floor terrace in the Upper East Side The making of Sir Wade’s VFX robot animation

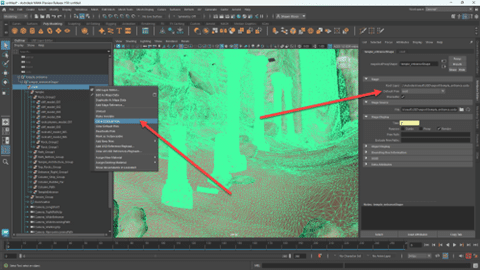

The making of Sir Wade’s VFX robot animation Access to default prim options in Maya UI

Access to default prim options in Maya UI