Visual effects artist Surfaced Studio returns to In the NVIDIA Studio to share his real-world VFX project, created on a brand new Razer Blade 16 Mercury Edition laptop powered by GeForce RTX 4080 graphics.

Surfaced Studio creates photorealistic, digitally generated imagery that seamlessly integrates visual effects into short films, television and console gaming.

He found inspiration for a recent sci-fi project by experimenting with 3D transitions: using a laptop screen as a gateway between worlds, like the portals from Doctor Strange or the transitions from The Matrix.

Break the Rules and Become a Hero

Surfaced Studio aimed to create an immersive experience with his latest project.

“I wanted to get my audience to feel surprised getting ‘sucked into’ the 3D world,” he explained.

Surfaced Studio began with a simple script, along with sketches of brainstormed ideas and played-out shots. “This usually helps me think through how I’d pull each effect off and whether they’re actually possible,” he said.

From there, he shot video and imported the footage into Adobe Premiere Pro for a rough test edit. Then, Surfaced Studio selected the most suitable clips for use.

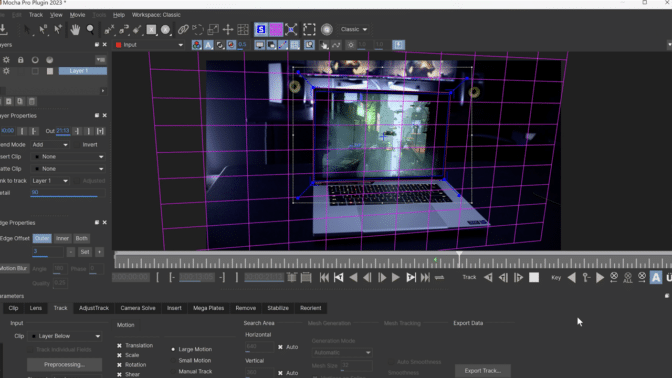

He cleaned up the footage in Adobe After Effects, stabilizing shots with the Warp Stabilizer tool and removing distracting background elements with the Mocha Pro tool. Both effects were accelerated by his GeForce RTX 4080 Laptop GPU.

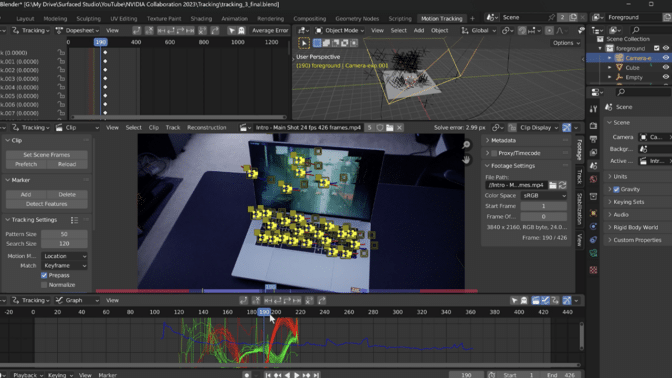

Afterward, he created a high-contrast version of the shot for 3D motion tracking in Blender.
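The article does not say how the high-contrast version was produced. As a rough illustration of why such a pass helps a tracker, the sketch below stretches a grayscale frame so that its darkest and brightest regions span the full 0 to 1 range, which makes trackable features stand out. The function name `stretch_contrast` and the percentile defaults are assumptions made for this example, not the artist's actual settings or tool.

```python
import numpy as np

def stretch_contrast(gray, low_pct=2.0, high_pct=98.0):
    """Percentile-based contrast stretch of a grayscale frame to the 0..1 range.

    Hypothetical helper for illustration only: pixels at or below the low
    percentile map to 0, pixels at or above the high percentile map to 1.
    """
    gray = np.asarray(gray, dtype=np.float32)
    lo, hi = np.percentile(gray, [low_pct, high_pct])
    if hi <= lo:
        # A completely flat frame has no contrast to stretch.
        return np.zeros_like(gray)
    return np.clip((gray - lo) / (hi - lo), 0.0, 1.0)

# Usage: a washed-out frame whose values sit in a narrow band around mid-gray.
flat_frame = np.linspace(0.4, 0.6, 101, dtype=np.float32).reshape(1, 101)
punchy_frame = stretch_contrast(flat_frame)
```

Clipping at percentiles rather than at the absolute minimum and maximum keeps a few stray highlights or dead pixels from dictating the stretch.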

Motion tracking reconstructs the camera’s movement from the footage so that 3D objects can be matched to it. “This was pretty tricky, as it’s a 16-second gimbal shot with fast moving sections and a decent camera blur,” said Surfaced Studio. “It took me a good few days to get a decent track and fix issues with manual keyframes and ‘patches’ between different sections.”

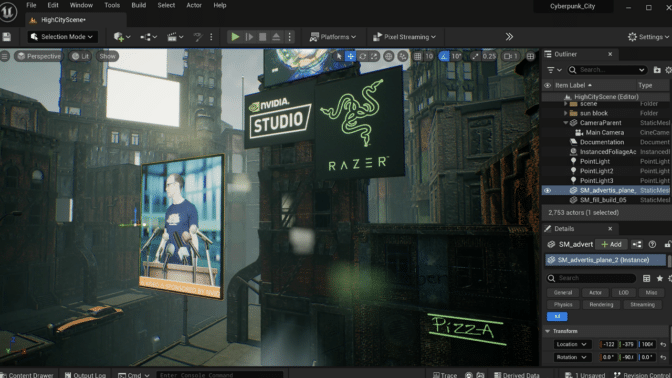

Surfaced Studio exported footage of the animated camera into a 3D FBX file to use in Unreal Engine and set it up in the Cyberpunk High City pack, which contains a modular constructor for creating highly detailed sci-fi city streets, alleys and blocks.

“I’m not much of a 3D artist so using [the Cyberpunk High City pack] was the best option to complete the project on this side of the century,” the artist said. He then made modifications to the cityscape, reducing flickering lights and adding buildings, custom fog and Razer and NVIDIA Studio banners. He even added a billboard with an ad encouraging kindness to cats. “It’s so off to the side of most shots I doubt anyone actually noticed,” noted a satisfied Surfaced Studio.

Learning 3D effects can seem overwhelming due to the vast knowledge needed across multiple apps and distinct workflows. But Surfaced Studio stresses the importance of first understanding workflow hierarchies, and how one feeds into another, as an approachable entry point to choosing a specialty suited to a creator’s unique passion and natural talent.

Surfaced Studio was able to seamlessly run his scene in Unreal Engine at full 4K resolution, with all textures and materials loading at maximum graphical fidelity, thanks to the GeForce RTX 4080 Laptop GPU in his Razer Blade 16. The GPU also supports NVIDIA DLSS, which increases viewport interactivity by using AI to upscale frames rendered at lower resolution while retaining high-fidelity detail.

Surfaced Studio then took the FBX file with the exported camera tracking data into Unreal Engine, matching his ‘3D camera’ with the real-world one used to film the laptop. “This was the crucial step in creating the ‘look-through’ effect I wanted,” he said.

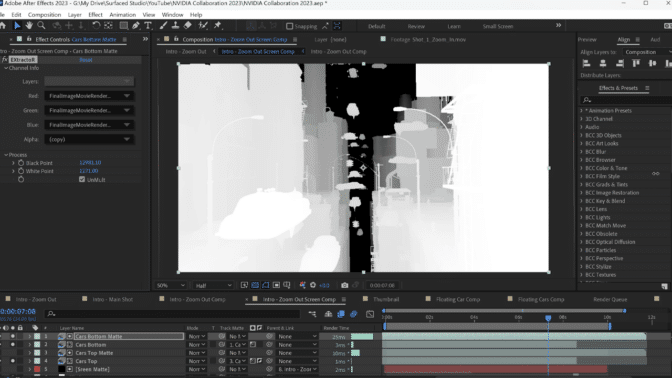

Once satisfied with the look, Surfaced Studio exported all sequences from Unreal Engine as multilayer EXR files to separate visual elements from the 3D footage. The layers included a Z-depth pass, a grayscale image that encodes distance from the camera, which he used to create a depth-of-field effect.

Surfaced Studio went back to After Effects for the final composites. He added distortion effects and some glow for the transition from the physical screen to the 3D world.

Then, Surfaced Studio again used the Z-depth pass to extract the 3D cars and overlay them onto the real footage.
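The artist did this step in After Effects, and the article gives no settings. Purely as an illustration of how a depth pass can isolate objects, the sketch below builds a matte from a depth range and uses it to overlay rendered pixels onto live footage. The function names, the `near`/`far`/`softness` parameters and the toy values are all assumptions for this example.

```python
import numpy as np

def depth_matte(depth, near, far, softness=0.0):
    """Return a 0..1 matte selecting pixels whose depth lies in [near, far].

    Hypothetical helper: `softness` widens the range with a linear falloff
    so the matte edge is feathered instead of hard.
    """
    depth = np.asarray(depth, dtype=np.float32)
    if softness <= 0:
        return ((depth >= near) & (depth <= far)).astype(np.float32)
    rise = np.clip((depth - (near - softness)) / softness, 0.0, 1.0)
    fall = np.clip(((far + softness) - depth) / softness, 0.0, 1.0)
    return np.minimum(rise, fall)

def composite_over(render_rgb, footage_rgb, matte):
    """Blend rendered pixels over footage wherever the matte is opaque."""
    alpha = np.asarray(matte, dtype=np.float32)[..., None]  # broadcast over RGB
    return alpha * render_rgb + (1.0 - alpha) * footage_rgb

# Usage with a toy 2x2 frame: the two mid-distance pixels stand in for the cars.
depth = np.array([[1.0, 5.0], [10.0, 50.0]], dtype=np.float32)
render = np.ones((2, 2, 3), dtype=np.float32)    # stand-in for the 3D render
footage = np.zeros((2, 2, 3), dtype=np.float32)  # stand-in for the real plate
cars_matte = depth_matte(depth, near=4.0, far=12.0)
result = composite_over(render, footage, cars_matte)
```

A feathered edge (a nonzero `softness`) usually hides the seam between render and plate better than a hard threshold, at the cost of slightly bleeding neighboring depths into the matte.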

He exported the final project into Premiere Pro and added sound effects, music and a few color correction edits.

With the GeForce RTX 4080’s dual encoders, Surfaced Studio nearly halved his Adobe Premiere Pro export times. Surfaced Studio has been using NVIDIA GPUs for over a decade, citing their widespread integration with commonly used tools.

“NVIDIA has simply done a better job than its competitors to reach out to and integrate with other companies that create creative apps,” said Surfaced Studio. “CUDA and RTX are widespread technologies that you find in most popular creative apps to accelerate workflows.”

When he’s not working on VFX projects, Surfaced Studio also uses his laptop to game. The Razer Blade 16 has the first dual-mode mini-LED display with two native resolutions: UHD+ at 120Hz — suited for VFX workflows — and FHD at 240Hz — ideal for gamers (or creators who like gaming).

For a limited time, gamers and creators can get the critically acclaimed game Alan Wake 2 with the purchase of the Razer Blade 16 powered by GeForce RTX 40 Series graphics cards.

Surfaced Studio’s VFX tutorials are available on YouTube, where he covers filmmaking, VFX and 3D techniques using Adobe After Effects, Blender, Photoshop, Premiere Pro and other apps.

Join the #SeasonalArtChallenge

Don’t forget to join the #SeasonalArtChallenge by submitting spooky Halloween-inspired art in October and harvest- and fall-themed pieces in November.

Welcome to our community #SeasonalArtChallenge!

We want to see your spooky/Halloween-themed art like this piece from @michal_pruski (IG), this October!

Share your art with #SeasonalArtChallenge for a chance to be featured on the Studio or @NVIDIAOmniverse channels! pic.twitter.com/J2tqIXoahP

— NVIDIA Studio (@NVIDIAStudio) October 2, 2023

Follow NVIDIA Studio on Instagram, Twitter and Facebook. Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the Studio newsletter.
