California has a new weapon against the wildfires that have devastated the state: AI.

A freshly launched system powered by AI trained on NVIDIA GPUs promises to provide timely alerts to first responders across the Golden State every time a blaze ignites.

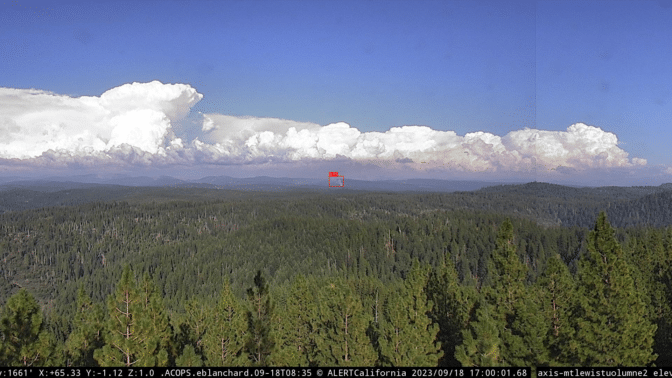

The ALERTCalifornia initiative, a collaboration between California’s wildfire-fighting agency CAL FIRE and the University of California, San Diego, uses advanced AI developed by DigitalPath.

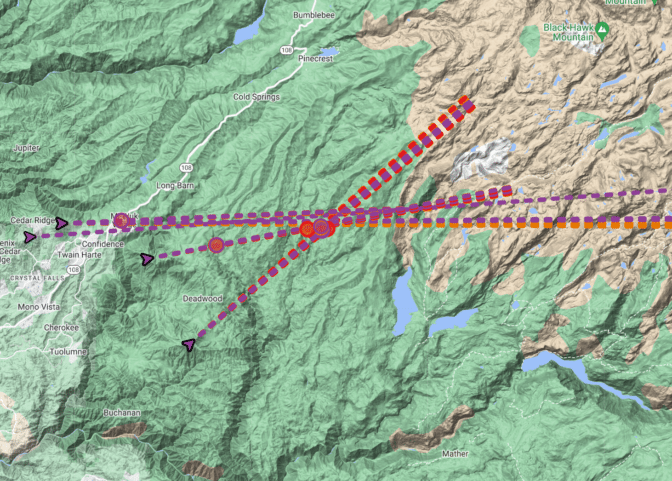

Harnessing the raw power of NVIDIA GPUs and aided by a network of thousands of cameras dotting the Californian landscape, DigitalPath has refined a convolutional neural network to spot signs of fire in real time.

A Mission That’s Close to Home

DigitalPath CEO Jim Higgins said it’s a mission that means a lot to the 100-strong technology partner, which is nestled in the Sierra Nevada foothills in Chico, Calif., a short drive from the town of Paradise, where the state’s deadliest wildfire killed 85 people in 2018.

“It’s one of the main reasons we’re doing this,” Higgins said of the wildfire, the deadliest and most destructive in the history of the most populous U.S. state. “We don’t want people to lose their lives.”

The ALERTCalifornia initiative is based at UC San Diego’s Jacobs School of Engineering, the Qualcomm Institute and the Scripps Institution of Oceanography.

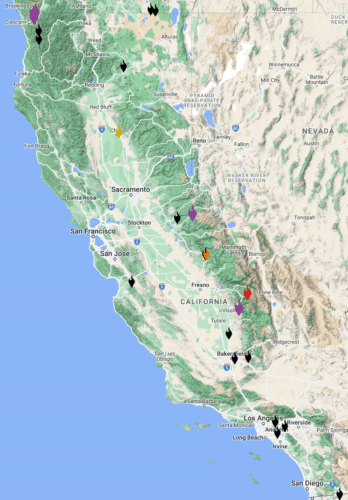

The program manages a network of thousands of monitoring cameras and sensor arrays and collects data that provides actionable, real-time information to inform public safety.

The AI program started in June and was initially deployed in six of CAL FIRE’s command centers. This month it expanded to all 21 of CAL FIRE’s command centers.

DigitalPath began by building out a management platform for a network of cameras used to confirm California wildfires after a 911 call.

The company quickly realized there would be no way to have people examine images from the thousands of cameras relaying images to the system every ten to fifteen seconds.

So Ethan Higgins, the company’s system architect, turned to AI.

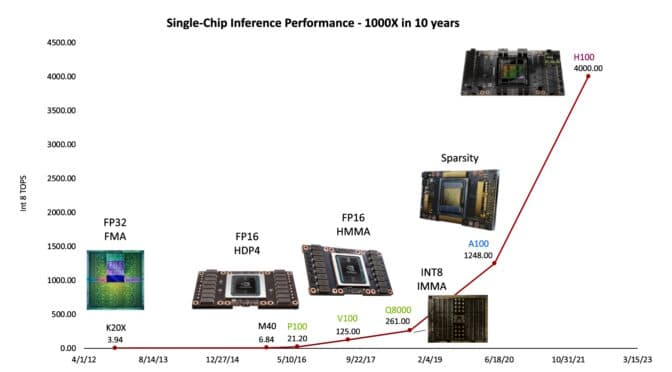

The team began by training a convolutional neural network on a cloud-based system running an NVIDIA A100 Tensor Core GPU and later transitioned to a system running on eight A100 GPUs.

The AI model is crucial for a system that takes in almost 8 million images a day, streaming in from over 1,000 first-party cameras, primarily in California, and thousands more from third-party sources nationwide, he said.
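To put that volume in perspective, here is a rough back-of-the-envelope calculation of the average image rate. It is only a sketch: it assumes the roughly 8 million daily images arrive evenly over 24 hours, which the article does not state, and the variable names are illustrative.

```python
# Average image rate implied by the article's daily total.
# Assumption: images arrive evenly across the day (not stated in the article).
SECONDS_PER_DAY = 24 * 60 * 60

images_per_day = 8_000_000  # "almost 8 million images a day" (from the article)
images_per_second = images_per_day / SECONDS_PER_DAY

print(f"about {images_per_second:.1f} images per second on average")
```

At roughly 90 images every second, around the clock, manual review of every frame is clearly impractical.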

Impact of Wildfires

It’s arriving just in time.

Wildfires have ravaged California over the past decade, burning millions of acres of land, destroying thousands of homes and businesses and claiming hundreds of lives.

According to CAL FIRE, in 2020 alone, the state experienced five of its six largest and seven of its 20 most destructive wildfires.

And the total dollar damage of wildfires in California from 2019 to 2021 was estimated at over $25 billion.

The new system promises to give first responders a crucial tool to prevent such conflagrations.

In fact, during a recent interview with DigitalPath, the system detected two separate fires in Northern California as they ignited.

Every day, the system detects between 50 and 300 events, offering invaluable real-time information to local first responders.

Beyond Detection: Enhancing Capabilities

But AI is just part of the story.

The system is also a case study in how innovative companies can use AI to amplify their unique capabilities.

One of DigitalPath’s breakthroughs is its system’s ability to identify the same fire captured from diverse camera angles. DigitalPath’s system efficiently filters imagery down to a human-digestible level. The system filters 8 million daily images down to just 100 alerts, or 1.25 thousandths of one percent of total images captured.
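The percentage quoted above can be checked with a few lines of arithmetic. This is a simple sketch using the article's rounded figures (8 million images, 100 alerts); the variable names are illustrative.

```python
from fractions import Fraction

images_per_day = 8_000_000  # rounded figure from the article
alerts_per_day = 100        # rounded figure from the article

# Share of images that end up as alerts, expressed as a percentage.
alert_percent = float(Fraction(alerts_per_day, images_per_day) * 100)

# Equivalent view: how many images stand behind each alert.
images_per_alert = images_per_day // alerts_per_day

print(f"{alert_percent:.5f}% of images become alerts")
print(f"one alert per {images_per_alert:,} images")
```

In other words, roughly one alert reaches a human for every 80,000 images the system ingests.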

“The system was designed from the start with human processing in mind,” Higgins said, so that authorities receive a single, consolidated notification for every incident.

“We’ve got to catch every fire we can,” he added.

Expanding Horizons

DigitalPath eventually hopes to expand its detection technology to help California detect more kinds of natural disasters.

And having proven its worth in California, DigitalPath is now in talks with state and county officials and university research teams across the fire-prone Western United States under its ALERTWest subsidiary.

Their goal: to help partners replicate the success of UC San Diego and ALERTCalifornia, potentially shielding countless lives and homes from the wrath of wildfires.

Featured image credit: SLworking2, via Flickr, Creative Commons license, some rights reserved.

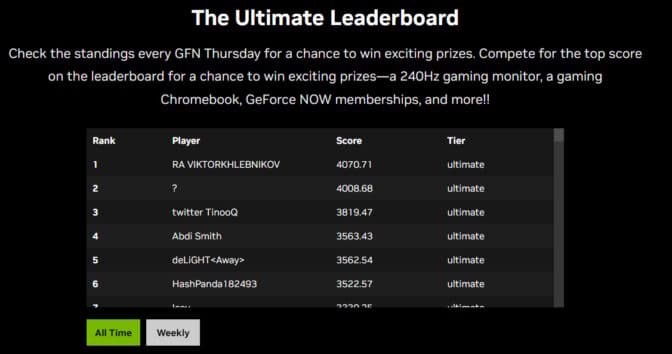
