Editor’s note: This post is part of our weekly In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks and demonstrates how NVIDIA Studio technology improves creative workflows. We’re also deep-diving on new GeForce RTX 40 Series GPU features, technologies and resources and how they dramatically accelerate content creation.

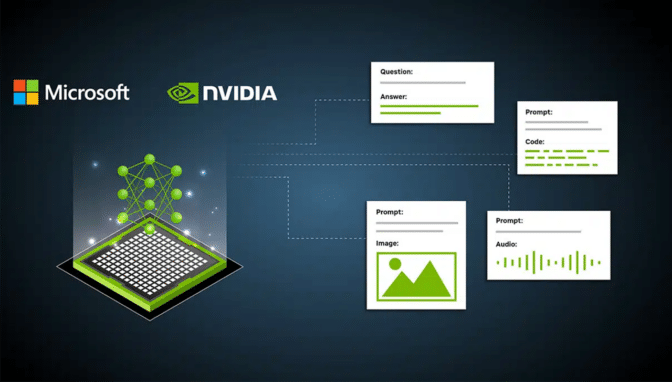

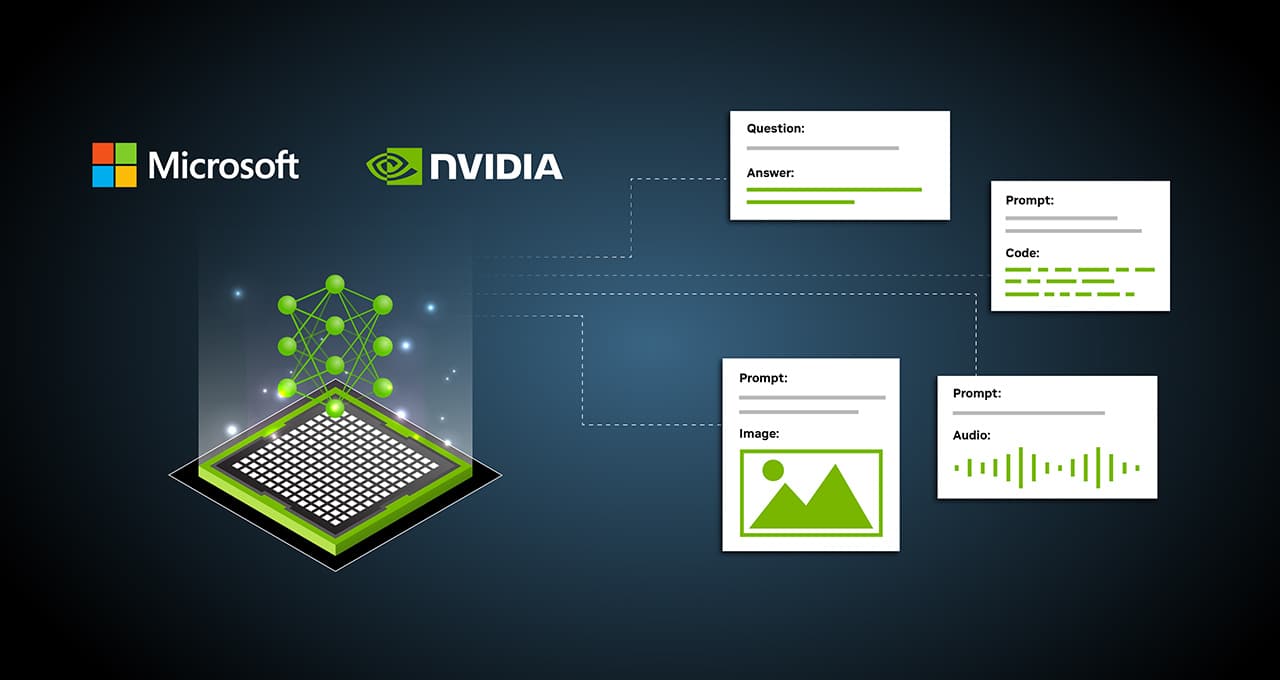

Improving gaming, creating and everyday productivity, NVIDIA RTX graphics cards feature specialized Tensor Cores that deliver cutting-edge performance and transformative capabilities for AI.

An arsenal of 100 million AI-ready, RTX-powered Windows 11 PCs and workstations is primed to excel in AI workflows and help launch a new wave of training models and apps.

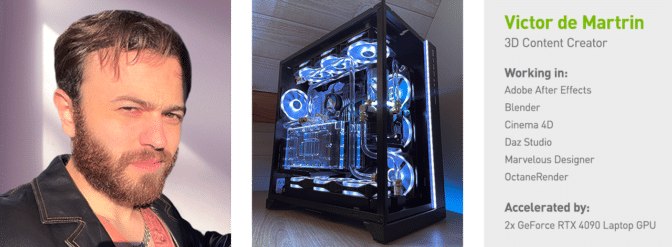

This week In the NVIDIA Studio, learn more about these AI power-players and read about Victor de Martrin, a self-taught 3D artist who shares the creative process behind his viral video Ascension, which features an assist from AI and uses a new visual effect process involving point clouds.

AI on the Prize

AI can elevate both work and play.

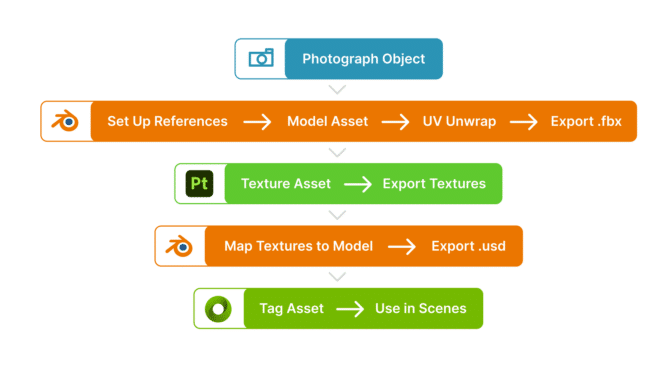

Content creators with NVIDIA Studio hardware can access over 100 AI-enabled and RTX-accelerated creative apps, including the Adobe Creative Cloud suite, Blender, Blackmagic Design’s DaVinci Resolve, OBS Studio and more.

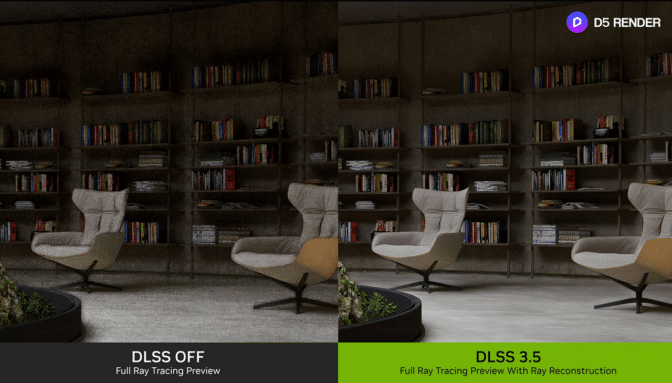

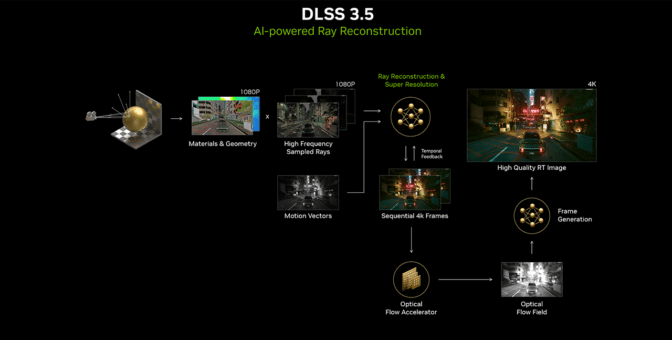

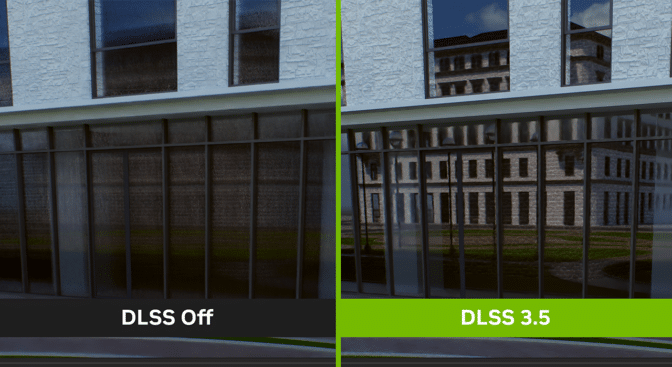

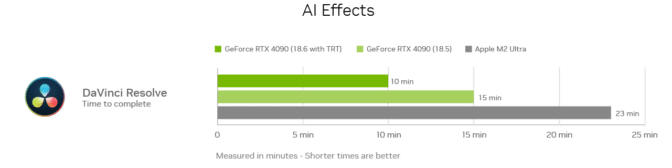

This includes the NVIDIA Studio suite of AI tools and exclusive next-generation technology such as NVIDIA DLSS 3.5, featuring Ray Reconstruction, and NVIDIA OptiX. AI image generation on a GeForce RTX 4090-powered PC can be completed at lightning speed; the same task would take 8x longer with an Apple M2 Ultra.

3D modelers can benefit immensely from AI, with dramatic improvements in real-time viewport rendering and upscaling with DLSS in NVIDIA Omniverse, Adobe Substance, Adobe Painter, Blender, D5 Render, Unreal Engine and more.

Gamers are primed for AI boosts with DLSS in over 300 games, including Alan Wake 2, coming soon, and Cyberpunk 2077: Phantom Liberty, available for download today.

Modders can breathe new life into classic games with NVIDIA RTX Remix’s revolutionary AI upscaling and texture enhancements. Game studios deploying the NVIDIA Avatar Cloud Engine (ACE) can build intelligent game characters, interactive avatars and digital humans in apps at scale — saving time and money.

Everyday tasks also get a boost. AI can draft emails, summarize content and upscale video to stunning 4K on YouTube and Netflix with RTX Video Super Resolution. Frequent video chat users can harness the power of NVIDIA Broadcast for AI-enhanced voice and video features. And livestreamers can take advantage of AI-powered features like virtual backgrounds, noise removal and eye contact, which moves the eyes of the speaker to simulate eye contact with the camera.

NVIDIA hardware powers AI natively and in the cloud — including for the world’s leading cloud AIs like ChatGPT, Midjourney, Microsoft 365 Copilot, Adobe Firefly and more.

Get further with AI — faster on RTX — and learn more to get started.

AI on Windows

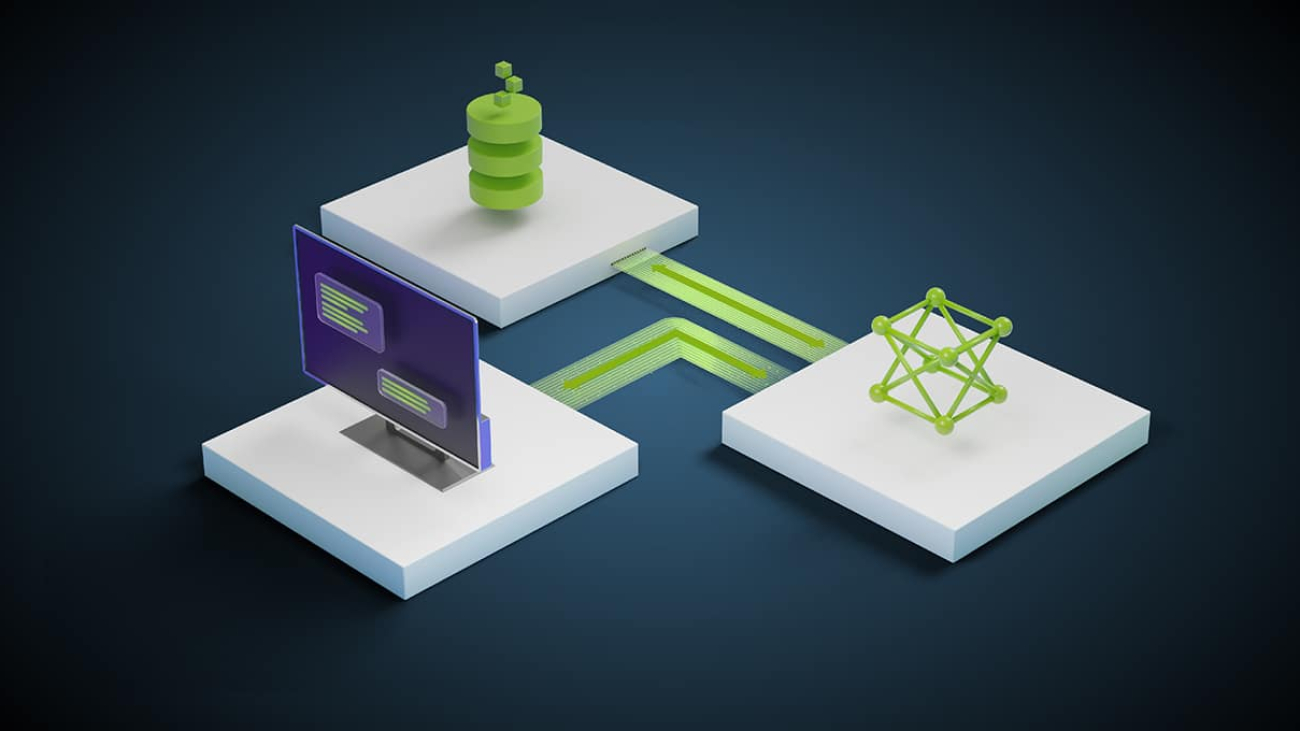

NVIDIA AI, the world’s leading AI development platform, is supported by 100 million AI-ready RTX-powered Windows 11 PCs and workstations.

State-of-the-art technologies behind the Windows platform and NVIDIA’s AI hardware and software enable GPU-accelerated workflows for training and deploying AI models, along with exclusive tools, containers, software development kits and new open-source models optimized for RTX.

With these integrations, Windows and NVIDIA are making it easier for developers to create the next generation of AI-powered Windows apps.

AI on Viral Content

3D content creator Martrin saw content creation as a means to gain financial, creative and geographical freedom — as well as a sense of gratification that he wasn’t getting in his day job in advertising.

“To gain recognition as an artist nowadays, unless you have connections, you’ve got to grind it out on social media,” admitted Martrin. As he began to showcase artwork to wider audiences, Martrin unexpectedly enjoyed the process of creating art aimed at garnering social media attention.

Best known for his viral video series Satisfying Marble Music — in which he replicates melodies by simulating a marble bouncing on xylophone notes — Martrin also takes a keen interest in the latest trends and advancements in the world of 3D to consistently meet his clients’ evolving demands.

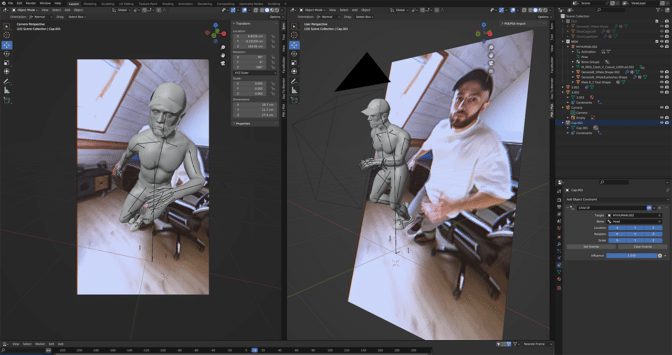

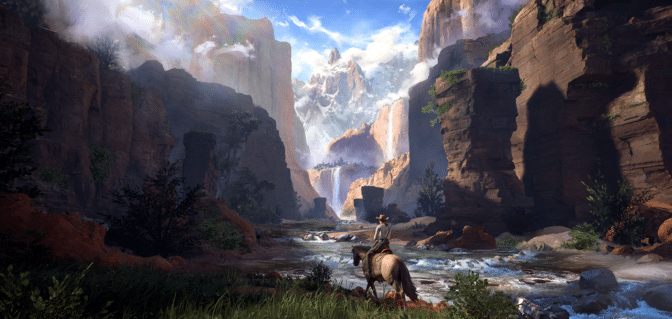

In his recent project Ascension, he experimented with a point-cloud modeling technique for the first time, aiming to create a transition between reality and 3D.

Martrin used several 3D apps to refine video footage and animate himself in 3D. The initial stages involved modeling and animating a portrait of himself, which he accomplished using Daz Studio and Blender. His PC, equipped with two NVIDIA GeForce RTX 4090 GPUs, easily handled this task.

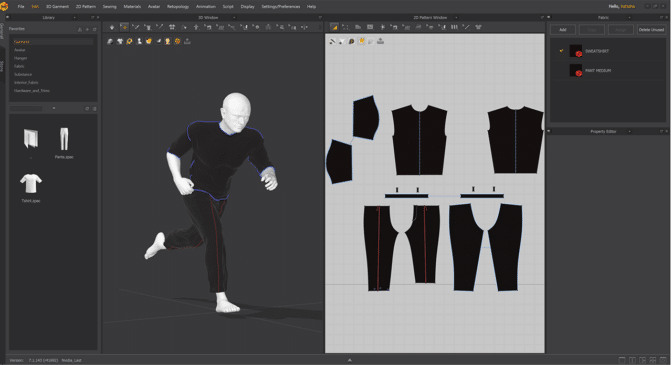

He then used the GPU-accelerated Marvelous Designer cloth simulation engine to craft and animate his clothing.

Martrin stressed the importance of GPU acceleration in his creative workflow. “When did I use it? The countless times I checked my live viewer and during the creation of every render,” said Martrin.

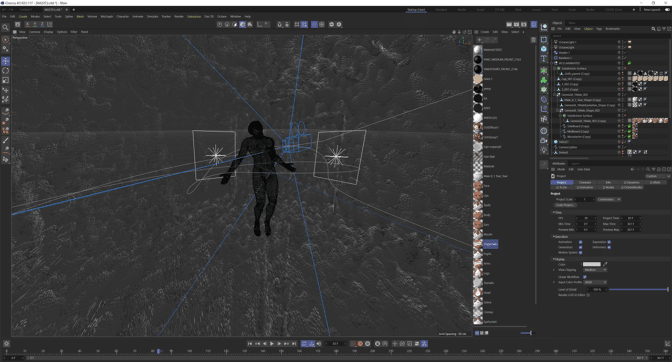

From there, Martrin tested point-cloud modeling techniques. He first 3D-scanned his room. Then, instead of using the room as a conventional 3D mesh, he deployed it as a set of data points in space to create a unique visual style.
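For readers curious what treating a scan as "a set of data points in space" can look like in practice, here is a minimal Python sketch. It is not Martrin's actual workflow or the method of any tool named in this post; it simply assumes a scan is available as a triangle mesh and scatters points across its surface. The function name `sample_point_cloud` and the tiny square "room floor" are hypothetical and exist only for illustration.

```python
import random


def sample_point_cloud(vertices, triangles, count, seed=0):
    """Scatter `count` points over the surface of a triangle mesh.

    vertices:  list of (x, y, z) tuples
    triangles: list of (i, j, k) index triples into `vertices`
    """
    rng = random.Random(seed)

    def area(tri):
        # Half the length of the cross product of two triangle edges.
        a, b, c = (vertices[i] for i in tri)
        ab = [b[n] - a[n] for n in range(3)]
        ac = [c[n] - a[n] for n in range(3)]
        cross = (
            ab[1] * ac[2] - ab[2] * ac[1],
            ab[2] * ac[0] - ab[0] * ac[2],
            ab[0] * ac[1] - ab[1] * ac[0],
        )
        return 0.5 * sum(x * x for x in cross) ** 0.5

    # Pick triangles in proportion to their area so large surfaces
    # receive more points than small ones.
    weights = [area(tri) for tri in triangles]
    points = []
    for tri in rng.choices(triangles, weights=weights, k=count):
        a, b, c = (vertices[i] for i in tri)
        # Random barycentric coordinates; fold back into the triangle
        # when the pair lands outside it.
        u, v = rng.random(), rng.random()
        if u + v > 1.0:
            u, v = 1.0 - u, 1.0 - v
        w = 1.0 - u - v
        points.append(tuple(w * a[n] + u * b[n] + v * c[n] for n in range(3)))
    return points


# Hypothetical stand-in for a scanned surface: a unit square made of two triangles.
floor_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
floor_triangles = [(0, 1, 2), (0, 2, 3)]
cloud = sample_point_cloud(floor_vertices, floor_triangles, count=1000)
```

Once the surface is reduced to loose points like this, each point can be rendered, displaced or animated on its own, which is what makes the point-cloud look distinct from a solid mesh.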

He then used Cinema 4D and OctaneRender with RTX-accelerated ray tracing to render the animation.

Martrin completed the project by applying GPU-accelerated compositing and special effects in Adobe After Effects, achieving faster rendering with NVIDIA CUDA technology.

“No matter how tough it is at the start, keep grinding and challenging — odds are, success will eventually follow,” said Martrin.

Check out Martrin’s viral content on TikTok.

Follow NVIDIA Studio on Instagram, Twitter and Facebook. Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the Studio newsletter.
