Editor’s note: This post is part of our weekly In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks, and demonstrates how NVIDIA Studio technology improves creative workflows.

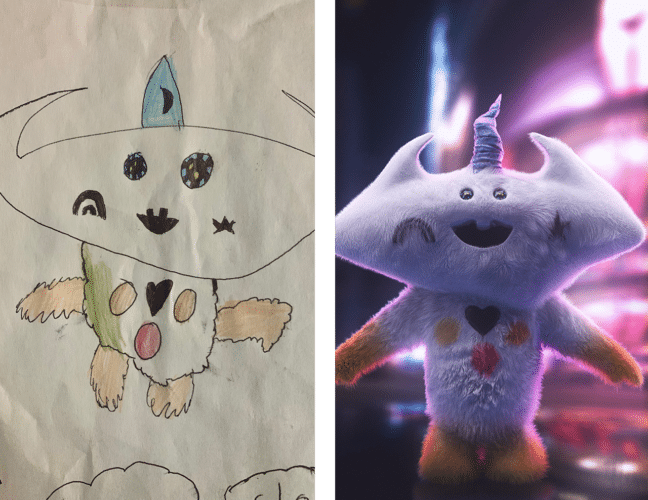

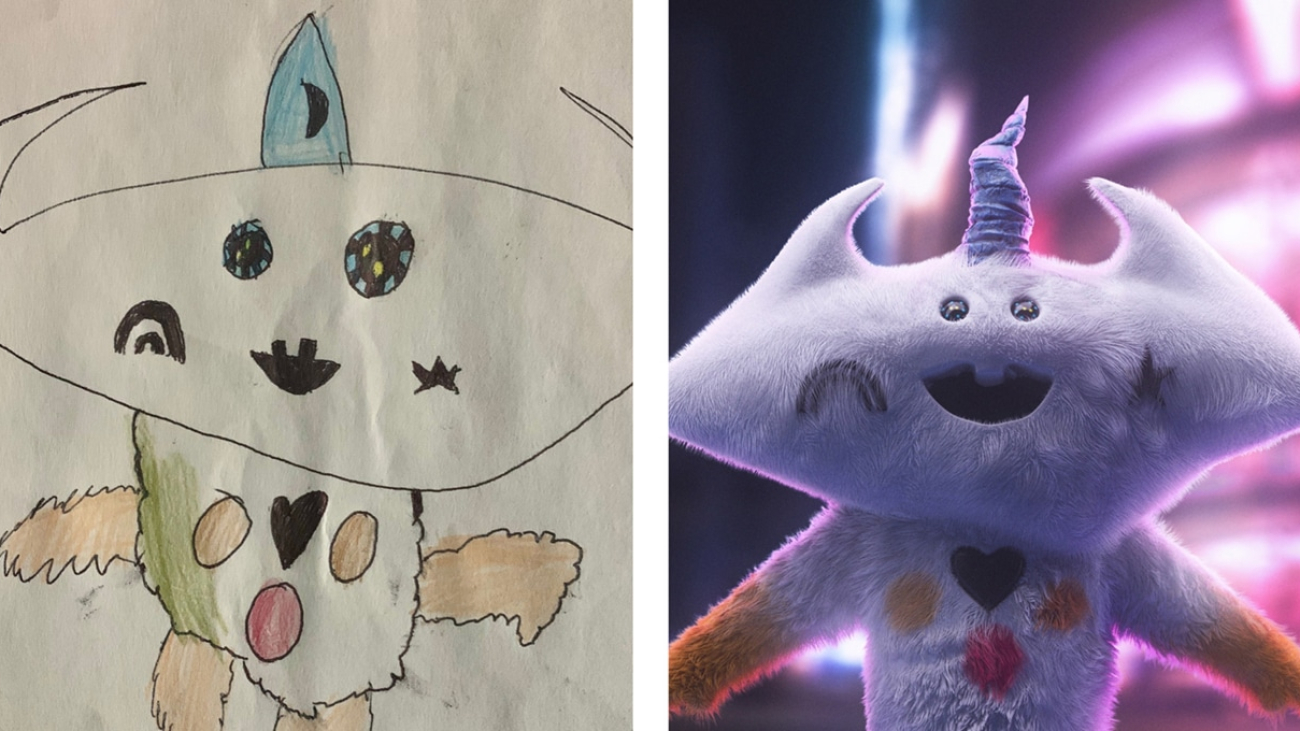

Principal NVIDIA artist and 3D expert Michael Johnson creates highly detailed art that’s both technically impressive and emotionally resonant. It’s evident in his latest piece, Father-Son Collaboration, which draws inspiration from the vivid imagination of his son and is highlighted this week In the NVIDIA Studio.

“I love how art can bring joy and great memories to others — great work makes me feel special to be a human and an artist,” said Johnson. “Art can flip people’s perspectives and make them feel something completely different.”

“The story behind this piece is that I simply wanted to inspire my son and teach him how things can be perceived — how people can be inspired by others’ art,” said Johnson, who could tell that his son — a doodler himself — often considered his own artwork not good enough.

“I wanted to show him what I saw in his art and how it inspired me,” Johnson said.

Through this project, Johnson also aimed to demonstrate the NVIDIA Studio-powered workflows of art studios and concept artists across the world.

NVIDIA RTX GPU technology plays a pivotal role in accelerating Johnson’s creativity. “As an artist, I care about quick feedback and stability,” he said. “My NVIDIA A6000 RTX graphics card speeds up the rendering process so I can quickly iterate.”

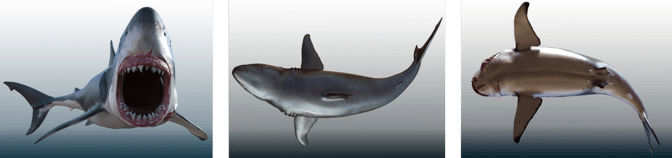

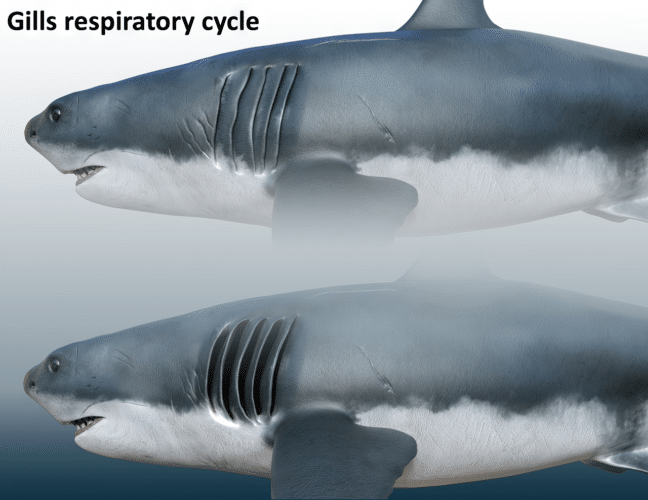

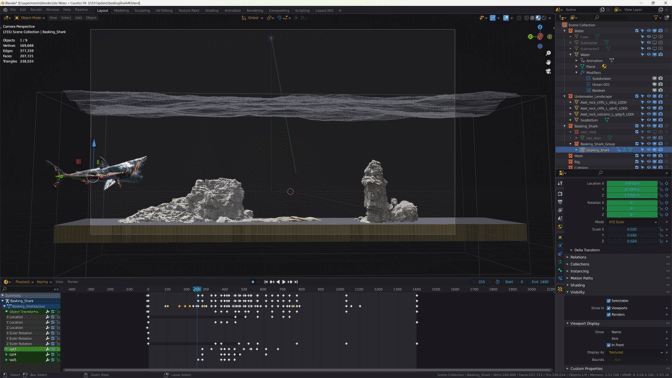

For Father-Son Collaboration, Johnson first opened Autodesk Maya to model the creature’s basic 3D shapes. His GPU-accelerated viewport enabled fast, interactive 3D modeling.

Next, he imported the models into ZBrush for further sculpting, freestyling and detailing. “After I had my final sculpt down, I took the model into Rizom-Lab IV software to lay out the UVs,” Johnson said. UV mapping is the process of projecting a 3D model’s surface onto a 2D image for texture mapping, which makes the model easier to texture and shade later in the creative workflow.

Johnson then used Adobe Substance 3D Painter to apply standard and custom textures and shaders on the character.

“Substance 3D Painter is really great because it displays the final look of the textures without bringing it into an external renderer,” said Johnson.

His GPU unlocked RTX-accelerated light and ambient occlusion baking, optimizing assets in mere seconds.

With the textures complete, Johnson imported his models back into Autodesk Maya for hair grooming, lighting and rendering. For the hair and fur, he used XGen, Autodesk Maya’s built-in instancing tool. Autodesk Maya also supports third-party GPU-accelerated renderers such as Chaos V-Ray, OTOY OctaneRender and Maxon Redshift.

“Redshift is great — and having a great GPU makes renders really quick,” Johnson added. Redshift’s RTX-accelerated final-frame rendering, combined with AI-powered OptiX denoising, let him export final files with plenty of time to spare.

Johnson put the final touches on Father-Son Collaboration in Adobe Photoshop. Drawing on its more than 30 GPU-accelerated features, such as blur gallery, object selection and perspective warp, he added the background and made minor touch-ups to complete the piece.

The joy, awe and wonderment he’d hoped to evoke in his son came to fruition when Johnson finally shared the piece.

“Art is one of the rare things in life that really has no end goal — as it’s really about the process, rather than the result,” Johnson said. “Every day, you learn something new, grow and see things in different ways.”

Check out Johnson’s portfolio on Instagram.

Follow NVIDIA Studio on Instagram, Twitter and Facebook. Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the Studio newsletter.

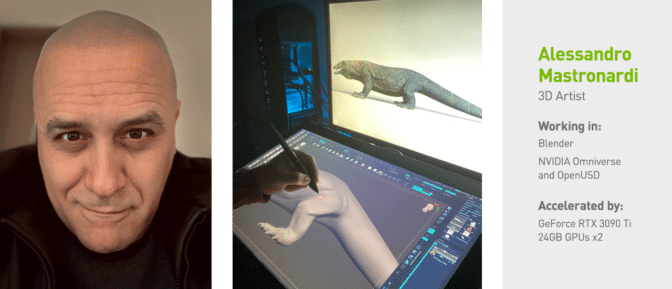

Learn about the latest with OpenUSD and Omniverse at SIGGRAPH, running August 6-10. Take advantage of showfloor experiences like hands-on labs, special events and demo booths — and don’t miss NVIDIA founder and CEO Jensen Huang’s keynote address on Tuesday, Aug. 8, at 8 a.m. PT.
