Robots are moving goods in warehouses, packaging foods and helping assemble vehicles — when they’re not flipping burgers or serving lattes.

How did they get so skilled so fast? Robotics simulation.

Robotics simulation is making leaps in progress and transforming industries all around us.

Robotics Simulation Summarized

A robotics simulator places a virtual robot in virtual environments to test the robot’s software without requiring the physical robot. The latest simulators can also generate datasets for training machine learning models that will run on the physical robots.

In this virtual world, developers create digital versions of robots, environments and other assets robots might encounter. These environments can obey the laws of physics and mimic real-world gravity, friction, materials and lighting conditions.

Who Uses Robotics Simulation?

Robots boost operations at massive scale today, and some of the biggest and most innovative names in robotics rely on robotics simulation.

Fulfillment centers handle tens of millions of packages a day, thanks to the operational efficiencies uncovered in simulation.

Amazon Robotics uses it to support its fulfillment centers. BMW Group taps into it to accelerate planning for its automotive assembly plants. Soft Robotics applies it to perfecting gripping for picking and placing foods for packaging.

Automakers worldwide are supporting their operations with robotics.

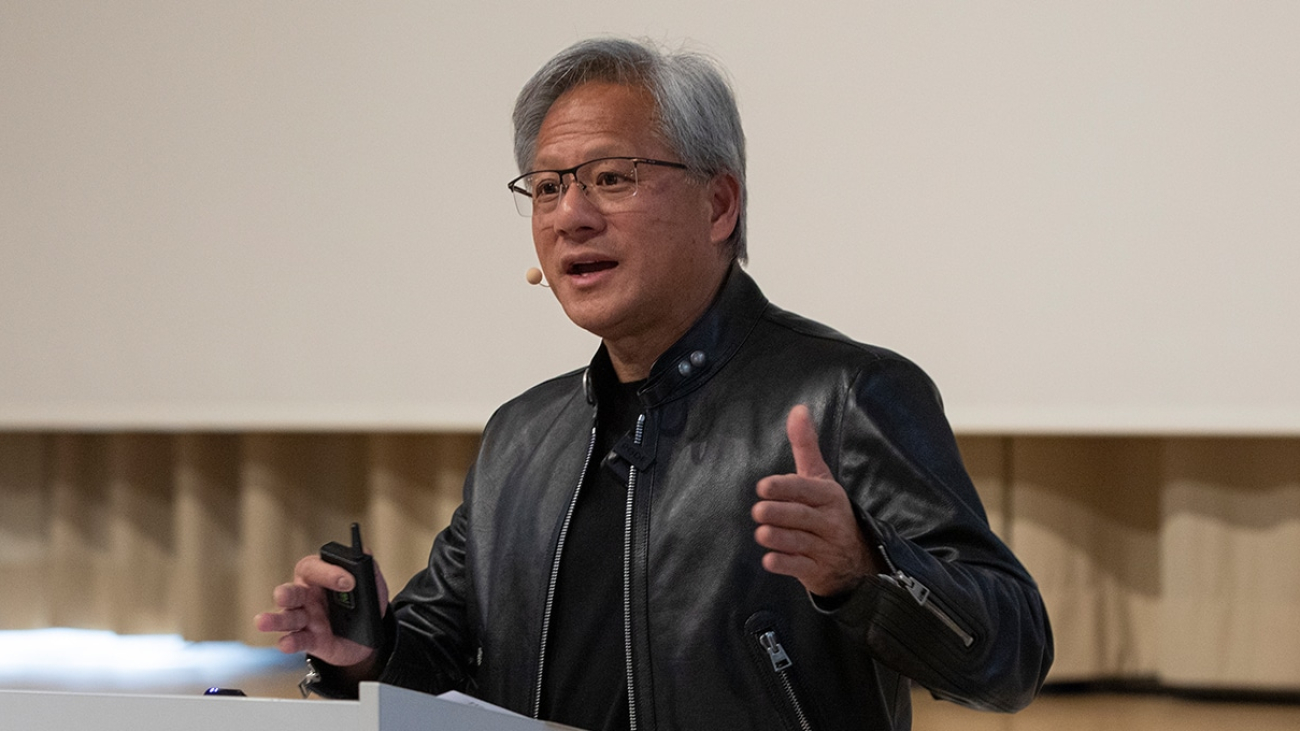

“Car companies employ nearly 14 million people. Digitalization will enhance the industry’s efficiency, productivity and speed,” said NVIDIA CEO Jensen Huang during his latest GTC keynote.

How Robotics Simulation Works, in Brief

An advanced robotics simulator begins by applying fundamental equations of physics. For example, it can use Newton’s laws of motion to determine how objects move over a small increment of time, called a timestep. It can also incorporate a robot’s physical constraints, such as being composed of hinge-like joints or being unable to pass through other objects.
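As a rough illustration of that timestep loop, the sketch below assumes the simplest possible scene: a single point mass that moves only vertically under gravity above a flat ground plane. It applies Newton’s second law with semi-implicit Euler integration, one common textbook choice, and enforces a single constraint, that the body can’t pass through the ground. The names `Body`, `step` and `simulate` are hypothetical, and this is not how Isaac Sim or PhysX work internally.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2, acting along the vertical axis


@dataclass
class Body:
    height: float    # m, position along the vertical axis
    velocity: float  # m/s
    mass: float      # kg


def step(body: Body, dt: float, external_force: float = 0.0) -> None:
    """Advance one timestep with semi-implicit (symplectic) Euler."""
    # Newton's second law: a = F / m, plus gravity.
    acceleration = external_force / body.mass + GRAVITY
    # Update velocity first, then position using the new velocity.
    body.velocity += acceleration * dt
    body.height += body.velocity * dt
    # Constraint: the body may not pass through the ground plane at height 0.
    if body.height < 0.0:
        body.height = 0.0
        body.velocity = max(body.velocity, 0.0)


def simulate(body: Body, dt: float, num_steps: int) -> list[float]:
    """Repeat the step for as many timesteps as requested; return the heights."""
    heights = []
    for _ in range(num_steps):
        step(body, dt)
        heights.append(body.height)
    return heights


ball = Body(height=1.0, velocity=0.0, mass=0.5)
trajectory = simulate(ball, dt=1 / 240, num_steps=480)  # 2 simulated seconds
print(f"final height: {trajectory[-1]:.3f} m")
```

Smaller timesteps follow the true motion more closely but require more steps per simulated second, which is one reason raw simulator speed matters.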

Simulators use various methods to detect potential collisions between objects, identify contact points between colliding objects, and compute forces or impulses to prevent objects from passing through one another. Simulators can also compute sensor signals requested by a user, such as torques at robot joints or forces between a robot’s gripper and an object.
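To make those collision steps concrete, here is a minimal sketch for the simplest case, two circular bodies moving in a plane. It detects overlap, finds a contact point and normal, then applies equal and opposite impulses so the bodies stop approaching and no longer interpenetrate. The restitution value, the `Sphere` type and the function names are illustrative assumptions; real engines handle arbitrary shapes, friction and many simultaneous contacts.

```python
import math
from dataclasses import dataclass


@dataclass
class Sphere:
    x: float       # center position (m)
    y: float
    vx: float      # velocity (m/s)
    vy: float
    radius: float  # m
    mass: float    # kg


def detect_contact(a: Sphere, b: Sphere):
    """Return (unit normal from a to b, penetration depth, contact point), or None."""
    dx, dy = b.x - a.x, b.y - a.y
    dist = math.hypot(dx, dy)
    penetration = a.radius + b.radius - dist
    if penetration <= 0.0 or dist == 0.0:
        return None  # not touching (or centers coincide, so the normal is undefined)
    nx, ny = dx / dist, dy / dist
    contact_point = (a.x + nx * a.radius, a.y + ny * a.radius)  # on a's surface
    return (nx, ny), penetration, contact_point


def resolve_contact(a: Sphere, b: Sphere, restitution: float = 0.5) -> float:
    """Apply equal and opposite impulses along the normal; return the impulse size."""
    contact = detect_contact(a, b)
    if contact is None:
        return 0.0
    (nx, ny), penetration, _ = contact
    # Velocity of b relative to a along the normal; negative means approaching.
    rel_vn = (b.vx - a.vx) * nx + (b.vy - a.vy) * ny
    if rel_vn >= 0.0:
        return 0.0  # already separating, so no impulse is needed
    inv_a, inv_b = 1.0 / a.mass, 1.0 / b.mass
    j = -(1.0 + restitution) * rel_vn / (inv_a + inv_b)
    a.vx -= j * nx * inv_a
    a.vy -= j * ny * inv_a
    b.vx += j * nx * inv_b
    b.vy += j * ny * inv_b
    # Separate the overlapping bodies, moving the lighter one farther.
    share_a = inv_a / (inv_a + inv_b)
    a.x -= nx * penetration * share_a
    a.y -= ny * penetration * share_a
    b.x += nx * penetration * (1.0 - share_a)
    b.y += ny * penetration * (1.0 - share_a)
    return j


# Two equal spheres moving toward each other and slightly overlapping.
left = Sphere(0.0, 0.0, 1.0, 0.0, 0.5, 1.0)
right = Sphere(0.9, 0.0, -1.0, 0.0, 0.5, 1.0)
impulse = resolve_contact(left, right)
```

The returned impulse divided by the timestep approximates the average contact force over that step, which is the kind of quantity a simulated force sensor would report.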

The simulator will then repeat this process for as many timesteps as the user requires. Some simulators — such as NVIDIA Isaac Sim, an application built on NVIDIA Omniverse — can also provide physically accurate visualizations of the simulator output at each timestep.

Using a Robotics Simulator for Outcomes

A robotics simulator user will typically import computer-aided design models of the robot and either import or generate objects of interest to build a virtual scene. A developer can use a set of algorithms to perform task planning and motion planning, and then prescribe control signals to carry out those plans. This enables the robot to perform a task and move in a particular way, such as picking up an object and placing it at a target location.
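A toy version of this plan-then-control workflow for a single robot joint might look like the sketch below. The "planner" is only straight-line interpolation between a start and a goal angle, and the control signals come from a proportional-derivative (PD) controller, a common but by no means the only choice. The joint inertia, gains and function names are hypothetical values picked for illustration.

```python
def plan_joint_path(start: float, goal: float, num_waypoints: int) -> list[float]:
    """Toy motion planner: evenly spaced joint angles (rad) from start to goal."""
    return [start + (goal - start) * i / (num_waypoints - 1) for i in range(num_waypoints)]


def pd_torque(target: float, angle: float, velocity: float, kp: float, kd: float) -> float:
    """Control signal (torque) pushing the joint toward the target and damping its speed."""
    return kp * (target - angle) - kd * velocity


def execute(path: list[float], inertia: float = 0.1, kp: float = 20.0, kd: float = 2.0,
            dt: float = 1e-3, steps_per_waypoint: int = 200, settle_steps: int = 2000) -> float:
    """Simulate one joint tracking the path; return its final angle (rad)."""
    angle, velocity = path[0], 0.0
    # Track each waypoint in turn, then hold the last one so the joint settles.
    targets = [t for t in path for _ in range(steps_per_waypoint)] + [path[-1]] * settle_steps
    for target in targets:
        torque = pd_torque(target, angle, velocity, kp, kd)
        velocity += (torque / inertia) * dt  # single joint: angular accel = torque / inertia
        angle += velocity * dt
    return angle


path = plan_joint_path(start=0.0, goal=1.0, num_waypoints=11)
final_angle = execute(path)
```

With these gains the joint is moderately damped, so it follows each waypoint without large overshoot. A developer who sees a failed motion in simulation would adjust values like these, or the plan itself, and rerun.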

The developer can observe the outcome of the plans and control signals and then modify them as needed to ensure success. More recently, there’s been a shift toward machine learning-based methods. So instead of directly prescribing control signals, the user prescribes a desired behavior, like moving to a location without colliding. In this situation, a data-driven algorithm generates control signals based on the robot’s simulated sensor signals.

These algorithms can include imitation learning, in which human demonstrations provide references, and reinforcement learning, in which robots learn behaviors through intelligent trial and error, compressing years of learning into a much shorter time through accelerated virtual experience.
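Trial-and-error learning can be illustrated with tabular Q-learning on a toy problem, assuming a one-dimensional corridor in place of a robot: an agent starts at one end, is rewarded for reaching the other, and pays a small cost for every step. Robot learning in simulators typically uses deep reinforcement learning with neural networks and many parallel environments, so treat this only as a sketch of the core update rule. The corridor, rewards and hyperparameters are all assumptions.

```python
import random


def train_q_learning(num_states: int = 6, episodes: int = 500, alpha: float = 0.5,
                     gamma: float = 0.9, epsilon: float = 0.2, seed: int = 0) -> list[list[float]]:
    """Learn action values for a corridor whose last state is the goal."""
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 moves left, action 1 moves right
    goal = num_states - 1
    q = [[0.0, 0.0] for _ in range(num_states)]
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = max(range(2), key=lambda i: q[state][i])
            next_state = min(max(state + moves[action], 0), goal)
            reward = 1.0 if next_state == goal else -0.01
            # Q-learning update toward reward plus discounted best future value.
            best_next = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
    return q


q_table = train_q_learning()
policy = ["right" if values[1] > values[0] else "left" for values in q_table[:-1]]
print(policy)
```

After training, the greedy policy should move right in every non-goal state. Because simulated episodes cost only compute, the agent can afford hundreds of failed attempts, which is the advantage accelerated virtual experience offers.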

Simulation Drives Breakthroughs

Simulation solves big problems. It is used to verify, validate and optimize robot designs, systems and algorithms. Simulation also helps optimize facility designs for maximum efficiency before construction or remodeling begins, which reduces costly manufacturing change orders.

For robots to work safely among people, flawless motion planning is necessary. To handle delicate objects, robots need to be precise at making contact and grasping. These machines, as well as autonomous mobile robots and vehicle systems, are trained on vast amounts of data to develop safe movement.

Drawing on synthetic data, simulations are enabling virtual advances that weren’t previously possible. Robots born and raised in simulation today will go on to solve all manner of problems in the real world.

Simulation Research Is Propelling Progress

Driven by researchers, recent simulation advances are rapidly improving the capabilities and flexibility of robotics systems, which is accelerating deployments.

University researchers, often working with NVIDIA Research and technical teams, are solving problems in simulation that have real-world impact. Their work is expanding the potential for commercialization of new robotics capabilities across numerous markets.

Among these advances, robots are learning to cut squishy materials such as beef and chicken, fasten nuts and bolts for automotive assembly, plan collision-free motion in warehouses and manipulate objects with robotic hands at new levels of dexterity.

Such research has commercial promise across trillion-dollar industries.

High-Fidelity, Physics-Based Simulation Breakthroughs

The ability to model physics and display it in high resolution ushered in many industrial advances.

Researched for decades, physics-based simulation is delivering commercial breakthroughs today.

NVIDIA PhysX, part of Omniverse core technology, delivers high-fidelity physics-based simulations, enabling real-world experimentation in virtual environments.

PhysX makes it possible to assess grasp quality so that robots can learn to grasp unknown objects. It is also well suited to developing skills such as manipulation, locomotion and flight.
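One simple, classical way to score a two-finger grasp is to check whether it is antipodal: for point contacts with friction in a plane, the grasp achieves force closure when the line between the two contacts lies inside both friction cones. The sketch below computes how much angular margin a grasp has under that condition. It is a textbook heuristic offered for illustration, not the grasp-quality method used with PhysX, and the friction coefficient and function names are assumptions.

```python
import math


def angle_between(u: tuple[float, float], v: tuple[float, float]) -> float:
    """Angle in radians between two 2D vectors."""
    dot = u[0] * v[0] + u[1] * v[1]
    norm = math.hypot(*u) * math.hypot(*v)
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def antipodal_grasp_margin(p1, n1, p2, n2, mu: float) -> float:
    """Friction-cone margin (rad) for a planar two-finger grasp.

    p1, p2: contact points; n1, n2: outward surface normals at those points.
    A positive result means the line between the contacts lies inside both
    friction cones (two-finger force closure); larger values are more robust.
    """
    half_angle = math.atan(mu)  # friction cone half-angle
    axis = (p2[0] - p1[0], p2[1] - p1[1])  # from contact 1 toward contact 2
    # Each finger pushes into the object, opposite its outward surface normal.
    a1 = angle_between(axis, (-n1[0], -n1[1]))
    a2 = angle_between((-axis[0], -axis[1]), (-n2[0], -n2[1]))
    return half_angle - max(a1, a2)


# Squeezing opposite faces of a 2 m wide box versus grabbing a side and the top.
good = antipodal_grasp_margin((-1.0, 0.0), (-1.0, 0.0), (1.0, 0.0), (1.0, 0.0), mu=0.5)
bad = antipodal_grasp_margin((-1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, 1.0), mu=0.5)
```

Ranking candidate grasps by this margin favors grasps that tolerate more error in where the fingers actually land.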

Now open source, PhysX 5 opens the door to industrial application development everywhere. Today, roboticists can access PhysX as part of Isaac Sim, built on Omniverse.

The Nuts and Bolts of Assembly Simulation

With physics-based grasping in place, the next step was to simulate more capable robotic maneuvering for industrial applications.

Assembly is a big one. It’s an essential part of building products for automotive, electronics, aerospace and medical industries. Assembly tasks include tightening nuts and bolts, soldering and welding, inserting electrical connections and routing cables.

Robotic assembly, however, is a long-standing work in progress. That’s because the physical manipulation complexity, part variability and high accuracy and reliability requirements make it extra tricky to complete successfully — even for humans.

That hasn’t stopped researchers and developers from trying. They are putting simulation to work on these contact-rich interactions, and there are signs of progress.

In 2022, NVIDIA robotics and simulation researchers came up with a novel simulation approach to overcome the robotic assembly challenge using Isaac Sim. Their research paper, titled Factory: Fast Contact for Robotic Assembly, outlines a set of physics simulation methods and robot learning tools for achieving real-time or faster simulation of a wide range of contact-rich interactions, including assembly.

Solving the Sim-to-Real Gap for Assembly Scenarios

Advancing the simulation work developed in the paper, researchers followed up with an effort to help solve what’s called the sim-to-real gap.

This gap is the difference between what a robot has learned in simulation and what it needs to learn to be ready for the real world.

In another paper, IndustReal: Transferring Contact-Rich Assembly Tasks from Simulation to Reality, researchers outlined a set of algorithms, systems and tools for solving assembly tasks in simulation and transferring these skills to real robots.

NVIDIA researchers have also developed a more efficient method for teaching robots real-world manipulation tasks, such as opening drawers or dispensing soap, that trains significantly faster than the current standard.

The research paper RVT: Robotic View Transformer for 3D Object Manipulation uses a type of neural network called a multi-view transformer to produce virtual views from the camera input.

The work combines text prompts, video input and simulation to train 36x faster than the current state of the art, reducing the time needed to teach the robot from weeks to days, with a 26 percent improvement in the robot’s task success rate.

Robot Hands Are Grasping Dexterity

Researchers have taken on the challenge of creating more agile hands that can work in all kinds of settings and take on new tasks.

Developers are building robotic gripping systems to pick and place items, but creating highly capable hands with human-like dexterity has so far proven too complex. Using deep reinforcement learning can require billions of labeled images, making it impractical.

NVIDIA researchers working on a project called DeXtreme tapped into NVIDIA Isaac Gym and Omniverse Replicator to show that simulation could be used to train a robot hand to quickly manipulate a cube into a desired position. Tasks like this are challenging for robotics simulators because the manipulation involves a large number of contacts and the motion has to be fast to complete the task in a reasonable amount of time.
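Part of training a hand on a cube task is deciding when the cube has reached its target. One common approach, assumed here rather than taken from the DeXtreme work, is to represent orientations as unit quaternions and measure the smallest rotation angle between the current and goal orientations. The tolerance passed to `goal_reached` is a hypothetical parameter.

```python
import math

Quat = tuple[float, float, float, float]  # (w, x, y, z), unit length


def quat_from_axis_angle(axis: tuple[float, float, float], angle: float) -> Quat:
    """Unit quaternion for a rotation of `angle` radians about `axis`."""
    ax, ay, az = axis
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    s = math.sin(angle / 2) / norm
    return (math.cos(angle / 2), ax * s, ay * s, az * s)


def orientation_error(q1: Quat, q2: Quat) -> float:
    """Smallest rotation angle (rad) that takes orientation q1 to q2."""
    # abs() because q and -q describe the same rotation.
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    return 2.0 * math.acos(min(1.0, dot))


def goal_reached(current: Quat, goal: Quat, tolerance_rad: float) -> bool:
    """Hypothetical success check: orientation within a chosen tolerance."""
    return orientation_error(current, goal) <= tolerance_rad


identity = (1.0, 0.0, 0.0, 0.0)
quarter_turn = quat_from_axis_angle((0.0, 0.0, 1.0), math.pi / 2)
```

Comparing full orientations rather than individual angles avoids problems such as wraparound at 360 degrees.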

The advances in hand dexterity pave the way for robots to handle tools, making them more useful in industrial settings.

The DeXtreme project, which applies the laws of physics, is capable of training robots inside its simulated universe 10,000x faster than if trained in the real world. This equates to days of training versus years.
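The speedup arithmetic is straightforward: simulated experience equals wall-clock training time multiplied by the speedup factor. The two-day training run below is a hypothetical figure chosen to illustrate the 10,000x claim, not a number reported for DeXtreme.

```python
DAYS_PER_YEAR = 365.25


def real_world_equivalent_years(sim_days: float, speedup: float) -> float:
    """Years of real-world experience matched by sim_days of training at a given speedup."""
    return sim_days * speedup / DAYS_PER_YEAR


# Hypothetical example: two days of simulated training at a 10,000x speedup.
years = real_world_equivalent_years(sim_days=2, speedup=10_000)
print(f"{years:.1f} years")
```

Two days at that rate corresponds to roughly 55 years of real-world experience, so "days versus years" is the right order of magnitude.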

This feat shows that the simulator can model contacts well enough to allow sim-to-real transfer, a holy grail for robotic hand dexterity.

Cutting-Edge Research on Robotic Cutting

Robots that are capable of cutting can create new market opportunities.

In 2021, a team of researchers from NVIDIA, University of Southern California, University of Washington, University of Toronto and Vector Institute, and University of Sydney won “Best Student Paper” at the Robotics: Science and Systems conference. The work, titled DiSECt: A Differentiable Simulation Engine for Autonomous Robotic Cutting, details a “differentiable simulator” for teaching robots to cut soft materials. Previously, robots trained in this area were unreliable.

The DiSECt simulator can accurately predict the forces on a knife as it presses and slices through common biological materials.

DiSECt relies on the finite element method, a numerical technique for solving differential equations in mathematical modeling and engineering. Differential equations describe how the rate of change, or derivative, of one variable relates to other variables. In robotics, differential equations usually describe the relationship between forces and movement.
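DiSECt models the cutting of soft 3D materials, which is far more involved than anything that fits here. The core steps of the finite element method can still be shown on a textbook problem, assumed for illustration: a straight elastic bar with axial stiffness EA, fixed at one end and pulled by a force at the other. The bar is split into linear elements, each element’s stiffness is assembled into a global matrix, the fixed support is applied, and the linear system K u = f relating forces to displacements is solved. All function names are hypothetical.

```python
def assemble_bar_stiffness(num_elements: int, length: float, ea: float) -> list[list[float]]:
    """Global stiffness matrix for a 1D bar split into equal linear elements."""
    h = length / num_elements
    n = num_elements + 1  # number of nodes
    k = [[0.0] * n for _ in range(n)]
    ke = ea / h  # element stiffness matrix is (EA / h) * [[1, -1], [-1, 1]]
    for e in range(num_elements):
        i, j = e, e + 1
        k[i][i] += ke
        k[i][j] -= ke
        k[j][i] -= ke
        k[j][j] += ke
    return k


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Gaussian elimination without pivoting (fine for this positive-definite system)."""
    n = len(b)
    a = [row[:] for row in a]
    b = b[:]
    for col in range(n):
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for c in range(col, n):
                a[row][c] -= factor * a[col][c]
            b[row] -= factor * b[col]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        x[row] = (b[row] - sum(a[row][c] * x[c] for c in range(row + 1, n))) / a[row][row]
    return x


def bar_tip_load(num_elements: int, length: float, ea: float, tip_force: float) -> list[float]:
    """Nodal displacements of a bar fixed at x = 0 and pulled by tip_force at x = length."""
    k = assemble_bar_stiffness(num_elements, length, ea)
    f = [0.0] * (num_elements + 1)
    f[-1] = tip_force
    # Fixed support at node 0: drop its row and column, then solve K u = f.
    k_free = [row[1:] for row in k[1:]]
    return [0.0] + solve(k_free, f[1:])


u = bar_tip_load(num_elements=4, length=1.0, ea=1000.0, tip_force=10.0)
```

For this load case the exact solution is u(x) = P x / (EA), and linear elements reproduce it exactly at the nodes, so the tip moves P L / (EA) = 0.01 for the values used.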

Applying these principles, the DiSECt project holds promise for training robots in surgery and food processing, among other areas.

Teaching Collision-Free Motion for Autonomy

So, robotic grasping, assembling, manipulating and cutting are all making leaps. But what about autonomous mobile robots that can safely navigate?

Currently, developers can train robots for specific settings, such as a factory floor, fulfillment center or manufacturing plant. Within those settings, simulations can solve problems for specific robots, such as pallet jacks, robotic arms and walking robots. Amid these chaotic setups and robot types, there are plenty of people and obstacles to avoid. In such scenes, collision-free motion generation for unknown, cluttered environments is a core component of robotics applications.

Traditional motion planning approaches that attempt to address these challenges can come up short in unknown or dynamic environments. SLAM, or simultaneous localization and mapping, can generate 3D maps of environments from camera images taken from multiple viewpoints, but the maps must be revised when objects move or environments change.

To help overcome some of these shortcomings, the NVIDIA Robotics research team and the University of Washington have co-developed a new model dubbed Motion Policy Networks, or MπNets. MπNets is an end-to-end neural policy that generates collision-free motion in real time using a continuous stream of data coming from a single fixed camera. MπNets has been trained on more than 3 million motion planning problems using a pipeline of geometric fabrics from NVIDIA Omniverse and 700 million point clouds rendered in simulation. Training on such large datasets enables it to navigate unknown environments in the real world.

Apart from directly learning a trajectory model as in MπNets, the team also recently unveiled a new point cloud-based collision model called CabiNet. With CabiNet, one can deploy general-purpose pick-and-place policies for unknown objects beyond a tabletop setup. CabiNet was trained on over 650,000 procedurally generated simulated scenes and evaluated in NVIDIA Isaac Gym. Training on a large synthetic dataset allowed it to generalize even to out-of-distribution scenes in a real kitchen environment, without needing any real data.
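CabiNet learns to predict collisions from point clouds; a much simpler geometric check helps show what such a model has to decide. The sketch below approximates a gripper as a few spheres, reports a collision if any point in the observed cloud falls inside one of them, and picks the first collision-free candidate. This brute-force test is a stand-in assumed for illustration, not the CabiNet model, and the names and safety margin are hypothetical.

```python
import math

Point = tuple[float, float, float]
SphereShape = tuple[Point, float]  # (center, radius)


def in_collision(spheres: list[SphereShape], cloud: list[Point], margin: float = 0.0) -> bool:
    """True if any point in the cloud lies within any sphere (plus a safety margin)."""
    for center, radius in spheres:
        limit = radius + margin
        for p in cloud:
            if math.dist(center, p) <= limit:
                return True
    return False


def first_collision_free(candidates: list[list[SphereShape]], cloud: list[Point],
                         margin: float = 0.01):
    """Return the first candidate gripper pose (list of spheres) that clears the cloud."""
    for spheres in candidates:
        if not in_collision(spheres, cloud, margin):
            return spheres
    return None


cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]     # observed obstacle points (m)
gripper_a = [((0.05, 0.0, 0.0), 0.1)]           # overlaps the first point
gripper_b = [((0.5, 0.5, 0.0), 0.1)]            # well clear of both points
chosen = first_collision_free([gripper_a, gripper_b], cloud)
```

Checking every point against every sphere scales poorly as clouds grow, which is one reason learned or GPU-accelerated collision models are attractive.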

Simulation Benefits to Businesses

Developers, engineers and researchers can quickly experiment with different kinds of robot designs in virtual environments, bypassing time-consuming and expensive physical testing methods.

Testing a robot’s design together with its software in a virtual environment, before the physical machine is built, reduces the risk of quality issues that must be fixed afterward.

This can vastly accelerate the development timeline and drastically cut the cost of building and testing robots and AI models, all while helping ensure safety.

Additionally, robot simulation helps connect robots with business systems, such as inventory databases, so a robot knows where an item is located.

Simulating cobots, or collaborative robots that work alongside humans, promises to reduce injuries and make jobs easier, enabling more efficient delivery of all kinds of products.

And with packages arriving incredibly fast in homes everywhere, what’s not to like?

Learn about NVIDIA Isaac Sim, Jetson Orin, Omniverse Enterprise and Metropolis.

Learn more from this Deep Learning Institute course: Introduction to Robotic Simulations in Isaac Sim
