Thanks to “street views,” modern mapping tools can be used to scope out a restaurant before deciding to go there, better navigate directions by viewing landmarks in the area or simulate the experience of being on the road.

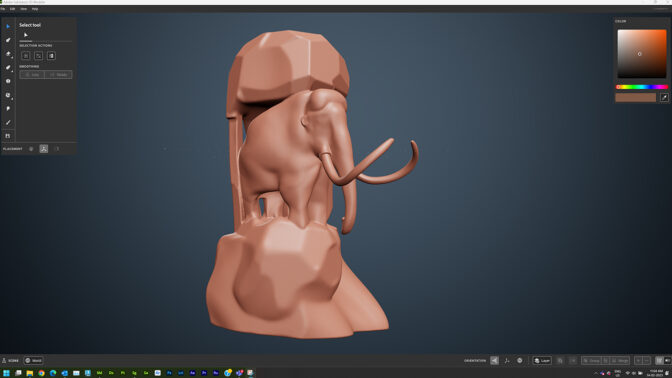

The technique for creating these 3D views is called photogrammetry — the process of capturing images and stitching them together to create a digital model of the physical world.

It’s almost like a jigsaw puzzle, where pieces are collected and then put together to create the bigger picture. In photogrammetry, each puzzle piece is an image. And the more images that are captured and collected, the more realistic and detailed the 3D model will be.

How Photogrammetry Works

Photogrammetry techniques are used across industries, including architecture and archaeology. One early example dates to 1849, when French officer Aimé Laussedat used terrestrial photographs to create his first perspective architectural survey at the Hôtel des Invalides in Paris.

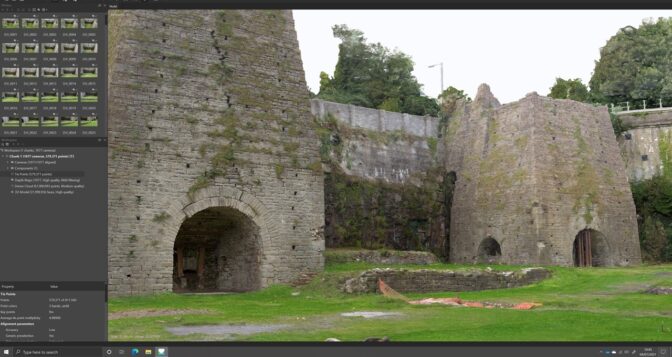

By capturing as many photos of an area or environment as possible, teams can build digital models of a site that they can view and analyze.

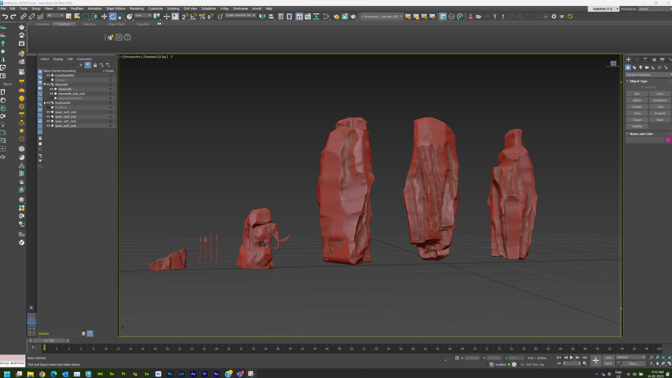

Unlike 3D scanning, which uses lasers or structured light to measure the locations of points in a scene, photogrammetry uses ordinary photographs to capture an object and turn it into a 3D model. This means good photogrammetry requires a good dataset. It’s also important to take photos in the right pattern, so that every area of a site, monument or artifact is covered.
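To make "the right pattern" a little more concrete, here is a minimal sketch for a single straight pass along a flat subject, such as a facade or a strip of ground. It assumes a simple pinhole camera and a target fractional overlap between neighboring photos; the function names, the 60% overlap and the camera numbers are hypothetical illustration values, not recommendations from this article.

```python
import math

def footprint_width_m(distance_m, sensor_width_mm, focal_length_mm):
    # Pinhole model: one photo of a flat surface facing the camera covers
    # distance * sensor_width / focal_length (the mm units cancel).
    return distance_m * sensor_width_mm / focal_length_mm

def capture_centers(span_m, distance_m, sensor_width_mm, focal_length_mm, overlap):
    """Photo centers along a straight pass so neighbors overlap by at least `overlap`."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    footprint = footprint_width_m(distance_m, sensor_width_mm, focal_length_mm)
    if span_m <= footprint:
        return [span_m / 2.0]  # a single photo already covers the whole span
    step = footprint * (1.0 - overlap)  # how far to move between shots
    n = math.ceil((span_m - footprint) / step) + 1
    # Clamp the last center so it does not run past the end of the span;
    # clamping only shrinks the final gap, which increases overlap.
    return [min(footprint / 2.0 + i * step, span_m - footprint / 2.0) for i in range(n)]

# Hypothetical setup: 20 m wall, camera 5 m away, 36 mm sensor, 24 mm lens.
centers = capture_centers(span_m=20.0, distance_m=5.0, sensor_width_mm=36.0,
                          focal_length_mm=24.0, overlap=0.6)
print([round(c, 2) for c in centers])  # [3.75, 6.75, 9.75, 12.75, 15.75, 16.25]
```

Raising the overlap shrinks the step between shots, so coverage gets denser at the cost of more photos to process.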

Types of Photogrammetry Methods

To stitch together a scene today, users take multiple pictures of a subject from varying angles and then run them through specialized software that matches and combines the overlapping data to create a 3D model.
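The geometric step that turns overlapping photos into depth is triangulation: once the same feature has been found in two images taken from known camera positions, its 3D location can be recovered. Below is a minimal sketch of linear (DLT) triangulation, assuming the camera poses and the matched pixel coordinates are already known. Real pipelines must estimate both from the photos themselves, and the camera setup and function names here are hypothetical.

```python
import numpy as np

def projection_matrix(focal_px, R, t):
    # Simplified intrinsics: square pixels, principal point at the origin.
    K = np.array([[focal_px, 0.0, 0.0],
                  [0.0, focal_px, 0.0],
                  [0.0, 0.0, 1.0]])
    return K @ np.hstack([R, t.reshape(3, 1)])

def project(P, X):
    # Map a 3D point to pixel coordinates (u, v) with homogeneous division.
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(P1, P2, uv1, uv2):
    # Each view contributes two rows, u * P[2] - P[0] and v * P[2] - P[1];
    # the 3D point is the (least-squares) null vector of the stacked system.
    A = np.vstack([
        uv1[0] * P1[2] - P1[0],
        uv1[1] * P1[2] - P1[1],
        uv2[0] * P2[2] - P2[0],
        uv2[1] * P2[2] - P2[1],
    ])
    _, _, Vt = np.linalg.svd(A)
    Xh = Vt[-1]
    return Xh[:3] / Xh[3]

# Two hypothetical cameras 1 m apart, both looking down the +Z axis.
P1 = projection_matrix(1000.0, np.eye(3), np.zeros(3))
P2 = projection_matrix(1000.0, np.eye(3), np.array([-1.0, 0.0, 0.0]))

X_true = np.array([0.5, 0.2, 4.0])
uv1, uv2 = project(P1, X_true), project(P2, X_true)  # the "matched" feature
X_est = triangulate(P1, P2, uv1, uv2)
print(np.round(X_est, 6))
```

Repeating this for thousands of matched features across many overlapping images yields the point cloud that a 3D model is built on.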

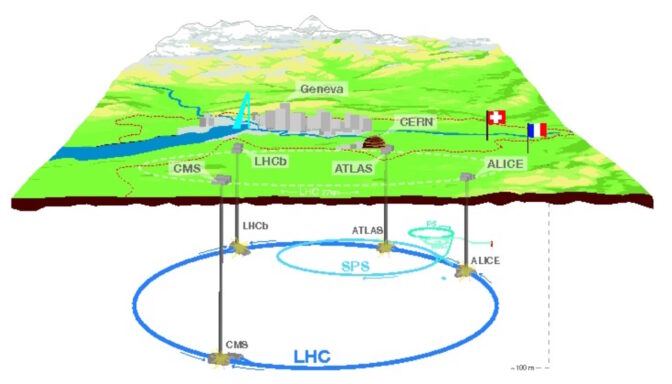

There are two main types of photogrammetry: aerial and terrestrial.

Aerial photogrammetry places the camera in the air, typically on an aircraft or drone, to take photos from above. This approach is generally used on larger sites or in areas that are difficult to access. Aerial photogrammetry is one of the most widely used methods for creating geographic databases in forestry and natural resource management.
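A standard way to reason about detail in aerial surveys is ground sample distance (GSD), the width of ground covered by one image pixel. The sketch below applies the usual formula GSD = (sensor width × flight height) / (focal length × image width). The camera values are hypothetical, chosen only to show how altitude trades off against detail.

```python
def ground_sample_distance_cm(sensor_width_mm, flight_height_m,
                              focal_length_mm, image_width_px):
    # GSD = (sensor width * flight height) / (focal length * image width).
    # sensor_width_mm / focal_length_mm is unitless, so the result is in
    # metres per pixel; multiply by 100 for centimetres per pixel.
    return (sensor_width_mm * flight_height_m * 100.0) / (focal_length_mm * image_width_px)

# Hypothetical drone camera: 13.2 mm wide sensor, 8.8 mm lens, 5472 px wide images.
for height_m in (50, 100, 200):
    gsd = ground_sample_distance_cm(13.2, height_m, 8.8, 5472)
    print(f"{height_m:>4} m altitude -> {gsd:.2f} cm per pixel")
```

With these numbers, GSD comes out to roughly 1.37, 2.74 and 5.48 cm per pixel: doubling the altitude doubles the ground each pixel covers, which is why larger sites flown higher capture less detail per photo.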

Terrestrial photogrammetry, aka close-range photogrammetry, is more object-focused and usually relies on images taken by a camera that’s handheld or on a tripod. It enables speedy onsite data collection and more detailed image captures.
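For close-range work on a single object, a common capture pattern is a ring of photos taken while circling the subject, each aimed at its center. The sketch below generates such a ring; the function name, the 1.5 m radius and the 15-degree maximum step between shots are hypothetical values for illustration only.

```python
import math

def orbit_ring(center, radius_m, height_m, max_step_deg):
    """Camera positions on a circle around an object, each aimed at its center.

    Returns (x, y, z, yaw_deg) tuples; yaw is measured in the horizontal
    plane from the +x axis.
    """
    cx, cy, cz = center
    n = math.ceil(360.0 / max_step_deg)  # actual step never exceeds max_step_deg
    shots = []
    for i in range(n):
        a = math.radians(i * 360.0 / n)
        x = cx + radius_m * math.cos(a)
        y = cy + radius_m * math.sin(a)
        yaw = math.degrees(math.atan2(cy - y, cx - x))  # face the object's center
        shots.append((x, y, cz + height_m, yaw))
    return shots

ring = orbit_ring(center=(0.0, 0.0, 0.0), radius_m=1.5, height_m=0.5, max_step_deg=15.0)
print(len(ring), "shots")
```

In practice, such rings are often repeated at a few different heights so that the top and underside of the object are also covered.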

Accelerating Photogrammetry Workflows With GPUs

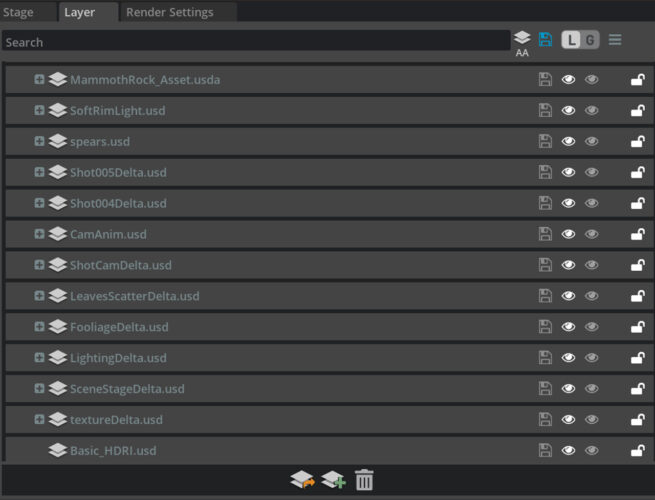

For the most accurate photogrammetry results, teams need a massive, high-fidelity dataset. More photos will result in greater accuracy and precision. However, large datasets can take longer to process, and teams need more computational power to handle the files.
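One reason processing time grows faster than the photo count: if every image is compared against every other image to find overlapping features (exhaustive matching, assumed here for illustration), the number of image pairs grows quadratically. Many tools offer cheaper strategies, such as matching each image only against its neighbors in capture order; the window size of 10 below is a hypothetical value.

```python
def exhaustive_pairs(n_images):
    # Every image compared with every other image: n * (n - 1) / 2 pairs.
    return n_images * (n_images - 1) // 2

def windowed_pairs(n_images, window):
    # Each image compared only with the next `window` images in capture order.
    return sum(min(window, n_images - 1 - i) for i in range(n_images))

for n in (100, 1_000, 10_000):
    print(n, exhaustive_pairs(n), windowed_pairs(n, 10))
```

Ten times more photos means roughly a hundred times more pairs to match exhaustively (4,950 pairs for 100 images versus 499,500 for 1,000), which is where extra compute becomes essential.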

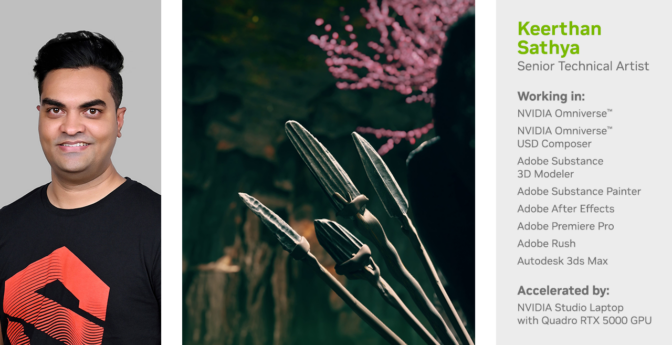

The latest advancements in GPUs help teams address this. Advanced GPUs such as NVIDIA RTX cards let users speed up processing and maintain higher-fidelity models while working with larger datasets.

For example, construction teams often rely on photogrammetry techniques to show progress on construction sites. Some companies capture images of a site to create a virtual walkthrough. But an underpowered system can result in a choppy visual experience, which detracts from a working session with clients or project teams.

With the large memory of RTX professional GPUs, architects, engineers and designers can easily manage massive datasets to create and handle photogrammetry models faster.

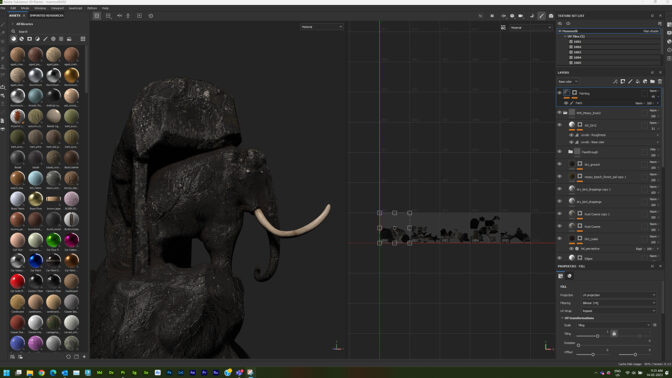

Photogrammetry software uses GPU power to help vectorize photos, which accelerates stitching thousands of images together. And with the real-time rendering and AI capabilities of RTX professional GPUs, teams can accelerate 3D workflows, create photorealistic renderings and keep 3D models up to date.
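The article doesn’t spell out which processing steps are vectorized. One plausible reading, sketched here as an assumption, is data-parallel array processing: the same per-pixel operation (here, grayscale conversion and gradient computation, a typical early step before feature detection) is applied to a whole batch of images as array operations instead of pixel-by-pixel loops. This example runs on the CPU with NumPy; GPU array libraries such as CuPy mirror much of the NumPy API, which is the kind of workload GPUs speed up. The batch below is synthetic.

```python
import numpy as np

def to_grayscale(images):
    # images: (N, H, W, 3) floats in [0, 1]. One weighted sum per pixel
    # (ITU-R BT.601 luma weights), applied to the whole batch at once.
    return images @ np.array([0.299, 0.587, 0.114])

def gradient_magnitude(gray):
    # Finite-difference gradients along rows (axis 1) and columns (axis 2)
    # of every image in the batch, with no Python-level pixel loop.
    gy, gx = np.gradient(gray, axis=(1, 2))
    return np.hypot(gx, gy)

# Hypothetical batch: 8 images of 64x64 pixels, each a left-to-right brightness ramp.
ramp = np.linspace(0.0, 1.0, 64)
images = np.broadcast_to(ramp[None, None, :, None], (8, 64, 64, 3)).astype(float)
edges = gradient_magnitude(to_grayscale(images))
print(edges.shape)  # (8, 64, 64)
```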

History and Future of Photogrammetry

The idea of photogrammetry dates to the late 1400s, nearly four centuries before the invention of photography. Leonardo da Vinci developed the principles of perspective and projective geometry, which are foundational pillars of photogrammetry.

Geometric perspective is a method for depicting a 3D object on a 2D surface, using converging lines and vanishing points to convey depth. On top of this foundation, geometry, shading and lighting are the building blocks of realistic renderings.
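In modern terms, perspective is captured by the pinhole camera model, which divides a point’s horizontal and vertical position by its depth. This is a present-day formulation, not how da Vinci expressed it. The minimal sketch below uses a hypothetical function name and unit focal length to show the two effects that convey depth: distant objects appear smaller, and parallel lines receding from the viewer converge on a vanishing point.

```python
def project_point(X, Y, Z, focal_length=1.0):
    # Pinhole perspective: divide by depth Z, so distant points land
    # closer to the image center.
    if Z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    return focal_length * X / Z, focal_length * Y / Z

# Two 2 m tall posts, one 5 m away and one 10 m away.
_, near_top = project_point(0.0, 2.0, 5.0)
_, far_top = project_point(0.0, 2.0, 10.0)
print(near_top, far_top)  # 0.4 0.2

# Points along a rail running away from the viewer approach (0, 0),
# the vanishing point for lines parallel to the viewing direction.
for Z in (1.0, 10.0, 100.0, 1000.0):
    print(project_point(1.0, -1.0, Z))
```

Photogrammetry essentially runs this projection in reverse, using several photos to undo the loss of depth.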

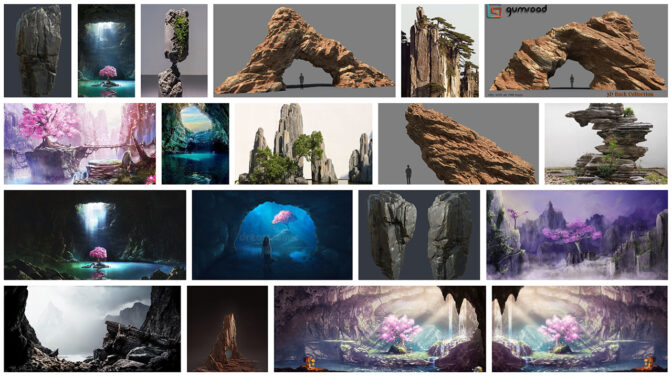

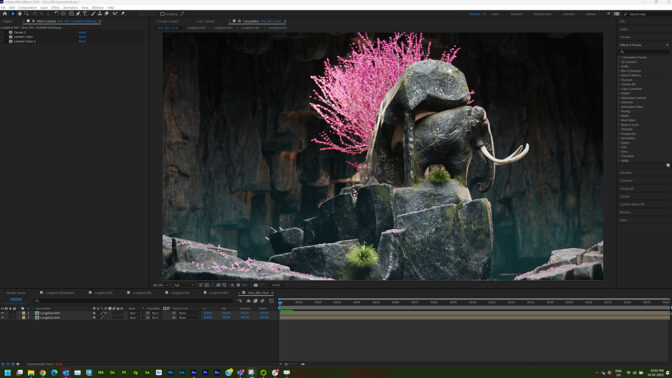

Photogrammetry advancements now allow users to achieve new levels of immersiveness in 3D visualizations. The technique has also paved the way for other groundbreaking tools like reality-capture technology, which collects data on real-world conditions to give users reliable, accurate information about physical objects and environments.

NVIDIA Research is also developing AI techniques that rapidly generate 3D scenes from a small set of images.

Instant NeRF and Neuralangelo, for example, use neural networks to render complete 3D scenes from just a few dozen still photos or 2D video clips. Instant NeRF could be a powerful tool to help preserve and share cultural artifacts through online libraries, museums, virtual-reality experiences and heritage-conservation projects. Many artists are already creating beautiful scenes from different perspectives with Instant NeRF.
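Instant NeRF is built on neural radiance fields (NeRFs). In NeRF-style rendering, a neural network predicts a density σ and a color c at sample points along each camera ray, and the pixel color is their alpha-composited sum: C = Σ T_i (1 − exp(−σ_i δ_i)) c_i, where T_i = exp(−Σ_{j<i} σ_j δ_j) is the light that survives to sample i and δ_i is the spacing between samples. The sketch below covers only that compositing step. The network is left out, the sample values are hypothetical, and it does not attempt to describe Neuralangelo.

```python
import numpy as np

def composite_ray(sigmas, colors, deltas):
    """Alpha-composite samples along one camera ray, front to back.

    sigmas: (N,) densities, colors: (N, 3) RGB, deltas: (N,) sample spacing.
    """
    sigmas, colors, deltas = map(np.asarray, (sigmas, colors, deltas))
    alphas = 1.0 - np.exp(-sigmas * deltas)  # opacity of each ray segment
    # Transmittance T_i: fraction of light reaching sample i unblocked.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alphas)[:-1]])
    weights = trans * alphas
    return weights @ colors, weights

# Hypothetical samples: empty space, then a dense red surface, then blue behind it.
sigmas = [0.0, 50.0, 50.0]
colors = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
deltas = [0.1, 0.1, 0.1]
rgb, w = composite_ray(sigmas, colors, deltas)
print(np.round(rgb, 3))
```

The dense red sample absorbs almost all of the ray’s weight, so the blue sample behind it barely contributes. That is how a radiance field produces occlusion without explicit surfaces.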

Learn More About Photogrammetry

Objects, locations and even industrial digital twins can be rendered volumetrically — in real time — to be shared and preserved, thanks to advances in photogrammetric technology. Photogrammetry applications are expanding across industries and becoming increasingly accessible.

Museums can provide tours of items or sites they otherwise wouldn’t have had room to display. Buyers can use augmented-reality experiences to see how a product might fit in a space before purchasing it. And sports fans can choose seats with the best view.

Learn more about NVIDIA RTX professional GPUs and photogrammetry by joining an upcoming NVIDIA webinar, Getting Started With Photogrammetry for AECO Reality Capture, on Thursday, June 22, at 10 a.m. PT.
