Editor’s note: This post is part of our weekly In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks, and demonstrates how NVIDIA Studio technology improves creative workflows.

An adrenaline-fueled virtual ride in the sky is sure to satisfy all thrill seekers — courtesy of 3D artist Kosei Wano’s sensational animation, Moon Hawk. Wano outlines his creative workflow this week In the NVIDIA Studio.

Plus, join the #GameArtChallenge — running through Sunday, April 30 — by using the hashtag to share video game fan art, character creations and more for a chance to be featured across NVIDIA social media channels.

Welcome to the #GameArtChallenge!

Ever created a video game or some game inspired art like this one from @beastochahin?

Share it with us using #GameArtChallenge from today to the end of April for a chance to be featured on the Studio or @nvidiaomniverse channels!

— NVIDIA Studio (@NVIDIAStudio) March 6, 2023

Original game content can be made with NVIDIA Omniverse — a platform for creating and operating metaverse applications — using the Omniverse Machinima app. This enables users to collaborate in real time when animating characters and environments in virtual worlds.

Who Dares, Wins

Wano often finds inspiration exploring the diversity of flora and fauna. He has a penchant for examining birds and even knows the difference in wing shapes between hawks and martins, he said. This interest in flying entities extends to his fascination with aircraft. For Moon Hawk, Wano took on the challenge of visually evolving a traditional, fuel-based fighter jet into an electric one.

With reference material in hand, Wano opened the 3D app Blender to scale the fighter jet to accurate, real-life sizing, then roughly sketched within the 3D design space, his preferred method to formulate models.

The artist then deployed several tips and tricks to model more efficiently: adding Blender’s automatic detailing modifier, applying neuro-reflex modeling to change the aircraft’s proportions, then dividing the model’s major 3D shapes into sections to edit individually — a step Wano calls “dividing each difficulty.”

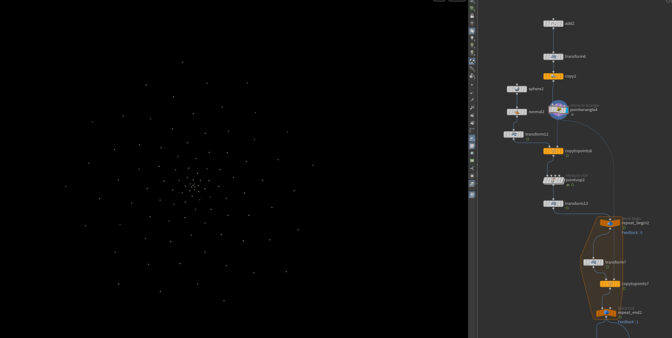

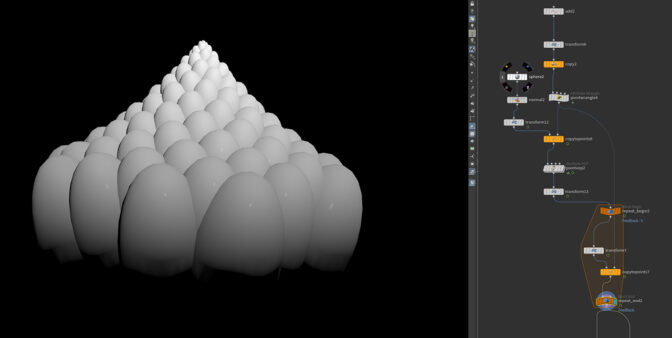

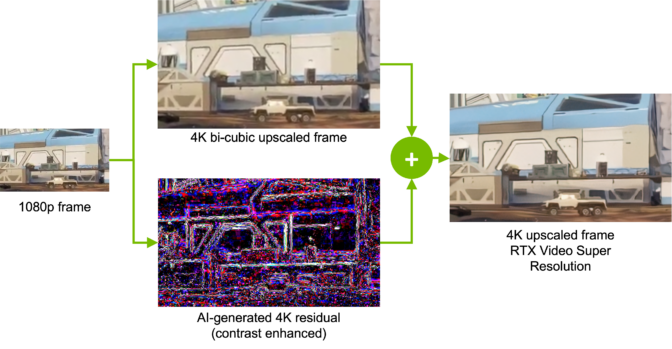

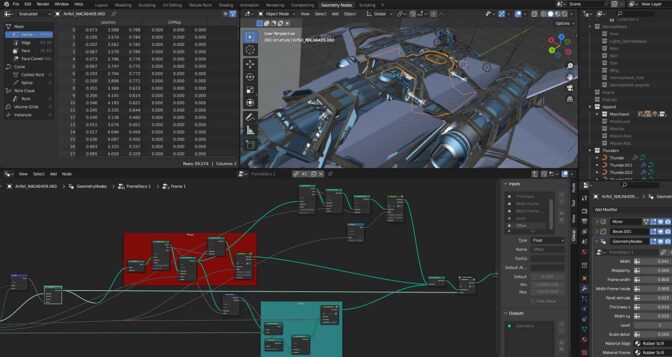

Blender Cycles RTX-accelerated OptiX ray tracing, unlocked by the artist’s GeForce RTX 3080 Ti GPU, enabled interactive, photorealistic modeling in the viewport. “OptiX’s AI-powered denoiser renders lightly, allowing for comfortable trial and error,” said Wano, who then applied sculpting and other details. Next, Wano used geo nodes to add organic style and customization to his Blender scenes and animate his fighter jet.

Blender geo nodes make modeling an almost completely procedural process — allowing for non-linear, non-destructive workflows and the instancing of objects — to create incredibly detailed scenes using small amounts of data.
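To see why instancing keeps scenes light, here is a minimal plain-Python sketch of the idea. It does not use Blender's API, and the `Mesh`, `Instance`, `scatter` and `realize` names are hypothetical stand-ins made up for illustration. The heavy geometry is stored once, and each copy holds only a reference to it plus its own offset and scale.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mesh:
    """Hypothetical stand-in for heavy geometry: a tuple of (x, y, z) vertices."""
    vertices: tuple


@dataclass(frozen=True)
class Instance:
    """A lightweight reference to shared geometry plus a per-copy offset and scale."""
    mesh: Mesh
    offset: tuple
    scale: float = 1.0


def scatter(mesh, count, spacing):
    """Place `count` instances in a row, all pointing at the same Mesh object."""
    return [Instance(mesh, (i * spacing, 0.0, 0.0)) for i in range(count)]


def realize(instance):
    """Expand one instance into world-space vertices, only when they are needed."""
    ox, oy, oz = instance.offset
    s = instance.scale
    return [(x * s + ox, y * s + oy, z * s + oz) for x, y, z in instance.mesh.vertices]


# One 1,000-vertex panel is stored once and referenced 500 times.
panel = Mesh(vertices=tuple((float(i), 0.0, 0.0) for i in range(1000)))
instances = scatter(panel, count=500, spacing=2.0)

stored_vertices = len(panel.vertices)
apparent_vertices = sum(len(inst.mesh.vertices) for inst in instances)
```

In this sketch the scene appears to contain 500 panels, yet only one panel's worth of vertex data is held in memory. Editing `panel` would change every copy at once, which is roughly what makes such a workflow non-destructive.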

For Moon Hawk, Wano applied geo nodes to mix materials not found in nature, creating unique textures for the fighter jet. Being able to make real-time base mesh edits without the concern of destructive workflows gave Wano the freedom to alter his model on the fly with an assist from his GPU. “With the GeForce RTX 3080 Ti, there’s no problem, even with a model as complicated as this,” he said.

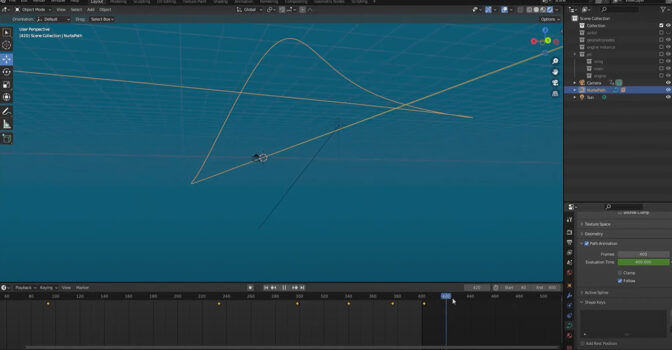

Wano kicked off the animation phase by selecting the speed of the fighter jet and roughly designing its flight pattern.

The artist referenced popular fighter jet scenes in cinema and video games and studied basic rules of physics, such as inertia, to ensure the flight patterns in his animation were realistic. Then, Wano returned to using geo nodes to add 3D lighting effects without the need to simulate or bake. Such lighting modifications helped to make rendering the project simpler in its final stage.
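As a rough illustration of how inertia shapes a believable flight path, the plain-Python sketch below eases an object's velocity toward a target speed instead of snapping to it. This is not Wano's method or anything from Blender. The `simulate_flight` function, the `response` parameter and the 24 frames-per-second step are assumptions chosen for the example.

```python
def simulate_flight(targets, dt=1.0 / 24.0, response=2.0):
    """Return per-frame (position, velocity) pairs for a 1D flight path.

    targets:  desired velocity for each frame.
    dt:       seconds per frame (assumed 24 fps here).
    response: how quickly velocity catches up, in 1/seconds.
              Lower values make the craft feel heavier.
    """
    position, velocity = 0.0, 0.0
    frames = []
    for target in targets:
        # Close a fraction of the gap to the target each frame,
        # so speed changes gradually rather than instantly.
        velocity += (target - velocity) * min(1.0, response * dt)
        position += velocity * dt
        frames.append((position, velocity))
    return frames


# Two seconds of animation with the throttle held at 100 units per second.
frames = simulate_flight([100.0] * 48)
```

With these values the jet accelerates smoothly and is still short of full speed after two seconds, which reads as mass on screen. Raising `response` makes the motion snappier.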

Wano edited parameters with ease, applied particle simulations and manually shook the camera to add more layers of immersion to the scenes.

With the animation complete, Wano added short motion blur. Accelerated motion blur rendering, enabled by his RTX GPU, and the NanoVDB toolset for easy rendering of volumes let him apply this effect quickly. And RTX-accelerated OptiX ray tracing in Blender Cycles delivered the fastest final frame renders.

Wano imported final files into Blackmagic Design’s DaVinci Resolve application, where GPU-accelerated color grading, video editing and color scopes helped the artist complete the animation in record time.

Choosing GeForce RTX was an easy decision for Wano, who said, “NVIDIA products have been trusted by many people for a long time.”

For a deep dive into Wano’s workflow, visit the NVIDIA Studio YouTube channel to browse the playlist Designing and Modeling a Sci-Fi Ship in Blender With Wanoco4D and view each stage: Modeling, Materials, Geometry Nodes and Lightning Effect, Setting Animation and Lights and Rendering.

View more of Wano’s impressive portfolio on ArtStation.

Who Dares With Photogrammetry, Wins Again

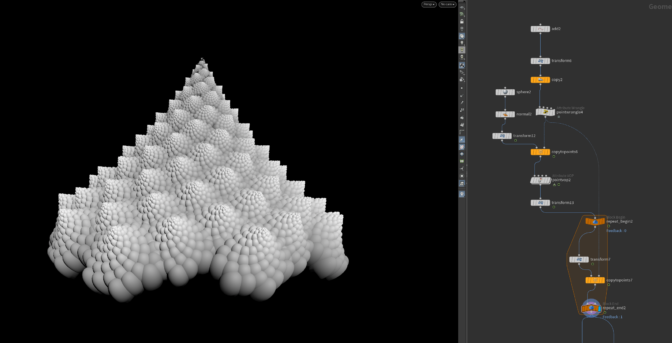

Wano, like most artists, is always growing his craft, refining essential skills and learning new techniques, including photogrammetry — the art and science of extracting 3D information from photographs.

In the NVIDIA Studio artist Anna Natter recently highlighted her passion for photogrammetry, noting that virtually anything can be preserved in 3D and showcasing features that have the potential to save 3D artists countless hours. Wano saw this same potential when experimenting with the technology in Adobe Substance 3D Sampler.

“Photogrammetry can accurately reproduce the complex real world,” said Wano, who would encourage other artists to think big in terms of both individual objects and environments. “You can design an entire realistic space by placing it in a 3D virtual world.”

Try out photogrammetry and post your creations with the #StudioShare hashtag for a chance to be featured across NVIDIA Studio’s social media channels.

Follow NVIDIA Studio on Instagram, Twitter and Facebook. Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the Studio newsletter.
