Editor’s note: This post is part of our weekly In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks, and demonstrates how NVIDIA Studio technology improves creative workflows. We’re also deep diving on new GeForce RTX 40 Series GPU features, technologies and resources, and how they dramatically accelerate content creation.

The first NVIDIA Studio laptops powered by GeForce RTX 40 Series Laptop GPUs are now available, starting with systems from MSI and Razer — with many more to come.

Featuring GeForce RTX 4090 and 4080 Laptop GPUs, the new Studio laptops use the NVIDIA Ada Lovelace architecture and fifth-generation Max-Q technologies for maximum performance and efficiency. They’re fueled by powerful NVIDIA RTX technology like DLSS 3, which routinely increases frame rates by 2x or more.

Backed by the NVIDIA Studio platform, these laptops give creators exclusive access to tools and apps — including NVIDIA Omniverse, Canvas and Broadcast — and deliver breathtaking visuals with full ray tracing and time-saving AI features.

They come preinstalled with regularly updated NVIDIA Studio Drivers. This month’s driver is available for download starting today.

And when creating turns to gaming, the laptops enable playing at previously impossible levels of detail and speed.

Plus, In the NVIDIA Studio this week highlights the making of The Artists’ Metaverse, a video showcasing the journey of 3D collaboration between seven creators, across several time zones, using multiple creative apps simultaneously — all powered by NVIDIA Omniverse.

The Future of Content Creation, Anywhere

NVIDIA Studio laptops, powered by new GeForce RTX 40 Series Laptop GPUs, deliver the largest-ever generational leap in portable performance and are the world’s fastest laptops for creating and gaming.

These creative powerhouses run up to 3x more efficiently than the previous generation, enabling users to power through creative workloads in a fraction of the time, all using thin, light laptops — with 14-inch designs coming soon for the first time.

MSI’s Stealth 17 Studio comes with up to a GeForce RTX 4090 Laptop GPU and an optional 17-inch, mini-LED, 4K, 144Hz, 1,000-nit, DisplayHDR 1000 display — perfect for creators of all types. It’s available in various configurations at Amazon, Best Buy, B&H and Newegg.

Razer is upgrading its Blade laptops with up to a GeForce RTX 4090 Laptop GPU. Available with a 16- or 18-inch HDR-capable, dual-mode, mini-LED display, they feature a Creator mode that enables sharp, ultra-high-definition+ native resolution at 120Hz. They’re available at Razer, Amazon, Best Buy, B&H and Newegg.

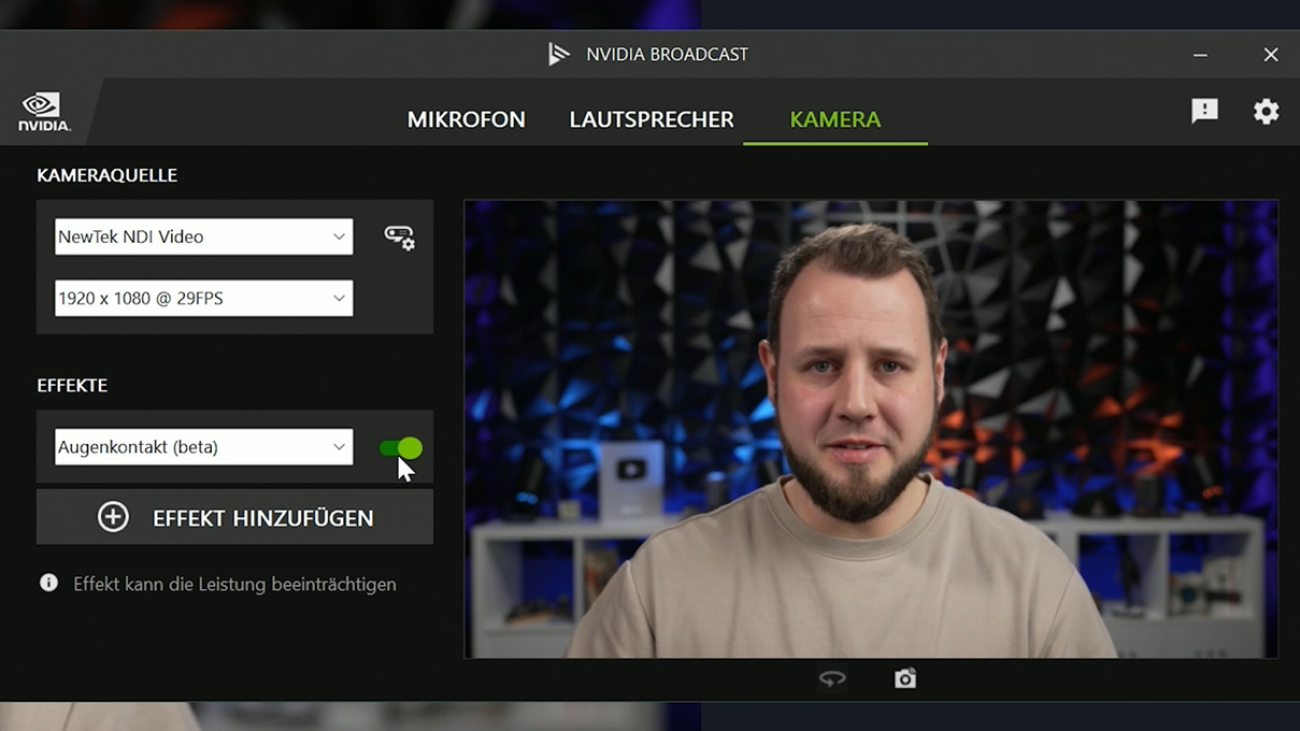

The MSI Stealth 17 Studio and Razer Blade 16 and 18 come preinstalled with NVIDIA Broadcast. The app’s recent update to version 1.4 added an Eye Contact feature, ideal for content creators who want to record themselves while reading notes or avoid having to stare directly at the camera. The feature also lets video conference presenters appear as if they’re looking at their audience, improving engagement.

Designed for gamers, new units from ASUS, GIGABYTE and Lenovo are also available today and deliver great performance in creator applications with access to NVIDIA Studio benefits.

Groundbreaking Performance

The new Studio laptops have been put through rigorous testing, and many reviewers are detailing the new levels of performance and AI-powered creativity that GeForce RTX 4090 and 4080 Laptop GPUs make possible. Here’s what some are saying:

“NVIDIA’s GeForce RTX 4090 pushes laptops to blistering new frontiers: Yes, it’s fast, but also much more.” — PC World

“GeForce RTX 4090 Laptops can also find the favor of content creators thanks to NVIDIA Studio as well as AV1 support and the double NVENC encoder.” — HDBLOG.IT

“With its GeForce RTX 4090… and bright, beautiful dual-mode display, the Razer Blade 16 can rip through games with aplomb, while being equally adept at taxing, content creations workloads.” — Hot Hardware

“The Nvidia GeForce RTX 4090 mobile GPU is a step up in performance, as we’d expect from the hottest graphics chip.” — PC Magazine

“Another important point – particularly in the laptop domain – is the presence of enhanced AV1 support and dual hardware encoders. That’s really useful for streamers or video editors using a machine like this.” – KitGuru

Pick up the latest Studio systems or configure a custom system today.

Revisiting ‘The Artists’ Metaverse’

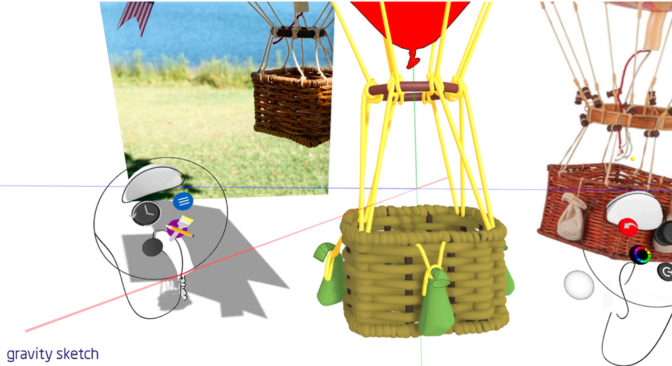

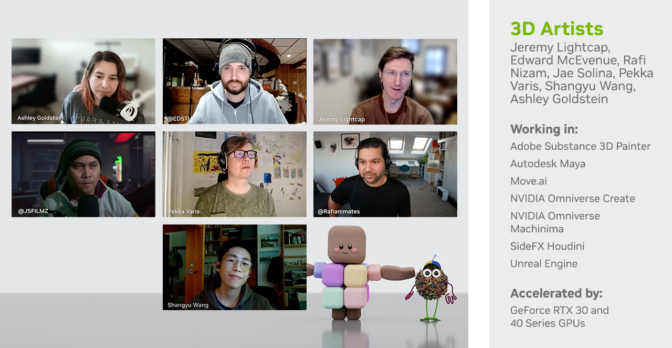

Seven talented artists join us In the NVIDIA Studio this week to discuss building The Artists’ Metaverse — a spotlight demo from last month’s CES. The group reflected on how easy it was to collaborate in real time from different parts of the world.

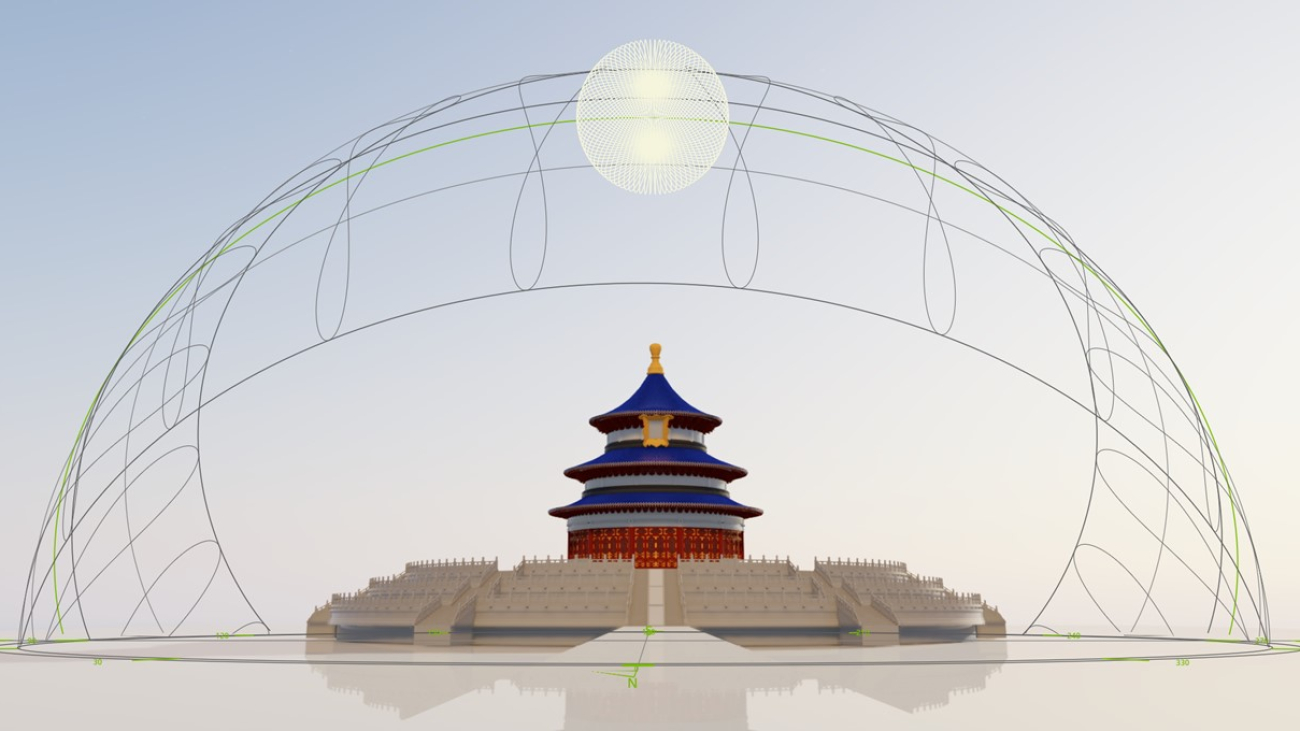

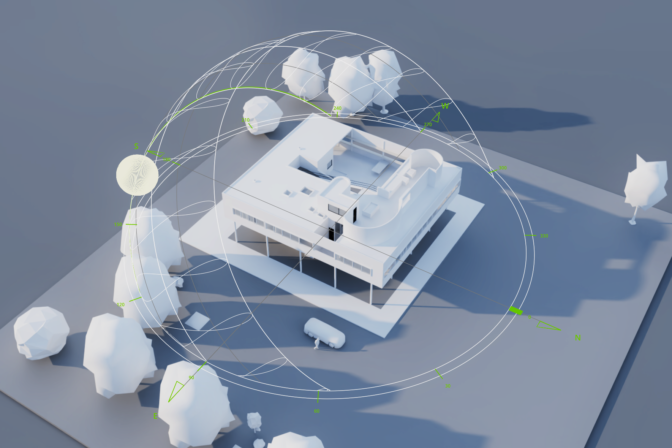

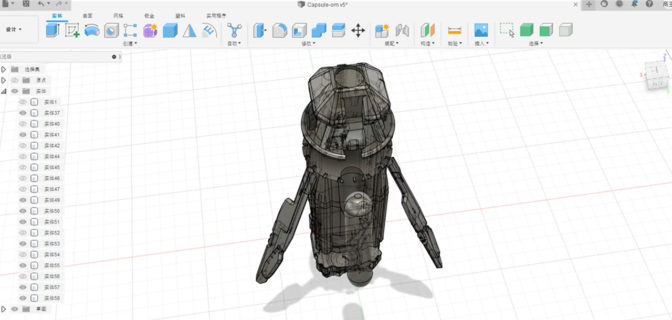

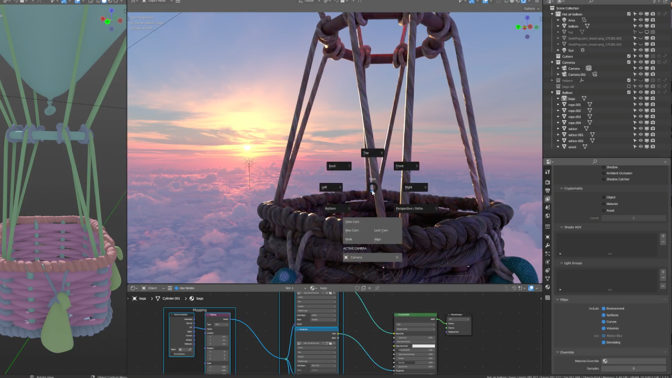

It started in NVIDIA Omniverse, a hub for interconnecting 3D workflows that replaces linear pipelines with live-sync creation. The artists connected to the platform via Omniverse Cloud.

“Setting up the Omniverse Cloud collaboration demo was a super easy process,” said award-winning 3D creator Rafi Nizam. “It was cool to see avatars appearing as people popped in, and the user interface makes it really clear when you’re working in a live state.”

Filmmaker Jae Solina, aka JSFILMZ, animated characters in Omniverse using Xsens and Unreal Engine.

“Prior to Omniverse, creating animations was such a hassle, let alone getting photorealistic animations,” Solina said. “Instead of having to reformat and upload files individually, everything is done in Omniverse in real time, leading to serious time saved.”

Jeremy Lightcap reflected on the incredible visual quality of the virtual scene, highlighting the seamless movement within the viewport.

“We had three Houdini simulations, a volume database file storm cloud, three different characters with motion capture and a very dense Western town set with about 100 materials,” Lightcap said. “I’m not sure how many other programs could handle that and still give you instant, path-traced lighting results.”

For Ashley Goldstein, an NVIDIA 3D artist and tutorialist, the demo highlighted the versatility of Omniverse. “I could update the scene and save it as a new USD layer, so when someone else opened it up, they had all of my updates immediately,” she said. “Or, if they were working on the scene at the same time, they’d be instantly notified of the updates and could fetch new content.”

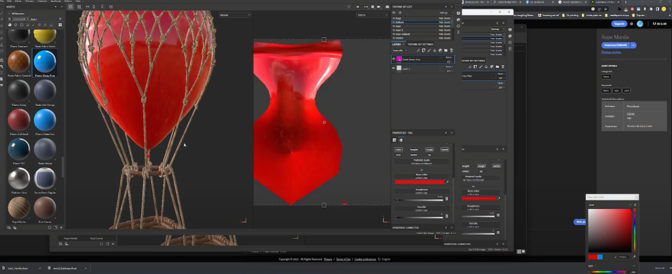

Edward McEvenue, aka edstudios, reflected on the immense value Omniverse on RTX hardware provides, displaying fully ray-traced graphics with instant feedback. “3D production is a very iterative process, where you have to make hundreds if not thousands of small decisions along the way before finalizing a scene,” he said. “Using GPU acceleration with RTX path tracing in the viewport makes that process so much easier, as you get near-instant feedback on the changes you’re making, with all of the full-quality lighting, shadows, reflections, materials and post-production effects directly in the working viewport.”

3D artist Shangyu Wang noted Omniverse is his preferred 3D collaborative content-creation platform. “Autodesk’s Unreal Live Link for Maya gave me a ray-traced, photorealistic preview of the scene in real time, no waiting to see the final render result,” he said.

Fellow 3D artist Pekka Varis mentioned Omniverse’s positive trajectory. “New features are coming in faster than I can keep up!” he said. “It can become the main standard of the metaverse.”

Download Omniverse today, free for all NVIDIA and GeForce RTX GPU owners — including those with new GeForce RTX 40 Series laptops.

Follow NVIDIA Studio on Instagram, Twitter and Facebook. Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the Studio newsletter. Learn more about Omniverse on Instagram, Medium, Twitter and YouTube for additional resources and inspiration. Check out the Omniverse forums, and join our Discord server and Twitch channel to chat with the community.
