Demand for intelligent robots is growing as more industries embrace automation to address supply chain challenges and labor force shortages.

The installed base of industrial and commercial robots will grow more than 6.4x — from 3.1 million in 2020 to 20 million in 2030, according to ABI Research. Developing, validating and deploying these new AI-based robots requires simulation technology that places them in realistic scenarios.

At CES, NVIDIA announced major updates to Isaac Sim, its robotics simulation tool for building and testing virtual robots in realistic environments across varied operating conditions. Now accessible from the cloud, Isaac Sim is built on NVIDIA Omniverse, a platform for creating and operating metaverse applications.

Powerful AI-Driven Capabilities for Roboticists

With humans increasingly working side by side with collaborative robots (cobots) and autonomous mobile robots (AMRs), it's critical that simulations include people and their common behaviors.

Isaac Sim's new people simulation capability lets developers add human characters to a warehouse or manufacturing facility and task them with familiar behaviors, like stacking packages or pushing carts. Many of the most common behaviors are already supported, so simulating them is as simple as issuing a command.

To minimize the gap between results observed in simulation and those seen in the real world, physically accurate sensor models are essential.

Using NVIDIA RTX technology, Isaac Sim can now render physically accurate sensor data in real time. For an RTX-simulated lidar, for example, ray tracing produces more accurate sensor data under varied lighting conditions and in the presence of reflective materials.

Isaac Sim also provides numerous new simulation-ready 3D assets, which are critical to building physically accurate simulated environments. Everything from warehouse parts to popular robots comes ready to go, so developers and users can quickly start building.

Significant new capabilities for robotics researchers include advances in Isaac Gym for reinforcement learning and Isaac Cortex for collaborative robot programming. Additionally, a new tool, Isaac ORBIT, provides simulation operating environments and benchmarks for robot learning and motion planning.

For the large community of Robot Operating System (ROS) developers, Isaac Sim now supports ROS 2 Humble and Windows. All of the Isaac ROS software can now be used in simulation.

Expanding Isaac Platform Capabilities and Ecosystem Drives Adoption

The large and complex robotics ecosystem spans multiple industries, from logistics and manufacturing to retail, energy, sustainable farming and more.

The end-to-end Isaac robotics platform provides advanced AI and simulation software as well as accelerated compute capabilities to the robotics ecosystem. More than a million developers and more than a thousand companies rely on one or more parts of it, including many companies that have deployed physical robots developed and tested in the virtual world using Isaac Sim.

Telexistence has deployed beverage-restocking robots across 300 convenience stores in Japan. To improve safety, Deutsche Bahn is training AI models to handle important but unexpected corner cases that rarely happen in the real world, like luggage falling onto a train track. Sarcos Robotics is developing robots to pick and place solar panels in renewable energy installations.

Festo uses Isaac Cortex to simplify programming for cobots and transfer simulated skills to the physical robots. Fraunhofer is developing advanced AMRs using the physically accurate and full-fidelity visualization features of Isaac Sim. Flexiv is using Isaac Replicator for synthetic data generation to train AI models.

Beyond training robots, simulation plays a critical role in training the human operators who work with and program them. Ready Robotics is teaching programming of industrial robots with Isaac Sim. Universal Robotics is using Isaac Sim for workforce development to train end operators from the cloud.

Cloud Access Puts Isaac Platform Within Reach Everywhere

With Isaac Sim available in the cloud, global, multidisciplinary teams working on robotics projects can collaborate with increased accessibility, agility and scalability for testing and training virtual robots.

A lack of adequate training data often hinders deployment when building new facilities with robotics systems or scaling existing autonomous systems. Isaac Sim taps into Isaac Replicator to enable developers to create massive ground-truth datasets that mimic the physics of real-world environments.

Once robots are deployed, operating an efficient fleet of hundreds of them requires dynamic route planning as automation requirements scale. NVIDIA cuOpt, a real-time fleet task-assignment and route-planning engine, improves operational efficiency in automated operations.

Get Started on Isaac Sim

Watch NVIDIA’s special address at CES, where its executives unveiled products, partnerships and offerings in autonomous machines, robotics, design, simulation and more.

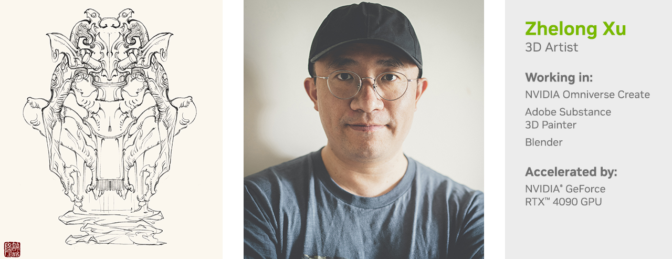
