Smart hospitals, which use data and AI insights to support decision-making at each stage of the patient experience, can give medical professionals insights that enable better and faster care.

A smart hospital uses data and technology to accelerate and enhance the work healthcare professionals and hospital management are already doing, such as tracking hospital bed occupancy, monitoring patients’ vital signs and analyzing radiology scans.

What’s the Difference Between a Smart Hospital and a Traditional Hospital?

Hospitals continuously generate and collect data, much of which is now digitized. This creates an opportunity to apply technologies such as data analytics and AI to extract better insights.

Data that was once stored as a paper file with a patient’s medical history, lab results and immunization information is now stored as electronic health records, or EHRs. Digital CT and MRI scanners, as well as software including the PACS medical imaging storage system, are replacing analog radiology tools. And connected sensors in hospital rooms and operating theaters can record multiple continuous streams of data for real-time and retrospective analysis.

As hospitals transition to these digital tools, they’re poised to make the shift from a regular hospital to a smart hospital — one that not only collects data, but also analyzes it to provide valuable, timely insights.

Natural language processing models can rapidly pull insights from complex pathology reports to support cancer care. Data science can monitor emergency room wait times to resolve bottlenecks. AI-enabled robotics can assist surgeons in the operating room. And video analytics can detect when hand sanitizer supplies are running low or a patient needs attention — such as detecting the risk of falls in the hospital or at home.

What Are Some Benefits of a Smart Hospital?

Smart hospital technology benefits healthcare systems, medical professionals and patients in the following ways:

- Healthcare providers: Smart hospital data can help healthcare facilities optimize their limited resources, increasing operational efficiency and supporting a more patient-centric approach. Sensors can monitor patients when they’re alone in the room. AI algorithms can help determine which patients should be prioritized based on the severity of their condition. And telehealth solutions can help deliver care to patients outside of in-person hospital visits.

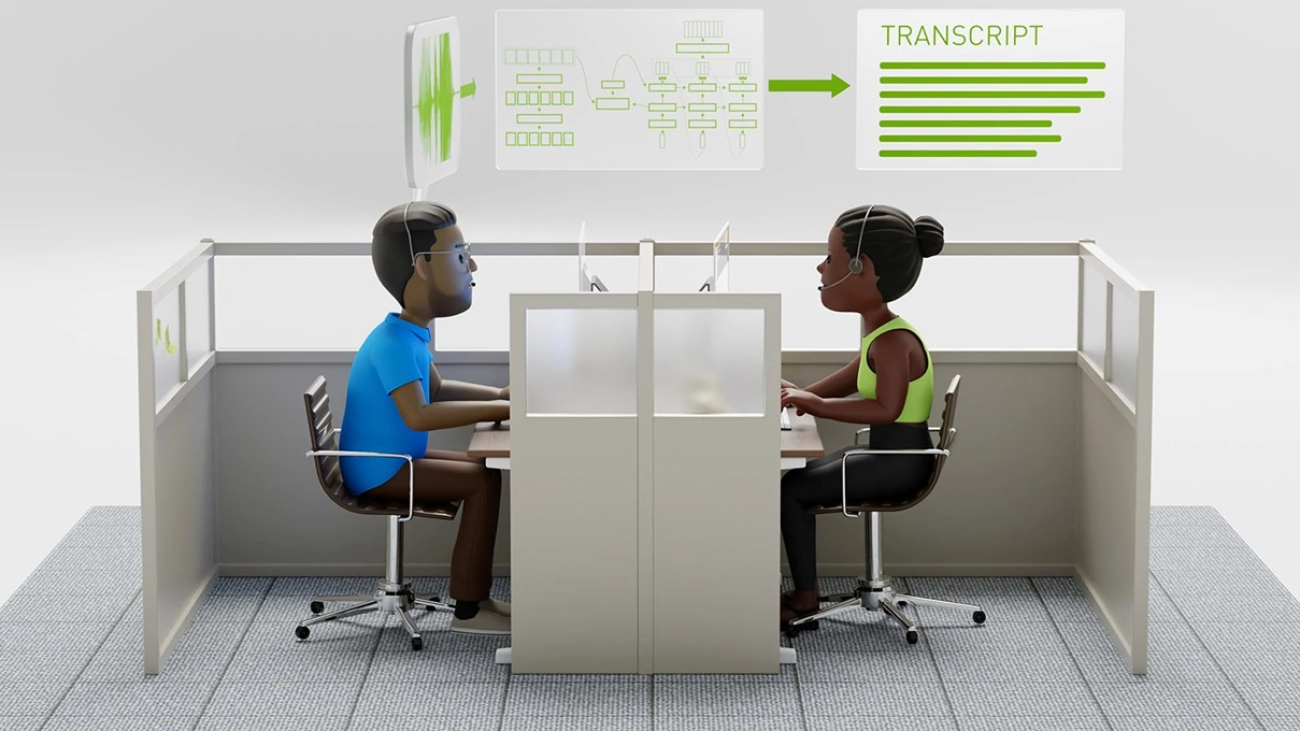

- Clinicians: Smart hospital tools can take over routine or laborious tasks, such as writing notes about each patient interaction, segmenting anatomical structures in an MRI or converting doctors’ notes into medical codes for insurance billing, so that doctors, nurses, medical imaging technicians and other healthcare experts can spend more time on patient care. They can also aid clinical decision-making with AI algorithms that provide a second opinion or triage recommendation for individual patients based on historical data.

- Patients: Smart hospital technology can bring health services closer to the goal of consistent, high-quality patient care — anywhere in the world, from any doctor. Clinicians vary in skill level, areas of expertise, access to resources and time available per patient. By deploying AI and robotics to monitor patterns and automate time-consuming tasks, smart hospitals can allow clinicians to focus on interacting with their patients for a better experience.

How Can I Make My Hospital Smart?

Running a smart hospital requires an entire ecosystem of hardware and software solutions working in harmony with clinician workflows. To accelerate and improve patient care, every application, device, sensor and AI model in the system must share data and insights across the institution.

Think of the smart hospital as an octopus. Its head is the organization’s secure server that stores and processes the entire facility’s data. Each of its tentacles is a different department — emergency room, ICU, operating room, radiology lab — covered in sensors (octopus suckers) that take in data from their surroundings.

If each tentacle operated in a silo, the octopus could not take rapid action across its entire body based on information sensed by a single arm. Instead, every tentacle sends data back to the octopus’s central brain, enabling the creature to respond flexibly to its changing environment.

In the same way, the smart hospital follows a hub-and-spoke model, with sensors distributed across the facility that send critical insights back to a central brain to help inform facility-wide decisions. For instance, if camera feeds in an operating room show that a surgical procedure is almost complete, AI could alert staff in the recovery room to prepare for the patient’s arrival.
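The hub-and-spoke pattern can be sketched as a minimal in-process publish/subscribe hub. This is an illustration under stated assumptions, not how NVIDIA Clara or any hospital system is implemented: the `HospitalHub` and `Event` classes, the sensor ID `or-3-camera` and the event kind `procedure_nearly_complete` are all hypothetical. Spokes (sensors or AI models) publish events to the hub, and departments subscribe to the event kinds they care about.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Event:
    source: str                                  # hypothetical sensor or model ID
    kind: str                                    # hypothetical event type
    payload: dict = field(default_factory=dict)  # event details


class HospitalHub:
    """Central 'brain': spokes publish events, departments subscribe by event kind."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, kind: str, handler: Callable[[Event], None]) -> None:
        # Register a department's handler for one kind of event.
        self._subscribers[kind].append(handler)

    def publish(self, event: Event) -> int:
        # Deliver the event to every subscriber of its kind; return how many were notified.
        handlers = self._subscribers.get(event.kind, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


if __name__ == "__main__":
    hub = HospitalHub()
    # The recovery room listens for hypothetical "procedure nearly complete" events.
    hub.subscribe(
        "procedure_nearly_complete",
        lambda e: print(f"Recovery room: prepare for patient {e.payload['patient_id']}"),
    )
    hub.publish(Event("or-3-camera", "procedure_nearly_complete", {"patient_id": "P-001"}))
```

A production deployment would likely use a durable message broker rather than in-memory callbacks, but the routing idea is the same: the operating room camera does not need to know which departments are listening.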

To power smart hospital solutions, medical device companies, academic medical centers and startups are turning to NVIDIA Clara, an end-to-end AI platform that integrates with the entire hospital network, from medical devices running real-time applications to secure servers that store and process data over the long term. It spans edge, data center and cloud infrastructure and is backed by numerous software libraries and a global partner ecosystem to power the coming generation of smart hospitals.

Smart Hospital Operations and Patient Monitoring

A bustling hospital has innumerable moving parts — patients, staff, medicine and equipment — presenting an opportunity for AI automation to optimize operations around the facility.

While a doctor or nurse can’t be at a patient’s side at every moment of their hospital stay, a combination of intelligent video analytics and other smart sensors can closely monitor patients, alerting healthcare providers when the person is in distress and needs attention.

In an ICU, for instance, patients are connected to monitoring devices that continuously collect vital signs. Many of these devices beep frequently with various alerts, which can cause healthcare practitioners to overlook an alarm from a single sensor.

By instead aggregating the streaming data from multiple devices into a single feed, AI algorithms can analyze the data in real time, helping clinicians detect sooner when a patient’s condition takes a sudden turn for the better or worse.
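To illustrate why a single aggregated feed helps, here is a minimal sketch that merges time-ordered readings from several devices with `heapq.merge` and raises one combined alert only when two or more vital signs are out of range at the same time. The metric names and `NORMAL_RANGES` values are hypothetical placeholders chosen for illustration, not clinical thresholds, and a simple rule stands in for the AI models described above.

```python
import heapq
from typing import Iterable, Iterator

Reading = tuple[float, str, float]  # (timestamp in seconds, metric name, value)

# Hypothetical normal ranges for illustration only; NOT clinical guidance.
NORMAL_RANGES = {
    "heart_rate": (50.0, 110.0),
    "spo2": (92.0, 100.0),
    "resp_rate": (10.0, 24.0),
}


def merged_feed(*streams: Iterable[Reading]) -> Iterator[Reading]:
    """Merge per-device streams, each already sorted by time, into one time-ordered feed."""
    return heapq.merge(*streams, key=lambda reading: reading[0])


def combined_alerts(feed: Iterable[Reading], min_abnormal: int = 2) -> list[tuple[float, list[str]]]:
    """Emit one alert when at least `min_abnormal` metrics are out of range at once."""
    latest: dict[str, float] = {}
    alerts: list[tuple[float, list[str]]] = []
    last_alerted: list[str] = []
    for timestamp, metric, value in feed:
        latest[metric] = value  # keep only the most recent value per metric
        abnormal = sorted(
            name for name, v in latest.items()
            if name in NORMAL_RANGES
            and not (NORMAL_RANGES[name][0] <= v <= NORMAL_RANGES[name][1])
        )
        if len(abnormal) < min_abnormal:
            last_alerted = []  # condition cleared (or never met): re-arm the alert
        elif abnormal != last_alerted:
            alerts.append((timestamp, abnormal))  # new combined condition
            last_alerted = abnormal
    return alerts
```

For example, with a heart-rate stream that rises above its placeholder range at 10 seconds and an oximeter stream whose SpO2 falls below its range at 15 seconds, the elevated heart rate alone triggers nothing; `combined_alerts` produces a single alert at 15 seconds listing both metrics, and does not repeat it while the same condition persists.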

The Houston Methodist Institute for Academic Medicine is working with Mark III Systems, an Elite member of the NVIDIA Partner Network, to deploy an AI-based tool called DeepStroke that can detect stroke symptoms in triage more accurately and earlier based on a patient’s speech and facial movements. By integrating these AI models into the emergency room workflow, the hospital can more quickly identify the proper treatment for stroke patients, helping ensure clinicians don’t miss patients who would potentially benefit from life-saving treatments.

Using enterprise-grade solutions from Dell and NVIDIA — including GPU-accelerated Dell PowerEdge servers, the NVIDIA Fleet Command hybrid cloud system and the DeepStream software development kit for AI streaming analytics — Inception startup Artisight manages a smart hospital network including over 2,000 cameras and microphones at Northwestern Medicine.

One of Artisight’s models alerts nurses and physicians to patients at risk of harm. Another system, based on indoor positioning system data, automates clinic workflows to maximize staff productivity and improve patient satisfaction. A third detects preoperative, intraoperative and postoperative events to coordinate surgical throughput.

These systems make it easy to add functionality regardless of location: an AI-backed sensor network that monitors hospital rooms to prevent patient falls can also detect when hospital supplies are running low or when an operating room needs to be cleaned. The systems even extend beyond the hospital walls, using Artisight’s integrated teleconsult tools to monitor at-risk patients at home.

A final key element of healthcare operations is medical coding, the process of turning a clinician’s notes into a set of alphanumeric codes representing every diagnosis and procedure. These codes are of particular significance in the U.S., where they form the basis for the bills that doctors, clinics and hospitals submit to stakeholders including insurance providers and patients.

Inception startup Fathom has developed AI models to automate the painstaking process of medical coding, reducing costs while increasing speed and precision. Founded in 2016, the company works with the nation’s largest health systems, billing companies and physician groups, coding over 20 million patient encounters annually.
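As a rough illustration of what turning notes into codes means, the sketch below maps a few phrases to real ICD-10-CM diagnosis codes with a keyword lookup and a crude negation check. This is a deliberately simple rule-based stand-in, not Fathom’s AI approach; the phrase table and negation words are illustrative assumptions, and a lookup like this is nowhere near sufficient for real billing.

```python
import re

# Toy phrase-to-code table using a few real ICD-10-CM diagnosis codes.
# Illustrative only: real coding follows official guidelines and full clinical context.
PHRASE_TO_ICD10 = {
    "type 2 diabetes": "E11.9",  # Type 2 diabetes mellitus without complications
    "hypertension": "I10",       # Essential (primary) hypertension
    "pneumonia": "J18.9",        # Pneumonia, unspecified organism
}

# Crude negation cue (an assumption): the phrase is immediately preceded by one of these words.
NEGATION_BEFORE = re.compile(r"\b(?:no|denies|negative for)\s+$")


def suggest_codes(note: str) -> list[str]:
    """Return codes for phrases mentioned affirmatively in the note, in table order."""
    text = note.lower()
    codes = []
    for phrase, code in PHRASE_TO_ICD10.items():
        for match in re.finditer(r"\b" + re.escape(phrase) + r"\b", text):
            if NEGATION_BEFORE.search(text[:match.start()]):
                continue  # e.g. "negative for pneumonia" should not be coded
            codes.append(code)
            break  # one affirmative mention is enough for this phrase
    return codes
```

The negation check hints at why the task is hard: a phrase such as "negative for pneumonia" must not produce a code, and real notes contain far more varied wording than any hand-written rule list can cover, which is part of the motivation for learned models.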

Medical Imaging in Smart Hospitals

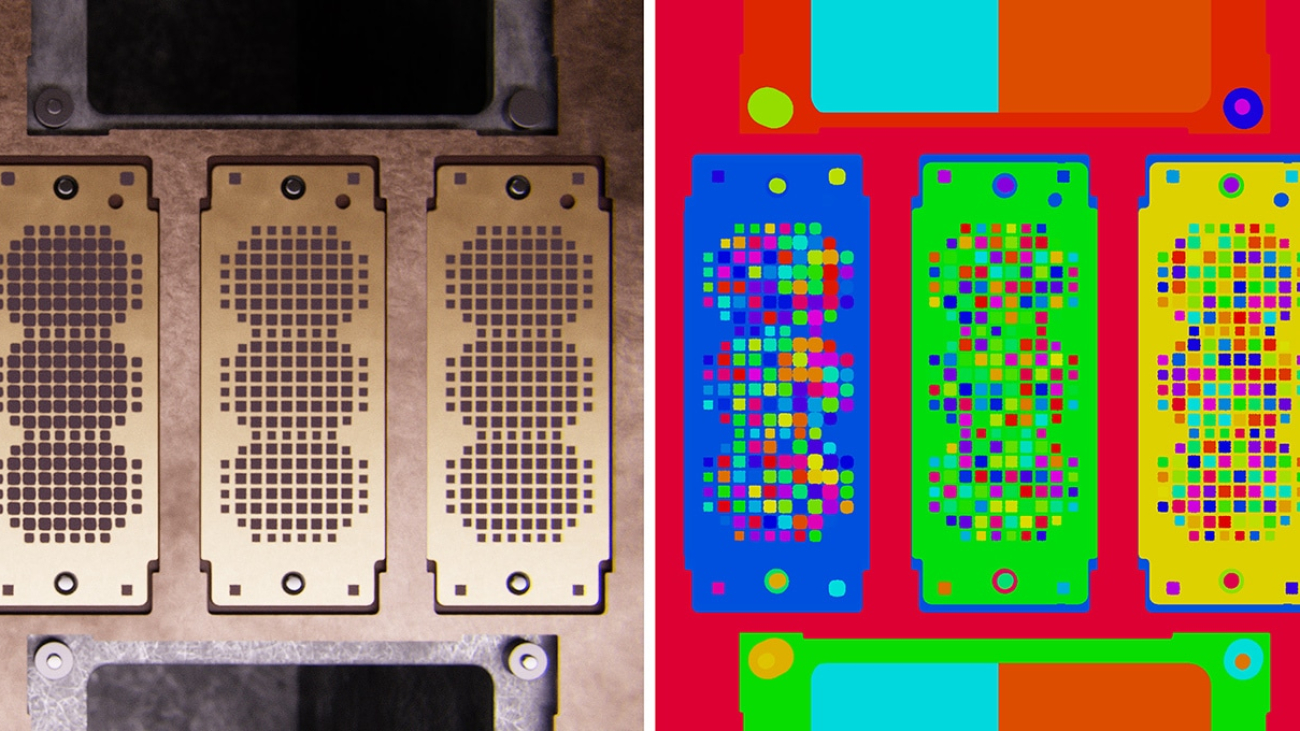

Deep learning first gained popularity as a tool for identifying objects in images, and image analysis was also one of its earliest uses in healthcare. Dozens of AI models have received regulatory approval in the medical imaging space, helping radiology departments in smart hospitals accelerate the analysis of CT, MRI and X-ray data.

AI can pre-screen scans and flag areas that require a radiologist’s attention, saving time and giving radiologists more bandwidth to review additional scans or explain results to patients. It can move critical cases like brain bleeds to the top of a radiologist’s worklist, shortening the time to diagnose and treat life-threatening conditions. And it can enhance the resolution of radiology images, allowing clinicians to reduce the dose needed per patient.
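Moving AI-flagged studies to the top of a worklist maps naturally onto a priority queue. In this minimal sketch, studies that an upstream AI model has flagged are read first (the flag labels `critical` and `needs_review` and their priority levels are hypothetical), while an arrival counter keeps first-in, first-out order among studies with the same priority.

```python
import heapq
import itertools
from typing import Optional

# Lower number = read sooner. Flag labels and levels are hypothetical.
PRIORITY_BY_AI_FLAG = {"critical": 0, "needs_review": 1}
ROUTINE_PRIORITY = 2


class RadiologyWorklist:
    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._arrival = itertools.count()  # tie-breaker: FIFO within a priority level

    def add(self, study_id: str, ai_flag: Optional[str] = None) -> None:
        # Unflagged or unrecognized studies fall back to routine priority.
        priority = PRIORITY_BY_AI_FLAG.get(ai_flag, ROUTINE_PRIORITY)
        heapq.heappush(self._heap, (priority, next(self._arrival), study_id))

    def next_study(self) -> str:
        # Pop the most urgent study; raises IndexError if the worklist is empty.
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

For example, adding an unflagged `CT-101`, a `critical` `CT-102`, a `needs_review` `CT-103` and a `critical` `CT-104`, in that order, returns them as `CT-102`, `CT-104`, `CT-103`, `CT-101`. Using a heap keeps each insertion and removal logarithmic in the worklist size.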

Leading medical imaging companies and researchers are using NVIDIA technology to power next-generation applications that can be used in smart hospital environments.

Siemens Healthineers developed deep learning-based autocontouring solutions, enabling precise contouring of organs at risk in radiation therapy.

And Fujifilm Healthcare uses NVIDIA GPUs to power its Cardio StillShot software, which conducts precise cardiac imaging during a CT scan. To accelerate its work, the team used software including the NVIDIA Optical Flow SDK to estimate pixel-level motion and NVIDIA Nsight Compute to optimize performance.

Startups in NVIDIA Inception, too, are advancing medical imaging workflows with AI, such as Shanghai-based United Imaging Intelligence. The company’s uAI platform empowers devices, doctors and researchers with full-stack, full-spectrum AI applications, covering imaging, screening, follow-up, diagnosis, treatment and evaluation. Its uVision intelligent scanning system runs on the NVIDIA Jetson edge AI platform.

Learn more about startups using NVIDIA AI for medical imaging applications.

Digital and Robotic Surgery in Smart Hospitals

In a smart hospital’s operating room, intelligent video analytics and robotics are embedded to take in data and provide AI-powered alerts and guidance to surgeons.

Medical device developers and startups are working on tools to advance surgical training, help surgeons plan procedures ahead of time, provide real-time support and monitoring during an operation, and aid in post-surgery recordkeeping and retrospective analysis.

Paris-based robotic surgery company Moon Surgical is designing Maestro, an accessible, adaptive surgical-assistant robotics system that works with the equipment and workflows that operating rooms already have in place. The startup has adopted NVIDIA Clara Holoscan to save time and resources, helping compress its development timeline.

Activ Surgical has selected Holoscan to accelerate development of its AI and augmented-reality solution for real-time surgical guidance. The Boston-based company’s ActivSight technology allows surgeons to view critical physiological structures and functions, like blood flow, that cannot be seen with the naked eye.

And London-based Proximie will use Holoscan to enable telepresence in the operating room, bringing expert surgeons and AI solutions into each procedure. By integrating this information into surgical imaging systems, the company aims to reduce surgical complication rates, improving patient safety and care.

Telemedicine — Smart Hospital Technology at Home

Another part of smart hospital technology is ensuring patients who don’t need to be admitted to the hospital can receive care from home through wearables, smartphone apps, video appointments, phone calls and text-based messaging tools. Tools like these reduce the burden on healthcare facilities — particularly with the use of AI chatbots that can communicate effectively with patients.

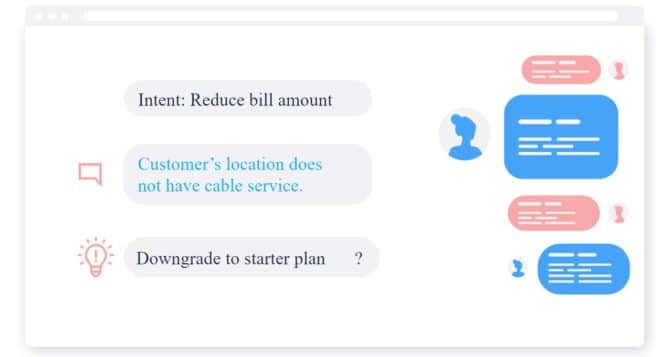

Natural language processing AI is powering intelligent voice assistants and chatbots for telemedicine at companies like Curai, a member of the NVIDIA Inception global network of startups.

Curai is applying GPU-powered AI to connect patients, providers and care teams via a chat-based application. Patients can input information about their conditions, access their medical profiles and chat with providers 24/7. The app also supports providers by offering diagnostic and treatment suggestions based on Curai’s deep learning algorithms.

Curai’s main areas of AI focus have been natural language processing (for extracting data from medical conversations), medical reasoning (for providing diagnosis and treatment recommendations), and image processing and classification (largely for images uploaded by patients).

Virtual care tools like Curai’s can be used for preventive or on-demand care at any time, or after a doctor’s visit to check that a patient is responding well to treatment.

Medical Research Using Smart Hospital Data

The usefulness of smart hospital data doesn’t end when a patient is discharged — it can inform years of research, becoming part of an institution’s database that helps improve operational efficiency, preventative care, drug discovery and more. With collaborative tools like federated learning, the benefits can go beyond a single medical institution and improve research across the healthcare field globally.
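Federated learning can take several forms, and the specific method is not named here. A common aggregation step is federated averaging (FedAvg): each hospital trains on its own records and shares only model parameters, which a coordinator averages, weighting each site by its number of training examples. The sketch below shows only that averaging step, assuming each site’s model is flattened into a list of floats; local training, secure communication and privacy safeguards are out of scope.

```python
from typing import Sequence


def federated_average(site_updates: Sequence[tuple[Sequence[float], int]]) -> list[float]:
    """Weighted average of per-site parameter vectors (the FedAvg aggregation step).

    Each entry is (parameters, num_training_examples). Raw patient data never
    appears here, only the parameters each site chose to share.
    """
    if not site_updates:
        raise ValueError("need at least one site update")
    total = sum(n for _, n in site_updates)
    if total <= 0:
        raise ValueError("total number of training examples must be positive")
    dim = len(site_updates[0][0])
    averaged = [0.0] * dim
    for params, n in site_updates:
        if len(params) != dim:
            raise ValueError("all sites must share the same model shape")
        weight = n / total  # larger sites contribute proportionally more
        for i, p in enumerate(params):
            averaged[i] += weight * p
    return averaged
```

With two hypothetical sites contributing `[1.0, 2.0]` from 100 examples and `[3.0, 4.0]` from 300, the weights are 0.25 and 0.75 and the averaged parameters are `[2.5, 3.5]`.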

Neurosurgical Atlas, the largest association of neurosurgeons in the world, aims to advance the care of patients suffering from neurosurgical disorders through new, efficient surgical techniques. The Atlas includes a library of surgery recordings and simulations that give neurosurgeons unprecedented understanding of potential pitfalls before conducting an operation, creating a new standard for technical excellence. In the future, Neurosurgical Atlas plans to enable digital twin representations specific to individual patients.

The University of Florida’s academic health center, UF Health, has used digital health records representing more than 50 million interactions with 2 million patients to train GatorTron, a model that can help identify patients for lifesaving clinical trials, predict and alert health teams about life-threatening conditions, and provide clinical decision support to doctors.

The electronic medical records were also used to develop SynGatorTron, a language model that can generate synthetic health records to help augment small datasets — or enable AI model sharing while preserving the privacy of real patient data.

In Texas, MD Anderson is harnessing hospital records for population data analysis. Using the NVIDIA NeMo toolkit for natural language processing, the researchers developed a conversational AI platform that performs genomic analysis with cancer omics data — including survival analysis, mutation analysis and sequencing data processing.

Learn more about smart hospital technology and subscribe to NVIDIA healthcare news.
