With tens of millions of weekly transactions across its more than 2,000 stores, Lowe’s helps customers achieve their home-improvement goals. Now, the Fortune 50 retailer is experimenting with high-tech methods to elevate both the associate and customer experience.

Using NVIDIA Omniverse Enterprise to visualize and interact with a store’s digital data, Lowe’s is testing digital twins in Mill Creek, Wash., and Charlotte, N.C. Its ultimate goal is to empower its retail associates to better serve customers, collaborate with one another in new ways and optimize store operations.

“At Lowe’s, we are always looking for ways to reimagine store operations and remove friction for our customers,” said Seemantini Godbole, executive vice president and chief digital and information officer at Lowe’s. “With NVIDIA Omniverse, we’re pulling data together in ways that have never been possible, giving our associates superpowers.”

Augmented Reality Restocking and ‘X-Ray Vision’

With its interactive digital twin, Lowe’s is exploring a variety of novel augmented reality use cases, including reconfiguring layouts, restocking support, real-time collaboration and what it calls “X-ray vision.”

Wearing a Magic Leap 2 AR headset, store associates can interact with the digital twin. This AR experience helps an associate compare what a store shelf should look like with what it actually looks like, and ensure it’s stocked with the right products in the right configurations.
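Comparing what a shelf should look like with what it actually looks like is essentially a planogram compliance check. The sketch below is a minimal illustration under assumed conditions, not Lowe’s implementation. It uses a hypothetical data model in which each shelf slot is keyed by (bay, shelf level, position) and holds one SKU. The `Discrepancy` and `compare_shelf` names are illustrative. In an AR workflow, the "observed" side would come from computer vision rather than a hand-written dictionary.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Hypothetical slot key: (bay, shelf level, position on shelf).
Slot = Tuple[int, int, int]

@dataclass(frozen=True)
class Discrepancy:
    slot: Slot
    expected: Optional[str]  # SKU the planogram calls for (None = slot should be empty)
    observed: Optional[str]  # SKU actually seen on the shelf (None = empty)

def compare_shelf(planogram: Dict[Slot, str],
                  observed: Dict[Slot, Optional[str]]) -> List[Discrepancy]:
    """Return every slot whose observed SKU differs from the planned one."""
    issues = []
    # Check the union of slots so that extra, unplanned items are reported too.
    for slot in sorted(set(planogram) | set(observed)):
        expected = planogram.get(slot)
        seen = observed.get(slot)
        if expected != seen:
            issues.append(Discrepancy(slot, expected, seen))
    return issues

# Illustrative data only; SKUs and slots are made up.
planogram = {(1, 1, 1): "SKU-100", (1, 1, 2): "SKU-200", (1, 2, 1): "SKU-300"}
observed = {(1, 1, 1): "SKU-100", (1, 1, 2): "SKU-300", (1, 2, 1): None}

for issue in compare_shelf(planogram, observed):
    print(issue)
```

In this example, a misplaced product in slot (1, 1, 2) and an empty slot at (1, 2, 1) both surface as discrepancies. These are the kinds of mismatches an AR overlay could highlight. A production system would also need to tolerate detection errors from the vision side.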

And this isn’t just a single-player activity. Store associates on the ground can communicate and collaborate with centralized store planners via AR. For example, if a store associate notices an improvement that could be made to a proposed planogram for their store, they can flag it on the digital twin with an AR “sticky note.”

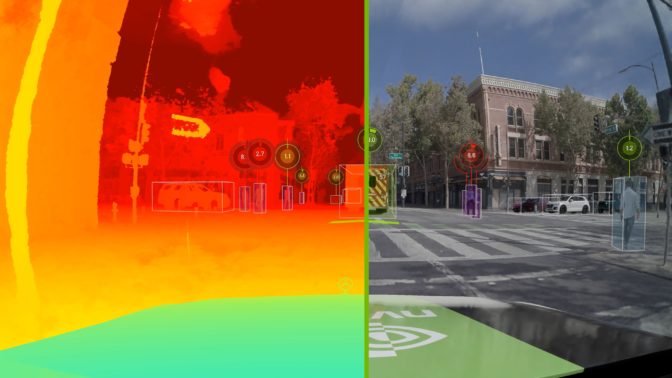

Lastly, a benefit of the digital twin and Magic Leap 2 headset is the ability to explore “X-ray vision.” Traditionally, a store associate might need to climb a ladder to scan or read small labels on cardboard boxes held in a store’s top stock. With an AR headset and the digital twin, the associate could look up at a partially obscured cardboard box from ground level, and, thanks to computer vision and Lowe’s inventory application programming interfaces, “see” what’s inside via an AR overlay.

Store Data Visualization and Simulation

Home-improvement retail is a tactile business. And when making decisions about how to create a new store display, a common way for retailers to see what works is to build a physical prototype, put it out into a brick-and-mortar store and examine how customers react.

With NVIDIA Omniverse and AI, Lowe’s is exploring more efficient ways to approach this process.

Just as e-commerce sites gather analytics to optimize the customer shopping experience online, the digital twin enables new ways of viewing sales performance and customer traffic data to optimize the in-store experience. 3D heatmaps and visual indicators that show the physical distance of items frequently bought together can help associates put these objects near each other. Within a 100,000-square-foot location, for example, minimizing the number of steps needed to pick up an item is critical.

Using historical order and product location data, Lowe’s can also use NVIDIA Omniverse to simulate what might happen when a store is set up differently. Using AI avatars created in Lowe’s Innovation Labs, the retailer can simulate how far customers and associates might need to walk to pick up items that are often bought together.
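The idea of comparing layouts by how far shoppers walk between items that are often bought together can be illustrated with a small sketch. It is not the simulation Lowe’s runs in Omniverse. It assumes hypothetical inputs: each order is a set of SKUs, and each layout maps a SKU to (x, y) floor coordinates in feet. It also uses straight-line distance as a simplified stand-in for real aisle paths. The function names and data are illustrative only.

```python
import math
from collections import Counter
from itertools import combinations
from typing import Dict, Iterable, Set, Tuple

# Hypothetical layout type: SKU -> (x, y) floor position in feet.
Layout = Dict[str, Tuple[float, float]]

def co_purchase_counts(orders: Iterable[Set[str]]) -> Counter:
    """Count how often each unordered pair of SKUs appears in the same order."""
    counts: Counter = Counter()
    for order in orders:
        # Sorting gives each pair one canonical key, e.g. ("paint", "roller").
        for a, b in combinations(sorted(order), 2):
            counts[(a, b)] += 1
    return counts

def weighted_walk_distance(counts: Counter, layout: Layout) -> float:
    """Sum straight-line distances between paired items, weighted by pair frequency."""
    total = 0.0
    for (a, b), n in counts.items():
        (xa, ya), (xb, yb) = layout[a], layout[b]
        total += n * math.hypot(xa - xb, ya - yb)
    return total

# Made-up order history: paint supplies are often bought together.
orders = [{"paint", "roller"}, {"paint", "roller", "tape"}, {"drill", "bits"}]
counts = co_purchase_counts(orders)

# Two candidate layouts: A spreads paint supplies apart, B clusters them.
layout_a = {"paint": (0, 0), "roller": (300, 0), "tape": (300, 400),
            "drill": (0, 100), "bits": (0, 150)}
layout_b = {"paint": (0, 0), "roller": (3, 0), "tape": (3, 4),
            "drill": (0, 100), "bits": (0, 150)}

for name, layout in [("A", layout_a), ("B", layout_b)]:
    print(name, weighted_walk_distance(counts, layout))
```

Layout B scores far lower than layout A because it places frequently co-purchased items close together. Repeating this kind of scoring over many candidate layouts is cheap compared with building each one physically. A realistic simulation would replace straight-line distance with walkable paths and model avatar behavior.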

NVIDIA Omniverse allows for hundreds of simulations to be run in a fraction of the time that it takes to build a physical store display, Godbole said.

Expanding Into the Metaverse

Lowe’s also announced today at NVIDIA GTC that it will soon make more than 600 photorealistic 3D product assets from its home-improvement library free for other Omniverse creators to use in their virtual worlds. All of these products will be available in the Universal Scene Description format on which Omniverse is built, and can be used in any metaverse created by developers using NVIDIA Omniverse Enterprise.

For Lowe’s, the future of home improvement is one in which AI, digital twins and mixed reality play a part in the daily lives of its associates, Godbole said. With NVIDIA Omniverse, the retailer is taking steps to build this future – and there’s a lot more to come as it tests new strategies.

Join a GTC panel discussion on Wednesday, Sept. 21, with Lowe’s Innovation Labs VP Cheryl Friedman and Senior Director of Creative Technology Mason Sheffield, who will discuss how Lowe’s is using AI and NVIDIA Omniverse to make the home-improvement retail experience even better.

Watch the GTC keynote on demand to see all of NVIDIA’s latest announcements, and register free for the conference — running through Thursday, Sept. 22 — to explore how digital twins are transforming industries.

The post Reinventing Retail: Lowe’s Teams With NVIDIA and Magic Leap to Create Interactive Store Digital Twins appeared first on NVIDIA Blog.
