Editor’s note: This post is part of our weekly In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks, and demonstrates how NVIDIA Studio technology accelerates creative workflows.

The gripping sci-fi comic Huxley was brought to life in an action-packed 3D trailer full of excitement and intrigue this week In the NVIDIA Studio.

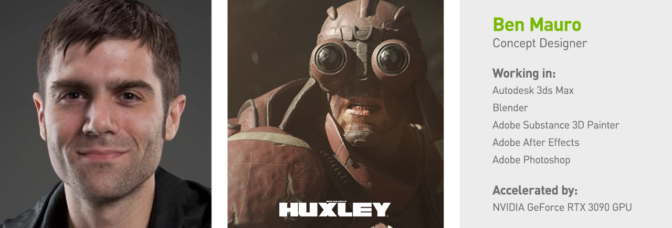

3D artist, concept designer and storyteller Ben Mauro has contributed to some of the world’s biggest entertainment franchises. He’s worked on movies like Elysium, Valerian and Metal Gear Solid, as well as video games such as Halo Infinite and Call of Duty: Black Ops III.

Mauro has met many inspirational artists throughout his storied career, and he collaborated with a few of them to bring Huxley to life. He called the 3D trailer a year’s worth of work that was worth every minute spent, coming after his decade-long process of creating the comic itself.

In Mauro’s fantastical, fictional world, two post-apocalyptic scavengers stumble upon a forgotten treasure map in the form of an ancient sentient robot, finding themselves amidst a mystery of galactic scale.

In designing Huxley the comic, Mauro worked old-school magic with a pad and pencil, sketching characters and environments before importing visuals into Adobe Photoshop. His NVIDIA GeForce RTX 3090 GPU provided fast performance and AI features to speed up his creative workflow.

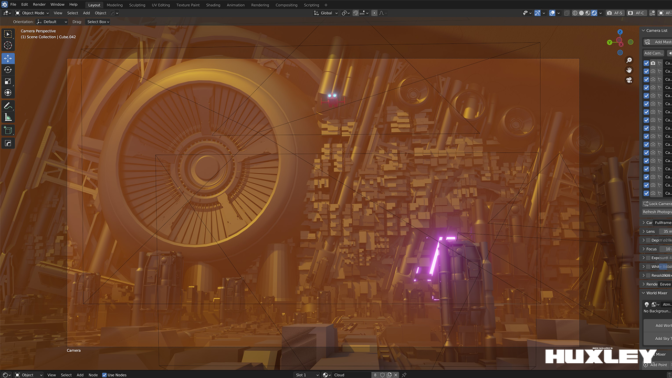

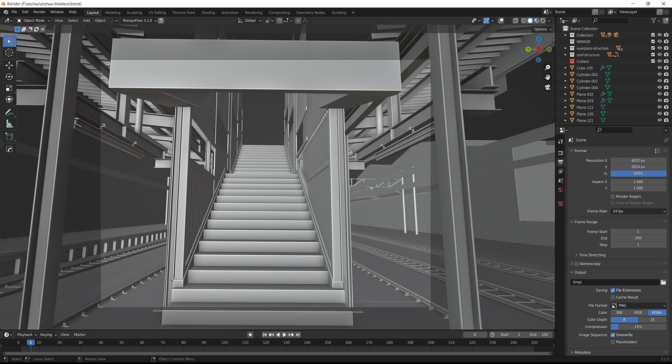

The artist used Photoshop’s “Artboards” to quickly view reference artwork for inspiration, as well as “Image Size” to preserve critical details. Both features are accelerated by his GPU. To finish up the comic, Mauro turned to Blender software to create mockups and block out scenes, with the intention of later converting the 2D artwork back to 3D.

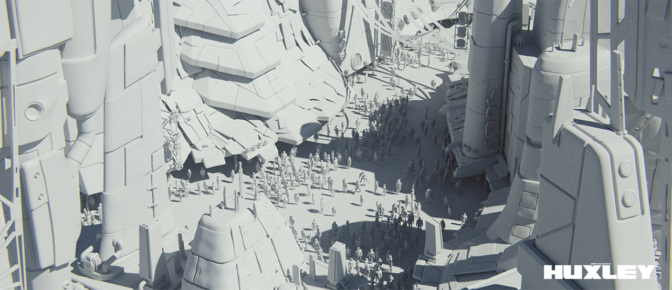

With 3D trailer production in progress, matte painter and environment artist Steve Cormann used Mauro’s Blender models as a convenient starting point, virtually a one-to-one match to the desired 3D outcome.

Cormann, who specializes in Autodesk 3ds Max software, applied advanced modeling techniques in building the scene. 3ds Max has a GPU-accelerated viewport that enables fast and interactive 3D modeling. It also lets artists choose their preferred 3D renderer, which in Cormann’s case is Maxon’s Redshift, where GPU acceleration combined with AI-powered OptiX denoising resulted in lightning-fast final-frame rendering.

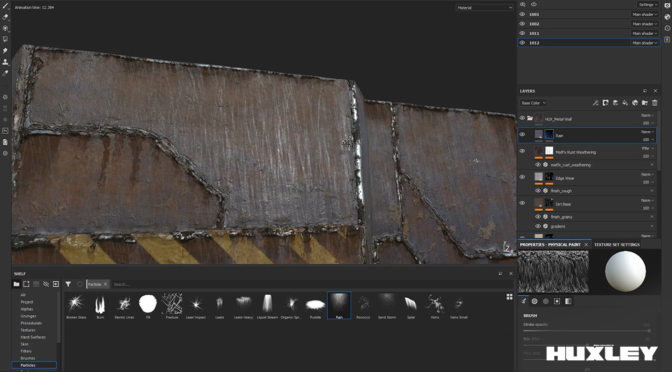

This proved useful as Cormann exported scenes into Adobe Substance 3D Painter to apply various textures and colors. RTX-accelerated light- and ambient-occlusion features baked and optimized assets within the scenes in mere seconds, giving Cormann the option to experiment with different visual aesthetics quickly and easily.

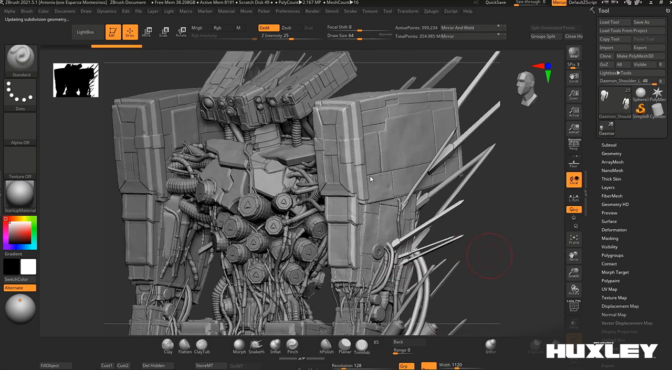

Enter more of Mauro’s collaborators: lead character artist Antonio Esparza and his team, who spent significant time in 3ds Max to refine individual scenes and generate the staggering number of hero characters. This included uniquely texturing each of the characters and props. Esparza said his GeForce RTX 2080 SUPER GPU allowed him to modify characters and export renders dramatically faster than his previous hardware.

Esparza joked that before his hardware upgrade, “Most of the last hours of the day, it was me here, you know, like, waiting.” Director Sava Živković would say to Esparza, “Turn the lights off, Antonio, we don’t want to see that progress bar.”

Meanwhile, Živković turned his focus to lighting in 3ds Max. His trusty GeForce RTX 2080 Ti GPU enabled RTX-accelerated AI denoising with Maxon’s Redshift, resulting in photorealistic visuals while remaining highly interactive. This let the director tweak and modify scenes freely and easily.

With renders and textures in a good place, rigging and modeling artist Lucas Salmon began building meshes and rigging in 3ds Max to prepare for animation. Motion capture work was then outsourced to the well-regarded Belgrade-based studio, Take One. With 54 Vicon cameras and one of the biggest capture stages in Europe, it’s no surprise the animation quality in Huxley is world class.

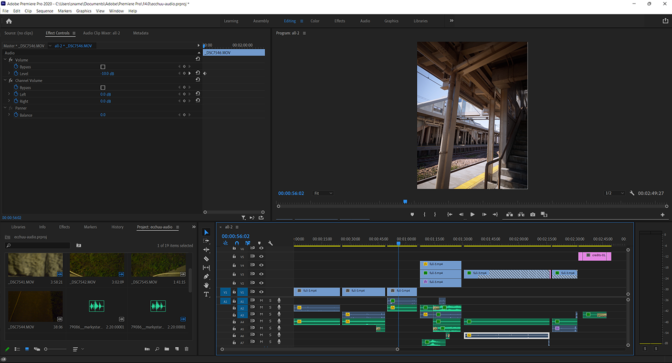

Živković then deployed Adobe After Effects to composite the piece. Roughly 90% of the visual effects (VFX) were accomplished with built-in tools, stock footage and various plugins. Key 3D VFX such as ship smoke trails were simulated in Blender and then added in comp. The ability to move between multiple apps quickly is a testament to the power of the RTX GPU, Živković said.

“I love the RTX 3090 GPU for the extra VRAM, especially for increasingly bigger scenes where I want everything to look really nice and have quality texture sizes,” he said.

Satisfied with the trailer, Mauro reflected on artistry. “As creatives, if we don’t see the film, game, or universe we want to experience in our entertainment, we’re in the position to create it with our hard-earned skills. I feel this is our duty as artists and creators to leave behind more imagined worlds than existed before we got there, to inspire the world and the next generation of artists/creators to push things even further than we did,” he said.

Access Mauro’s impressive portfolio on his website.

Huxley is an entire world rich in history and intrigue, currently being developed into a feature film and TV series.

Onwards and Upwards

Many of the techniques Mauro deployed can be learned by viewing free Studio Session tutorials on the NVIDIA Studio YouTube channel.

Learn core foundational warm-up exercises to inspire and ignite creative thinking, discover how to design sci-fi objects such as props, and transform 2D sketches into 3D models.

Also, in the spirit of learning, the NVIDIA Studio team has posed a challenge for the community to show off personal growth. Participate in the #CreatorsJourney challenge for a chance to be showcased on NVIDIA Studio social media channels.

It’s time to show how you’ve grown as an artist (just like @lowpolycurls)!

Join our #CreatorJourney challenge by sharing something old you created next to something new you’ve made for a chance to be featured on our channels.

Tag #CreatorJourney so we can see your post.

pic.twitter.com/PmkgOvhcBW

— NVIDIA Studio (@NVIDIAStudio) August 15, 2022

Entering is easy. Post an older piece of artwork alongside a more recent one to showcase your growth as an artist. Follow and tag NVIDIA Studio on Instagram, Twitter or Facebook, and use the #CreatorsJourney tag to join.

Get creativity-inspiring updates directly to your inbox by subscribing to the NVIDIA Studio newsletter.

The post Concept Designer Ben Mauro Delivers Epic 3D Trailer ‘Huxley’ This Week ‘In the NVIDIA Studio’ appeared first on NVIDIA Blog.
