Want inspiration? Try being charged by a two-ton African black rhino.

Early in her career, wildlife biologist Zoe Jewell and her team came across a mother rhino and her calf and carefully moved closer to get a better look.

The protective mother rhino charged, chasing Jewell across the dusty savannah. Eventually, Jewell got a flimsy thorn bush between herself and the rhino. Her heart was racing.

“I thought to myself, ‘There has to be a better way,’” she said.

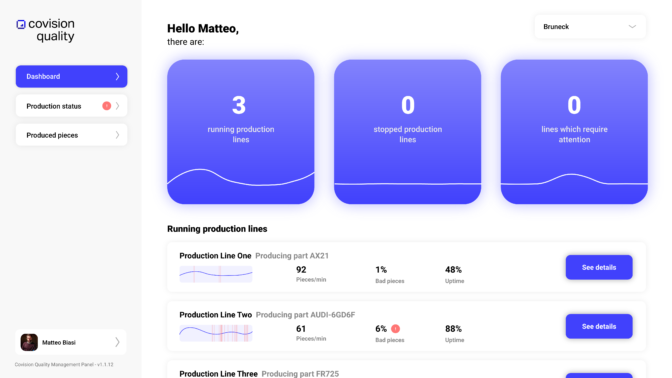

In the latest example of how researchers like Jewell are using new technologies to track animals less invasively, a team of researchers has proposed using high-flying, AI-equipped drones powered by the NVIDIA Jetson edge AI platform to track the endangered black rhino through the wilds of Namibia.

In a paper published this month in the journal PeerJ, the researchers show the potential of drone-based AI to identify animals in even the remotest areas and provide real-time updates on their status from the air.

For more, read the full paper at https://peerj.com/articles/13779/.

While drones — and technology of just about every kind — have been harnessed to track African wildlife, the proposal promises to help gamekeepers move faster to protect rhinos and other megafauna from poachers.

“We have to be able to stay one step ahead,” said Jewell, co-founder of WildTrack, a global network of biologists and conservationists dedicated to non-invasive wildlife monitoring techniques.

Jewell, president and co-founder of WildTrack, has a B.Sc. in Zoology/Physiology, an M.Sc. in Medical Parasitology from the London School of Hygiene and Tropical Medicine and a veterinary medical degree from Cambridge University. She has long sought less invasive ways to track and protect endangered species, such as the African black rhino.

In addition to Jewell, the paper’s authors include conservation biology and data science specialists at UC Berkeley, the University of Göttingen in Germany, Namibia’s Kuzikus Wildlife Reserve and Duke University.

The stakes are high.

African megafauna have become icons, even as global biodiversity declines.

“Only 5,500 black rhinos stand between this magnificent species, which preceded humans on earth by millions of years, and extinction,” Jewell says.

That’s made them bigger targets for poachers, who sell rhino horns and elephant tusks for huge sums, the paper’s authors report. Rhino horns, for example, reportedly go for as much as $65,000 per kilogram.

To disrupt poaching, wildlife managers must deploy effective protection measures.

This, in turn, depends on getting reliable data fast.

The challenge: many current monitoring technologies are invasive, expensive or impractical.

Satellite monitoring is a potential tool for the biggest animals — such as elephants. But detecting smaller species requires higher resolution imaging.

And the traditional practice of capturing rhinos, attaching a radio collar to the animals and then releasing them can be stressful for humans and rhinos.

It’s even been found to depress the fertility of captured rhinos.

High-flying drones are already being used to study wildlife unobtrusively.

But rhinos most often live in areas with poor wireless networks, so drones can’t stream images back in real time.

As a result, images have to be downloaded once the drones return to the researchers, who then have to comb through them to identify the animals.

Identifying rhinos instantly onboard a drone and alerting authorities before it lands would ensure a speedy response to poachers.

“You can get a notification out and deploy units to where those animals are straight away,” Jewell said. “You could even protect these animals at night using heat signatures.”

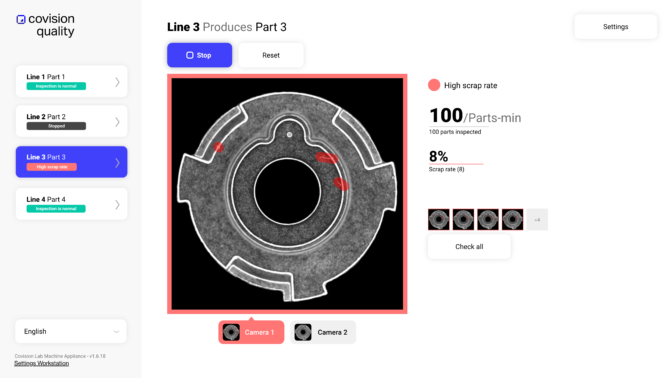

To do this, the paper’s authors propose using an NVIDIA Jetson Xavier NX module onboard a Parrot Anafi drone.

The drone can connect to the relatively poor-quality wireless networks available in areas where rhinos live and deliver notifications whenever the target species are spotted.
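
One way to picture why on-board detection suits a weak network: a text-only alert is tiny compared with a video stream. The sketch below is an illustration under stated assumptions, not the paper's actual message format. The `SightingAlert` fields, the `should_notify` threshold and the coordinates are all hypothetical.

```python
import json
from dataclasses import dataclass, asdict
from typing import AbstractSet


@dataclass
class SightingAlert:
    """Compact, text-only record of one sighting (all fields are hypothetical)."""
    species: str
    confidence: float
    latitude: float
    longitude: float
    timestamp_utc: str


def should_notify(species: str, confidence: float,
                  targets: AbstractSet[str], min_confidence: float = 0.5) -> bool:
    """Notify only for target species detected at or above the threshold."""
    return species in targets and confidence >= min_confidence


def encode_alert(alert: SightingAlert) -> bytes:
    """Serialize the alert as compact JSON so it fits in a small message."""
    return json.dumps(asdict(alert), separators=(",", ":")).encode("utf-8")


# Placeholder values for illustration only, not real sighting data.
alert = SightingAlert("black_rhino", 0.9, -23.0, 18.0, "2022-07-01T06:00:00Z")
if should_notify(alert.species, alert.confidence, {"black_rhino"}):
    payload = encode_alert(alert)
    print(len(payload), "bytes:", payload.decode("utf-8"))
```

A message like this carries only the species, a confidence score, a position and a time, so it needs far less bandwidth than sending the frames themselves.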

To build the drone’s AI, the researchers used a YOLOv5l6 object-detection architecture. They trained it to identify a bounding box for one of five objects of interest in a video frame.
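
To make "a bounding box for an object of interest" concrete, here is a minimal sketch of how one frame's detections might be handled once the model has run. It assumes each detection arrives as a row of `(x1, y1, x2, y2, confidence, class_id)`, a common convention for YOLO-style detectors. The label list is hypothetical: the article names only black rhinos and giraffes, so the other three entries are placeholders, and the sample rows are made up.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Hypothetical label list: only the first two names come from the article;
# the rest are placeholders for the other objects of interest.
CLASS_NAMES = ["black_rhino", "giraffe", "object_3", "object_4", "object_5"]


@dataclass
class Detection:
    label: str
    confidence: float
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

    def center(self) -> Tuple[float, float]:
        """Midpoint of the bounding box, in pixel coordinates."""
        x1, y1, x2, y2 = self.box
        return ((x1 + x2) / 2, (y1 + y2) / 2)


def parse_detections(rows: Sequence[Sequence[float]],
                     min_confidence: float = 0.5) -> List[Detection]:
    """Turn raw (x1, y1, x2, y2, confidence, class_id) rows into Detections."""
    detections = []
    for x1, y1, x2, y2, conf, class_id in rows:
        if conf < min_confidence:
            continue  # drop low-confidence boxes
        label = CLASS_NAMES[int(class_id)]
        detections.append(Detection(label, conf, (x1, y1, x2, y2)))
    return detections


# Made-up rows for a single video frame, for illustration only.
frame_rows = [
    (100.0, 200.0, 180.0, 260.0, 0.91, 0),
    (400.0, 120.0, 430.0, 220.0, 0.35, 1),
]
for det in parse_detections(frame_rows):
    print(det.label, det.confidence, det.center())
```

The confidence threshold is a design choice: raising it cuts false alarms at the cost of missing faint or distant animals.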

Most of the images used for training were gathered in Namibia’s Kuzikus Wildlife Reserve, an area of roughly 100 square kilometers on the edge of the Kalahari desert.

With tourists gone, Jewell reports that her colleagues in Namibia had plenty of time to gather training images for the AI.

The researchers used several techniques to optimize performance and overcome the challenge of small animals in the data.

These techniques included adding images of other species to the AI’s training data, emulating field conditions with many animals.

They used data augmentation techniques, such as generative adversarial networks, to train the AI on synthetic data, the paper’s authors wrote.

And they also trained the model on a dataset with many kinds of terrain and images taken from different angles and lighting conditions.
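
For readers unfamiliar with data augmentation, the toy sketch below shows the general idea of varying angle and lighting in training images. It is not the GAN-based method the paper describes. It swaps in two much simpler transforms, a horizontal flip and a brightness change, on a tiny grayscale image stored as nested lists. The function names and the sample image are assumptions for illustration.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale pixels, 0-255, stored row by row
Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), with x2 and y2 exclusive


def flip_horizontal(image: Image, box: Box) -> Tuple[Image, Box]:
    """Mirror the image left to right and move the bounding box to match."""
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    x1, y1, x2, y2 = box
    return flipped, (width - x2, y1, width - x1, y2)


def scale_brightness(image: Image, factor: float) -> Image:
    """Brighten or darken the image, clamping values to the 0-255 range."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in image]


# Made-up 2x4 image with a bright "animal" in column 2.
image = [[0, 10, 200, 0],
         [0, 20, 210, 0]]
box = (2, 0, 3, 2)

flipped, flipped_box = flip_horizontal(image, box)
darker = scale_brightness(image, 0.5)
print(flipped, flipped_box)
print(darker)
```

The bounding box has to be transformed along with the pixels. Otherwise the label would point at empty ground in the flipped image.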

Looking at footage of rhinos gathered in the wild, the AI correctly identified black rhinos — the study’s primary target — 81 percent of the time and giraffes 83 percent of the time, they reported.

The next step: putting this system to work in the wild, where wildlife conservationists are already deploying everything from cameras to radio collars to track rhinos.

Many of the techniques combine the latest technology with ancient practices.

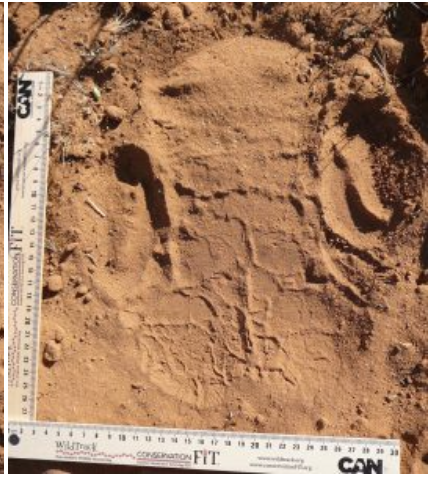

Jewell and WildTrack co-founder Sky Alibhai have already created a system, FIT, that uses sophisticated new techniques to analyze animal tracks (see image of a rhino track, left). The software, initially developed using morphometrics — or the quantitative analysis of an animal’s form — on JMP statistical analysis software, now uses the latest AI techniques.

Jewell says that modern science and the ancient art of tracking are much more alike than you might think.

“When you follow a footprint, you’re really recreating the origins of science that shaped humanity,” Jewell said. “You’re deciding who made that footprint, and you’re following a trail to see if you’re correct.”

Jewell and her colleagues are now working to take their work another step forward, to use drones to identify rhino trails in the environment.

“Without even seeing them on the ground we’ll be able to create a map of where they’re going and interacting with each other to help us understand how to best protect them,” Jewell says.

All images courtesy of WildTrack.

The post An AI-Enabled Drone Could Soon Become Every Rhino Poacher’s… Horn Enemy appeared first on NVIDIA Blog.
