Editor’s note: This post is part of our weekly In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks, and demonstrates how NVIDIA Studio technology accelerates creative workflows.

Many artists can edit a video, paint a picture or build a model — but transforming one’s imagination into stunning creations can now involve breakthrough design technologies.

Kate Parsons, a digital art professor at Pepperdine University and this week’s featured In the NVIDIA Studio artist, helped bring a music video for How Do I Get to Invincible to life using virtual reality and NVIDIA GeForce RTX GPUs.

The project, a part of electronic music trio The Glitch Mob’s visual album See Without Eyes, quickly and seamlessly moved from 3D to VR, thanks to NVIDIA GPU acceleration.

We All FLOAT On

Parsons has blended her passions for art and technology as co-founder of FLOAT LAND, a pioneering studio that innovates across digital media, including video, as well as virtual and augmented reality.

She and co-founder Ben Vance collaborate on projects at the intersection of art and interactivity. They design engaging animations, VR art exhibits and futuristic interactive AR displays.

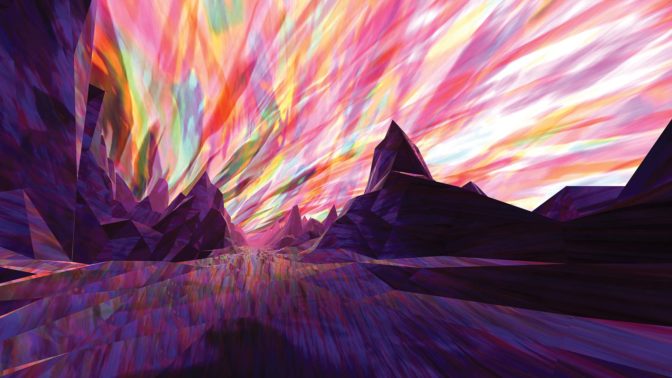

When The Glitch Mob set out to turn See Without Eyes into a state-of-the-art visual album, the group tapped long-term collaborators Strangeloop Studios, who in turn reached out to FLOAT LAND to create art for the song How Do I Get to Invincible. Parsons and her team brought a dreamlike feel to the project.

Working with the team at Strangeloop Studios, FLOAT LAND created the otherworldly landscapes for How Do I Get to Invincible, harnessing the power of NVIDIA RTX GPUs.

“We have a long history of using NVIDIA GPUs due to our early work in the VR space,” Parsons said. “The early days of VR were a bit like the Wild West, and it was really important for us to have reliable systems — we consider NVIDIA GPUs to be a key part of our rigs.”

Where Dreams Become (Virtual) Reality

The FLOAT LAND team used several creative applications for the visual album. They began by researching real-time visual effects techniques that would work within Unity. This included using custom shaders inspired by the Shadertoy computer graphics tool and exploring different looks to create a surreal, dark and moody atmosphere.

Then, the artists built test terrains using Cinema 4D, a professional 3D animation, simulation and rendering solution, and Unity, a leading platform for creating and operating interactive, real-time 3D content. They used these terrains to explore post-effects like tilt shift, ambient occlusion and chromatic aberration. They also used the Unity plugin Fog Volume 3 to create rich, dynamic clouds and quickly explore many options.

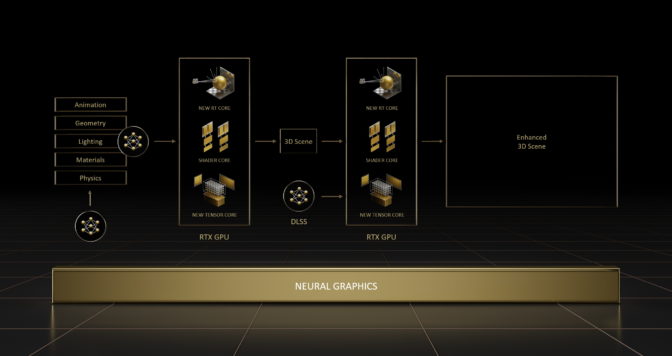

Using NVIDIA RTX GPUs in Unity accelerated the work of Parsons’s team through advanced shading techniques. Plus, NVIDIA DLSS increased the interactivity of the viewport.

“Unity was central to our production process, and we iterated both in editor and in real time to get the look we wanted,” Parsons said. “Some of the effects really pushed the limits of our GPUs. It wouldn’t have been possible to work in real time without GPU acceleration – we would’ve had to render out clips, which takes anywhere from 10 to thousands of times longer.”

And like all great projects, even once it was done, the visual album wasn’t done, Parsons said. In collaboration with virtual entertainment company Wave, FLOAT LAND’s work for the visual album was used to turn the entire piece into a VR experience. Building on the Unity and GPU-native groundwork greatly accelerated this process, Parsons added.

The Glitch Mob called it “a completely new way to experience music.”

Best in Class

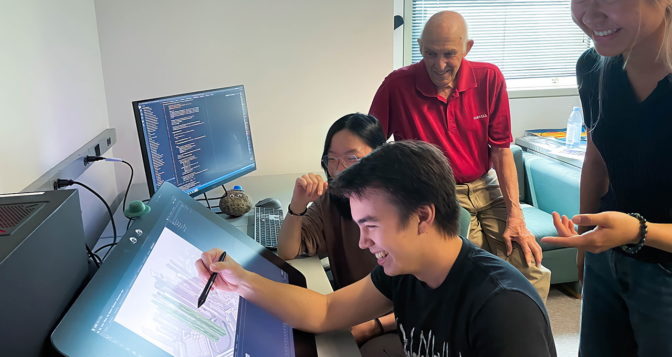

When she isn’t making her own breathtaking creations, Parsons helps her students grow as creators. She teaches basic and advanced digital art at Pepperdine — including how to use emerging technologies to transform creative workflows.

“Many of my students get really obsessed with learning certain kinds of software — as if learning the software will automatically bypass the need to think creatively,” she said. “In this sense, software is just a tool.”

Parsons advises her students to try a bit of everything and see what sticks. “If there’s something you want to learn, spend about three weeks with it and see if it’s a tool that will be useful for you,” she said.

While many of her projects dip into new, immersive fields like AR and VR, Parsons highlighted the importance of understanding the fundamentals, like workflows in Adobe Photoshop and Illustrator. “Students should learn the difference between bitmap and vector images early on,” she said.

Parsons works across multiple systems powered by NVIDIA GPUs — a Dell Alienware PC with a GeForce RTX 2070 GPU in her classroom; a custom PC with a GeForce RTX 2080 in her home office; and a Razer Blade 15 with a GeForce RTX 3070 Laptop GPU for projects on the go. When students ask which laptop they should use for their creative education, Parsons points them to NVIDIA Studio-validated PCs.

Creatives going back to school can start off on the right foot with an NVIDIA Studio-validated laptop. Whether for 3D modeling, VR, video and photo editing or any other creative endeavor, a powerful laptop is ready to be the backbone of creativity. Explore these task-specific recommendations for NVIDIA Studio laptops.

#CreatorJourney Challenge

In the spirit of learning, the NVIDIA Studio team is posing a challenge for the community to show off personal growth. Participate in the #CreatorJourney challenge for a chance to be showcased on NVIDIA Studio social media channels.

Entering is easy. Post an older piece of artwork alongside a more recent one to showcase your growth as an artist. Follow and tag NVIDIA Studio on Instagram, Twitter or Facebook, and use the #CreatorJourney tag to join.

It’s time to show how you’ve grown as an artist (just like @lowpolycurls)!

Join our #CreatorJourney challenge by sharing something old you created next to something new you’ve made for a chance to be featured on our channels.

Tag #CreatorJourney so we can see your post.

pic.twitter.com/PmkgOvhcBW

— NVIDIA Studio (@NVIDIACreators) August 15, 2022

Learn something new today: Access tutorials on the Studio YouTube channel and get creativity-inspiring updates directly to your inbox by subscribing to the NVIDIA Studio newsletter.

The post Digital Art Professor Kate Parsons Inspires Next Generation of Creators This Week ‘In the NVIDIA Studio’ appeared first on NVIDIA Blog.
